
[Point Cloud Series] Multi-view Neural Human Rendering (NHR)

2022-04-23 13:18:00 【^_^ Min Fei】


1. Summary

Work from Jingyi Yu's team, CVPR 2020; part of the neural rendering series.

Paper: https://openaccess.thecvf.com/content_CVPR_2020/papers/Wu_Multi-View_Neural_Human_Rendering_CVPR_2020_paper.pdf

Project page: https://wuminye.github.io/NHR/

Datasets: https://wuminye.github.io/NHR/datasets.html

2. Motivation

NHR is an end-to-end framework designed specifically for rendering humans: PointNet++ extracts 3D features from the point cloud, the features are projected to 2D, and a CNN then handles the noise and deformed geometry. In essence, it is a rendering method guided by a point cloud.

3. Method

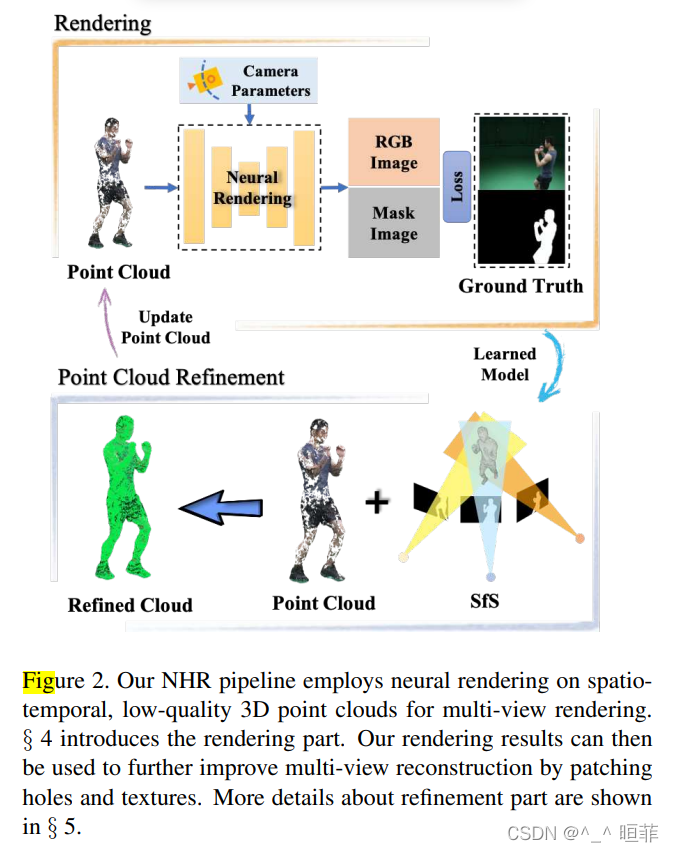

Flow chart

Overall framework

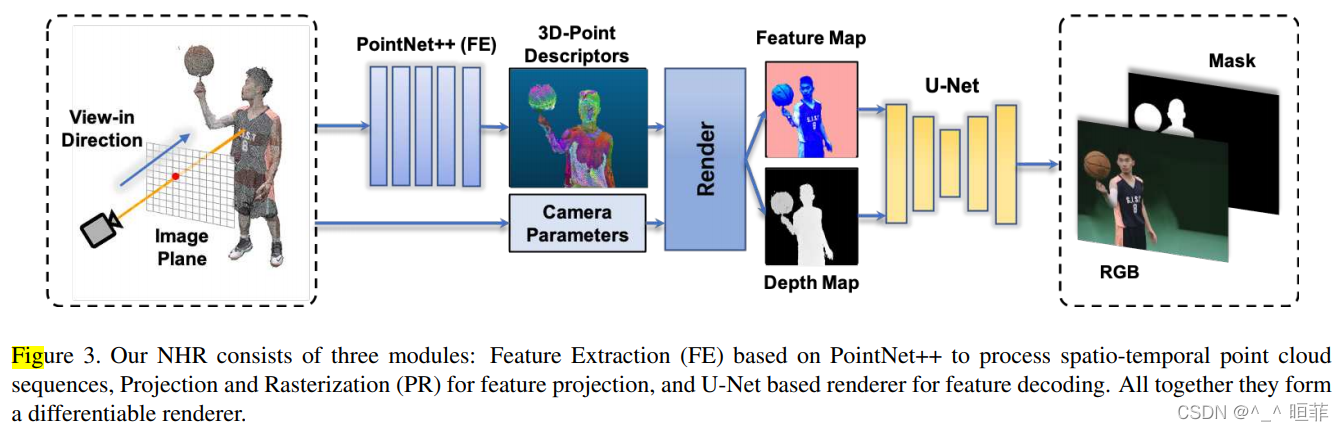

The framework consists of three modules:

- Feature extraction (FE)

- Projection and rasterization (PR)

- Rendering (RE)

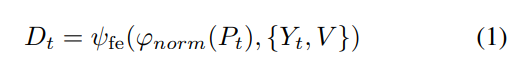

Module 1: Feature extraction (FE)

$\varPsi_{fe}$: the PointNet++ feature extraction operation. The classification branch is removed and only the segmentation branch is kept as the FE branch.

$D_t$: feature descriptors of the point cloud.

$V = \{v_i\}$: normalized view directions, $v_i = \frac{p^i_t - o}{\|p^i_t - o\|_2}$, where $o$ is the center of projection of the target-view camera.

$\{\cdot\}$: concatenation. Here the point colors are concatenated with the normalized view directions, and the concatenated features are used as the initial point attributes for feature extraction.

$\varphi_{norm}$: normalization of the point coordinates.
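The view-direction and concatenation steps above can be sketched in a few lines of NumPy. This is only an illustration: the function names and the toy points are assumptions, and the PointNet++ network itself is not reproduced here.

```python
import numpy as np

def view_directions(points, camera_center):
    """Unit vectors v_i from the camera center o to each point p_t^i."""
    diff = points - camera_center                       # (N, 3)
    norms = np.linalg.norm(diff, axis=1, keepdims=True)  # L2 norm per point
    return diff / norms

def initial_point_attributes(colors, points, camera_center):
    """Concatenate per-point RGB with the normalized view direction."""
    dirs = view_directions(points, camera_center)
    return np.concatenate([colors, dirs], axis=1)       # (N, 6)

# Toy data (hypothetical): two colored points seen from the origin.
points = np.array([[0.0, 0.0, 2.0], [3.0, 0.0, 4.0]])
colors = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
attrs = initial_point_attributes(colors, points, np.zeros(3))
print(attrs.shape)
```

Each row of `attrs` holds three color values followed by a unit-length direction, which is the kind of per-point attribute the text describes as the input to feature extraction.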

Module 2: Projection and rasterization (PR)

$S$: the 2D feature map after projection, where $S_{x,y} = d^i_t$ and $d^i_t$ is the feature descriptor of the $i$-th point.

$E$: depth map of the current view.

Target camera parameters: $\hat{K}$, $\hat{T}$

Learnable parameters: $\theta_d$

$\psi_{pr}$: the whole projection and rasterization process.
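A minimal sketch of projection and rasterization with a z-buffer is given below, assuming a pinhole camera with intrinsics `K` and world-to-camera extrinsics `T = [R | t]`. The function name, the one-pixel splat, and the zero fill for empty pixels are simplifying assumptions; in particular, how the learnable $\theta_d$ is used is not covered by this sketch.

```python
import numpy as np

def project_and_rasterize(points, feats, K, T, height, width):
    """Scatter per-point features into a feature map S and a depth map E.

    points: (N, 3) world coordinates; feats: (N, C) descriptors d_t^i.
    K: (3, 3) intrinsics; T: (3, 4) world-to-camera extrinsics [R | t].
    """
    cam = points @ T[:, :3].T + T[:, 3]        # world -> camera coordinates
    S = np.zeros((height, width, feats.shape[1]))
    E = np.full((height, width), np.inf)       # inf marks empty pixels
    for i in np.flatnonzero(cam[:, 2] > 0):    # only points in front of the camera
        u, v, z = K @ cam[i]
        x, y = int(round(u / z)), int(round(v / z))
        if 0 <= x < width and 0 <= y < height and z < E[y, x]:
            E[y, x] = z                        # z-buffer: the nearest point wins
            S[y, x] = feats[i]
    return S, E

# Toy camera at the origin looking down +z (hypothetical values).
K = np.array([[100.0, 0.0, 32.0], [0.0, 100.0, 24.0], [0.0, 0.0, 1.0]])
T = np.hstack([np.eye(3), np.zeros((3, 1))])
pts = np.array([[0.0, 0.0, 2.0], [0.0, 0.0, 1.0], [0.1, 0.0, 1.0]])
feats = np.array([[1.0], [2.0], [3.0]])
S, E = project_and_rasterize(pts, feats, K, T, height=48, width=64)
```

The first two toy points fall on the same pixel, so the closer one (depth 1) overwrites the farther one; this is the occlusion handling that the depth map $E$ makes possible.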

Module 3: Rendering (RE)

$\psi_{render}$: a modified U-Net with 4 output channels. The first three channels are the RGB image $I^*$; the last channel is the mask $M^*$, obtained with a sigmoid.
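How the 4-channel output is split can be illustrated as follows. The channel-first layout and the function name are assumptions made for this sketch; the U-Net itself is not shown.

```python
import numpy as np

def split_render_output(raw):
    """Split a (4, H, W) network output into an RGB image and a soft mask.

    The first three channels are returned unchanged as the image; the last
    channel goes through a sigmoid so the mask values lie in (0, 1).
    """
    rgb = raw[:3]
    mask = 1.0 / (1.0 + np.exp(-raw[3]))
    return rgb, mask

raw = np.zeros((4, 2, 2))        # hypothetical raw network output
rgb, mask = split_render_output(raw)
```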

Training loss

L1 loss + perceptual loss

$n_b$: batch size

$I^*_i$, $M^*_i$: the $i$-th rendered output image and mask.

$\psi_{vgg}$: extracts features from the 2nd and 4th layers of VGG-19.
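The structure of such a loss can be sketched as below. Several things here are assumptions rather than the paper's definition: the feature functions are simple subsampling stand-ins for the VGG-19 layers, the perceptual term uses a mean squared error on the image only, and both terms are weighted equally.

```python
import numpy as np

def l1(a, b):
    """Mean absolute difference between two arrays."""
    return float(np.abs(a - b).mean())

def training_loss(I_pred, M_pred, I_gt, M_gt, feature_fns):
    """Average over the batch of an L1 term plus a perceptual term."""
    n_b = len(I_pred)
    total = 0.0
    for i in range(n_b):
        # L1 term on the rendered image and on the mask.
        total += l1(I_pred[i], I_gt[i]) + l1(M_pred[i], M_gt[i])
        # Perceptual term: compare features instead of raw pixels.
        for f in feature_fns:
            total += float(((f(I_pred[i]) - f(I_gt[i])) ** 2).mean())
    return total / n_b

def pool(stride):
    """Hypothetical stand-in for a VGG layer: spatial subsampling."""
    return lambda img: img[..., ::stride, ::stride]

I_gt = np.ones((2, 3, 8, 8))     # batch of two RGB images
M_gt = np.ones((2, 8, 8))        # batch of two masks
loss = training_loss(np.zeros_like(I_gt), M_gt, I_gt, M_gt, [pool(2), pool(4)])
```

In a real training setup the stand-ins would be replaced by activations of a pretrained VGG-19, and the whole expression would be written in an autodiff framework so that gradients can flow back to the networks.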

Geometry refinement

To refine the geometry, a dense set of new views is rendered, and the generated masks are used as silhouettes for space carving or shape-from-silhouette reconstruction.

This is needed because the multi-view stereo input (in practice, a coarse point cloud) may contain holes or occluded regions.

Mask and shape generation: the trained rendering model produces a matte analogous to the RGB image. Masks are then rendered at a uniformly sampled set of new viewpoints, each with its corresponding camera parameters, at a resolution of 800x600. Shape-from-silhouette (SfS) can then be used to reconstruct the human mesh.
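The idea behind shape-from-silhouette can be sketched as voxel carving: a candidate voxel survives only if it projects inside the mask in every view. The sketch below assumes boolean masks and 3x4 projection matrices `P = K [R | t]`; the function name and toy camera are hypothetical, and mesh extraction from the surviving voxels is left out.

```python
import numpy as np

def carve(voxels, masks, projections):
    """Keep the voxels whose projection lands inside every silhouette mask.

    voxels: (N, 3) candidate centers; masks: list of (H, W) boolean arrays;
    projections: list of (3, 4) camera matrices.
    """
    n = len(voxels)
    keep = np.ones(n, dtype=bool)
    homog = np.hstack([voxels, np.ones((n, 1))])   # homogeneous coordinates
    for mask, P in zip(masks, projections):
        uvw = homog @ P.T
        z = uvw[:, 2]
        front = z > 0                              # ignore voxels behind the camera
        x = np.zeros(n, dtype=int)
        y = np.zeros(n, dtype=int)
        x[front] = np.round(uvw[front, 0] / z[front]).astype(int)
        y[front] = np.round(uvw[front, 1] / z[front]).astype(int)
        h, w = mask.shape
        inside = front & (x >= 0) & (x < w) & (y >= 0) & (y < h)
        hit = np.zeros(n, dtype=bool)
        hit[inside] = mask[y[inside], x[inside]]
        keep &= hit                                # carve away anything outside
    return voxels[keep]

# One toy view: an 8x8 mask whose central 4x4 block is foreground.
mask = np.zeros((8, 8), dtype=bool)
mask[2:6, 2:6] = True
P = np.array([[10.0, 0.0, 4.0, 0.0], [0.0, 10.0, 4.0, 0.0], [0.0, 0.0, 1.0, 0.0]])
voxels = np.array([[0.0, 0.0, 1.0], [0.3, 0.0, 1.0], [0.0, 0.0, -1.0]])
kept = carve(voxels, [mask], [P])
```

With many uniformly sampled views, the surviving voxels approximate the visual hull of the person, which is why a dense set of rendered masks helps.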

Point sampling and coloring: the color of each sampled point can be computed from the MVS point cloud using the nearest neighbor.
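Nearest-neighbor coloring can be sketched with a brute-force distance matrix. The function name and toy data are assumptions, and a spatial index such as a k-d tree would be the usual choice for large point clouds.

```python
import numpy as np

def transfer_colors(sampled, mvs_points, mvs_colors):
    """Give each sampled point the color of its nearest MVS point.

    Returns the colors and the Euclidean distance to that nearest point.
    """
    diff = sampled[:, None, :] - mvs_points[None, :, :]   # (S, M, 3)
    d2 = (diff ** 2).sum(axis=2)                          # squared distances
    nearest = d2.argmin(axis=1)
    return mvs_colors[nearest], np.sqrt(d2.min(axis=1))

mvs_points = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
mvs_colors = np.array([[255, 0, 0], [0, 0, 255]])
sampled = np.array([[0.1, 0.0, 0.0], [0.9, 0.0, 0.0], [4.0, 0.0, 0.0]])
colors, dist = transfer_colors(sampled, mvs_points, mvs_colors)
```

The returned distances are also informative on their own: a sampled point that lies far from every MVS point, like the third toy point, is likely to sit in a region the MVS point cloud did not cover.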

Hole patching:

Patching mechanism: for each point $u^i_t \in U_t$, the Euclidean distance $\phi(u^i_t, p^i_t)$ to the point $p^i_t$ in $P_t$ is larger than for the points in $\hat{P}_t - U_t$. A threshold $\tau_1$ is therefore set as in formula (5); in the experiments it is set to 0.2.

Next, for the points of $\hat{P}_t$, count the number of points $p^j_t$ whose Euclidean distance is below the threshold, denoted $s^i_t = \#\{b^i_t \mid b^i_t < \tau_1\}$.

Then a histogram of all $s^i_t$ is computed with 15 bins, obtained by dividing the range up to the maximum distance value into 15 equal parts. It is observed that the first bin contains significantly more points than the second, so the maximum distance of the first bin is used as the second threshold $\tau_2$ to select the final points.
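The binning step can be sketched with `np.histogram`. The text above is ambiguous about exactly which quantity is histogrammed, so the sketch takes an arbitrary 1-D array of non-negative values; the function name and toy values are assumptions, and the final selection rule is not reproduced.

```python
import numpy as np

def first_bin_threshold(values, n_bins=15):
    """Split [0, max(values)] into equal-width bins and return the bin
    counts together with the upper edge of the first bin."""
    counts, edges = np.histogram(values, bins=n_bins,
                                 range=(0.0, float(values.max())))
    return counts, edges[1]

values = np.array([0.1, 0.2, 0.3, 0.5, 15.0])   # hypothetical measurements
counts, tau2 = first_bin_threshold(values)
```

For these toy values each bin has width 1, so the first bin holds the four small values and its upper edge is the candidate second threshold.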

The figure below shows that filling the holes reduces flicker when the viewpoint changes.

Note: the final point set will still contain artifacts, because its quality depends on the threshold $\tau$.

The figure gives a more intuitive view of how the threshold is set and how the distance is measured.

4. Experiments

Datasets

Five sequences were captured with a multi-camera system of about 80 cameras, at 25 frames per second. Each sequence is 8 to 24 seconds long. The performers wear different clothes and perform different actions.

Each sequence includes RGB images, foreground masks, an RGB point cloud sequence, and the camera calibration.

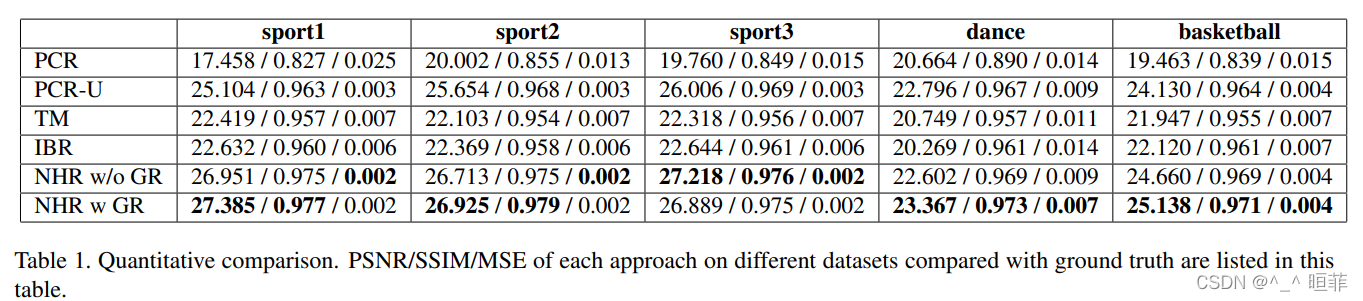

Experimental results:

The experiments show that the method takes full advantage of combining point clouds with images.

Comparison of results on the 5 datasets:

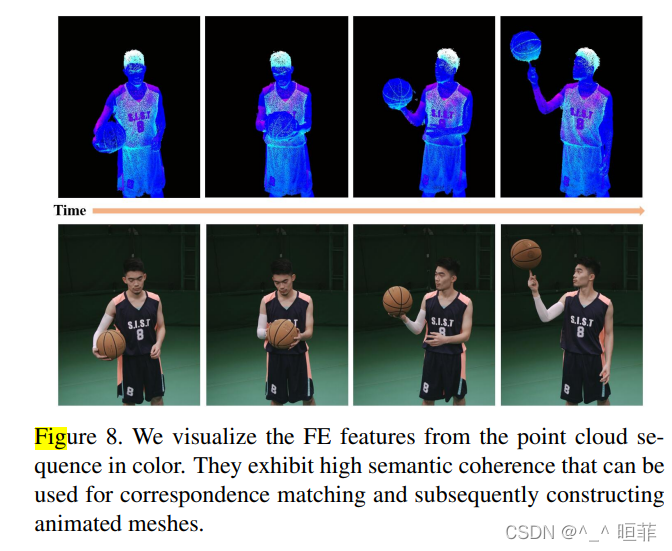

Visualization of the color part of the point cloud feature map:

5. Summary

In essence, the method combines a coarse point cloud, good images, and some geometric tricks to obtain good results.

Copyright notice

This article was written by [^_^ Min Fei]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204230611136796.html

边栏推荐

- 「玩转Lighthouse」轻量应用服务器自建DNS解析服务器

- decast id.var measure. Var data splitting and merging

- 【行走的笔记】

- mui picker和下拉刷新冲突问题

- Esp32 vhci architecture sets scan mode for traditional Bluetooth, so that the device can be searched

- 【微信小程序】flex布局使用记录

- Ffmpeg common commands

- decast id.var measure.var数据拆分与合并

- AUTOSAR from introduction to mastery 100 lectures (52) - diagnosis and communication management function unit

- Translation of multi modal visual tracking: review and empirical comparison

猜你喜欢

Melt reshape decast long data short data length conversion data cleaning row column conversion

100 GIS practical application cases (52) - how to keep the number of rows and columns consistent and aligned when cutting grids with grids in ArcGIS?

缘结西安 | CSDN与西安思源学院签约,全面开启IT人才培养新篇章

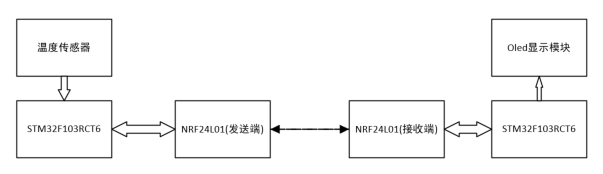

Design of STM32 multi-channel temperature measurement wireless transmission alarm system (industrial timing temperature measurement / engine room temperature timing detection, etc.)

2020最新Android大厂高频面试题解析大全(BAT TMD JD 小米)

AUTOSAR from introduction to mastery lecture 100 (84) - Summary of UDS time parameters

解决虚拟机中Oracle每次要设置ip的问题

MySQL5. 5 installation tutorial

AUTOSAR from introduction to mastery 100 lectures (81) - FIM of AUTOSAR Foundation

Riscv MMU overview

随机推荐

[quick platoon] 215 The kth largest element in the array

@Excellent you! CSDN College Club President Recruitment!

web三大组件之Filter、Listener

2020年最新字节跳动Android开发者常见面试题及详细解析

How to build a line of code with M4 qprotex

The filter() traverses the array, which is extremely friendly

AUTOSAR from introduction to mastery 100 lectures (83) - bootloader self refresh

decast id.var measure. Var data splitting and merging

Async void provoque l'écrasement du programme

Servlet of three web components

Stack protector under armcc / GCC

Wu Enda's programming assignment - logistic regression with a neural network mindset

AUTOSAR from introduction to mastery 100 lectures (50) - AUTOSAR memory management series - ECU abstraction layer and MCAL layer

Playwright contrôle l'ouverture de la navigation Google locale et télécharge des fichiers

The project file '' has been renamed or is no longer in the solution, and the source control provider associated with the solution could not be found - two engineering problems

filter()遍历Array异常友好

[Technical Specification]: how to write technical documents?

X509 parsing

Imx6ull QEMU bare metal tutorial 1: GPIO, iomux, I2C

nodejs + mysql 实现简单注册功能(小demo)