
Data Mining: Naive Bayes Classification

2022-04-22 05:44:00 【Muxi dare】

"Data Mining", National University of Defense Technology

"Data Mining", Qingdao University

Python: Bayesian classification

Bayesian classification is based on Bayes' theorem and is one of the core methods of machine learning.

There are currently four main kinds of Bayesian classifiers:

- Naive Bayes classifier (NBC)

- TAN (tree-augmented naive Bayes)

- BAN (Bayesian-network-augmented naive Bayes)

- GBN (general Bayesian network)

Naive Bayes classification in data mining

• The naive Bayes classifier has a solid mathematical foundation and stable classification efficiency. The model also needs few parameters to be estimated, is not very sensitive to missing data, and is relatively simple as an algorithm.

Bayes' theorem

The ultimate goal is to compute p(category | features).

• The "naive" in naive Bayes refers to the assumption that the features are conditionally independent of each other given the category.
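In standard notation, the goal and the independence assumption can be written out as follows. The symbols $c$ (a category) and $x_1, \dots, x_n$ (the features) are chosen here for illustration and do not come from the original post.

$$
p(c \mid x_1, \dots, x_n) = \frac{p(c)\, p(x_1, \dots, x_n \mid c)}{p(x_1, \dots, x_n)}
$$

$$
p(x_1, \dots, x_n \mid c) = \prod_{i=1}^{n} p(x_i \mid c)
\quad\Longrightarrow\quad
\hat{c} = \arg\max_{c}\; p(c) \prod_{i=1}^{n} p(x_i \mid c)
$$

The denominator $p(x_1, \dots, x_n)$ is the same for every category, so it can be dropped when only the most probable category is needed.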

Working process
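A typical naive Bayes workflow is: estimate the prior of each category, estimate the conditional probability of each feature value given the category, then pick the category with the largest product. The sketch below is a minimal from-scratch version for categorical features, not the method used in the courses referenced above. The function names, the Laplace (add-one) smoothing, and the toy weather data are all assumptions made for illustration.

```python
from collections import Counter, defaultdict


def train_naive_bayes(rows, labels):
    """Count classes and per-feature values from categorical training data."""
    class_counts = Counter(labels)
    # value_counts[(feature_index, label)][value] = number of occurrences
    value_counts = defaultdict(Counter)
    # Distinct values seen for each feature, needed for Laplace smoothing
    feature_values = defaultdict(set)
    for row, label in zip(rows, labels):
        for j, value in enumerate(row):
            value_counts[(j, label)][value] += 1
            feature_values[j].add(value)
    return class_counts, value_counts, feature_values


def predict(model, row):
    """Return the class with the largest unnormalized posterior, plus all scores."""
    class_counts, value_counts, feature_values = model
    total = sum(class_counts.values())
    scores = {}
    for label, n_label in class_counts.items():
        score = n_label / total  # prior p(c)
        for j, value in enumerate(row):
            # Laplace-smoothed estimate of p(x_j | c)
            count = value_counts[(j, label)][value]
            score *= (count + 1) / (n_label + len(feature_values[j]))
        scores[label] = score
    return max(scores, key=scores.get), scores


# Hypothetical toy data: (outlook, windy) -> play?
rows = [("sunny", "no"), ("sunny", "yes"), ("rainy", "yes"),
        ("rainy", "no"), ("sunny", "no"), ("rainy", "yes")]
labels = ["yes", "no", "no", "yes", "yes", "no"]

model = train_naive_bayes(rows, labels)
label, scores = predict(model, ("sunny", "no"))
print(label, scores)
```

The scores are unnormalized: dividing each by their sum would give the posterior probabilities, but the ranking of categories is the same either way.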

Advantages and disadvantages

- Advantages:

(1) The logic is simple and easy to implement, and the time and space overhead during classification is relatively small.

(2) The algorithm is relatively stable and robust.

- Disadvantages:

Naive Bayes assumes conditional independence between attributes. In many practical problems this assumption does not hold, and ignoring the correlation between attributes reduces classification performance.
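One way to see the cost of the independence assumption is to feed the model the same feature twice. The two copies are perfectly correlated, yet naive Bayes counts the evidence twice, so its predicted probability should come out more extreme. The sketch below assumes scikit-learn's GaussianNB and a made-up one-feature dataset; it is an illustration, not a benchmark.

```python
import numpy as np
from sklearn.naive_bayes import GaussianNB

# Hypothetical one-feature data: class 0 centred at 0, class 1 centred at 2
X = np.array([[-1.0], [0.0], [1.0], [1.0], [2.0], [3.0]])
y = np.array([0, 0, 0, 1, 1, 1])
query = np.array([[0.5]])

# Model trained on the single feature
p_single = GaussianNB().fit(X, y).predict_proba(query)[0, 0]

# Same data with the feature duplicated: the two columns are perfectly correlated
X_dup = np.hstack([X, X])
query_dup = np.hstack([query, query])
p_dup = GaussianNB().fit(X_dup, y).predict_proba(query_dup)[0, 0]

print(f"p(class 0 | x) with one copy:   {p_single:.3f}")
print(f"p(class 0 | x) with two copies: {p_dup:.3f}")
```

The duplicated column adds no information, so a model that accounted for the correlation would report the same probability in both cases.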

Supplement

Source: Whiteboard derivation notes

Python implementation

sklearn.naive_bayes: the naive Bayes module

Depending on the distribution assumed for the features given the category, the scikit-learn library provides five naive Bayes classification algorithms:

- Bernoulli naive Bayes (BernoulliNB)

- Categorical naive Bayes (CategoricalNB)

- Gaussian naive Bayes (GaussianNB)

- Multinomial naive Bayes (MultinomialNB)

- Complement naive Bayes (ComplementNB)
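As a rough guide, the choice among these classes follows the type of the features. The sketch below pairs three of them with the kind of data each is usually applied to; the tiny arrays are hypothetical and only meant to show the call pattern.

```python
import numpy as np
from sklearn.naive_bayes import BernoulliNB, GaussianNB, MultinomialNB

y = np.array([0, 0, 1, 1])

# Continuous measurements -> GaussianNB
X_cont = np.array([[1.0, 2.1], [1.2, 1.9], [3.9, 5.0], [4.1, 5.2]])
# Non-negative counts (for example word counts) -> MultinomialNB
X_counts = np.array([[3, 0, 1], [4, 0, 0], [0, 3, 1], [0, 5, 0]])
# Binary presence/absence flags -> BernoulliNB
X_bin = (X_counts > 0).astype(int)

pred_gauss = GaussianNB().fit(X_cont, y).predict([[1.1, 2.0]])[0]
pred_multi = MultinomialNB().fit(X_counts, y).predict([[2, 0, 0]])[0]
pred_bern = BernoulliNB().fit(X_bin, y).predict([[1, 0, 0]])[0]

print(pred_gauss, pred_multi, pred_bern)
```

All three classes share the same `fit` / `predict` interface, so swapping one for another only requires that the feature encoding matches the assumed distribution.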

Example

import pandas as pd
from sklearn.naive_bayes import GaussianNB

# Path as given in the original post; point it at your local copy of diabetes.csv
data_url = "../1. data mining - National Defense University of science and technology / Data packets /diabetes.csv"
df = pd.read_csv(data_url)

# First 735 rows for training: columns 0 to 7 are features, column 8 is the label
x = df.iloc[0:735, 0:8]
y = df.iloc[0:735, 8]
clf = GaussianNB().fit(x, y)

# Remaining rows (735 to 767) for testing: predictions next to the true labels
dftest = df.iloc[735:768, 0:8]
df1 = pd.DataFrame({
    'test': clf.predict(dftest),
    'true': df.iloc[735:768, 8].to_numpy(),
})

# Accuracy calculation: fraction of test rows predicted correctly
acc = (df1['test'] == df1['true']).mean()
print("Accuracy:", acc)

# Second example: iris data with a random train/test split
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB

# Function encapsulation: train Gaussian naive Bayes on a DataFrame and return the test accuracy
def Bayes_test(df):
    # Columns 1 to 3 are used as features and column 4 as the label
    X = df.iloc[:, 1:4]
    y = df.iloc[:, 4]
    # Hold out 20% of the rows for testing
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)
    print(y_test)
    # Use Gaussian naive Bayes to fit the training data
    clf = GaussianNB()
    clf.fit(X_train, y_train)
    # Evaluate on the held-out rows
    y_pred = clf.predict(X_test)
    print(y_pred)
    acc = np.sum(y_test == y_pred) / X_test.shape[0]
    return acc

# Read the data and calculate the accuracy
# Path as given in the original post; point it at your local copy of iris.csv
data_url = "../1. data mining - National Defense University of science and technology / Data packets /iris.csv"
df = pd.read_csv(data_url, index_col=0)
acc = Bayes_test(df)
print("Accuracy:", acc)
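The CSV paths above point at the author's local files. As a self-contained variant, the sketch below assumes the iris copy bundled with scikit-learn (`load_iris`) can stand in for iris.csv, and it scores the model with 5-fold cross-validation (`cross_val_score`) instead of a single split. Neither choice appears in the original post.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB

# Bundled iris data: 150 samples, 4 numeric features, 3 classes
X, y = load_iris(return_X_y=True)

# 5-fold cross-validation: each fold is held out once for testing
scores = cross_val_score(GaussianNB(), X, y, cv=5)

print("Accuracy per fold:", scores)
print("Mean accuracy:", scores.mean())
```

Averaging over several folds gives a less split-dependent estimate than the single 80/20 split, at the cost of fitting the model five times.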

Copyright notice

This article was written by [Muxi dare]. Please include a link to the original when reposting. Thanks.

https://yzsam.com/2022/04/202204220535577182.html

边栏推荐

- fastjson判断JSON字符串是Object还是List<Object>

- TP50、TP90、TP99的理解和使用

- The ECDSA host key for raspberrypi.local has changed 解决方案

- strcpy的实现你知道吗?

- 数位dp(模板)

- Simulate the infectious disease model with MATLAB (only do matlab simulation learning and practice, not actual situation and application)

- 折现分割平面

- Homebrew的基本使用与常见异常

- My common development software

- Mysql基础知识

猜你喜欢

随机推荐

stl alloc 空间分配器 代码解析

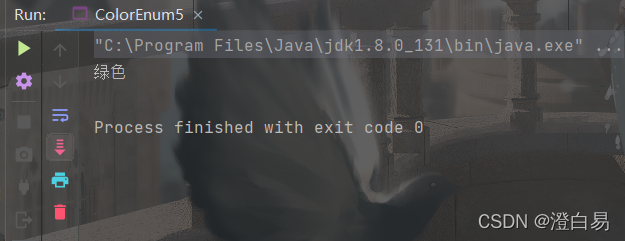

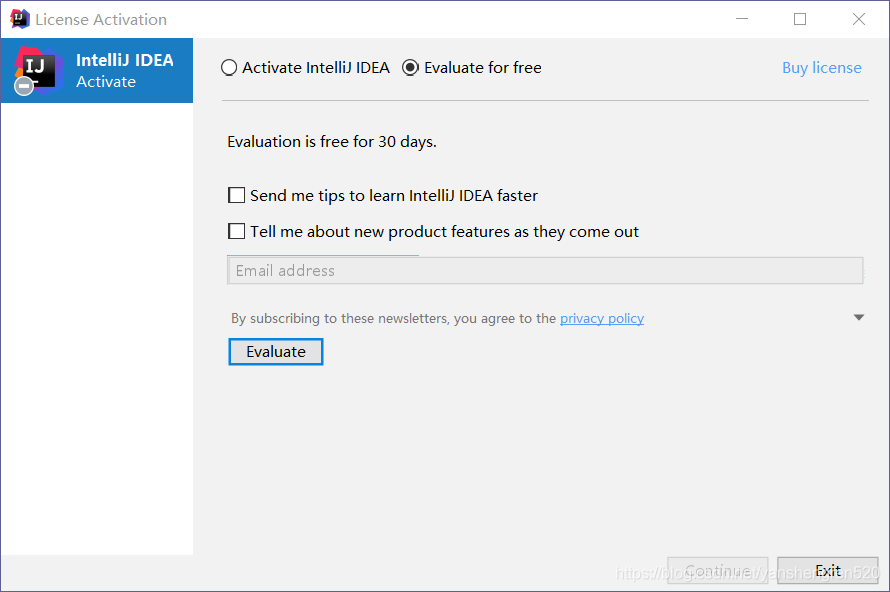

ENUM et expressions lambda

Write a program to automatically generate the two-color ball number of welfare lottery with MATLAB

认识和安装MySQL

01背包问题(模板)

MySQL command

事件监听器

kibana 搜索语法

线程池的几个常识

提高工作效率的利器

随机字符串工具类RandomStringUtils详解

The nearest common ancestor problem of sequentially stored binary trees | PTA

IDEA值得推荐的20款优秀的插件

Recommended system notes (Miscellaneous)

AcWing 836. 合并集合(并查集)

记录一次项目经历和项目中遇到的技术

力扣19. 删除链表的倒数第 N 个结点

可重入锁卖票示例

How to use on duplicate key update in MySQL

MySQL表约束和表设计