Machine learning model ensembling methods!

2022-04-22 14:44:00 【Datawhale】

Ensemble learning basics

Ensemble learning is a machine learning technique that combines two or more models. It is a branch of machine learning, usually used when pursuing greater predictive power.

Ensemble learning is often used by top-ranked and winning participants in machine learning competitions. Modern machine learning libraries (scikit-learn, XGBoost) have common ensemble methods built in.

Introduction to ensemble learning

Ensemble learning trains multiple different models and then combines them into a single model that makes the prediction. An ensemble usually performs better than any single model.

There are three common types of ensemble learning techniques:

Bagging, e.g. bagged decision trees and random forest.

Boosting, e.g. AdaBoost and gradient boosting.

Stacking, e.g. voting and using a meta-model.

Ensemble learning can reduce the variance of the predictions, and it often performs better than a single model.

Bagging

Bagging samples the training dataset repeatedly, trains a model on each sample, and so obtains several sets of predictions. To combine them, the predictions of the individual models are voted on (classification) or averaged (regression).

The key to Bagging is how the dataset is sampled. The common approach is to sample along the row (sample) dimension, using sampling with replacement.
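Sampling rows with replacement can be sketched in a few lines of NumPy. This is only an illustration: the dataset size of 10 rows and the seed are assumptions made for the example, not values from the article.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed, chosen only for repeatability
n_rows = 10                     # hypothetical dataset size

# Draw n_rows row indices with replacement: some rows repeat, others are left out.
bootstrap_idx = rng.choice(n_rows, size=n_rows, replace=True)

# Rows that were never drawn are the "out-of-bag" rows for this sample.
out_of_bag = np.setdiff1d(np.arange(n_rows), bootstrap_idx)

print("bootstrap rows: ", np.sort(bootstrap_idx))
print("out-of-bag rows:", out_of_bag)
```

Each model in the ensemble would be trained on its own draw like this one, which is what makes the models differ from each other.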

Bagging is available through BaggingClassifier and BaggingRegressor. By default they use a decision tree as the base model, and the n_estimators parameter specifies the number of trees to create.

from numpy import mean, std
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
from sklearn.ensemble import BaggingClassifier

# Create a sample dataset
X, y = make_classification(random_state=1)

# Create the bagging model (decision trees are the default base model)
model = BaggingClassifier(n_estimators=50)

# Define the cross-validation splits: 10 folds, repeated 3 times
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)

# Evaluate the model's accuracy on every split
n_scores = cross_val_score(model, X, y, scoring='accuracy', cv=cv, n_jobs=-1)

# Print the mean and standard deviation of the accuracy
print('Mean Accuracy: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))

Random Forest

Random forest is the combination of Bagging with tree models:

A random forest ensemble fits decision trees on different bootstrap samples of the training dataset.

A random forest also samples the features (columns) of the dataset.

When building each decision tree, a random forest does not consider all features when choosing a split point. Instead, it restricts the candidates to a random subset of the features.
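The per-split feature sampling described above can be illustrated as follows. This is a sketch, not scikit-learn's internal code: the feature count of 20 is an assumption, and the square-root rule is used because it is a common choice for classification.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed, chosen only for repeatability
n_features = 20                 # hypothetical number of columns

# A common choice for classification: sqrt(n_features) candidates per split.
max_features = int(np.sqrt(n_features))

# Each split draws its own subset of columns, without replacement.
for split in range(3):
    candidates = np.sort(rng.choice(n_features, size=max_features, replace=False))
    print(f"split {split}: candidate feature columns {candidates}")
```

Because every split sees a different subset, the trees become less correlated with each other than plain bagged trees.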

Random forest ensembles are available in scikit-learn through the RandomForestClassifier and RandomForestRegressor classes. The n_estimators parameter specifies the number of trees to create, and the max_features parameter specifies the number of randomly selected features to consider at each split point.

from numpy import mean, std
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
from sklearn.ensemble import RandomForestClassifier

# Create a sample dataset
X, y = make_classification(random_state=1)

# Create the random forest model
model = RandomForestClassifier(n_estimators=50)

# Define the cross-validation splits: 10 folds, repeated 3 times
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)

# Evaluate the model's accuracy on every split
n_scores = cross_val_score(model, X, y, scoring='accuracy', cv=cv, n_jobs=-1)

# Print the mean and standard deviation of the accuracy
print('Mean Accuracy: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))

AdaBoost

Boosting tries to correct the errors made by the previous models at each iteration. The more iterations, the lower the ensemble's error, at least within the limits of what the data supports and before the training dataset is overfit.

Boosting was originally developed as a theoretical idea. AdaBoost was the first successful implementation of a Boosting-based ensemble algorithm.

AdaBoost fits decision trees on reweighted versions of the training dataset, so that each tree pays more attention to the examples that previous members got wrong. AdaBoost does not use full decision trees. Instead, it uses very simple trees that make a single decision on one input variable before making a prediction. These short trees are called decision stumps.
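The reweighting step can be sketched for one round of the classic discrete AdaBoost update. The labels, predictions, and the helper name adaboost_round are hypothetical, and the sketch assumes labels in {-1, +1} and a weighted error strictly between 0 and 0.5.

```python
import numpy as np

def adaboost_round(y_true, y_pred, weights):
    """One round of discrete AdaBoost for labels in {-1, +1} (illustrative helper)."""
    miss = y_true != y_pred
    # Weighted error rate of the current weak learner.
    err = np.sum(weights[miss]) / np.sum(weights)
    # The learner's say in the final vote: larger when its error is smaller.
    alpha = 0.5 * np.log((1 - err) / err)
    # Misclassified examples are scaled up, correct ones scaled down.
    new_weights = weights * np.exp(-alpha * y_true * y_pred)
    return alpha, new_weights / new_weights.sum()

y_true = np.array([1, 1, -1, -1, 1])
y_pred = np.array([1, -1, -1, -1, 1])   # the second example is misclassified
weights = np.full(5, 0.2)               # start from uniform weights

alpha, new_weights = adaboost_round(y_true, y_pred, weights)
print("alpha:", alpha)
print("new weights:", new_weights)
```

After this round the single misclassified example carries half of the total weight, so the next stump is pushed to get it right.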

AdaBoost is available through AdaBoostClassifier and AdaBoostRegressor. By default they use shallow decision trees as the base model (a decision stump in the case of AdaBoostClassifier), and the n_estimators parameter specifies the number of trees to create.

from numpy import mean, std
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
from sklearn.ensemble import AdaBoostClassifier

# Create a sample dataset
X, y = make_classification(random_state=1)

# Create the AdaBoost model
model = AdaBoostClassifier(n_estimators=50)

# Define the cross-validation splits: 10 folds, repeated 3 times
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)

# Evaluate the model's accuracy on every split
n_scores = cross_val_score(model, X, y, scoring='accuracy', cv=cv, n_jobs=-1)

# Print the mean and standard deviation of the accuracy
print('Mean Accuracy: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))

Gradient Boosting

Gradient Boosting is a framework for boosting ensemble algorithms and an extension of AdaBoost. It is defined as an additive model within a statistical framework. It allows arbitrary (differentiable) loss functions, which makes it more flexible, and it allows penalties such as shrinkage to reduce overfitting.
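The additive model and shrinkage can be made concrete with a minimal sketch for squared-error regression, where each new tree is fit to the current residuals. The dataset, tree depth, learning rate, and number of trees are assumptions chosen for illustration, not scikit-learn's actual implementation.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.tree import DecisionTreeRegressor

# Hypothetical regression data for the illustration.
X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=1)

learning_rate = 0.1
n_trees = 50

# Start from a constant prediction (the mean minimizes squared error).
prediction = np.full(y.shape, y.mean())
initial_mse = np.mean((y - prediction) ** 2)

trees = []
for _ in range(n_trees):
    # For squared-error loss the negative gradient is simply the residual.
    residual = y - prediction
    tree = DecisionTreeRegressor(max_depth=2, random_state=1).fit(X, residual)
    # Shrinkage: add only a fraction of each tree's correction.
    prediction += learning_rate * tree.predict(X)
    trees.append(tree)

final_mse = np.mean((y - prediction) ** 2)
print(f"training MSE: {initial_mse:.1f} -> {final_mse:.1f}")
```

A smaller learning_rate makes each tree contribute less, which usually calls for more trees but tends to overfit less.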

Gradient Boosting also borrows operations from Bagging, such as sampling the rows and columns of the training dataset. This variant is called stochastic gradient boosting.
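In scikit-learn, row and column sampling can be switched on through the subsample and max_features parameters of GradientBoostingClassifier. The values 0.8 and 'sqrt' below are assumptions picked for the example, not recommendations from the article.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier

X, y = make_classification(random_state=1)

model = GradientBoostingClassifier(
    n_estimators=50,
    subsample=0.8,        # each tree is fit on a random 80% of the rows
    max_features='sqrt',  # each split considers a random subset of the columns
    random_state=1,
)
model.fit(X, y)
print('Training accuracy: %.3f' % model.score(X, y))
```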

For structured or tabular data, Gradient Boosting is a very successful ensemble technique, although fitting can be slow because the models are added sequentially. More efficient implementations have been developed, such as XGBoost and LightGBM.

Gradient Boosting is available through GradientBoostingClassifier and GradientBoostingRegressor, which use decision trees as the base model. The n_estimators parameter specifies the number of trees to create, and the learning_rate parameter controls how much each tree contributes.

from numpy import mean, std
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
from sklearn.ensemble import GradientBoostingClassifier

# Create a sample dataset
X, y = make_classification(random_state=1)

# Create the Gradient Boosting model
model = GradientBoostingClassifier(n_estimators=50)

# Define the cross-validation splits: 10 folds, repeated 3 times
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)

# Evaluate the model's accuracy on every split
n_scores = cross_val_score(model, X, y, scoring='accuracy', cv=cv, n_jobs=-1)

# Print the mean and standard deviation of the accuracy
print('Mean Accuracy: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))

Voting

Voting uses simple statistics to combine the predictions of multiple models.

Hard voting: vote on the predicted classes;

Soft voting: average the predicted probabilities.
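The two rules can give different answers for the same sample, as this small sketch shows. The probabilities are made up for the illustration and do not come from any trained model.

```python
import numpy as np

# Hypothetical class probabilities from three models for one sample;
# columns are [P(class 0), P(class 1)].
probas = np.array([
    [0.55, 0.45],
    [0.55, 0.45],
    [0.10, 0.90],
])

# Hard voting: each model votes for its most likely class, the majority wins.
votes = probas.argmax(axis=1)
hard = np.bincount(votes, minlength=2).argmax()

# Soft voting: average the probabilities, then pick the most likely class.
mean_proba = probas.mean(axis=0)
soft = mean_proba.argmax()

print("hard voting picks class", hard)  # two of three models prefer class 0
print("soft voting picks class", soft)  # the averaged probability favors class 1
```

Soft voting lets one very confident model outweigh two lukewarm ones, which is why it requires models that output probabilities.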

Voting is available through VotingClassifier and VotingRegressor. They take a list of base models as a parameter, and each item in the list must be a tuple of a name and a model.

from numpy import mean, std
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
from sklearn.ensemble import VotingClassifier
from sklearn.naive_bayes import GaussianNB
from sklearn.linear_model import LogisticRegression

# Create a sample dataset
X, y = make_classification(random_state=1)

# List of (name, model) tuples for the base models
models = [('lr', LogisticRegression()), ('nb', GaussianNB())]

# Create the voting model (soft voting averages predicted probabilities)
model = VotingClassifier(models, voting='soft')

# Define the cross-validation splits: 10 folds, repeated 3 times
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)

# Evaluate the model's accuracy on every split
n_scores = cross_val_score(model, X, y, scoring='accuracy', cv=cv, n_jobs=-1)

# Print the mean and standard deviation of the accuracy
print('Mean Accuracy: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))

Stacking

Stacking combines the predictions of several different types of base models, much like Voting. Unlike Voting, Stacking can adjust the weight of each model based on validation data, by training a meta-model on the base models' predictions.
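The idea can be sketched by hand: collect out-of-fold predictions from each base model and fit a meta-model on them. The choice of base models, the 5 folds, and LogisticRegression as the meta-model are assumptions for this illustration.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_predict
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(random_state=1)
base_models = [KNeighborsClassifier(), DecisionTreeClassifier(random_state=1)]

# Out-of-fold P(class 1) from each base model: every row is predicted by a
# model that did not see that row during training.
meta_features = np.column_stack([
    cross_val_predict(m, X, y, cv=5, method='predict_proba')[:, 1]
    for m in base_models
])

# The meta-model learns how much to trust each base model.
meta_model = LogisticRegression().fit(meta_features, y)
print('Meta-model coefficients:', meta_model.coef_)
```

Using out-of-fold predictions matters here: if the meta-model were trained on predictions for rows the base models had already seen, it would overrate whichever base model overfits the most.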

Stacking needs to be used together with cross-validation, so that the meta-model is trained on predictions for data the base models did not see. It is available through StackingClassifier and StackingRegressor, which take the base models as a parameter.

from numpy import mean, std
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedStratifiedKFold
from sklearn.ensemble import StackingClassifier
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier
from sklearn.linear_model import LogisticRegression

# Create a sample dataset
X, y = make_classification(random_state=1)

# List of (name, model) tuples for the base models
models = [('knn', KNeighborsClassifier()), ('tree', DecisionTreeClassifier())]

# Create the stacking model (assumed here: LogisticRegression as the
# meta-model and 5-fold internal cross-validation)
model = StackingClassifier(estimators=models, final_estimator=LogisticRegression(), cv=5)

# Define the cross-validation splits: 10 folds, repeated 3 times
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)

# Evaluate the model's accuracy on every split
n_scores = cross_val_score(model, X, y, scoring='accuracy', cv=cv, n_jobs=-1)

# Print the mean and standard deviation of the accuracy
print('Mean Accuracy: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))

Copyright notice

This article was written by [Datawhale]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204221433395573.html

边栏推荐

- 集合类

- Itologicaloperator instructions

- People always simply think that the industrial Internet is a broader platform than the Internet

- 20 required questions for 25K + Android Engineer Interview and Netease Android interview

- 985硕艰难转行Android之路 加面经分享,美团安卓面试

- Awk command

- GeoServer WMTs slice calculation

- Gradle references peer projects

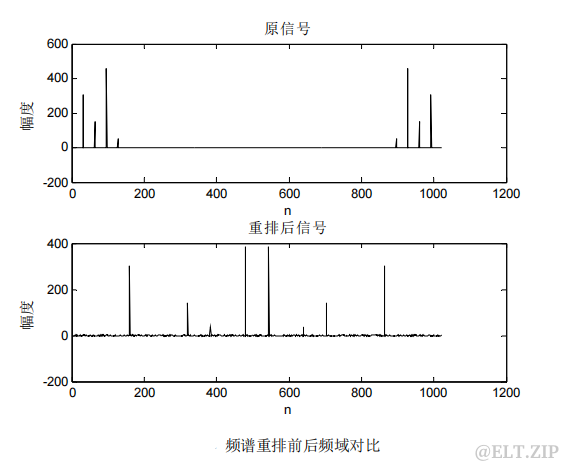

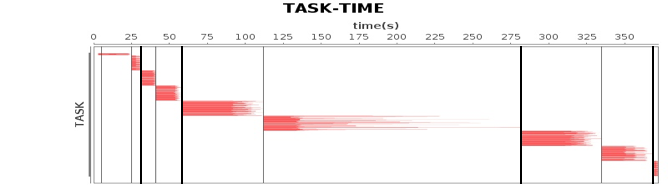

- 【ELT.ZIP】OpenHarmony啃论文俱乐部——一文穿透多媒体过往前沿

- Mobile terminal adaptive and responsive layout

猜你喜欢

数据库资源负载管理(下篇)

阿里云IoT流转到postgresql数据库方案

android 国际区号注册手机号编码 以及常用城市列表,安卓内存优化面试

博睿数据携手F5共同构建金融科技从代码到用户的全数据链DNA

【ELT.ZIP】OpenHarmony啃论文俱乐部——细数生活中的压缩点滴

Weekly Q & A highlights: is polardb-x fully compatible with MySQL?

![[ELT. Zip] openharmony paper Club - you shouldn't miss these small landscapes](/img/a6/d8be8fb98e26199bceecb491282568.jpg)

[ELT. Zip] openharmony paper Club - you shouldn't miss these small landscapes

Android 面试:事件分发8连问,安卓基础面试题

带你了解极具弹性的Spark架构的原理

机器学习模型融合大法!

随机推荐

People always simply think that the industrial Internet is a broader platform than the Internet

TIANTI race - l2-002 linked list weight removal (25 points)

Sonar of code coverage

dxg:TableView.FormatConditions 表格按条件高亮显示

Collection class

985硕艰难转行Android之路 加面经分享,美团安卓面试

人们总是简单的认为产业互联网是一个比互联网还要广大的平台

Arcengine打印视图与布局视图原理解析

Android interview: event distribution 8 consecutive questions, Android basic interview questions

postMassage留个坑

Arcengine 自定义工具鼠标样式设置

ArcEngine line to face conversion

内卷大厂系列《搜索自动补全二连击》

dxg:TableView. The FormatConditions table is highlighted by criteria

带你了解极具弹性的Spark架构的原理

Wireguard series (1): what is v * *?

Archengine custom tool mouse style settings

远程服务器上共享文件夹的上传与下载

jar 反编译(decode compiler) 在线工具

@Resources and constructors