当前位置:网站首页>Environment build onnxruntime 】

Environment build onnxruntime 】

2022-08-09 09:16:00 【YunZhe.】

1, Introduction

onnxruntime is an engine for onnx model inference.

2, install

2.1 cuda,cudnn

2.2 cmake, version>=3.13.0

sudo apt-get install libssl-devsudo apt-get autoremove cmake # uninstallwget https://cmake.org/files/v3.17/cmake-3.17.3.tar.gztar -xf cmake-3.17.3cd cmake-3.17.3./bootstrapmake -j 8sudo make installcmake -versioncmake version 3.17.3CMake suite maintained and supported by Kitware (kitware.com/cmake).2.3 tensorrt

2.4 onnxruntime

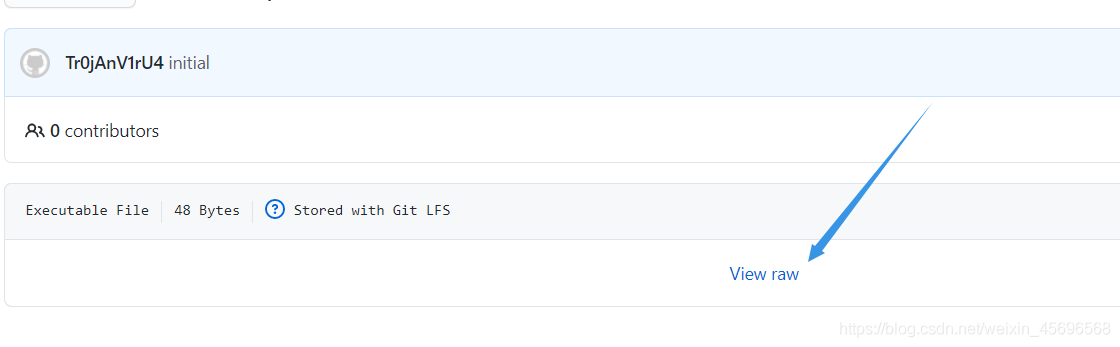

conda activate py36 # Switch virtual environmentgit clone https://github.com/microsoft/onnxruntime.gitcd onnxruntimegit submodule syncgit submodule update --init --recursive./build.sh \--use_cuda \--cuda_version=11.0 \--cuda_home=/usr/local/cuda \--cudnn_home=/usr/local/cuda \--use_tensorrt --tensorrt_home=$TENSORRT_ROOT \--build_shared_lib --enable_pybind \--build_wheel --update --buildpip build/Linux/Debug/dist/onnxruntime_gpu_tensorrt-1.6.0-cp36-cp36m-linux_x86_64.whl# Get the specified versiongit clone -b v1.6.0 https://github.com/microsoft/onnxruntime.gitcd onnxruntimegit checkout -b v1.6.0git submodule syncgit submodule update --init --recursive3,pip install

pip install onnxruntime-gpu==1.6.0 onnx==1.9.0 onnxconverter_common==1.6.0 # cuda 10.2pip install onnxruntime-gpu==1.8.1 onnx==1.9.0 onnxconverter_common==1.8.1 # cuda 11.0import onnxruntimeonnxruntime.get_available_providers()sess = onnxruntime.InferenceSession(onnx_path)sess.set_providers(['CUDAExecutionProvider'], [ {'device_id': 0}])result = sess.run([output_name], {input_name:x}) # x is input3, onnxruntime and pytorch inference

# -*- coding: utf-8 -*-import torchfrom torchvision import modelsimport onnxruntimeimport numpy as npmodel = models.resnet18(pretrained=True)model.eval().cuda()##pytorchx = torch.rand(2,3,224,224).cuda()out_pt = model(x)print(out_pt.size())# onnxonnx_path = "resnet18.onnx"dynamic_axes = {'input': {0: 'batch_size'}}torch.onnx.export(model,x,onnx_path,export_params=True,opset_version=11,do_constant_folding=True,input_names=['input'],dynamic_axes=dynamic_axes)sess = onnxruntime.InferenceSession(onnx_path)sess.set_providers(['CUDAExecutionProvider'], [ {'device_id': 0}])input_name = sess.get_inputs()[0].nameoutput_name = sess.get_outputs()[0].name# onnxruntime inferencexx = x.cpu().numpy().astype(np.float32)result = sess.run([output_name], {input_name:xx}) # x is inputprint(result[0].shape)#MSEmse = np.mean((out_pt.data.cpu().numpy() - result[0]) ** 2)print(mse)Output results

torch.Size([2, 1000])(2, 1000)9.275762e-13边栏推荐

猜你喜欢

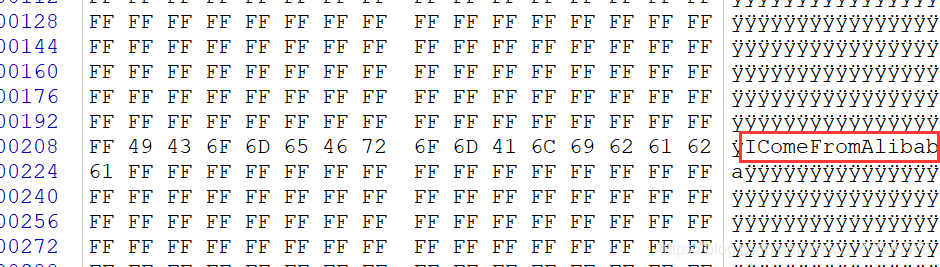

The 5th Blue Cap Cup preliminary misc reappears after the game

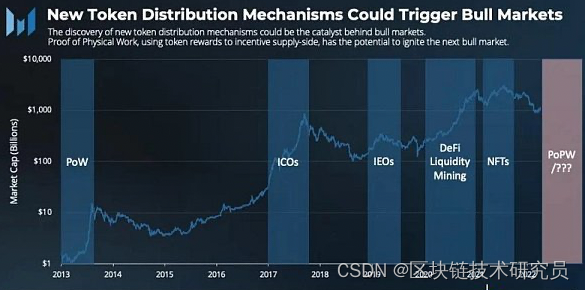

PoPW token distribution mechanism may ignite the next bull market

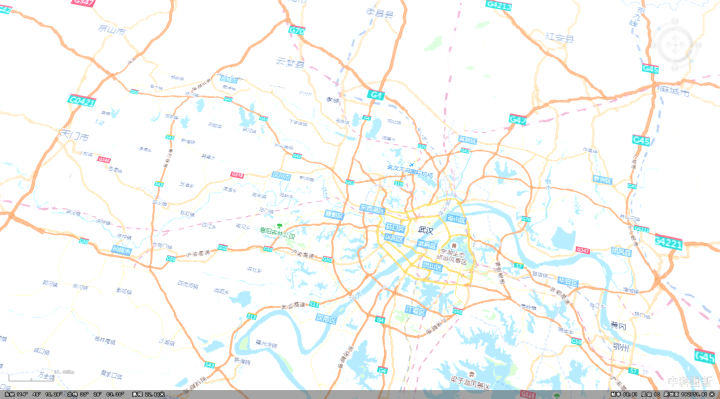

中国打造国产“谷歌地球”清晰度吓人

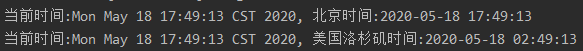

Calendar类和Date类转换时区 && 部分时区城市列表

BUUCTF MISC Writing Notes (1)

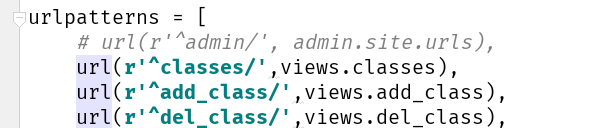

Django实现对数据库数据增删改查(一)

政务中心导航定位系统,让高效率办事成为可能

【场景化解决方案】OA审批与金智CRM数据同步

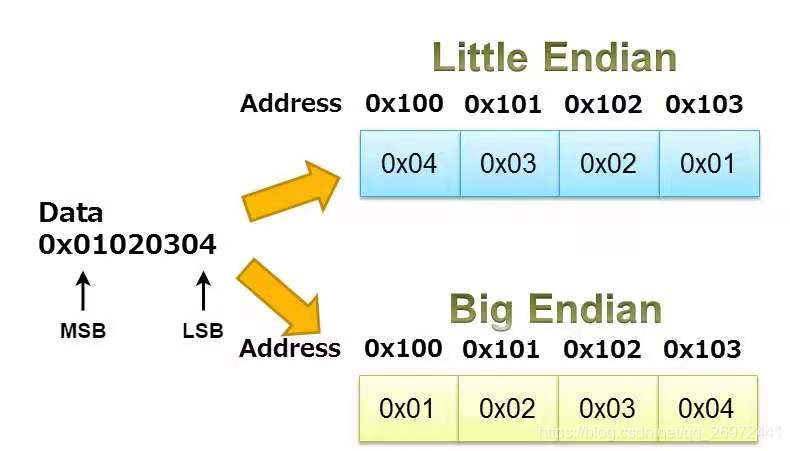

The difference between big-endian and little-endian storage is easy to understand at a glance

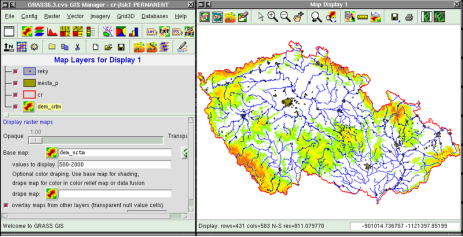

这12个GIS软件一个比一个好用

随机推荐

nodeMCU(ESP8266)和RC522的接线图

MySQL事务隔离

js实现看板全屏功能

MySQL event_single event_timed loop event

【Pytorch】安装mish_cuda

Domestic Google earth, terrain analysis seconds kill with the map software

Amplify Shader Editor手册 Unity ASE(中文版)

教你如何免费获取0.1米高精度卫星地图

【LeetCode每日一题】——225.用队列实现栈

栈的实现之用链表实现

Redis高可用

ARMv8/ARMv9视频课程-Trustzone/TEE/安全视频课程

绝了,这套RESTful API接口设计总结

JMeter参数化4种实现方式

AES/ECB/PKCS5Padding加解密

没有对象的可以进来看看, 这里有对象介绍

Flutter的基础知识之Dart语法

SQL语言中的distinct说明

location.href用法

Calendar类和Date类转换时区 && 部分时区城市列表