Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context: Paper Summary

2022-04-23 08:22:00 【A grain of sand in the vast sea of people】

Paper: Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context

Code: Transformer-XL code

1. Brief introduction to the paper

Transformer-XL = Transformer Extra Long

2. What is Transformer-XL?

XLNet borrows two techniques from Transformer-XL, the Segment Recurrence Mechanism and Relative Positional Encoding, and integrates them into its own model.

The Segment Recurrence Mechanism caches the hidden states computed for the previous segment and reuses them when computing the current segment. This gives the model access to a longer context than a single segment.
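The sketch below illustrates segment-level recurrence under simplifying assumptions. It uses a single attention layer with identity query/key/value projections and tiny hand-written embeddings. The helper names (`attend`, `process_segments`) are hypothetical and do not come from the official code. The cached states here are just the previous segment's inputs. In the real model, the cache holds the hidden states of the layer below, and gradients are not propagated into it.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def attend(segment: List[Vector], memory: List[Vector]) -> List[Vector]:
    """Causal scaled dot-product attention over [memory; segment].

    Queries come only from the current segment; keys/values also include the
    cached memory. Projections are identity matrices to keep the sketch short.
    """
    context = memory + segment
    outputs = []
    for i, query in enumerate(segment):
        visible = context[: len(memory) + i + 1]  # all memory + segment tokens up to i
        scores = [dot(query, key) / math.sqrt(len(query)) for key in visible]
        peak = max(scores)
        weights = [math.exp(s - peak) for s in scores]  # numerically stable softmax
        total = sum(weights)
        outputs.append(
            [sum(w * v[d] for w, v in zip(weights, visible)) / total for d in range(len(query))]
        )
    return outputs


def process_segments(embeddings: List[Vector], seg_len: int, mem_len: int) -> List[Vector]:
    """Process a long sequence segment by segment, carrying at most mem_len cached states."""
    memory: List[Vector] = []
    outputs: List[Vector] = []
    for start in range(0, len(embeddings), seg_len):
        segment = embeddings[start : start + seg_len]
        outputs.extend(attend(segment, memory))
        # Keep only the most recent mem_len states (treated as constants during training).
        memory = (memory + segment)[-mem_len:] if mem_len > 0 else []
    return outputs


emb = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
vanilla = process_segments(emb, seg_len=2, mem_len=0)  # segments are isolated
xl = process_segments(emb, seg_len=2, mem_len=2)       # segment 2 also sees segment 1
```

With `mem_len=0`, the first token of the second segment attends only to itself. With `mem_len=2`, it also attends to the two cached states. The first segment's outputs are identical in both runs because nothing is cached yet.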

Reusing the previous segment creates a problem: with absolute positional encodings, two tokens can end up with the same position information. For example, the first token of the previous segment and the first token of the current segment would both be assigned position 0. Transformer-XL therefore adopts Relative Positional Encoding, which encodes the relative distance between tokens instead of their fixed absolute positions.
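A toy illustration of the collision follows. It assumes the memory holds exactly the previous segment, and both helper names are hypothetical. Numbering each segment from 0 gives cached and current tokens the same ids. The distance from a query to each key it can see stays distinct.

```python
from typing import List


def absolute_positions(mem_len: int, seg_len: int) -> List[int]:
    """Position ids for [memory; segment] if every segment is numbered from 0 independently."""
    return list(range(mem_len)) + list(range(seg_len))


def relative_distances(mem_len: int, query_idx: int) -> List[int]:
    """Distances i - j from one query in the current segment to every key it may attend to.

    query_idx is the query's index inside the current segment; keys are the
    cached memory followed by the current segment (causal, so j <= i).
    """
    i = mem_len + query_idx  # query's index in the concatenated sequence
    return [i - j for j in range(i + 1)]


# Memory holds the previous segment (3 tokens); the current segment also has 3 tokens.
print(absolute_positions(3, 3))  # [0, 1, 2, 0, 1, 2] -> ids collide across segments
print(relative_distances(3, 0))  # [3, 2, 1, 0] -> every key has a distinct offset
```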

3. Vanilla Transformer language models: brief introduction and shortcomings

3.1 Brief introduction

3.2 Shortcomings

3.2.1 Training with the Vanilla Model

1. Tokens at the beginning of each segment do not have sufficient context for proper optimization.

2. Limited by a fixed-length context
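Both training shortcomings can be made concrete by counting how many preceding tokens each position can attend to. This is a toy single-layer count, and the helper names are hypothetical. In a vanilla model, the first token of every segment sees nothing, and no token ever sees more than `seg_len - 1` predecessors. For contrast, the second function assumes a memory of `mem_len` cached positions, as in Transformer-XL.

```python
from typing import List


def vanilla_context_lengths(num_tokens: int, seg_len: int) -> List[int]:
    """Earlier tokens visible to each token when segments are trained in isolation."""
    return [i % seg_len for i in range(num_tokens)]


def recurrent_context_lengths(num_tokens: int, seg_len: int, mem_len: int) -> List[int]:
    """Same count for one layer that also attends to up to mem_len cached positions."""
    lengths = []
    for i in range(num_tokens):
        offset = i % seg_len    # position inside the current segment
        seg_start = i - offset  # number of tokens before this segment
        lengths.append(offset + min(mem_len, seg_start))
    return lengths


print(vanilla_context_lengths(8, 4))       # [0, 1, 2, 3, 0, 1, 2, 3]
print(recurrent_context_lengths(8, 4, 4))  # [0, 1, 2, 3, 4, 5, 6, 7]
```

In a deeper stack, each layer's memory holds states that already summarize earlier context, so the reachable context grows beyond this single-layer count.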

3.2.2 Evaluation with the Vanilla Model

1. Longest context limited by segment length.

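2. Very expensive due to recomputation: the window shifts right by only one position at each step, and the new segment is processed from scratch just to make a single prediction.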
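A rough count of attention scores shows why this recomputation hurts. The count covers a single layer only, and both helper names are hypothetical. Real cost also includes the feed-forward sublayers and every layer of the stack. The sliding-window count ignores the shorter windows at the very start of the text.

```python
def vanilla_eval_scores(num_tokens: int, seg_len: int) -> int:
    """Attention scores for sliding-window evaluation: one prediction per full window."""
    per_window = seg_len * (seg_len + 1) // 2  # causal scores inside one window
    return num_tokens * per_window


def recurrent_eval_scores(num_tokens: int, seg_len: int, mem_len: int) -> int:
    """Attention scores when each segment is processed once and attends to cached memory."""
    total = 0
    for start in range(0, num_tokens, seg_len):
        cur = min(seg_len, num_tokens - start)  # tokens in this segment
        mem = min(mem_len, start)               # cached positions available
        total += sum(mem + i + 1 for i in range(cur))
    return total


print(vanilla_eval_scores(1000, 100))         # 5050000
print(recurrent_eval_scores(1000, 100, 100))  # 140500
```

With 1,000 tokens and a 100-token window, the sliding window computes about 36 times as many scores. Under this count, the ratio grows roughly linearly with the window length.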

3.2.3 Temporal Incoherence

4. Transformer-XL: contributions and major improvements

4.1 Introducing Transformer-XL

4.1.1 Training with Transformer-XL

4.1.2 Evaluation with Transformer-XL

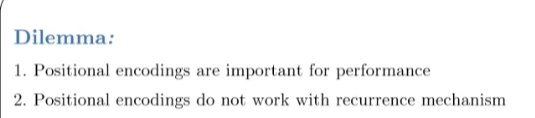

4.1.3 Solution: Relative Positional Encodings
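For reference, this is the relative attention score between query position i and key position j as given in the Transformer-XL paper. Here E_x are token embeddings, and R_{i-j} is a sinusoid encoding of the distance with no learnable parameters. The terms u and v are learned vectors that replace the query in the two position-independent bias terms. Only the distance i - j appears; absolute positions do not.

```latex
A^{\mathrm{rel}}_{i,j}
  = \underbrace{E_{x_i}^{\top} W_q^{\top} W_{k,E}\, E_{x_j}}_{(a)\ \text{content}}
  + \underbrace{E_{x_i}^{\top} W_q^{\top} W_{k,R}\, R_{i-j}}_{(b)\ \text{content-dependent position}}
  + \underbrace{u^{\top} W_{k,E}\, E_{x_j}}_{(c)\ \text{global content bias}}
  + \underbrace{v^{\top} W_{k,R}\, R_{i-j}}_{(d)\ \text{global position bias}}
```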

Benefits:

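1. Makes the recurrence mechanism workable, since positions stay unambiguous when cached states are reused

2. Better generalization to longer contexts at evaluation time

-> WordLM: train with memory length 150, evaluate with 640

-> CharLM: train with memory length 680, evaluate with 3800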
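The sketch below suggests why a longer evaluation memory is possible. The distance encoding is a fixed function, so distances never seen in training (such as 640 after training with 150) are encoded the same way. The helper name is hypothetical. Concatenating the sine half before the cosine half is an implementation choice assumed here, not something the summary specifies.

```python
import math
from typing import List


def relative_encoding(distance: int, d_model: int) -> List[float]:
    """Sinusoid encoding of a relative distance (sine half followed by cosine half).

    Nothing here is learned, so any distance can be encoded, including ones
    longer than the memory length used during training.
    """
    inv_freq = [1.0 / (10000 ** (k / d_model)) for k in range(0, d_model, 2)]
    return [math.sin(distance * f) for f in inv_freq] + [math.cos(distance * f) for f in inv_freq]


train_row = relative_encoding(150, 16)  # a distance reachable during training
eval_row = relative_encoding(640, 16)   # a longer distance only used at evaluation
print(len(train_row), len(eval_row))    # 16 16
```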

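4.2 Segment-level Recurrence

Cache the hidden states computed for the previous segment (the previous batch during training) and reuse them as extra context for the current segment.

This is analogous to truncated BPTT for RNNs, where the last hidden state of one segment is passed to the next segment as its initial hidden state.

4.3 Keep temporal information coherent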

5. Summary


Copyright notice

This article was written by [A grain of sand in the vast sea of people]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204230704087027.html
