当前位置:网站首页>[pytorch image classification] RESNET network structure

[pytorch image classification] RESNET network structure

2022-04-21 18:15:00 【Stephen-Chen】

Catalog

Batch Normalization Approval planning level

1. introduction

ResNet Is in 2015 Year by year He Kaiming Bring up the , Capture the year ImageNet The first place in the classification task in the competition , First place in target detection task , get COCO First place in target detection in data set , First place in image segmentation ,NB. The original paper is :Deep Residual Learning for Image Recognition.

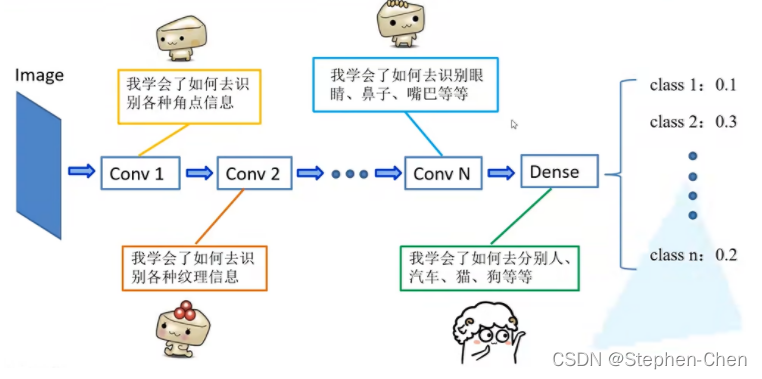

As the network goes deeper , What happened

The phenomenon of the disappearance of gradients

The gradient correlation returned by the network will be worse and worse , Close to white noise , The gradient update is also close to random disturbance

The following is a degenerate ( Poor performance in both training and test sets ), Not too fitting ( Good performance on the training set , The test set is poor )

The deeper the network shown in the figure below , The higher the error rate

2. Network innovation

Residual- Residual block

The depth residual framework is introduced ,

Let the convolution network take the learning residual mapping

Instead of every complete fitting of the stacked network, the potential fitting function

Compared with directly optimizing potential mapping H(x), Optimizing residual mapping is more tolerant

- Be careful : Add it and then go through ReLU Activation function .

- Be careful : The main branch and shortcut Of the output characteristic matrix of the branch shape Must be consistent to add elements . review ,GoogleNet Is to splice in the depth direction .

-

The output of down sampling by maximizing the pool is [56,56,64]

-

Just the input required for the solid line residual structure shape

How to understand Residual Well ?

Suppose we require the mapping of the solution to be : H ( x ) H(x) H(x). Now let's turn this problem into solving the residual mapping function of the network , That is to say F ( x ) F(x) F(x), among F ( x ) = H ( x ) − x F(x) = H(x)-x F(x)=H(x)−x. The residual is the difference between the observed value and the estimated value . here H ( x ) H(x) H(x) Is the observed value , x x x It's an estimate ( That is, the upper floor Residual Output feature mapping ). We usually call it x x x by Identity Function( Identity transformation ), It's a jump connection ; call F ( x ) F(x) F(x) by Residual Function.

Then the problem we want to solve becomes H ( x ) = F ( x ) + x H(x) = F(x)+x H(x)=F(x)+x. A little partner may wonder , Why do we have to go through F ( x ) F(x) F(x) Then we solve H ( x ) H(x) H(x) ah ! Why is it so troublesome , Can't you do it directly , Neural networks are so strong ( Three layer full connection can fit any function )! Let's analyze : If a general convolutional neural network is used , What we originally asked for is H ( x ) = F ( x ) H(x) = F(x) H(x)=F(x) Is this value right ? that , Let's now assume , When my network reaches a certain depth , Our network has reached its optimal state , in other words , At this time, the error rate is the lowest , Further deepening the network will lead to the problem of degradation ( The problem of rising error rate ). It will be very troublesome for us to update the weight of the next layer network , The value of weight is to make the next layer network also the optimal state , Right ? We assume that the input-output feature size remains unchanged , Then the next best state is to learn an identity map , It's best not to change the input characteristics , In this way, the subsequent calculation will keep , Wrong, just like the shallow layer . But it's hard , Just imagine , Here you are 3 × 3 3 \times 3 3×3 Convolution , Mathematically, the convolution kernel parameters of identity mapping have a , That is, the middle is 1, Others are 0. But it can't be learned if you want to learn , Especially when the initialization weight is far away .

But using residual network can solve this problem well . Or suppose the depth of the current network can minimize the error rate , If we continue to increase our ResNet, In order to ensure that the network state of the next layer is still the optimal state , We just need to order F ( x ) = 0 F(x)=0 F(x)=0 That's it ! because x x x Is the optimal solution of the current output , In order to make it the optimal solution of the next layer, that is, we hope our output H ( x ) = x H(x)=x H(x)=x Words , Just let F ( x ) = 0 F(x)=0 F(x)=0 That's it ? This is very convenient , As long as the convolution kernel parameters are small enough , After multiplication and addition is 0 ah . Of course, the above mentioned is only the ideal situation , When we are actually testing x x x It must be difficult to achieve the best , But there will always be a time when it can be infinitely close to the optimal solution . use Residual Words , Only a small update F ( x ) F(x) F(x) Part of the weight value is OK ! You don't have to fight like a normal convolution layer !

Be careful : If the residual mapping ( F ( x ) F(x) F(x)) The dimension of the result is connected with the jump ( x x x) Different dimensions of , Then we can't add the two of them , Must be right x x x Upgrade the dimension , Only when they have the same dimension can they calculate . There are two ways to upgrade dimension :

whole 0 fill

use 1×1 Convolution

Bottleneck The realization of the class

import torch

from torch import nn

class Bottleneck(nn.Module):

# The two convoluted channels in the residual block are represented by 64->256,256->1024, So by 4 that will do

def __init__(self,in_dim,out_dim,stride = 1):

super(Bottleneck,self).__init__()

# The network stack layer uses 1*1 3*3 1*1 These three convolutions make up , There is BN layer

self.bottleneck = nn.Sequential(

nn.Conv2d(in_channels=in_dim,out_channels=in_dim,kernel_size=1,bias=False),

nn.BatchNorm2d(in_dim),

nn.ReLU(inplace=True),

nn.Conv2d(in_channels=in_dim,out_channels=in_dim,kernel_size=3,padding=1,bias=False),

nn.BatchNorm2d(in_dim),

nn.ReLU(inplace=True),

nn.Conv2d(in_channels=in_dim,out_channels=out_dim,kernel_size=1,padding=1,bias=False),

nn.BatchNorm2d(out_dim)

)

self.relu =nn.ReLU(inplace=True)

#Downsample Part is a that contains BN Layer of 1*1 Convolution composition

''' utilize DownSample The structure changes the number of channels of identity mapping to be the same as the convolution stack layer , So that we can add '''

self.downslape = nn.Sequential(

nn.Conv2d(in_channels=in_dim,out_channels=out_dim,kernel_size=1,padding=1,stride=1),

nn.BatchNorm2d(out_dim)

)

def forward(self,x):

identity = x

out = self.bottleneck(identity)

identity = self.downslape(x)

# take identity( Identity mapping ) Add to the network stack layer output . And pass by Relu Post output

out +=identity

out =self.relu(out)

return out

bottleneck_1 = Bottleneck(64,256)

print(bottleneck_1)

input = torch.randn(1,64,56,56)

out = bottleneck_1(input)

print(out.shape)

Batch Normalization Approval planning level

Here we can see the previous interpretation : Deep learning theory -BN layer

The migration study

advantage

Can quickly train to an ideal result

When the data set is small, it can also train the ideal effect

understand :

Take the shallow information learned from the previous network , Become a general recognition method

Be careful : When using the parameters of others' pre training model , Pay attention to other people's pretreatment methods

Common transfer learning methods : It is generally recommended to train all parameters after loading weights

3. Network architecture

For the residual structure requiring down sampling (conv_3, conv_4, conv_5 The first residual structure of ), The paper uses the following form ( The original paper has several forms , What we are talking about here is the form chosen by the last author ), The main line 3×3 The convolution layer uses stride = 2, Implement downsampling ; The dotted line 1 × 1 1 \times 1 1×1 Convolution and stride = 2:

4. Code implementation

class ResNet(nn.Module):

def __init__(self,block,blocks_num,num_class=1000,include_top =True,groups =1):

super(ResNet, self).__init__()

self.include_top = include_top

self.in_channel = 64

self.groups = groups

self.conv1 = nn.Conv2d(3,self.in_channel,kernel_size=7,stride=2,padding=3,bias=False)

self.bn1 = nn.BatchNorm2d(self.in_channel)

self.relu = nn.ReLU(inplace=True)

self.maxpool = nn.MaxPool2d(kernel_size=3,stride=2)

self.layer1 = self._make_layer(block, 64, blocks_num[0])

self.layer2 = self._make_layer(block, 128, blocks_num[1],stride=2)

self.layer3 = self._make_layer(block, 256, blocks_num[2],stride=2)

self.layer1 = self._make_layer(block, 512, blocks_num[0],stride=2)

if self.include_top:

self.avgpool = nn.AdaptiveAvgPool2d((1, 1))

self.fc = nn.Linear(512 * block.expansion, num_class)

def _make_layer(self,block,channel,block_num,stride=1) ->nn.Sequential:

downsample = None

if stride != 1 or self.in_channel != channel*block.expansion:

downsample = nn.Sequential(

nn.Conv2d(self.in_channel,channel*block.expansion,kernel_size=1,stride=stride,bias=False),

nn.BatchNorm2d(channel*block.expansion)

)

layers = []

layers.append(block(self.in_channel,channel,downsample=downsample,stride=stride))

self.in_channel = channel*block.expansion

# Put the residual structure of the solid line in

for _ in range(1,block_num):

layers.append(block(self.in_channel,channel))

return nn.Sequential(*layers)

def forward(self, x):

x = self.conv1(x)

x = self.bn1(x)

x = self.relu(x)

x = self.maxpool(x)

x = self.layer1(x)

x = self.layer2(x)

x = self.layer3(x)

x = self.layer4(x)

if self.include_top:

x = self.avgpool(x)

x = torch.flatten(x, 1)

x = self.fc(x)

return x

def resNet34(num_class=1000,include_top=True)->ResNet:

return ResNet(BasicBlock,[3,4,6,3],num_class=num_class,include_top=include_top)

def resNet50(num_class=1000,include_top=True):

return ResNet(Bottleneck,[3,4,5,6],num_class=num_class,include_top=include_top)

def resNet101(num_class=1000,include_top=True):

return ResNet(Bottleneck,[3,4,23,3],num_class=num_class,include_top=include_top)5. summary

Learn nested functions (nested function) It is an ideal situation for training neural networks . In deep neural networks , Learn another layer as identity mapping (identity function) Easier ( Although this is an extreme case ).

Residual mapping makes it easier to learn the same function , For example, approximate the parameters in the weight layer to zero .

Using the residual block (residual blocks) It can train an effective deep neural network : Input can be propagated faster through residual connections between layers .

Residual network (ResNet) It has a far-reaching impact on the subsequent deep neural network design .

版权声明

本文为[Stephen-Chen]所创,转载请带上原文链接,感谢

https://yzsam.com/2022/04/202204211810506017.html

边栏推荐

- 【acwing】166. 数独****(DFS)

- Laravel soar (2. X) - automatically monitor and output SQL optimization suggestions and assist laravel to apply SQL optimization

- MySQL的默认用户名和密码的什么?

- C语言进阶第45式:函数参数的秘密(下)

- Just got the byte beat offer "floating"

- MySQL - remote connection to non local MySQL database server, Error 1130: host 192.168.3.100 is not allowed to connect to this MySQL s

- Golang中Json的序列化和反序列化怎么使用

- fastjson自动升级成fastjson2后 IDEA开发环境正常 打成jar包发布生成环境后报错异常 pom.xml的version自动升级导致

- 接口测试框架实战(二)| 接口请求断言

- How does IOT platform realize business configuration center

猜你喜欢

Eating this open source gadget makes MCU development as efficient as Arduino

华为、TCL、大疆

Shallow comparison between oceanbase and tidb - implementation plan

靶机渗透练习69-DC1

为什么switch里的case没有break不行

Debugging garbled code of vs2019 visual studio terminal

刚拿的字节跳动offer“打水漂”

Golang中Json的序列化和反序列化怎么使用

靶机渗透练习77-DC9

mysql汉化-workbench汉化-xml文件

随机推荐

Huawei cloud gaussdb (for influx) unveiling phase VI - hierarchical data storage

【pytorch图像分类】ResNet网络结构

【Redis】 使用Redis优化省份展示数据不显示

[intensive reading of Thesis] perception based seam cutting for image stitching

Porting openharmony and adding WiFi driver

爬虫案例01

华为、TCL、大疆

封装的JDBC工具

mysql 中的mysql数据库不见了

Failed to install network card driver (resolved)

Reptile case 01

mysql8.0设置忽略大小写后无法启动

靶机渗透练习70-DC2

Educational Codeforces Round 116 (Rated for Div. 2) E. Arena

【acwing】1118. 分成互质组 ***(DFS)

SQL command delete (I)

【论文精读】Perception-based seam cutting for image stitching

【网络】4G、5G频段汇总

Part time jobs are higher than wages. In 2022, there are three sidelines with a monthly income of more than 10000

laravel-soar(2.x) - 自动监控输出 SQL 优化建议、辅助 laravel 应用 SQL 优化