
How pyspark works

2022-08-08 22:01:00 【Code_LT】

Python has gained momentum in recent years, ranking first or second in many programming language rankings. It is friendly to beginners, has an elegant programming style, and offers high development efficiency, which has made it the choice of many practitioners in the Internet industry. In particular, Python's rich ecosystem for data science has led many software architects to adopt it so that a single language can cover scenarios that need both system architecture and data algorithms. Among the development languages supported by Spark, Python has a relatively high share of usage.

When I first came into contact with Spark, I saw that it supported development in Python. I took it for granted that Spark converted the PySpark code we wrote into Java bytecode or low-level machine code and then ran it on each machine node. Of course, that was only a guess made without further research. So how does Spark actually run code developed with PySpark?

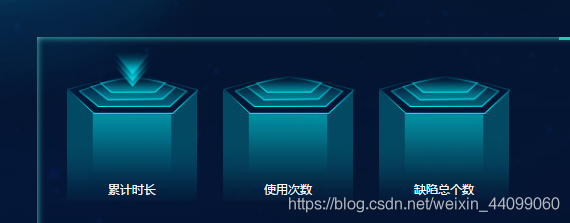

Logical architecture diagram of spark core framework

When Spark applications are developed in Scala or Java, as shown in the figure above, both the driver and the executors run on the JVM and execute tasks there. When Spark applications are developed in Python, Spark keeps the core architecture unified by wrapping a Python layer around it. The core functions of Spark, including requesting computing resources, managing and assigning tasks, communication between the driver and executors, communication among executors, and hosting RDDs, are all still based on the JVM.

On the driver side, the PySpark application written by the user communicates with the JVM driver through Py4J. The SparkContext created in the PySpark application is mapped to a SparkContext object in the JVM driver. When an RDD created in the program reaches an action, that RDD and the action are likewise mapped to the JVM driver and executed there.

On the executor side, PySpark workers are started through the PySpark daemon, and UDFs and lambda functions implemented in Python are executed in those workers. The executor sends data to the worker over a socket, and the worker returns the results to the executor. The RDD itself still lives in the executor; only when there is UDF or lambda logic does the executor need to exchange data with the worker.
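The executor-to-worker round trip can be imitated with the standard library alone. The sketch below is a toy model, not PySpark's actual wire protocol: the helper names (`send_frame`, `recv_frame`, `python_worker`, `run_partition`), the length-prefixed pickle framing, and the batch size are all assumptions made for illustration. A thread stands in for the Python worker and a socket pair stands in for the executor-worker connection.

```python
import pickle
import socket
import struct
import threading


def send_frame(sock, obj):
    """Pickle obj and send it with a 4-byte big-endian length prefix."""
    payload = pickle.dumps(obj)
    sock.sendall(struct.pack(">I", len(payload)) + payload)


def recv_exact(sock, n):
    """Read exactly n bytes from sock."""
    chunks = []
    while n > 0:
        chunk = sock.recv(n)
        if not chunk:
            raise ConnectionError("socket closed early")
        chunks.append(chunk)
        n -= len(chunk)
    return b"".join(chunks)


def recv_frame(sock):
    """Read one length-prefixed frame and unpickle it."""
    (length,) = struct.unpack(">I", recv_exact(sock, 4))
    return pickle.loads(recv_exact(sock, length))


def python_worker(sock, func):
    """Stand-in for a Python worker: apply func to each batch until told to stop."""
    while True:
        batch = recv_frame(sock)
        if batch is None:  # sentinel: the partition is finished
            break
        send_frame(sock, [func(row) for row in batch])


def run_partition(rows, func, batch_size=2):
    """Stand-in for the executor: ship one partition to the worker in batches.

    In this toy model func is handed to the worker thread directly; in real
    PySpark the function itself is also serialized and sent to the worker.
    """
    executor_sock, worker_sock = socket.socketpair()
    worker = threading.Thread(target=python_worker, args=(worker_sock, func))
    worker.start()
    results = []
    try:
        for start in range(0, len(rows), batch_size):
            send_frame(executor_sock, rows[start:start + batch_size])
            results.extend(recv_frame(executor_sock))
        send_frame(executor_sock, None)
    finally:
        executor_sock.close()
    worker.join()
    worker_sock.close()
    return results


print(run_partition([1, 2, 3, 4, 5], lambda x: x * x))  # [1, 4, 9, 16, 25]
```

Every row crosses the socket twice and is pickled and unpickled on each crossing, which is the extra work that a function running inside the JVM does not pay.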

This design makes it easy for Spark to support multiple development languages. However, it also has a clear cost: compared with a UDF running inside the JVM, a UDF executed in a Python worker adds the overhead of data serialization, deserialization, and communication I/O between the executor JVM and the Python worker. Python also has some performance disadvantage relative to Java in raw execution. In Spark jobs where computing logic makes up a large share of the work, PySpark programs that use custom UDFs will therefore lose noticeably more performance. Using the built-in functions of Spark SQL instead reduces or removes this difference.

The final choice of Spark development language should weigh development efficiency, runtime efficiency, and the team's existing technology stack, so that you pick the language that suits your team.

For resource configuration, see:

https://blog.csdn.net/Code_LT/article/details/123737940

Architecture details can refer to:
