
Go Crawler Basics

2022-04-22 05:13:00 【yuzhang_ zy】

1. Crawling with Go

Writing a crawler in Go is much like writing one in Python (see the official documentation for the http and regexp packages), but a Go crawler is usually more efficient. A basic crawler relies mainly on the ioutil, http, regexp and sync packages. ioutil is a utility package for reading and writing data, including reading and writing files. http handles requests on both the client and the server side. regexp provides regular expression matching. sync provides synchronization primitives, including locking and unlocking.

The example program in this section crawls email addresses, links, mobile phone numbers and ID numbers. As in Python, the first step is to send a request to the url. The server returns a response body; we read the data from it (the read returns a byte slice), convert it to a string, and then match regular expressions against that string. The core of crawling is writing the regular expressions, for which the author refers to a separate blog post.

In the example, GetPageStr() receives the url, sends the request with http.Get(url), and reads the response body returned by Get() with ioutil.ReadAll(). Errors are carried in Go's built-in err value and printed (one helper function handles all of them). Because ReadAll() returns a byte slice, string() converts the result to a string. Finally, regexp.MustCompile() compiles the regular expression (similar to Python's compile function) and the compiled expression is used for matching.

Take the ID number as an example. The regular expression is:

"[123456789]\d{5}((19\d{2})|(20[01]\d))((0[1-9])|(1[012]))((0[1-9])|([12]\d)|(3[01]))\d{3}[\dXx]"

- [] denotes a set, so [123456789] matches exactly one digit from 1 to 9.
- \d matches a digit and {5} means it appears 5 times.
- | means "or".
- (19\d{2}) matches a year starting with 19, and (20[01]\d) matches a year starting with 200 or 201 (2000 to 2019).
- (0[1-9])|(1[012]) matches the month: 01 to 09, or 10 to 12.
- (0[1-9])|([12]\d)|(3[01]) matches the day of the month: 01 to 31.
- \d{3} matches three digits.
- [\dXx] matches a digit, "x" or "X".
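The breakdown above can be checked in isolation before reading the full program. The following is a minimal sketch, not part of the original post: the helper name LooksLikeID, the ^(?: ... )$ anchoring and the sample strings are assumptions made for illustration. It also shows a limit of the pattern: it checks the shape of the number only, so an impossible date such as 31 February is still accepted.

```go
package main

import (
	"fmt"
	"regexp"
)

// idPattern is the ID-number expression from the text, unchanged.
const idPattern = `[123456789]\d{5}((19\d{2})|(20[01]\d))((0[1-9])|(1[012]))((0[1-9])|([12]\d)|(3[01]))\d{3}[\dXx]`

// fullID anchors the pattern so the whole input must be an ID number.
// The anchoring is an addition for this sketch; the crawler itself
// searches for the pattern anywhere inside a page.
var fullID = regexp.MustCompile(`^(?:` + idPattern + `)$`)

// LooksLikeID (hypothetical helper) reports whether s has the shape
// described by idPattern.
func LooksLikeID(s string) bool {
	return fullID.MatchString(s)
}

func main() {
	samples := []string{
		"230182195604045724", // well formed
		"23018219560404572X", // last character may be X
		"030182195604045724", // leading 0 is rejected
		"230182195613045724", // month 13 is rejected
		"230182195602315724", // 31 February: shape is valid, so it is accepted
	}
	for _, s := range samples {
		fmt.Println(s, LooksLikeID(s))
	}
}
```

A real validator would additionally check the calendar date and the check digit, which a regular expression alone does not cover.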

package main

import (
	"fmt"
	"io/ioutil"
	"net/http"
	"regexp"
)

var (
	// \w matches upper and lower case letters, digits and underscore
	reEmail = `\w+@\w+\.\w+`
	// s? means with or without s, + means one or more times,
	// [\s\S] matches any character, +? is the non-greedy (lazy) form
	reLink = `href="(https?://[\s\S]+?)"`
	// Regular expression for mobile phone numbers
	rePhone = `1[3456789]\d{9}`
	// Regular expression for ID numbers
	reIdcard = `[123456789]\d{5}((19\d{2})|(20[01]\d))((0[1-9])|(1[012]))((0[1-9])|([12]\d)|(3[01]))\d{3}[\dXx]`
)

// HandleError prints err together with a short description if err is not nil
func HandleError(err error, why string) {
	if err != nil {
		fmt.Println(why, err)
	}
}

// GetEmail crawls email addresses
func GetEmail(url string) {
	pageStr := GetPageStr(url)
	re := regexp.MustCompile(reEmail)
	results := re.FindAllStringSubmatch(pageStr, -1)
	for _, result := range results {
		fmt.Println(result)
	}
}

// GetPageStr fetches url and returns the page content as a string
func GetPageStr(url string) (pageStr string) {
	resp, err := http.Get(url)
	if err != nil {
		// resp is nil when the request fails, so return before touching resp.Body
		HandleError(err, "http.Get url")
		return ""
	}
	// defer closes resp.Body when GetPageStr() returns
	defer resp.Body.Close()
	// Read the page content (since Go 1.16, io.ReadAll is the preferred equivalent)
	pageBytes, err := ioutil.ReadAll(resp.Body)
	HandleError(err, "ioutil.ReadAll")
	// string() converts the byte slice to a string
	pageStr = string(pageBytes)
	return pageStr
}

func main() {
	// 1. Crawl email addresses
	// GetEmail("https://tieba.baidu.com/p/6051076813?red_tag=1573533731")
	// 2. Crawl links
	// GetLink("https://pkg.go.dev/regexp#section-documentation")
	// 3. Crawl mobile phone numbers
	// GetPhone("https://www.zhaohaowang.com/")
	// 4. Crawl ID numbers
	GetIdCard("http://sfzdq.uzuzuz.com/sfz/230182.html")
}

// GetIdCard crawls ID numbers
func GetIdCard(url string) {
	pageStr := GetPageStr(url)
	// Compile the regular expression
	re := regexp.MustCompile(reIdcard)
	results := re.FindAllStringSubmatch(pageStr, -1)
	for _, result := range results {
		fmt.Println(result)
	}
}

// GetLink crawls links
func GetLink(url string) {
	pageStr := GetPageStr(url)
	re := regexp.MustCompile(reLink)
	results := re.FindAllStringSubmatch(pageStr, -1)
	for _, result := range results {
		// result[1] is the first parenthesized group: the URL without href="..."
		fmt.Println(result[1])
	}
}

// GetPhone crawls mobile phone numbers
func GetPhone(url string) {
	pageStr := GetPageStr(url)
	re := regexp.MustCompile(rePhone)
	results := re.FindAllStringSubmatch(pageStr, -1)
	for _, result := range results {
		fmt.Println(result)
	}
}

2. The regexp package

The official documentation of the regexp package lists 16 methods that match a regular expression and identify the matched text. Their names follow the pattern Find(All)?(String)?(Submatch)?(Index)?. Each part of the name has a meaning:

- "All" means the method finds successive non-overlapping matches of the entire expression. These methods take an extra parameter n: when n >= 0 they return at most n matches or submatches, and when n is less than 0 they return all of them.
- "String" means the input is a string and the results are strings; without it the methods work on byte slices.
- "Submatch" means the return value is a slice holding the successive submatches of the expression. A submatch is the match of a parenthesized subexpression, that is, the text matched by the contents of one pair of parentheses.
- "Index" means matches and submatches are reported as pairs of byte positions in the input instead of as text.

For example, with the regular expression "xxx()()()xxx", element 0 of the result is the match of the whole expression, element 1 is the match of the first pair of parentheses, element 2 is the match of the second pair, and so on. The ID number expression from the example above uses parentheses, so matching it with a Submatch method also returns the text matched by each pair of parentheses. The program in this section demonstrates that on a single ID number.
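How the parts of a method name combine is easiest to see side by side. The following is a minimal sketch: the email-like pattern, the sample string and the names emailRe and sample are made up for illustration and do not come from the original post. It calls only methods documented in the regexp package.

```go
package main

import (
	"fmt"
	"regexp"
)

// emailRe has two parenthesized groups: the user name and the domain name.
var emailRe = regexp.MustCompile(`(\w+)@(\w+)\.com`)

// sample is an invented input containing three matches.
const sample = "ann@example.com, bob@test.com, eve@mail.com"

func main() {
	// "String" only: the text of the leftmost match.
	fmt.Println(emailRe.FindString(sample)) // ann@example.com

	// "All" with n = 2: at most two matches.
	fmt.Println(emailRe.FindAllString(sample, 2)) // [ann@example.com bob@test.com]

	// "All" with n = -1: every match.
	fmt.Println(len(emailRe.FindAllString(sample, -1))) // 3

	// "Submatch": element 0 is the whole match, then one element per group.
	fmt.Println(emailRe.FindStringSubmatch(sample)) // [ann@example.com ann example]

	// "Index": start and end byte positions instead of text.
	fmt.Println(emailRe.FindStringIndex(sample)) // [0 15]
}
```

The crawler functions in the first section use FindAllStringSubmatch with n = -1, which combines three of these parts: every match, as strings, with the groups of each match included.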

package main

import (
	"fmt"
	"regexp"
)

func main() {
	s := `[123456789]\d{5}((19\d{2})|(20[01]\d))((0[1-9])|(1[012]))((0[1-9])|([12]\d)|(3[01]))\d{3}[\dXx]`
	re := regexp.MustCompile(s)
	// -1 means return all matches
	results := re.FindAllStringSubmatch("230182195604045724", -1)
	fmt.Println(results)
}

Output:

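[[230182195604045724 1956 1956  04 04  04 04  ]]

The doubled spaces are empty strings: a pair of parentheses that took no part in the match, such as (20[01]\d) here, still gets an element in the result, and that element is empty.

3. Crawling images and downloading them locally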

The idea: we first need to know where each image is, that is, its hyperlink. Taking multi-page image crawling as an example, we first collect the image hyperlinks on each page and then download each linked image to the local disk. These are two separate tasks, so we can use the go keyword to start two goroutines, one that crawls the image links and one that downloads the images. Goroutines are generally used together with channels, and these two goroutines can communicate through a channel.
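The two-goroutine idea can be sketched as follows. This is an illustration under stated assumptions, not the author's code: the image pattern reImg, the function names ExtractImageLinks, SaveImage and Crawl, and the in-memory sample pages are all hypothetical. The download step is passed in as a function so that the pipeline can be run without network access.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"os"
	"path"
	"path/filepath"
	"regexp"
	"sync"
)

// reImg is an assumed pattern for image links; the post does not give one.
var reImg = regexp.MustCompile(`https?://[^"\s]+?\.(?:jpg|png|gif)`)

// ExtractImageLinks returns every image URL found in a page's HTML.
func ExtractImageLinks(pageStr string) []string {
	return reImg.FindAllString(pageStr, -1)
}

// SaveImage fetches url and writes the body into dir, named after the
// last element of the URL path.
func SaveImage(dir, url string) error {
	resp, err := http.Get(url)
	if err != nil {
		return err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return fmt.Errorf("unexpected status %s", resp.Status)
	}
	f, err := os.Create(filepath.Join(dir, path.Base(url)))
	if err != nil {
		return err
	}
	defer f.Close()
	_, err = io.Copy(f, resp.Body)
	return err
}

// Crawl runs the two tasks as goroutines connected by a channel and
// returns the number of successful downloads.
func Crawl(pages []string, download func(url string) error) (done int) {
	links := make(chan string, 16)
	var wg sync.WaitGroup
	wg.Add(2)

	// Task 1: find image links and send them into the channel.
	go func() {
		defer wg.Done()
		defer close(links) // tells the receiver that no more links will come
		for _, p := range pages {
			for _, l := range ExtractImageLinks(p) {
				links <- l
			}
		}
	}()

	// Task 2: receive links until the channel is closed and download each one.
	go func() {
		defer wg.Done()
		for l := range links {
			if err := download(l); err != nil {
				fmt.Println("download failed:", l, err)
				continue
			}
			done++
		}
	}()

	wg.Wait()
	return done
}

func main() {
	// In-memory pages stand in for crawled HTML so the sketch runs offline.
	pages := []string{
		`<img src="http://a.example/1.jpg"><img src="https://a.example/2.png">`,
		`<p>no images here</p>`,
	}
	n := Crawl(pages, func(url string) error {
		fmt.Println("would download", url)
		return nil
	})
	fmt.Println("downloaded:", n)
}
```

To download for real, the pages would come from a function like GetPageStr() and the download argument would wrap SaveImage, for example func(u string) error { return SaveImage("./img", u) }, assuming the ./img directory already exists.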

Copyright notice

This article was written by [yuzhang_ zy]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204220511170757.html

边栏推荐

- All conditions that trigger epollin and epollout

- 2021-10-17

- Introduction to swagger UI

- Batch resolves the IP address of the domain name and opens the web page

- 【高通SDM660平台】(8) --- Camera MetaData介绍

- 【Pytorch】Tensor. Use and understanding of continguous()

- Learn from me: how to release your own plugin or package in China

- [redis notes] data structure and object: Dictionary

- MySQL数据库第十一次作业-视图的应用

- Query result processing

猜你喜欢

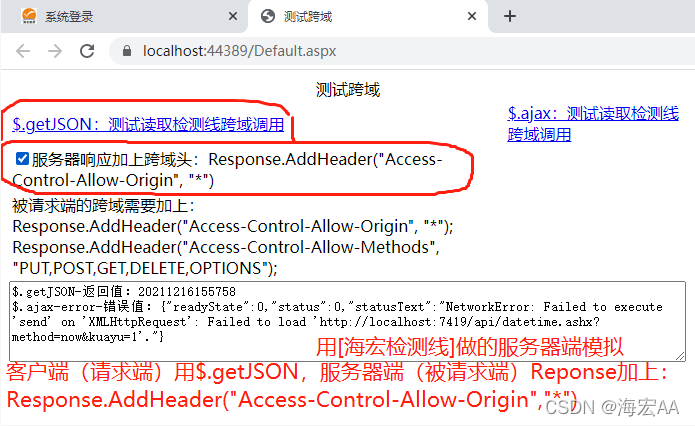

Summary of browser cross domain problems

物联网测试都有哪些挑战,软件检测机构如何保证质量

Acrobat Pro DC tutorial: how to create PDF using text and picture files?

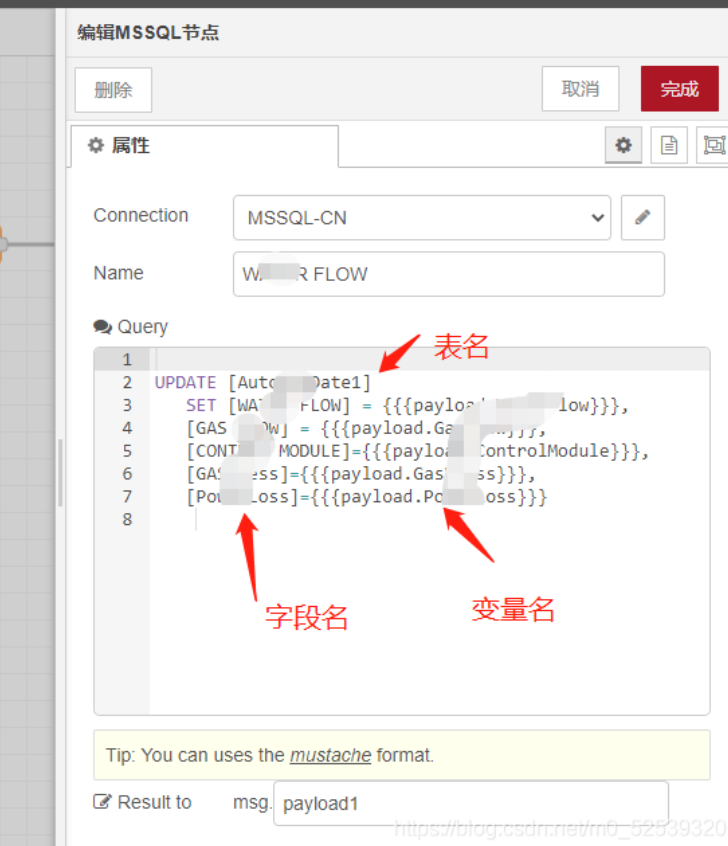

What kind of programming language is this to insert into the database

2022.04.20 Huawei written examination

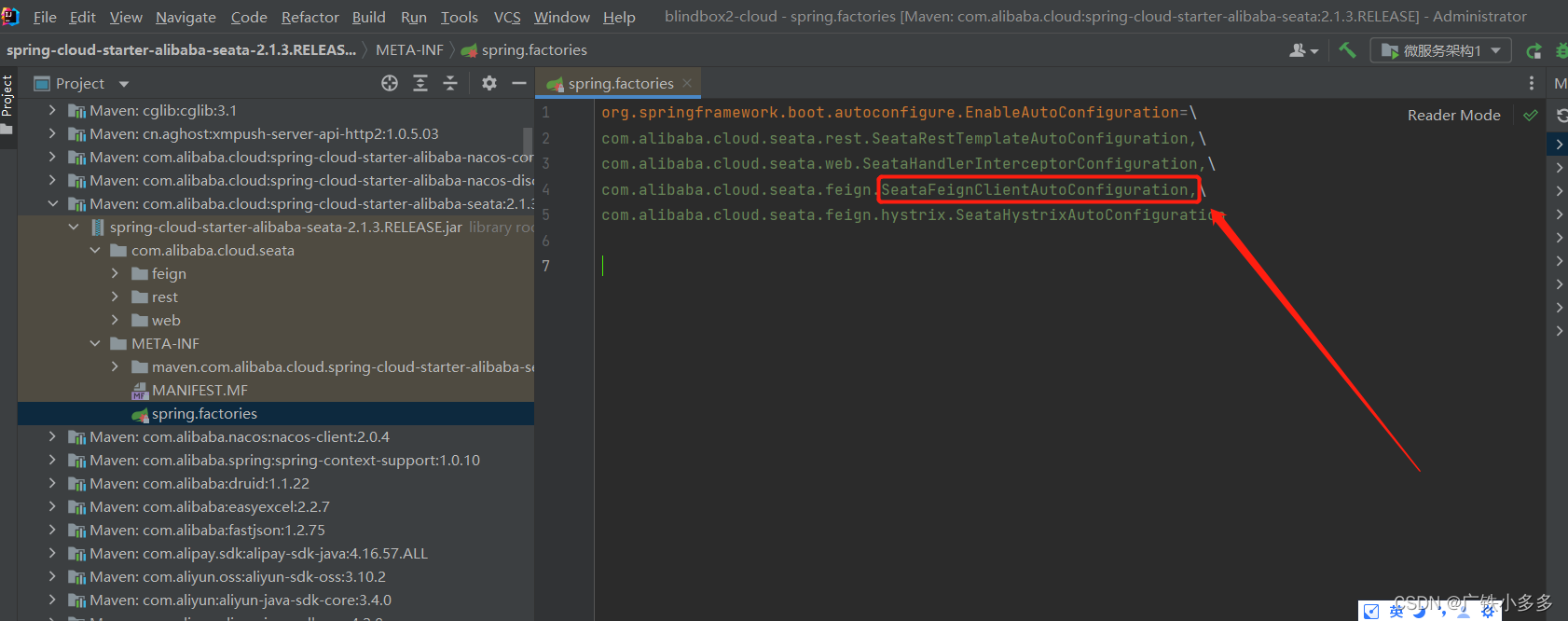

feign调用服务,被调用服务seata事务不开启或者xid为空

How to restrict Oracle sub query

MySQL view character set and proofing rules

Database (II) addition, deletion, modification and query of MySQL table (basic)

Clonal map of writing in mind

随机推荐

Remote wake-up server

2019个人收集框架库总结

Spark 入门程序 : WordCount

Not sure whether it is a bug or a setting

MySQL double master and double slave + atlas data test

Chapter VIII affairs

Drawing scatter diagram with MATLAB

Regular expression of shell script

Regular expression validation

Junit简介与入门

Learn from me: how to release your own plugin or package in China

What is an iterator

Clonal map of writing in mind

All conditions that trigger epollin and epollout

What is idempotency

Visio setting network topology

How to copy the variables in the chrome console as they are, save and download them locally

Error: ER_ NOT_ SUPPORTED_ AUTH_ MODE: Client does not support authentication protocol requested by serv

Garbled code in Web Applications

MySQL encoding problem