当前位置:网站首页>Machine learning training template, summarizing multiple classifiers

Machine learning training template, summarizing multiple classifiers

2022-04-22 12:19:00 【Sing for me alone】

1. Data aspect : It mainly includes pandas operation

import matplotlib.pyplot as plt

import seaborn as sns

import pandas as pd

sns.set(font="simhei") # Give Way heatmap According to Chinese

plt.rcParams['font.sans-serif'] = ['SimHei'] # Set in the matplotlab Chinese font on

plt.rcParams['axes.unicode_minus'] = False # stay matplotlib The drawing displays symbols normally

pd.set_option("display.max_rows", None)

data = pd.read_csv('train.csv')

#info、describe、head、value_counts etc.

print(data.shape)

print(data['label'].unique())

####### Delete a column 、 Delete the blank 、 Change of name ##########

x = data.drop('label', axis=1)

data = data.dropna() #,axis=1 Is to delete columns

data.rename(columns={‘old_name’: ‘new_ name’})

####### Sum up #############

data['col3']=data[['col1','col2']].sum(axis=1)

#data=data.reset_index(drop=True) # to update

###### Handle labels #############

classes = data.loc[:, 'label'] # Remove all labels

df.label= df.label.astype(str).map({'False.':0, 'True.':1}) # Change label tf by 01

######### In terms of data correlation, Pearson correlation coefficient 、 Heat map display

2. normalization 、 Hot coding alone :

from sklearn.preprocessing import StandardScaler,MinMaxScaler

x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.2)

######## normalization #########

std = StandardScaler() # Or change to MinMaxScaler

x_train = std.fit_transform(x_train)

x_test = std.transform(x_test)

######## Text to value ##### There is a little problem with the recent use

from sklearn.preprocessing import LabelEncoder

le = preprocessing.LabelEncoder()

train_x = data.apply(le.fit_transform)

####### Hot coding alone ######## There are many ways

## Method 1 ##

from sklearn.preprocessing import OrdinalEncoder

enc = OrdinalEncoder(categories='auto').fit(data_ca)

result = enc.transform(data_ca)

## Method 2 ##

data_dummies = pd.get_dummies(data[['col','col2']])

## Method 3 ##

y_train = np_utils.to_categorical(y_train, num_classes=10) # For labels ; Divided into ten categories

#eg.[1 0 0]

# --------------

# [[0. 1.]

# [1. 0.]

# [1. 0.]]

3. Model : Only for classification problems

from sklearn.neighbors import KNeighborsClassifier

from sklearn.neural_network import MLPClassifier

from sklearn.gaussian_process import GaussianProcessClassifier

from sklearn.gaussian_process.kernels import RBF

from sklearn.tree import DecisionTreeClassifier

from sklearn.ensemble import RandomForestClassifier, AdaBoostClassifier

from sklearn.naive_bayes import GaussianNB

from sklearn.svm import SVC

from sklearn.metrics import classification_report, accuracy_score

# define scoring method

scoring = 'accuracy'

# Define models to train

names = ["Nearest Neighbors"

# , "Gaussian Process"

,"Decision Tree"

, "Random Forest"

, "Neural Net"

# , "AdaBoost"

,"Naive Bayes"

, "SVM Linear"

, "SVM RBF"

, "SVM Sigmoid"]

classifiers = [

KNeighborsClassifier(n_neighbors = 3)

# ,GaussianProcessClassifier(1.0 * RBF(1.0))

,DecisionTreeClassifier(max_depth=5)

,RandomForestClassifier(max_depth=5, n_estimators=10, max_features=1)

,MLPClassifier(alpha=1)

# ,AdaBoostClassifier()

,GaussianNB()

,SVC(kernel = 'linear')

,SVC(kernel = 'rbf')

,SVC(kernel = 'sigmoid')

]

models = zip(names, classifiers)

# evaluate each model in turn

results = []

names = []

for name, model in models:

kfold = model_selection.KFold(n_splits=10, random_state = seed, shuffle=True)

cv_results = model_selection.cross_val_score(model, X_train, y_train, cv=kfold, scoring=scoring)

results.append(cv_results)

names.append(name)

msg = "%s: %f (%f)" % (name, cv_results.mean(), cv_results.std())

print(msg)

model.fit(X_train, y_train)

predictions = model.predict(X_test)

print('Test-- ',name,': ',accuracy_score(y_test, predictions))

print()

print(classification_report(y_test, predictions))

4. assessment :

from sklearn import metrics

########### Self contained

print(clf.score(X_test,y_test))

########### Cross validation

scores = cross_val_score(clf, iris.data, iris.target, cv=5) #cross_val_scorel To complete cross validation

print('scores:',scores)

print("Accuracy: {:.4f} (+/- {:.4})".format(scores.mean(), scores.std() * 2))

################F1

score=metrics.f1_score(y_true=y_true,y_pred=preds,average="macro")

################ Confusion matrix and visualization

from sklearn.metrics import confusion_matrix, accuracy_score

conf = confusion_matrix(test_y, preds) # Confusion model , Compare the predicted value with the real value

label = ["0","1"] # Here is the second category

sns.heatmap(conf, annot = True, xticklabels=label, yticklabels=label)

plt.show()

################ other

print(' Accuracy rate :', metrics.accuracy_score(y_true, y_pred))

print(' Precision category :', metrics.precision_score(y_true, y_pred, average=None)) # Not average

print(' Macro average precision :', metrics.precision_score(y_true, y_pred, average='macro'))

print(' Micro average recall rate :', metrics.recall_score(y_true, y_pred, average='micro'))Reference link :

版权声明

本文为[Sing for me alone]所创,转载请带上原文链接,感谢

https://yzsam.com/2022/04/202204221209111726.html

边栏推荐

- Case 4-1.7: file transfer (concurrent search)

- LeetCode_DFS_中等_200.岛屿数量

- 离职的互联网人,都去哪儿了?

- 手机刷新率越高越好吗?

- 【并发编程055】如下守护线程是否会执行finally模块中的代码?

- Efr32 crystal calibration guide

- STM32F429BIT6 SD卡模拟U盘

- 案例4-1.7:文件传输(并查集)

- [concurrent programming 050] type and description of memory barrier?

- [deeply understand tcallusdb technology] delete all data interface descriptions in the list - [list table]

猜你喜欢

一种自动切换过流保护模块的热泵装置保护电路介绍(ACS758/CH704应用案例)

congratulations! You have been concerned about the official account for 1 years, and invite you to join NetEase data analysis training.

Ner brief overview

EFR32晶体校准指南

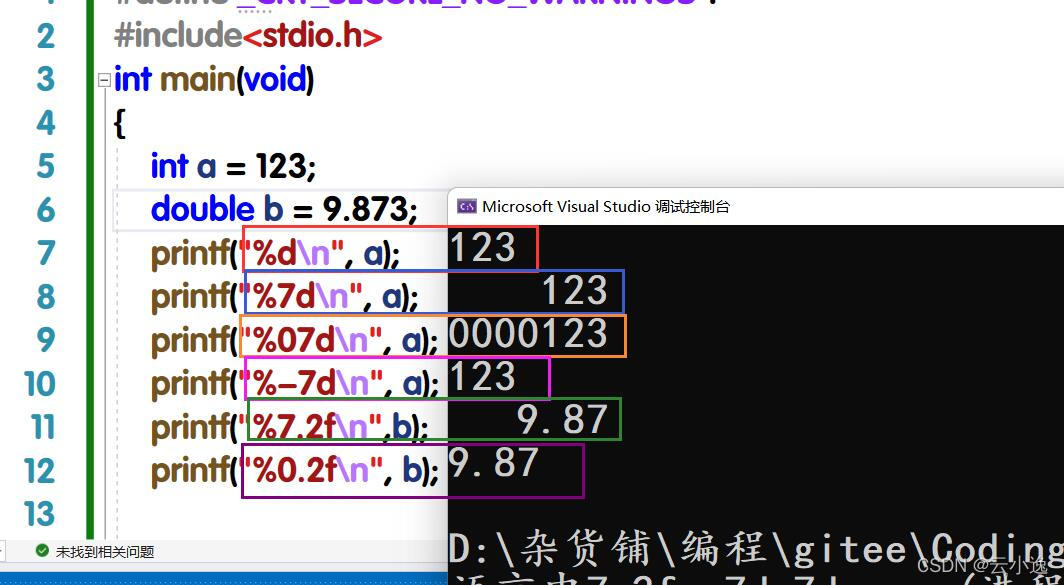

C语言%7.2d、%-7d、%7.2f、%0.2f的含义

LeetCode 83、删除排序链表中的重复元素

【生活中的逻辑谬误】以暴制暴和压制理性

Developer friendly public chain Neo | how to connect web2 developers to Web3 world

电工第一讲

Redis新版本发布,你还认为Redis是单线程?

随机推荐

[concurrent programming 055] will the following daemon thread execute the code in the finally module?

【深入理解TcaplusDB技术】扫描数据接口说明——[List表]

Redis新版本发布,你还认为Redis是单线程?

ONT和ONU

【生活中的逻辑谬误】以暴制暴和压制理性

Synchronized锁及其膨胀

模糊集合论

Esp32-cam usage history

Setting policy of thread pool size

2019年华为鸿蒙加入手机系统阵营,如何看待鸿蒙这三年的发展?

Distributed transaction and lock

[concurrent programming 054] multithreading status and transition process?

[in depth understanding of tcallusdb technology] data interface description for reading the specified location in the list - [list table]

Electrician Lecture 1

LeetCode 1768、交替合并字符串

【并发编程050】内存屏障的种类以及说明?

[concurrent programming 047] cache locking performance is better than bus locking. Why not eliminate bus locking?

How can fresh graduates break through the encirclement of internet job hunting

1086 tree traversals again (25 points)

[in depth understanding of tcallusdb technology] scan data interface description - [list table]