
[Deep Learning 21 Days Learning Challenge] Memo: What does our neural network model look like? - detailed explanation of model.summary()

2022-08-04 06:05:00 【Live up to [email protected]】

Event page: CSDN 21-Day Learning Challenge

Having finished handwritten digit recognition and clothing classification, I want to pause for a while to digest what I've learned and write a summary. Today, let's start with the output of Keras's model.summary()!

1. What is model.summary()?

When building a deep learning model, we call model.summary() to print the parameter details of each layer. Take the model we just studied as an example:

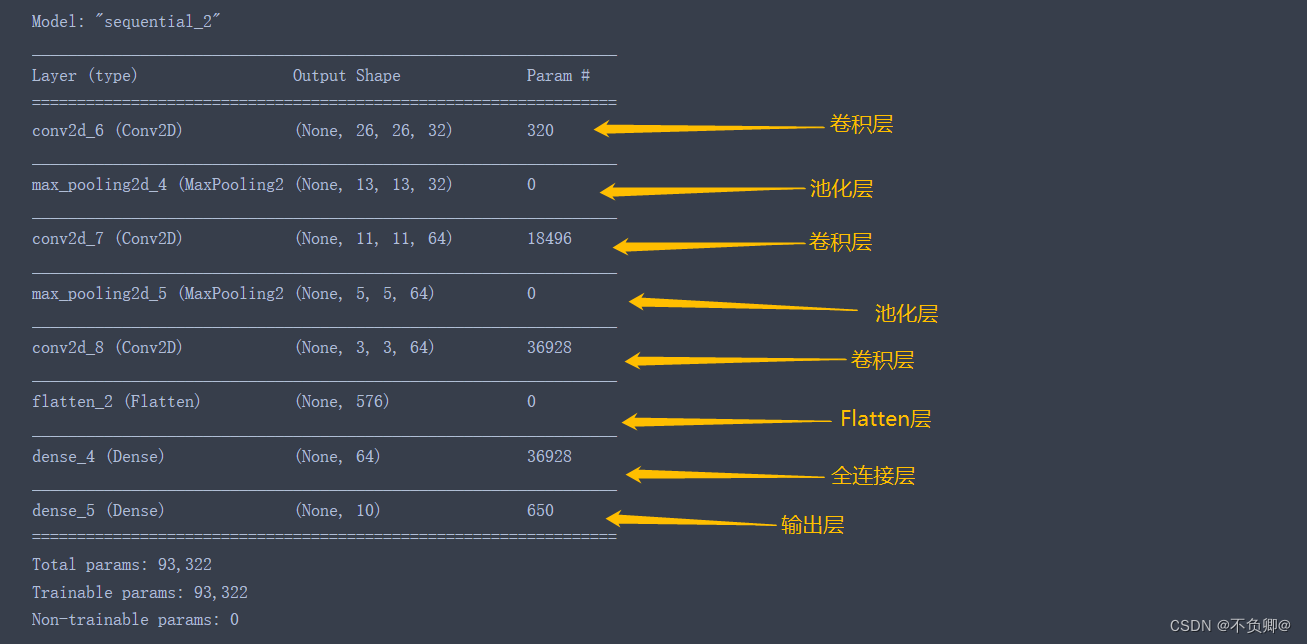

As you can see, what model.summary() prints follows the same layer-by-layer structure we used to build the model. Take the clothing classification model as an example:

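# Code that builds the model
from tensorflow.keras import layers, models

model = models.Sequential([
    layers.Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)),  # convolutional layer 1, 3*3 kernel
    layers.MaxPooling2D((2, 2)),  # pooling layer 1, 2*2 downsampling
    layers.Conv2D(64, (3, 3), activation='relu'),  # convolutional layer 2, 3*3 kernel
    layers.MaxPooling2D((2, 2)),  # pooling layer 2, 2*2 downsampling
    layers.Conv2D(64, (3, 3), activation='relu'),  # convolutional layer 3, 3*3 kernel
    layers.Flatten(),  # Flatten layer, connects the convolutional layers to the fully connected layers
    layers.Dense(64, activation='relu'),  # fully connected layer, further feature extraction
    layers.Dense(10)  # output layer, produces the final predictions
])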

2. What the model.summary() output means

Again using the clothing classification model as an example:

Model: "sequential_2"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_6 (Conv2D)            (None, 26, 26, 32)        320
# Created by: layers.Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1))  # convolutional layer 1, 3*3 kernel
_________________________________________________________________
max_pooling2d_4 (MaxPooling2 (None, 13, 13, 32)        0
# Created by: layers.MaxPooling2D((2, 2))  # pooling layer 1, 2*2 downsampling
_________________________________________________________________
conv2d_7 (Conv2D)            (None, 11, 11, 64)        18496
# Created by: layers.Conv2D(64, (3, 3), activation='relu')  # convolutional layer 2, 3*3 kernel
_________________________________________________________________
max_pooling2d_5 (MaxPooling2 (None, 5, 5, 64)          0
# Created by: layers.MaxPooling2D((2, 2))  # pooling layer 2, 2*2 downsampling
_________________________________________________________________
conv2d_8 (Conv2D)            (None, 3, 3, 64)          36928
# Created by: layers.Conv2D(64, (3, 3), activation='relu')  # convolutional layer 3, 3*3 kernel
_________________________________________________________________
flatten_2 (Flatten)          (None, 576)               0
# Created by: layers.Flatten()  # Flatten layer, connects the convolutional layers to the fully connected layers
_________________________________________________________________
dense_4 (Dense)              (None, 64)                36928
# Created by: layers.Dense(64, activation='relu')  # fully connected layer, further feature extraction
_________________________________________________________________
dense_5 (Dense)              (None, 10)                650
# Created by: layers.Dense(10)  # output layer, produces the final predictions
=================================================================
Total params: 93,322
Trainable params: 93,322
Non-trainable params: 0
_________________________________________________________________

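Param #: the number of parameters (weights plus biases) in that layer. So how are these numbers computed?

a. Formula for the number of parameters in a convolutional layer: (kernel height * kernel width * number of input channels + 1) * number of kernels

Examples:

First convolutional layer: (3*3*1+1)*32 = 320

Second convolutional layer: (3*3*32+1)*64 = 18496

Third convolutional layer: (3*3*64+1)*64 = 36928

b. Formula for the number of parameters in a fully connected layer: (input dimension + 1) * number of neurons

Examples:

Fully connected layer after Flatten: (576+1)*64 = 36928

Output layer, fed by the 64-neuron fully connected layer: (64+1)*10 = 650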
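The two formulas above can be checked with a few lines of plain Python. This is only a minimal sketch: the helper names `conv2d_params` and `dense_params` are made up for illustration and are not part of Keras, and the layer sizes are copied from the summary table above.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    """Weights plus one bias per kernel for a 2D convolutional layer."""
    return (kernel_h * kernel_w * in_channels + 1) * filters


def dense_params(in_features, units):
    """Weights plus one bias per neuron for a fully connected layer."""
    return (in_features + 1) * units


# Layer sizes taken from the clothing classification model in this post.
param_counts = {
    "conv2d_6": conv2d_params(3, 3, 1, 32),
    "conv2d_7": conv2d_params(3, 3, 32, 64),
    "conv2d_8": conv2d_params(3, 3, 64, 64),
    "dense_4": dense_params(576, 64),
    "dense_5": dense_params(64, 10),
}

for name, count in param_counts.items():
    print(f"{name}: {count}")
print("Total params:", sum(param_counts.values()))
```

The pooling and Flatten layers are left out because they have no weights, which is why their Param # is 0.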

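The +1 is there because every neuron (and every convolution kernel) has one bias term.

Output Shape: the shape of the data this layer outputs. The leading None is the batch dimension, whose size is not fixed when the model is built.

Total params: the total number of parameters in the model, i.e. the sum of the parameters of all layers.

Trainable params: parameters that are updated during training.

Non-trainable params: parameters that are not updated during training.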
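To see how the three totals relate, here is a small plain-Python sketch. The function `split_params` and the idea of freezing `conv2d_6` are hypothetical and do not come from the original model. In Keras a layer is usually frozen by setting `layer.trainable = False`, but here the effect is only simulated with arithmetic on the Param # column.

```python
# Param # column from the summary in this post, in layer order.
layer_params = {
    "conv2d_6": 320,
    "max_pooling2d_4": 0,
    "conv2d_7": 18496,
    "max_pooling2d_5": 0,
    "conv2d_8": 36928,
    "flatten_2": 0,
    "dense_4": 36928,
    "dense_5": 650,
}


def split_params(layer_params, frozen=()):
    """Return (total, trainable, non_trainable) given the names of frozen layers."""
    non_trainable = sum(n for name, n in layer_params.items() if name in frozen)
    total = sum(layer_params.values())
    return total, total - non_trainable, non_trainable


# The model as built in this post: nothing is frozen.
print(split_params(layer_params))
# Hypothetical variant: the first convolutional layer is frozen.
print(split_params(layer_params, frozen={"conv2d_6"}))
```

In both cases the trainable and non-trainable counts add up to the same total. Only the split between them changes.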

3. Understanding the model's processing flow through its shapes

With the model.summary() output in hand, this picture is much clearer when we look at it again.
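The Output Shape column can also be traced by hand. The sketch below assumes the Keras defaults that the model code relies on: convolutions with stride 1 and no padding, and pooling whose stride equals the pool size. The helper functions are illustrative and not part of Keras.

```python
def conv2d_shape(shape, filters, kernel=3):
    """Output shape of a stride-1 convolution without padding."""
    h, w, _ = shape
    return (h - kernel + 1, w - kernel + 1, filters)


def max_pool_shape(shape, pool=2):
    """Output shape of max pooling whose stride equals the pool size."""
    h, w, c = shape
    return (h // pool, w // pool, c)


def flatten_shape(shape):
    """Flatten collapses height, width and channels into one dimension."""
    h, w, c = shape
    return (h * w * c,)


shape = (28, 28, 1)  # input_shape of the first Conv2D layer
trace = []
for step in (
    lambda s: conv2d_shape(s, 32),
    max_pool_shape,
    lambda s: conv2d_shape(s, 64),
    max_pool_shape,
    lambda s: conv2d_shape(s, 64),
    flatten_shape,
):
    shape = step(shape)
    trace.append(shape)

for s in trace:
    print((None,) + s)  # None stands for the batch dimension
```

Each 3*3 convolution trims two pixels from the height and width, each 2*2 pooling halves them (rounding down), and Flatten gives 3*3*64 = 576 values, which is the input dimension of the first Dense layer.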

Copyright notice

This article was written by [Live up to [email protected]]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/216/202208040525326927.html

边栏推荐

猜你喜欢

【深度学习21天学习挑战赛】备忘篇:我们的神经网模型到底长啥样?——model.summary()详解

flink-sql自定义函数

CAS与自旋锁、ABA问题

线性回归简介01---API使用案例

Android connects to mysql database using okhttp

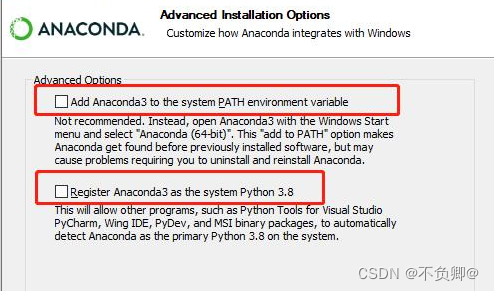

【深度学习21天学习挑战赛】0、搭建学习环境

flink on yarn任务迁移

Commons Collections1

(十三)二叉排序树

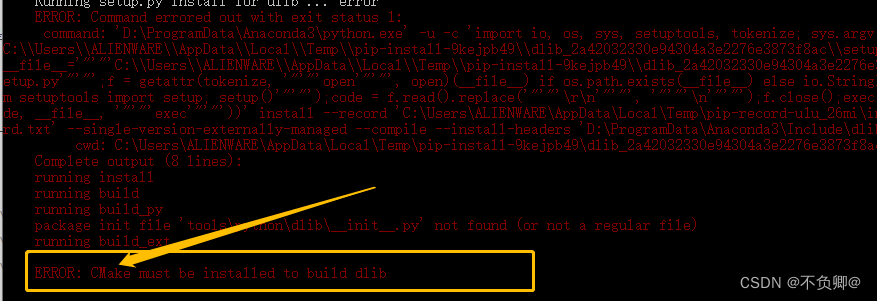

Install dlib step pit record, error: WARNING: pip is configured with locations that require TLS/SSL

随机推荐

flink onTimer定时器实现定时需求

TensorFlow2 study notes: 6. Overfitting and underfitting, and their mitigation solutions

postgresql 游标(cursor)的使用

剑指 Offer 2022/7/5

Redis持久化方式RDB和AOF详解

自动化运维工具Ansible(2)ad-hoc

SQL练习 2022/7/4

TensorFlow2学习笔记:4、第一个神经网模型,鸢尾花分类

Androd Day02

线性回归02---波士顿房价预测

关系型数据库-MySQL:约束管理、索引管理、键管理语句

flink-sql所有数据类型

MySql--存储引擎以及索引

(十五)B-Tree树(B-树)与B+树

BUUCTF——MISC(一)

NFT市场开源系统

剑指 Offer 2022/7/3

记一次flink程序优化

简单明了,数据库设计三大范式

剑指 Offer 2022/7/4