
Scrapy: changing the times in the end-of-crawl statistics to the current system time

2022-04-23 07:47:00 【Brother Bing】


1. Problem background

At the end of every run, Scrapy prints a block of statistics that includes timing data. However, those times are in UTC (time zone 0), not the local system time we are used to, and the crawler's total running time is reported in seconds, which is not how we normally read durations. So I dug through the Scrapy source, found the relevant code, and overrode it. It works well enough for me, so feel free to take it!
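To make the mismatch concrete, here is a minimal sketch that treats a naive timestamp as UTC and converts it to local time. The helper name `utc_naive_to_local` is hypothetical, and the fixed UTC+8 offset is an assumption inferred from the sample log in this post (log lines stamped 10:44:10 while `finish_time` reads 02:44:10).

```python
from datetime import datetime, timedelta, timezone

# Assumption: the machine runs at UTC+8, inferred from the sample log.
LOCAL_TZ = timezone(timedelta(hours=8))


def utc_naive_to_local(naive_utc: datetime, tz: timezone = LOCAL_TZ) -> datetime:
    """Interpret a naive datetime as UTC and convert it to the given time zone."""
    return naive_utc.replace(tzinfo=timezone.utc).astimezone(tz)


# finish_time as it appears in the stats dump quoted in this post
finish_time = datetime(2021, 5, 10, 2, 44, 10, 418573)
local_finish = utc_naive_to_local(finish_time)
print(local_finish.strftime('%Y-%m-%d %H:%M:%S'))  # 2021-05-10 10:44:10
```

On a real machine, calling `astimezone()` with no argument would use the system time zone instead of a hard-coded offset.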

2. Problem analysis

The log output points to the class that records the crawler's running time: scrapy.extensions.corestats.CoreStats

- The log output looks like this:

      # Extension configuration
      2021-05-10 10:43:50 [scrapy.middleware] INFO: Enabled extensions:
      ['scrapy.extensions.corestats.CoreStats',  # core stats collector; records the crawler's run times
       'scrapy.extensions.telnet.TelnetConsole',
       'scrapy.extensions.logstats.LogStats']

      # Statistics
      2021-05-10 10:44:10 [scrapy.statscollectors] INFO: Dumping Scrapy stats:
      {'downloader/exception_count': 3,
       'downloader/exception_type_count/twisted.internet.error.ConnectionRefusedError': 2,
       'downloader/exception_type_count/twisted.internet.error.TimeoutError': 1,
       'downloader/request_bytes': 1348,
       'downloader/request_count': 4,
       'downloader/request_method_count/GET': 4,
       'downloader/response_bytes': 10256,
       'downloader/response_count': 1,
       'downloader/response_status_count/200': 1,
       'elapsed_time_seconds': 18.806005,  # total time the crawler ran
       'finish_reason': 'finished',
       'finish_time': datetime.datetime(2021, 5, 10, 2, 44, 10, 418573),  # crawler end time
       'httpcompression/response_bytes': 51138,
       'httpcompression/response_count': 1,
       'log_count/INFO': 10,
       'response_received_count': 1,
       'scheduler/dequeued': 4,
       'scheduler/dequeued/memory': 4,
       'scheduler/enqueued': 4,
       'scheduler/enqueued/memory': 4,
       'start_time': datetime.datetime(2021, 5, 10, 2, 43, 51, 612568)}  # crawler start time
      2021-05-10 10:44:10 [scrapy.core.engine] INFO: Spider closed (finished)

- The original post showed a screenshot of the source code here.

3. Solution

- Override the CoreStats class:

      # -*- coding: utf-8 -*-
      # Override the core stats extension to report local times
      import time

      from scrapy.extensions.corestats import CoreStats


      class MyCoreStats(CoreStats):

          def spider_opened(self, spider):
              """Called when the crawler starts running."""
              self.start_time = time.time()
              # Format the start time as local time
              start_time_str = time.strftime('%Y-%m-%d %H:%M:%S', time.localtime(self.start_time))
              self.stats.set_value('Crawler start time: ', start_time_str, spider=spider)

          def spider_closed(self, spider, reason):
              """Called when the crawler finishes running."""
              # Crawler end time
              finish_time = time.time()
              # Format the end time as local time
              finish_time_str = time.strftime('%Y-%m-%d %H:%M:%S', time.localtime(finish_time))
              # Total running time, split into hours, minutes and seconds
              elapsed_time = finish_time - self.start_time
              m, s = divmod(elapsed_time, 60)
              h, m = divmod(m, 60)
              self.stats.set_value('Crawler end time: ', finish_time_str, spider=spider)
              self.stats.set_value('Total crawler run time: ', '%dh:%02dm:%02ds' % (h, m, s), spider=spider)
              self.stats.set_value('Crawler end reason: ', reason, spider=spider)

- Modify the configuration (replace project_name with your own project's package name):

      EXTENSIONS = {
          'scrapy.extensions.corestats.CoreStats': None,  # disable the default core stats extension
          'project_name.extensions.corestats.MyCoreStats': 500,  # enable the custom extension
      }
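The hours/minutes/seconds split used in `spider_closed` can be tried on its own, without running a crawl. This is a minimal sketch, assuming a hypothetical helper named `format_elapsed` (not part of Scrapy or of the class above) and English unit suffixes; it truncates to whole seconds before splitting.

```python
def format_elapsed(seconds: float) -> str:
    """Format a duration in seconds as hours, minutes and seconds."""
    # Drop the fractional part first so divmod works on whole seconds.
    m, s = divmod(int(seconds), 60)
    h, m = divmod(m, 60)
    return '%dh:%02dm:%02ds' % (h, m, s)


print(format_elapsed(18.806005))  # 0h:00m:18s (elapsed_time_seconds from the sample log)
print(format_elapsed(3725))       # 1h:02m:05s
```

Keeping the formatting in one small function makes it easy to change the output style later without touching the signal handlers.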

4. Result

    2021-05-10 11:11:03 [scrapy.statscollectors] INFO: Dumping Scrapy stats:
    {'downloader/exception_count': 5,
     'downloader/exception_type_count/twisted.internet.error.ConnectionRefusedError': 3,
     'downloader/exception_type_count/twisted.internet.error.TimeoutError': 2,
     'downloader/request_bytes': 1976,
     'downloader/request_count': 6,
     'downloader/request_method_count/GET': 6,
     'downloader/response_bytes': 10266,
     'downloader/response_count': 1,
     'downloader/response_status_count/200': 1,
     'httpcompression/response_bytes': 51139,
     'httpcompression/response_count': 1,
     'log_count/INFO': 10,
     'response_received_count': 1,
     'scheduler/dequeued': 6,
     'scheduler/dequeued/memory': 6,
     'scheduler/enqueued': 6,
     'scheduler/enqueued/memory': 6,
     'Crawler end reason': 'finished',
     'Crawler start time: ': '2021-05-10 11:10:39',
     'Crawler end time: ': '2021-05-10 11:11:03',
     'Total crawler run time: ': '0h:00m:24s'}

Copyright notice

This article was written by [Brother Bing]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204230625585327.html

边栏推荐

- 'NPM' is not an internal or external command, nor is it a runnable program or batch file

- 设置了body的最大宽度,但是为什么body的背景颜色还铺满整个页面?

- Rethink | open the girl heart mode of station B and explore the design and implementation of APP skin changing mechanism

- Date object (JS built-in object)

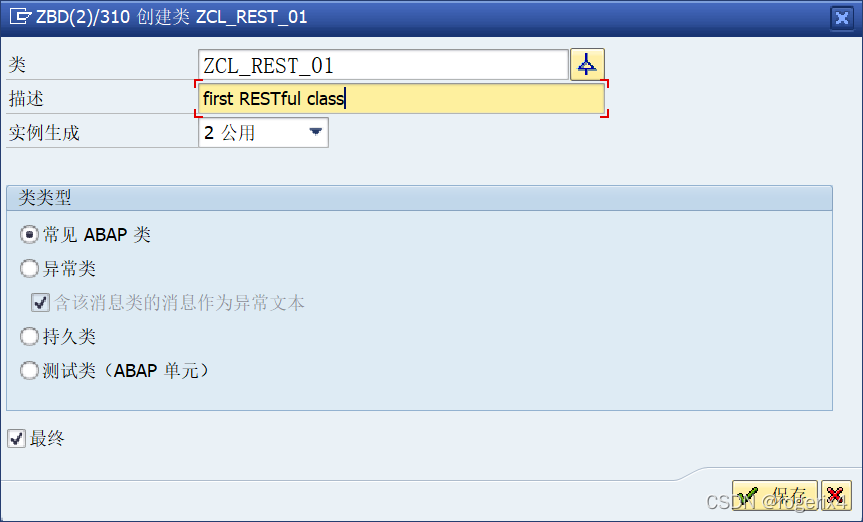

- SAP pi / PO rfc2restful publishing RFC interface is a restful example (proxy indirect method)

- 系统与软件安全研究(一)

- SAP Excel 已完成文件级验证和修复。此工作簿的某些部分可能已被修复或丢弃。

- js之函数的两种声明方式

- SampleCameraFilter

- NodeJS(二)同步读取文件和异步读取文件

猜你喜欢

Dropping Pixels for Adversarial Robustness

Scrapy 修改爬虫结束时统计数据中的时间为当前系统时间

Authorization server (simple construction of authorization server)

Custom time format (yyyy-mm-dd HH: mm: SS week x)

Ogldev reading notes

ABAP 实现发布RESTful服务供外部调用示例

SAP RFC_CVI_EI_INBOUND_MAIN BP主数据创建示例(仅演示客户)

防抖和节流

将指定路径下的所有SVG文件导出成PNG等格式的图片(缩略图或原图大小)

SAP 导出Excel文件打开显示:“xxx“的文件格式和扩展名不匹配。文件可能已损坏或不安全。除非您信任其来源,否则请勿打开。是否仍要打开它?

随机推荐

SVG中Path Data数据简化及文件夹所有文件批量导出为图片

promise all的实现

instanceof的实现原理

自己封装unity的Debug函数

SAP 03-AMDP CDS Table Function using ‘WITH‘ Clause(Join子查询内容)

驼峰命名对像

RGB颜色转HEX进制与单位换算

js中对象的三种创建方式

Install and configure Taobao image NPM (cnpm)

Authorization+Token+JWT

利用Lambda表达式解决c#文件名排序问题(是100大还是11大的问题)

快排的练习

大学学习路线规划建议贴

根据某一指定的表名、列名及列值来向前或向后N条查相关列值的SQL自定义标量值函数

SAP ECC连接SAP PI系统配置

C#操作注册表全攻略

C reads the registry

ES6使用递归实现深拷贝

Dropping Pixels for Adversarial Robustness

Samplecamerafilter