Detailed explanation of VIT transformer

2022-08-09 20:44:00 【The romance of cherry blossoms】

1. ViT overall structure

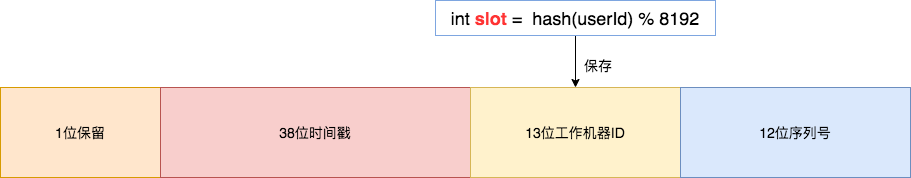

Building a patch sequence from image data

Divide the image into 9 windows (patches). Each window is then flattened into a vector: a 10*10*3 window, for example, becomes a 300-dimensional vector.
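A minimal NumPy sketch of this step, assuming a hypothetical 30*30*3 image so that the 9 windows form a 3 x 3 grid of 10*10*3 windows. The function name `image_to_patches` is illustrative, not from any library:

```python
import numpy as np

def image_to_patches(image: np.ndarray, patch: int) -> np.ndarray:
    """Split an H x W x C image into non-overlapping patch x patch windows
    and flatten each window into a vector of length patch * patch * C."""
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image must divide evenly into windows"
    rows, cols = h // patch, w // patch
    # (rows, patch, cols, patch, C) -> (rows, cols, patch, patch, C)
    windows = image.reshape(rows, patch, cols, patch, c).transpose(0, 2, 1, 3, 4)
    # Row-major order: window k sits at grid position (k // cols, k % cols)
    return windows.reshape(rows * cols, patch * patch * c)

# Hypothetical 30 x 30 RGB image -> 3 x 3 grid of 10 x 10 x 3 windows
image = np.random.rand(30, 30, 3)
patches = image_to_patches(image, patch=10)
print(patches.shape)  # (9, 300)
```

The windows are numbered row by row, which matches the 1 to 9 ordering used for the one-dimensional position code.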

Position coding:

There are two ways to encode position. The first is one-dimensional: the windows are numbered 1, 2, 3, 4, 5, 6, 7, 8, 9 in order. The second is two-dimensional: each window is described by its (row, column) coordinates in the grid.
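To make the two schemes concrete, here is a small sketch for the 3 x 3 grid. The 1D numbering starts at 1 as in the text; starting the 2D coordinates at 0 is an assumption, and `position_codes` is a hypothetical helper:

```python
def position_codes(rows: int, cols: int):
    """Return 1D indices (1..rows*cols, row-major) and 2D (row, col)
    coordinates (zero-based) for a rows x cols grid of windows."""
    one_d = [k + 1 for k in range(rows * cols)]
    two_d = [(k // cols, k % cols) for k in range(rows * cols)]
    return one_d, two_d

one_d, two_d = position_codes(3, 3)
print(one_d)  # [1, 2, 3, 4, 5, 6, 7, 8, 9]
print(two_d)  # [(0, 0), (0, 1), (0, 2), (1, 0), (1, 1), (1, 2), (2, 0), (2, 1), (2, 2)]
```

In a trained model these indices are not fed in as raw numbers; they select rows of a learned embedding table.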

Finally, a fully connected layer maps the image encoding, together with the position encoding, into a representation that is easier for the model to compute with.
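A minimal sketch of this step, following the ordering used in the ViT paper: each flattened window is projected by a fully connected layer, and a learned position embedding of the same width is then added. The embedding width of 64 and the random weights are assumptions for illustration only:

```python
import numpy as np

rng = np.random.default_rng(0)
num_patches, patch_dim, embed_dim = 9, 300, 64  # embed_dim is an assumed value

patches = rng.random((num_patches, patch_dim))  # stand-in for the flattened windows
# Learnable parameters in a real model; random here for illustration
W = rng.normal(0.0, 0.02, size=(patch_dim, embed_dim))
b = np.zeros(embed_dim)
pos_embedding = rng.normal(0.0, 0.02, size=(num_patches, embed_dim))

# Fully connected projection of each flattened window, then add its position code
tokens = patches @ W + b + pos_embedding
print(tokens.shape)  # (9, 64)
```

Because the position embedding has the same width as the projected window, the two can simply be added element by element.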

So what does the 0 patch in the architecture diagram do?

The 0 patch (an extra learnable class token) is generally added for image classification; image segmentation and object detection usually do not need it. It is mainly used for feature aggregation, integrating the feature vectors of all windows. For this reason, it could in principle be placed at any position in the sequence.
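A small sketch of how the 0 patch enters the sequence and is read out again, assuming 9 windows and an embedding width of 64. The encoder itself is omitted and replaced by a placeholder, so this only shows the bookkeeping:

```python
import numpy as np

rng = np.random.default_rng(0)
num_patches, embed_dim = 9, 64  # assumed sizes

patch_tokens = rng.random((num_patches, embed_dim))      # projected windows
cls_token = rng.normal(0.0, 0.02, size=(1, embed_dim))   # learnable "0" patch

# Prepend the 0 patch, so the sequence has num_patches + 1 entries
sequence = np.concatenate([cls_token, patch_tokens], axis=0)
print(sequence.shape)  # (10, 64)

# After the encoder layers (omitted here), the classifier reads only position 0,
# which has gathered information from every window through attention.
encoded = sequence  # placeholder for the encoder output
features_for_head = encoded[0]
print(features_for_head.shape)  # (64,)
```

With the 0 patch included, the position embedding table needs num_patches + 1 rows.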

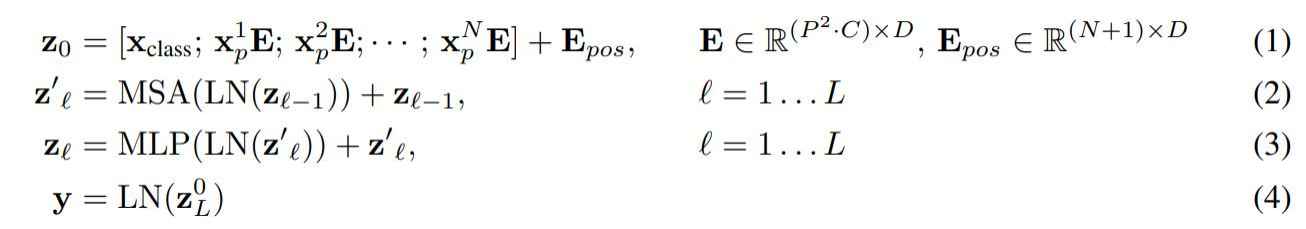

2. Detailed explanation of the formula
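For reference, the ViT paper writes the model as the four equations below. An H×W×C image is cut into N = HW/P^2 patches of size P×P; each flattened patch x_p^i is projected by E to width D; the class token x_class (the 0 patch) is prepended; the position embedding E_pos is added; and L encoder layers apply layer norm (LN) before multi-head self-attention (MSA) and before the MLP, each with a residual connection:

```latex
\begin{aligned}
\mathbf{z}_0 &= [\mathbf{x}_\text{class};\, \mathbf{x}_p^1\mathbf{E};\, \mathbf{x}_p^2\mathbf{E};\, \cdots;\, \mathbf{x}_p^N\mathbf{E}] + \mathbf{E}_{pos},
  && \mathbf{E}\in\mathbb{R}^{(P^2\cdot C)\times D},\ \mathbf{E}_{pos}\in\mathbb{R}^{(N+1)\times D} \\
\mathbf{z}'_\ell &= \mathrm{MSA}(\mathrm{LN}(\mathbf{z}_{\ell-1})) + \mathbf{z}_{\ell-1}, && \ell = 1\ldots L \\
\mathbf{z}_\ell &= \mathrm{MLP}(\mathrm{LN}(\mathbf{z}'_\ell)) + \mathbf{z}'_\ell, && \ell = 1\ldots L \\
\mathbf{y} &= \mathrm{LN}(\mathbf{z}_L^0)
\end{aligned}
```

Here z_L^0 is the state of token 0 after the last layer, the only vector passed to the classification head.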

3. The receptive field of multi-head attention

As shown in the figure, the vertical axis is the mean attention distance, which plays the same role as the receptive field of a convolution, and the horizontal axis is network depth. In the shallow layers, some heads have a small receptive field while others already have a large one. As depth increases, the receptive field of almost every head becomes large, which shows that the Transformer extracts global features.
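The ViT paper computes this attention distance as the average image-space distance between a query patch and the patches it attends to, weighted by the attention weights. A minimal single-head sketch, assuming a square grid of patches, no class token, and rows of the attention matrix that sum to 1; `mean_attention_distance` is a hypothetical helper:

```python
import numpy as np

def mean_attention_distance(attn: np.ndarray, grid: int, patch_px: int) -> float:
    """Attention-weighted pixel distance between each query patch and the
    patches it attends to, averaged over all queries (one head)."""
    n = grid * grid
    assert attn.shape == (n, n)
    rows, cols = np.divmod(np.arange(n), grid)
    coords = np.stack([rows, cols], axis=1) * patch_px  # patch positions in pixels
    # Pairwise Euclidean distances between patch positions
    dist = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
    return float((attn * dist).sum(axis=1).mean())

local = np.eye(4)                # every patch attends only to itself
global_ = np.full((4, 4), 0.25)  # every patch attends uniformly to all patches
print(mean_attention_distance(local, 2, 16))    # 0.0
print(mean_attention_distance(global_, 2, 16))  # about 13.66
```

A head that looks only locally scores near 0, while a head that spreads its attention over the whole image scores close to the typical distance between patches, which is what the rising dots in the figure reflect.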

4. Position coding

Conclusion: position encoding helps, but the kind of encoding makes little difference, so the simple one is enough. 2D encoding (learning separate row and column encodings and then concatenating them) still does no better than 1D, and adding a shared position encoding to every layer does not help much either.

Of course, this is a classification task, where positional encoding may matter less.
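In the ViT paper's 2D variant, one table is learned for rows (X) and one for columns (Y), each of width D/2, and the two are concatenated for each window. A minimal sketch with random tables standing in for learned ones; `pos_embed_2d` is a hypothetical helper and D = 64 is assumed:

```python
import numpy as np

def pos_embed_2d(grid_rows: int, grid_cols: int, dim: int, rng) -> np.ndarray:
    """2D position embedding: a row table and a column table, each dim // 2 wide,
    concatenated per window (row-major window order)."""
    assert dim % 2 == 0
    row_table = rng.normal(0.0, 0.02, size=(grid_rows, dim // 2))
    col_table = rng.normal(0.0, 0.02, size=(grid_cols, dim // 2))
    r, c = np.divmod(np.arange(grid_rows * grid_cols), grid_cols)
    return np.concatenate([row_table[r], col_table[c]], axis=1)

rng = np.random.default_rng(0)
pe_2d = pos_embed_2d(3, 3, 64, rng)
print(pe_2d.shape)  # (9, 64)
```

For the 3 x 3 grid, a 1D table needs 9 * 64 = 576 values while the 2D tables need (3 + 3) * 32 = 192, yet the paper reports almost no accuracy difference between them.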

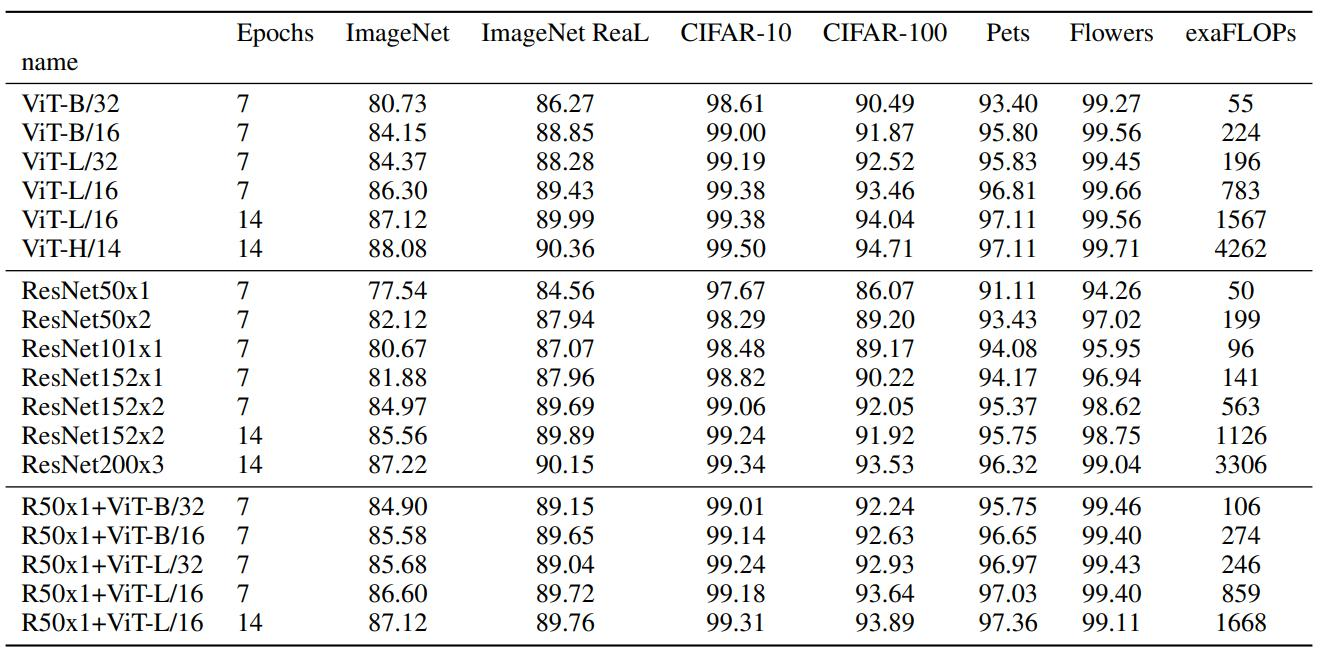

5. Experimental results (/14 denotes a patch side length of 14)
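In model names such as ViT-L/16 or ViT-H/14, the suffix is the patch side length. A smaller patch gives a longer token sequence, as this small arithmetic sketch shows for a 224 x 224 input (`vit_sequence_length` is a hypothetical helper):

```python
def vit_sequence_length(image_size: int, patch_size: int, cls_token: bool = True) -> int:
    """Number of tokens entering the encoder for a square image."""
    assert image_size % patch_size == 0
    per_side = image_size // patch_size
    return per_side * per_side + (1 if cls_token else 0)

print(vit_sequence_length(224, 16))  # 197 (14 x 14 patches + class token)
print(vit_sequence_length(224, 14))  # 257 (16 x 16 patches + class token)
```

Since self-attention cost grows with the square of the sequence length, /14 models are noticeably more expensive than /16 models at the same resolution.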

6. TNT: Transformer in Transformer

ViT only models relationships between patches and ignores the finer details inside each patch.

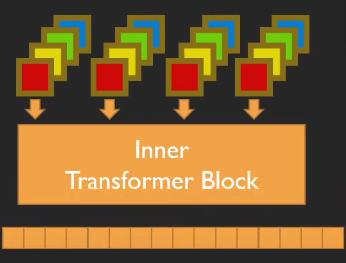

The outer transformer divides the original image into windows and produces a feature vector for each one through patch embedding and position encoding.

The inner transformer further splits each window of the outer transformer into multiple superpixels and reorganizes them into new vectors. For example, the outer transformer splits the image into 16*16*3 windows, and the inner transformer splits each window again into 4*4 superpixels, giving a 4*4 grid of 48-dimensional vectors (4*4*3 = 48), so that each superpixel vector carries the information of all channels. The inner output vectors are then passed through a fully connected layer that changes their size to match the patch embedding, and this inner vector is added to the outer patch vector.
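A minimal NumPy sketch of the split-and-fuse step described above. The inner transformer blocks and TNT's own projection of each superpixel are left out (an identity placeholder stands in for them), and the outer width of 384 is an assumed value:

```python
import numpy as np

rng = np.random.default_rng(0)
patch, sub, channels, embed_dim = 16, 4, 3, 384  # embed_dim is an assumed value

window = rng.random((patch, patch, channels))  # one 16 x 16 x 3 outer window
g = patch // sub                               # 4 superpixels per side

# Split the window into a 4 x 4 grid of 4 x 4 x 3 superpixels, each flattened to 48 values
inner = window.reshape(g, sub, g, sub, channels).transpose(0, 2, 1, 3, 4)
inner = inner.reshape(g * g, sub * sub * channels)
print(inner.shape)  # (16, 48)

# The inner transformer would process these 16 tokens here (omitted: identity placeholder)
inner_out = inner

# Flatten the inner outputs and map them to the outer embedding size with a fully connected layer
W = rng.normal(0.0, 0.02, size=(g * g * sub * sub * channels, embed_dim))
b = np.zeros(embed_dim)
inner_vec = inner_out.reshape(-1) @ W + b  # shape (384,)

outer_patch_embedding = rng.normal(size=embed_dim)  # stand-in for the outer patch vector
fused = outer_patch_embedding + inner_vec           # element-wise addition
print(fused.shape)  # (384,)
```

The fully connected layer is what makes the addition possible: 16 * 48 = 768 inner values are squeezed to the same width as the outer patch vector.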

Visualization of TNT's patch embeddings

The blue dots represent the features extracted by TNT. The visualization shows that these features are more spread out, with larger variance, which makes them easier to separate: the features are more distinctive and more diversely distributed.

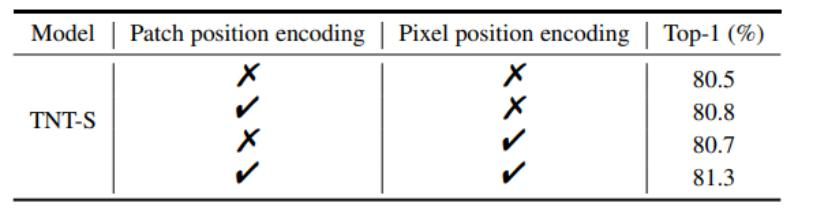

Experimental Results

Adding position encoding to both the inner and the outer transformer gives the best results.
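TNT uses two kinds of position encoding: a patch position encoding for the outer sequence and a superpixel position encoding for the inner sequences, which the TNT paper shares across all patches. A shape-level sketch with assumed sizes (196 patches, 16 superpixels, widths 384 and 48) and random stand-ins for learned parameters:

```python
import numpy as np

rng = np.random.default_rng(0)
n_patches, n_sub, outer_dim, inner_dim = 196, 16, 384, 48  # assumed sizes

outer_in = rng.random((n_patches + 1, outer_dim))     # class token + patch embeddings
inner_in = rng.random((n_patches, n_sub, inner_dim))  # superpixels of every patch

# Learnable in a real model; random here for illustration
patch_pos = rng.normal(0.0, 0.02, size=(n_patches + 1, outer_dim))  # one code per patch
pixel_pos = rng.normal(0.0, 0.02, size=(n_sub, inner_dim))          # shared by all patches

outer_tokens = outer_in + patch_pos
inner_tokens = inner_in + pixel_pos  # broadcasts over the patch axis
print(outer_tokens.shape, inner_tokens.shape)  # (197, 384) (196, 16, 48)
```

Sharing the inner table means every patch marks its superpixels with the same 16 codes, so the inner encoding adds only n_sub * inner_dim parameters.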

边栏推荐

- 从功能测试到自动化测试你都知道他们的有缺点吗?

- 【知识点合辑】numpy常用函数+jupyter小用法

- 说了半天跨平台,今儿咱就来跨跨!(完结篇)——Kubenetes上手实践

- API接口是什么?API接口常见的安全问题与安全措施有哪些?

- YOLO v3源码详解

- 安装搭建私有仓库 Harbor

- 论文精读:VIT - AN IMAGE IS WORTH 16X16 WORDS: TRANSFORMERS FOR IMAGE RECOGNITION AT SCALE

- 论文解读:Deep-4MCW2V:基于序列的预测指标,以鉴定大肠杆菌中的N4-甲基环胞嘧啶位点

- 【工业数字化大讲堂 第二十一期】企业数字化能碳AI管控平台,特邀技术中心总经理 王勇老师分享,8月11日(周四)下午4点

- 苦日子快走开

猜你喜欢

随机推荐

HarmonyOS - 基于ArkUI (JS) 实现图片旋转验证

李乐园:iMetaLab Suite宏蛋白质组学数据分析与可视化(视频+PPT)

虚拟修补:您需要知道的一切

Ng DevUI 周下载量突破1000啦!

ref的使用

ThreadLocal 夺命 11 连问,万字长文深度解析

mysql双主备份失败?

Wallys/QCA 9880/802.11ac Mini PCIe Wi-Fi Module, Dual Band, 2,4GHz / 5GHz advanced edition

anno arm移植Qt环境后,编译正常,程序无法正常启动问题的记录

ASP.NET Core依赖注入之旅:针对服务注册的验证

SSM框架练手项目,高企必备的管理系统—CRM管理系统

ceph 创建池和制作块设备基操

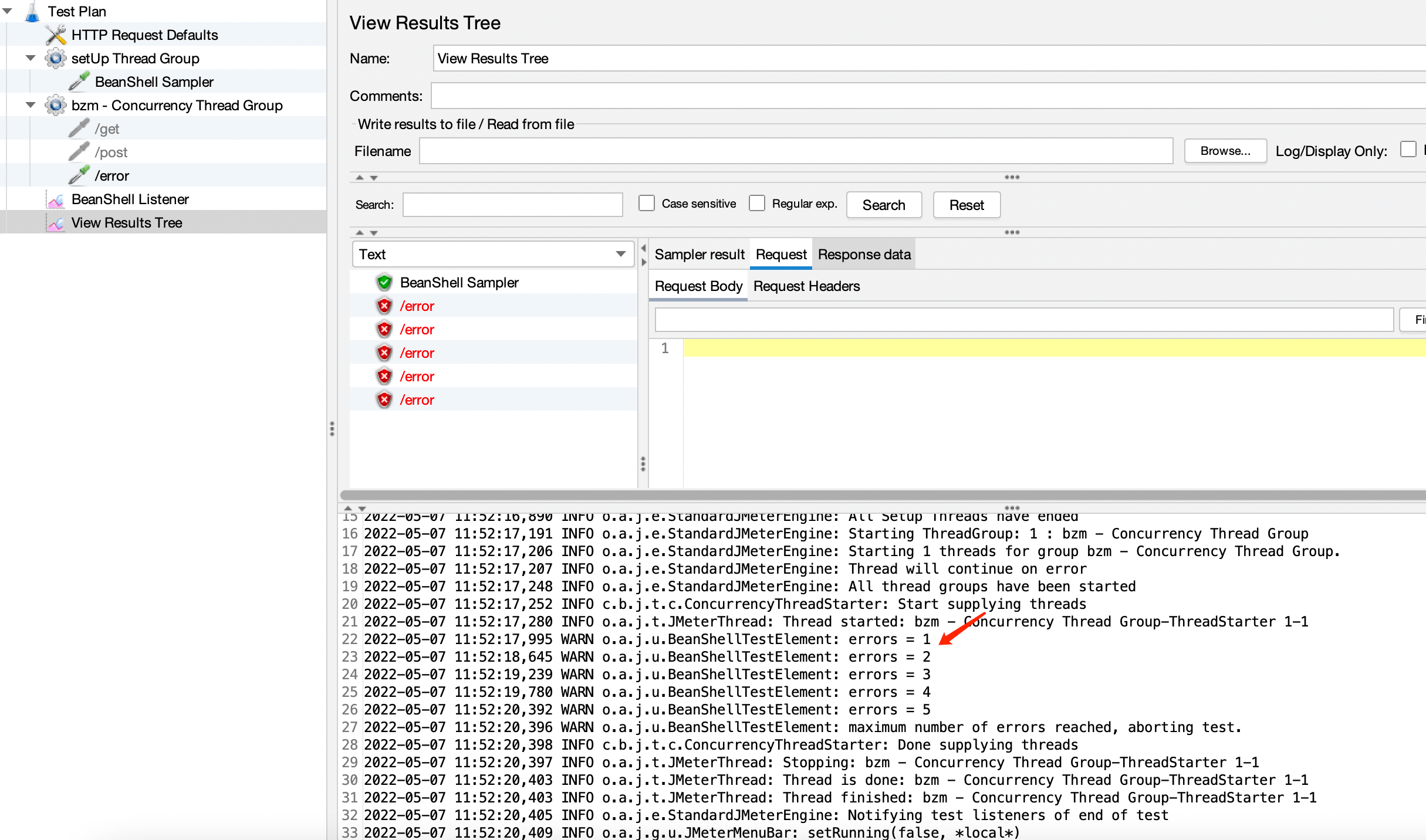

jmeter - record script

集合框架Collection与Map的区别和基本使用

论文解读:Deep-4MCW2V:基于序列的预测指标,以鉴定大肠杆菌中的N4-甲基环胞嘧啶位点

uniapp 实现底部导航栏tabbar

商业智能BI行业分析思维框架:铅酸蓄电池行业(一)

太厉害了!华为大牛终于把 MySQL 讲的明明白白(基础 + 优化 + 架构)

什么是藏宝计划(TPC),2022的一匹插着翅膀的黑马!

win10 uwp 获得Slider拖动结束的值