
Scraping a City's Weather Forecast with BeautifulSoup and Scrapy

2022-08-08 21:05:00 【大脸猿】

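Target site: China Weather (中国天气网) http://www.weather.com.cn

This walkthrough uses Beijing as the example.

1. First, search for Beijing on the site and open its forecast page: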

http://www.weather.com.cn/weather/101010100.shtml?from=cityListCmp

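Then inspect the page source to see how it is structured. The selectors used in the code below show that the forecast is a ul element with class "t clearfix", where each li holds one day: an h1 with the date, a p with class "wea" for the weather, and a p with class "tem" for the temperature.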
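To make that structure concrete, here is a minimal offline sketch that runs the same kind of selectors against a small HTML fragment. The fragment, its values, and the `parse_forecast` helper are invented for illustration; the real page contains far more markup and may differ in detail. It uses the built-in "html.parser" so it runs without lxml.

```python
from bs4 import BeautifulSoup

# Hypothetical fragment shaped like the forecast list; the values are made up.
SAMPLE_HTML = """
<ul class="t clearfix">
  <li>
    <h1>Day 1</h1>
    <p class="wea">Sunny</p>
    <p class="tem"><span>35</span>/<i>25℃</i></p>
  </li>
  <li>
    <h1>Day 2</h1>
    <p class="wea">Cloudy</p>
    <p class="tem"><span>33</span>/<i>24℃</i></p>
  </li>
</ul>
"""


def parse_forecast(html):
    """Return a list of (day, weather, temperature) tuples, one per <li>."""
    soup = BeautifulSoup(html, "html.parser")
    rows = []
    # "ul.t.clearfix" matches a <ul> carrying both classes, in any order
    for li in soup.select("ul.t.clearfix > li"):
        day = li.select_one("h1").get_text(strip=True)
        weather = li.select_one("p.wea").get_text(strip=True)
        temperature = li.select_one("p.tem").get_text(strip=True)
        rows.append((day, weather, temperature))
    return rows


if __name__ == "__main__":
    for row in parse_forecast(SAMPLE_HTML):
        print(" ".join(row))
```

Testing selectors against a saved fragment like this avoids hitting the site repeatedly while the parsing logic is still being worked out.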

BeautifulSoup

from urllib import request

from bs4 import BeautifulSoup, UnicodeDammit

url = "http://www.weather.com.cn/weather/101010100.shtml"
headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.149 Safari/537.36"
}

try:
    req = request.Request(url, headers=headers)
    data = request.urlopen(req).read()  # raw bytes of the whole page
    # Detect the encoding (try UTF-8 first, then GBK) and decode to str
    dammit = UnicodeDammit(data, ["utf-8", "gbk"])
    data = dammit.unicode_markup
    soup = BeautifulSoup(data, "lxml")
    # CSS attribute selector syntax: tagName[attName='value']
    lis = soup.select("ul[class='t clearfix'] li")  # one <li> per forecast day
    for li in lis:
        try:
            date = li.select("h1")[0].text  # date
            weather = li.select("p[class='wea']")[0].text  # weather
            tem = li.select("p[class='tem']")[0].text.strip()  # temperature
            print(date + " " + weather + " " + tem + "\n")
        except Exception as e1:
            # A malformed entry should not stop the remaining days
            print(e1)
except Exception as e2:
    # Network or parsing failure for the page as a whole
    print(e2)
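The temperature comes back as display text rather than numbers. If numeric values are needed, a small helper like the following could extract them. This is only a sketch: the `parse_temperatures` name is hypothetical, and it assumes the text looks like "35/25℃" (high/low), or like "25℃" when only one value is shown, which is treated here as the low.

```python
import re


def parse_temperatures(text):
    """Return (high, low) in degrees Celsius parsed from forecast text.

    Assumption: when only one number is present it is the low,
    and the high is returned as None.
    """
    # Pick out every integer, keeping a leading minus sign for sub-zero values
    values = [int(v) for v in re.findall(r"-?\d+", text)]
    if not values:
        raise ValueError(f"no temperature found in {text!r}")
    if len(values) == 1:
        return None, values[0]
    return values[0], values[1]


if __name__ == "__main__":
    print(parse_temperatures("35/25℃"))
    print(parse_temperatures("25℃"))
```

Raising on text with no digits makes a change in the page layout visible immediately instead of silently producing empty values.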

Scrapy

(Other steps, such as creating the project, are omitted.)

# -*- coding: utf-8 -*-
import scrapy
from scrapy.http import Request

from ..items import TqpcItem


class TqSpider(scrapy.Spider):
    name = 'tq'
    # Domain of the site being crawled
    allowed_domains = ['weather.com.cn']
    header = {
        "User-Agent": "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.149 Safari/537.36"
    }

    def start_requests(self):
        url = "http://www.weather.com.cn/weather/101010100.shtml"
        # Send the browser-like User-Agent along with the request
        yield Request(url, headers=self.header, callback=self.parse)

    def parse(self, response):
        # Iterate over the <li> elements so each day's fields stay aligned
        for li in response.xpath("//ul[@class='t clearfix']/li"):
            item = TqpcItem()
            item["day"] = li.xpath("./h1/text()").get()  # date
            item["w1"] = li.xpath("./p[@class='wea']/text()").get()  # weather
            # normalize-space() returns the full temperature text, trimmed
            item["w2"] = li.xpath("normalize-space(./p[@class='tem'])").get()
            print(f"{item['day']} {item['w1']} {item['w2']}")
            yield item

边栏推荐

猜你喜欢

随机推荐

[highcharts application - double pie chart]

Educational Codeforces Round 112 D. Say No to Palindromes

Flask 教程 第八章:粉丝

复合索引使用

【Voice of dreams】

day11 基于Rest的操作、查询聚合索引

常见的病毒(攻击)分类

jmeter逻辑控制器使用

手机投影到deepin

Idea修改全部变量名

js写一个气泡屏保能碰撞

Under the Windows socket (TCP) console program

安全策略及电商购物订单简单用例

C语言求积分的近似值

阿里云祝顺民:算力网络架构的新探索

charles简单使用

Flask 教程 第七章:错误处理

使用LBP特征进行图像分类

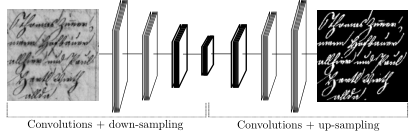

去噪论文 Beyond a Gaussian Denoiser: Residual Learning of Deep CNN for Image Denoising

目标检测论文 Few-Shot Object Detection with Attention-RPN and Multi-Relation Detector