当前位置:网站首页>hugging face tutorial - Chinese translation - Loading pre-trained instances with AutoClass

hugging face tutorial - Chinese translation - Loading pre-trained instances with AutoClass

2022-08-09 16:49:00 【wwlsm_zql】

使用 AutoClass Load a pretrained instance

Since there are so many different ones Transformer 体系结构,为您的 checkpoint 创建一个 Transformer Architecture is a challenge.作为 Transformers core 哲学的一部分,AutoClass 可以从给定的checkoutThe correct architecture is automatically inferred and loaded,Thus making the library easy、Simple and flexible to use.来自 pretrained method It allows you to quickly load a pre-trained model for any architecture,This way you don't have to invest time and resources to train a model from scratch.这种类型的checkoutCode-agnostic means if your code works for onecheckout,then it will work for the other onecheckout——as long as it is trained for similar tasks——Even if the architecture is different.

请记住,Architecture refers to the skeleton of a model,而checkoutis the weight for a given architecture.例如,BERT 是一种架构,而 BERT-base-uncased 是一种checkout.Model is a generic term,It can represent architecture,也可以表示checkout.

在本教程中,学习:

- Load a pretrained tokenizer

- Load a trained feature extractor

- Load a pre-trained processor

- 加载一个预先训练好的模型

AutoTokenizer

几乎每个 NLP Tasks all start with a tokenizer.The tokenizer converts your input into a format that the model can handle.

用 AutoTokenizer.from_pretrained()加载一个 tokenizer:

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased")

Then label your input like below:

sequence = "In a hole in the ground there lived a hobbit."print(tokenizer(sequence))

{

'input_ids': [101, 1999, 1037, 4920, 1999, 1996, 2598, 2045, 2973, 1037, 7570, 10322, 4183, 1012, 102],

'token_type_ids': [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],

'attention_mask': [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1]}

Automatic feature extractor

for audio and visual tasks,Feature extractors process audio signals or images into the correct input format.

使用 AutoFeatureExtractor.from_pretrainedLoad a feature extractor:

from transformers import AutoFeatureExtractor

feature_extractor = AutoFeatureExtractor.from_pretrained(

... "ehcalabres/wav2vec2-lg-xlsr-en-speech-emotion-recognition"... )

automatic processor

Multi-pass tasks require a processor that combines both preprocessing tools.例如,layoutlmv2The model needs a feature extractor to process the image,A tokenizer is also required to process the text; The processor combines the two.

用 AutoProcessor.from_pretrained加载处理器:

from transformers import AutoProcessor

processor = AutoProcessor.from_pretrained("microsoft/layoutlmv2-base-uncased")

AutoModel

Pytorch

最后,AutoModelFor Class allows you to load a pretrained model for a given task(See the full list of available tasks here).例如,使用 AutoModelForSequenceClassification.from_pretrainedLoad a sequence classification model

from transformers import AutoModelForSequenceClassification

Easily reuse the samecheckoutto load schemas for different tasks:

from transformers import AutoModelForTokenClassification

model = AutoModelForTokenClassification.from_pretrained("distilbert-base-uncased")

通常,我们建议使用 AutoTokenizer 类和 AutoModelFor class to load pretrained model instances.This will ensure you load the correct architecture every time.在下一个教程中,Learn how to use the newly loaded tokenizer、Feature extractors and processors preprocess the dataset for fine-tuning.

Tensorflow

最后,tfautomatodelfor Class allows you to load a pretrained model for a given task(See here for a full list of available tasks).例如,使用 tfautomatodelforsequenceclassification.

from_pretrainedLoad a sequence classification model

from transformers import TFAutoModelForSequenceClassification

model = TFAutoModelForSequenceClassification.from_pretrained("distilbert-base-uncased")

Easily reuse the samecheckoutto load schemas for different tasks:

from transformers import TFAutoModelForTokenClassification

model = TFAutoModelForTokenClassification.from_pretrained("distilbert-base-uncased")

通常,我们建议使用 AutoTokenizer 类和 TFAutoModelFor class to load pretrained model instances.This will ensure you load the correct architecture every time.在下一个教程中,Learn how to use the newly loaded tokenizer、Feature extractors and processors preprocess the dataset for fine-tuning.

This article is the translation of the hug face tutorial,仅学习记录

边栏推荐

猜你喜欢

Introduction to OpenCV and build the environment

跨平台桌面应用 Electron 尝试(VS2019)

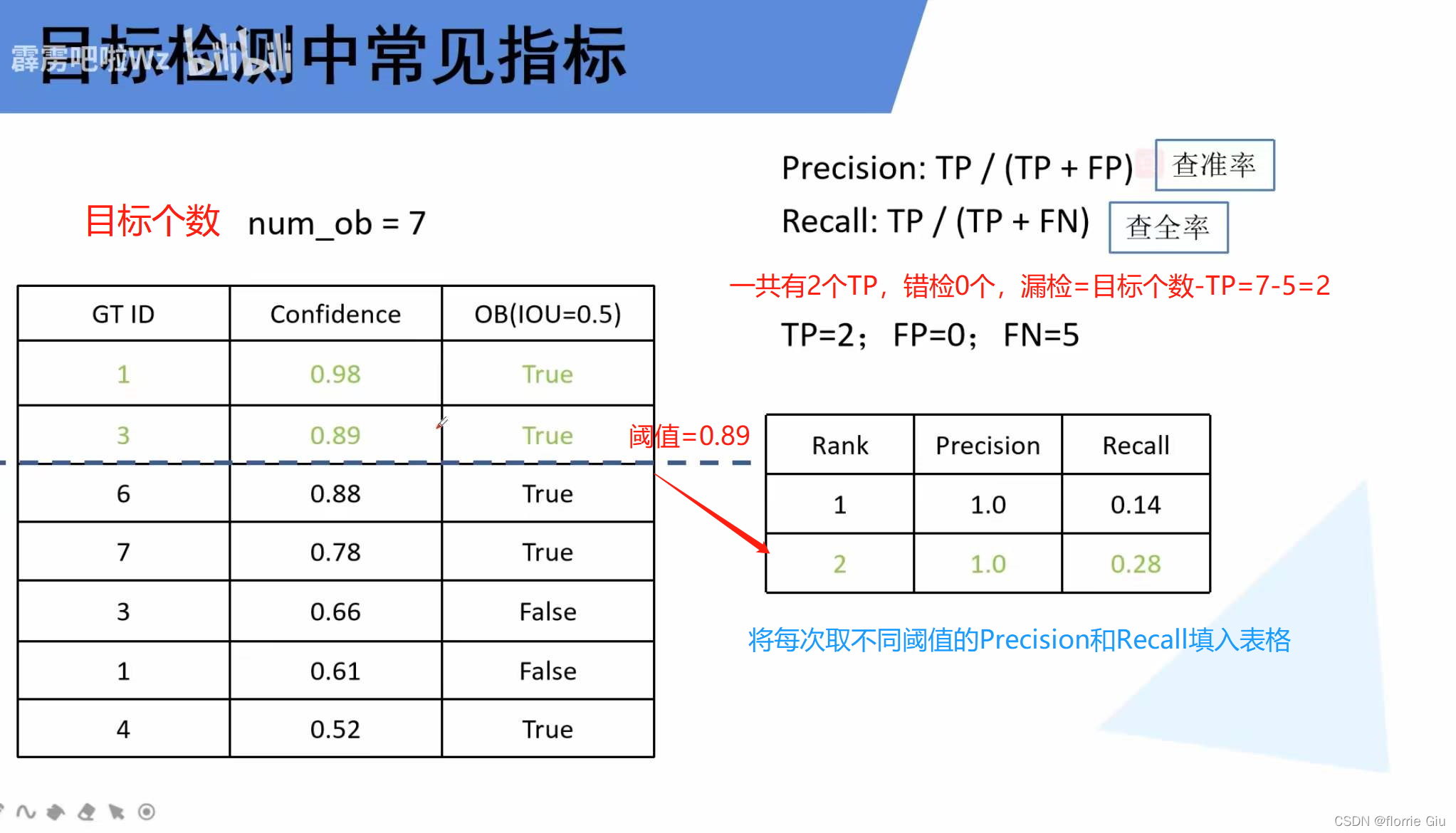

【深度学习】目标检测之评价指标

【研究生工作周报】(第八周)

【研究生工作周报】(第十二周)

抱抱脸(hugging face)教程-中文翻译-模型概要

hugging face tutorial - Chinese translation - preprocessing

It is deeply recognized that the compiler can cause differences in the compilation results

Arduino 飞鼠 空中鼠标 陀螺仪体感鼠标

记一次解决Mysql:Incorrect string value: ‘\xF0\x9F\x8D\x83\xF0\x9F...‘ for column 插入emoji表情报错问题

随机推荐

如何防止浏览器指纹关联

[Elementary C language] Detailed explanation of branch statements

Example of file operations - downloading and merging streaming video files

实现一个支持请求失败后重试的JS方法

PathMeasure 轨迹动画神器

crontab失效怎么解决

众所周知亚马逊是全球最大的在线电子商务公司。如今,它已成为全球商品种类最多的在线零售商,日活跃买家约为20-25亿。另一方面,也有大大小小的企业,但不是每个人都能赚到刀! 做网店的同学都知道,

【深度学习】梳理凸优化问题(五)

AsyncTask 串行还是并行

抱抱脸(hugging face)教程-中文翻译-对预先训练过的模特进行微调

如何正确使用防关联浏览器

NLP-阅读理解任务学习总结概述

【研究生工作周报】(第七周)

Different compilers, different modes, impact on results

大数组减小数组常用方法

stream去重相同属性对象

【深度学习】归一化(十一)

【深度学习】原始问题和对偶问题(六)

ASP.Net Core实战——初识.NetCore

链游是什么意思 链游和游戏的区别是什么