Building a bidirectional LSTM in PyTorch for time series forecasting (load forecasting)

2022-04-22 05:10:00 【Cyril_ KI】

I. Preface

The previous articles all used a unidirectional LSTM. This article covers the bidirectional LSTM.

Series articles:

- An in-depth look at LSTM inputs and outputs in PyTorch (from input to the Linear output)

- Building an LSTM in PyTorch for time series forecasting (load forecasting)

- Building an LSTM in PyTorch for multivariate time series forecasting (load forecasting)

- Building an LSTM in PyTorch for multivariate, multi-step time series forecasting (load forecasting)

- Building a bidirectional LSTM in PyTorch for time series forecasting (load forecasting)

II. Principle

The inputs and outputs of an LSTM are described in detail in "An in-depth look at LSTM inputs and outputs in PyTorch (from input to the Linear output)".

The official documentation describes the parameters of nn.LSTM as follows:

There are seven parameters in total, and the first three matter most. Strictly speaking, only input_size and hidden_size are required, since num_layers defaults to 1. Because PyTorch's DataLoader is widely used to form batches, batch_first is also important. Two common applications of LSTMs are text processing and time series forecasting, so each parameter is explained below from both angles.

- input_size: In text processing, a word cannot take part in computation directly, so each word is first embedded as a vector (for example with Word2Vec), and input_size=embedding_size. For example, if each sentence has five words and each word is represented by a 100-dimensional vector, then input_size=100. In time series forecasting, for example load forecasting, each load is a single value that can be used directly, so it does not need to be turned into a vector and input_size=1. With multivariate forecasting, for example using features such as [load, wind speed, temperature, pressure, humidity, weather] from the previous 24 hours to predict the load at the next time step, input_size is the number of features per time step (input_size=7 in that setup).

- hidden_size: The number of hidden units. It can be chosen freely.

- num_layers: The number of stacked LSTM layers. The default is 1. nn.LSTMCell, by contrast, is a single cell and has no num_layers parameter.

- batch_first: The default is False. Its meaning is explained below.
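The parameters above can be sketched with two small layers, one for the univariate case and one for the multivariate case. The sizes (24 time steps, 64 hidden units, a batch of 5) are illustrative assumptions, not values taken from the author's code.

```python
import torch
from torch import nn

# Hypothetical sizes, chosen only for illustration.
batch_size, seq_len, hidden_size, num_layers = 5, 24, 64, 2

# Univariate forecasting: one load value per time step.
lstm_uni = nn.LSTM(input_size=1, hidden_size=hidden_size,
                   num_layers=num_layers, batch_first=True)

# Multivariate forecasting: seven features per time step.
lstm_multi = nn.LSTM(input_size=7, hidden_size=hidden_size,
                     num_layers=num_layers, batch_first=True)

x_uni = torch.randn(batch_size, seq_len, 1)    # (batch_size, seq_len, input_size)
x_multi = torch.randn(batch_size, seq_len, 7)

out_uni, _ = lstm_uni(x_uni)
out_multi, _ = lstm_multi(x_multi)

# input_size only changes what the layer accepts; the output width is hidden_size.
print(out_uni.shape, out_multi.shape)
```

Both outputs have the same shape, because input_size affects only the last dimension of the input.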

Inputs

The official documentation defines the LSTM input as follows:

The input consists of two parts: input and the tuple (h_0, c_0), where h_0 is the initial hidden state and c_0 is the initial cell state.

The shape of input is:

input(seq_len, batch_size, input_size)

- seq_len: In text processing, if a sentence has 7 words, then seq_len=7. In time series forecasting, if we use the load of the previous 24 hours to predict the load at the next time step, then seq_len=24.

- batch_size: The number of samples fed into the LSTM at once. In text processing, many sentences can be fed in at once; in time series forecasting, many samples can likewise be fed in at once.

- input_size: See above.

(h_0, c_0):

h_0(num_directions * num_layers, batch_size, hidden_size)

c_0(num_directions * num_layers, batch_size, hidden_size)

h_0 and c_0 have the same shape.

- num_directions: For a bidirectional LSTM, num_directions=2; otherwise num_directions=1.

- num_layers: See above.

- batch_size: See above.

- hidden_size: See above.

Outputs

The official documentation defines the LSTM output as follows:

The output also consists of two parts: output and the tuple (h_n, c_n), where h_n is the final hidden state and c_n is the final cell state.

The shape of output is:

output(seq_len, batch_size, num_directions * hidden_size)

h_n and c_n have the same shapes as h_0 and c_0. See above for the parameter explanations.
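The input and output shapes described above can be checked with a short script. This is a minimal sketch with illustrative sizes. It uses zero initial states, which is also what nn.LSTM uses when no state is passed.

```python
import torch
from torch import nn

# Illustrative sizes only.
seq_len, batch_size, input_size = 24, 30, 7
hidden_size, num_layers, num_directions = 64, 2, 2

# batch_first is left at its default (False), so the layout is sequence first.
lstm = nn.LSTM(input_size, hidden_size, num_layers, bidirectional=True)

x = torch.randn(seq_len, batch_size, input_size)
h_0 = torch.zeros(num_directions * num_layers, batch_size, hidden_size)
c_0 = torch.zeros(num_directions * num_layers, batch_size, hidden_size)

output, (h_n, c_n) = lstm(x, (h_0, c_0))

print(output.shape)  # (seq_len, batch_size, num_directions * hidden_size)
print(h_n.shape)     # (num_directions * num_layers, batch_size, hidden_size)
print(c_n.shape)     # same shape as h_n
```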

batch_first

If batch_first=True is set when the LSTM is initialized, the shapes of input and output change from:

input(seq_len, batch_size, input_size)

output(seq_len, batch_size, num_directions * hidden_size)

to:

input(batch_size, seq_len, input_size)

output(batch_size, seq_len, num_directions * hidden_size)

That is, batch_size moves to the front.
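As a quick check of the layout change, the sketch below builds two layers that differ only in batch_first, copies the weights from one to the other, and compares the results. The sizes and the variable names (lstm_sf, lstm_bf) are assumptions made for illustration. Note that h_n and c_n keep the same shape in both cases; batch_first affects only input and output.

```python
import torch
from torch import nn

torch.manual_seed(0)
batch_size, seq_len, input_size, hidden_size = 30, 24, 7, 64

lstm_sf = nn.LSTM(input_size, hidden_size, bidirectional=True)                    # sequence first
lstm_bf = nn.LSTM(input_size, hidden_size, bidirectional=True, batch_first=True)  # batch first
lstm_bf.load_state_dict(lstm_sf.state_dict())  # share weights so outputs are comparable

x_bf = torch.randn(batch_size, seq_len, input_size)
x_sf = x_bf.transpose(0, 1).contiguous()       # (seq_len, batch_size, input_size)

out_bf, (h_bf, _) = lstm_bf(x_bf)
out_sf, (h_sf, _) = lstm_sf(x_sf)

# The two outputs hold the same values with the first two dimensions swapped.
same_values = torch.allclose(out_bf, out_sf.transpose(0, 1), atol=1e-6)
print(out_bf.shape, out_sf.shape, same_values)
print(h_bf.shape, h_sf.shape)  # identical: batch_first does not change h_n
```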

Output extraction

Suppose we end up with output(batch_size, seq_len, 2 * hidden_size) and need to feed it into a linear layer. There are two ways to do this:

(1) Direct input

As in the unidirectional case, we can feed output directly into Linear. In a unidirectional LSTM:

    self.linear = nn.Linear(self.hidden_size, self.output_size)

In a bidirectional LSTM:

    self.linear = nn.Linear(2 * self.hidden_size, self.output_size)

Model:

    import torch
    from torch import nn


    class BiLSTM(nn.Module):
        def __init__(self, input_size, hidden_size, num_layers, output_size, batch_size):
            super().__init__()
            self.input_size = input_size
            self.hidden_size = hidden_size
            self.num_layers = num_layers
            self.output_size = output_size
            self.num_directions = 2  # bidirectional
            self.batch_size = batch_size
            self.lstm = nn.LSTM(self.input_size, self.hidden_size, self.num_layers, batch_first=True, bidirectional=True)
            # The linear layer takes the concatenated forward and backward states.
            self.linear = nn.Linear(self.num_directions * self.hidden_size, self.output_size)

        def forward(self, input_seq):
            # Random initial states, created on the same device as the input.
            h_0 = torch.randn(self.num_directions * self.num_layers, self.batch_size, self.hidden_size, device=input_seq.device)
            c_0 = torch.randn(self.num_directions * self.num_layers, self.batch_size, self.hidden_size, device=input_seq.device)
            seq_len = input_seq.shape[1]
            # input(batch_size, seq_len, input_size)
            input_seq = input_seq.view(self.batch_size, seq_len, self.input_size)
            # output(batch_size, seq_len, num_directions * hidden_size)
            output, _ = self.lstm(input_seq, (h_0, c_0))
            # Flatten to (batch_size * seq_len, num_directions * hidden_size) for the linear layer.
            output = output.contiguous().view(self.batch_size * seq_len, self.num_directions * self.hidden_size)
            pred = self.linear(output)  # (batch_size * seq_len, output_size)
            pred = pred.view(self.batch_size, seq_len, -1)
            pred = pred[:, -1, :]  # keep only the prediction at the last time step
            return pred

(2) Process before input

In a unidirectional LSTM, the output after the linear layer has shape (batch_size, seq_len, output_size). Suppose we use the previous 24 hours (1 to 24) to predict the load of the next 2 hours (25 to 26); then seq_len=24 and output_size=2. Because of how an LSTM works, the final output contains a prediction for every position, that is ((2 3), (3 4), (4 5)…(25 26)). We only need the last prediction, namely output[:, -1, :].
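The last-step selection described above can be illustrated with a stand-in tensor in place of a real LSTM output. This is a sketch under assumed sizes. It relies on the fact that nn.Linear acts on the last dimension, so applying it to every position and then slicing gives the same result as applying it to the last position only.

```python
import torch
from torch import nn

torch.manual_seed(0)
batch_size, seq_len, hidden_size, output_size = 30, 24, 64, 2

# Stand-in for the output of a unidirectional LSTM (random values, illustration only).
lstm_out = torch.randn(batch_size, seq_len, hidden_size)
linear = nn.Linear(hidden_size, output_size)

pred_all = linear(lstm_out)     # (batch_size, seq_len, output_size): one prediction per position
pred_last = pred_all[:, -1, :]  # (batch_size, output_size): prediction at the last position

# Applying the linear layer to the last time step alone gives the same values.
pred_last_direct = linear(lstm_out[:, -1, :])
print(pred_all.shape, pred_last.shape)
print(torch.allclose(pred_last, pred_last_direct, atol=1e-6))
```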

In a bidirectional LSTM, the raw output has shape (batch_size, seq_len, 2 * hidden_size) and contains the outputs of both directions at every position. Put simply, the output at the first time step (output[:, 0] with batch_first=True) is the concatenation of the first hidden state of the left-to-right pass and the last hidden state of the right-to-left pass; the output at the last time step (output[:, -1]) is the concatenation of the last hidden state of the left-to-right pass and the first hidden state of the right-to-left pass.
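This relationship can be verified against h_n for a single-layer bidirectional LSTM, where h_n[0] is the final forward state and h_n[1] is the final backward state. The sizes below are illustrative assumptions.

```python
import torch
from torch import nn

torch.manual_seed(0)
batch_size, seq_len, input_size, hidden_size = 30, 24, 7, 64

lstm = nn.LSTM(input_size, hidden_size, num_layers=1,
               batch_first=True, bidirectional=True)
x = torch.randn(batch_size, seq_len, input_size)
output, (h_n, _) = lstm(x)

# Forward half: the final forward state sits at the last time step.
forward_last = output[:, -1, :hidden_size]
# Backward half: the backward pass finishes at time step 0.
backward_last = output[:, 0, hidden_size:]

fwd_ok = torch.allclose(forward_last, h_n[0], atol=1e-6)
bwd_ok = torch.allclose(backward_last, h_n[1], atol=1e-6)
print(fwd_ok, bwd_ok)
```

One consequence is that output[:, -1, :] contains a backward state that has seen only the last input, which is one reason to consider combining the two directions as described next.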

If we want to use both the forward and the backward output, we can split the last dimension in half and average the two halves. For example, if output has shape (30, 24, 2 * 64), we reshape it to (30, 24, 2, 64) and take the mean over dim=2, which gives an output of shape (30, 24, 64). This matches the output shape of a unidirectional LSTM.

The processing is:

    output = output.contiguous().view(self.batch_size, seq_len, self.num_directions, self.hidden_size)
    output = torch.mean(output, dim=2)
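To confirm that the reshape-and-mean step really averages the forward and backward halves, the sketch below compares it with an explicit slice-based average. A random tensor stands in for the LSTM output, and the sizes are the ones from the example above.

```python
import torch

batch_size, seq_len, num_directions, hidden_size = 30, 24, 2, 64

# Stand-in for a bidirectional LSTM output (random values, illustration only).
output = torch.randn(batch_size, seq_len, num_directions * hidden_size)

# Reshape so that the direction becomes its own dimension, then average over it.
split = output.contiguous().view(batch_size, seq_len, num_directions, hidden_size)
averaged = torch.mean(split, dim=2)

# Equivalent explicit form: average the first half (forward) and second half (backward).
manual = (output[..., :hidden_size] + output[..., hidden_size:]) / 2

print(averaged.shape)
print(torch.allclose(averaged, manual, atol=1e-6))
```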

The model code:

    class BiLSTM(nn.Module):
        def __init__(self, input_size, hidden_size, num_layers, output_size, batch_size):
            super().__init__()
            self.input_size = input_size
            self.hidden_size = hidden_size
            self.num_layers = num_layers
            self.output_size = output_size
            self.num_directions = 2  # bidirectional
            self.batch_size = batch_size
            self.lstm = nn.LSTM(self.input_size, self.hidden_size, self.num_layers, batch_first=True, bidirectional=True)
            # The two directions are averaged before the linear layer, so its input width is hidden_size.
            self.linear = nn.Linear(self.hidden_size, self.output_size)

        def forward(self, input_seq):
            # Random initial states, created on the same device as the input.
            h_0 = torch.randn(self.num_directions * self.num_layers, self.batch_size, self.hidden_size, device=input_seq.device)
            c_0 = torch.randn(self.num_directions * self.num_layers, self.batch_size, self.hidden_size, device=input_seq.device)
            seq_len = input_seq.shape[1]
            # input(batch_size, seq_len, input_size)
            input_seq = input_seq.view(self.batch_size, seq_len, self.input_size)
            # output(batch_size, seq_len, num_directions * hidden_size)
            output, _ = self.lstm(input_seq, (h_0, c_0))
            # Separate the two directions and average them: (batch_size, seq_len, hidden_size)
            output = output.contiguous().view(self.batch_size, seq_len, self.num_directions, self.hidden_size)
            output = torch.mean(output, dim=2)
            pred = self.linear(output)  # (batch_size, seq_len, output_size)
            pred = pred.view(self.batch_size, seq_len, -1)
            pred = pred[:, -1, :]  # keep only the prediction at the last time step
            return pred

III. Training and forecasting

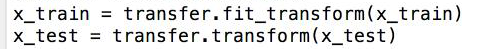

Data processing, training, and prediction are the same as in the previous articles.

Here the single-step multivariate forecasts are compared. With all other conditions equal, the experimental results are as follows:

| Method | LSTM | BiLSTM(1) | BiLSTM(2) |
|---|---|---|---|
| MAPE | 7.43 | 9.29 | 9.29 |

As the table shows, on the data used here the unidirectional LSTM performs better (MAPE 7.43 versus 9.29). The two bidirectional methods described above give the same MAPE, so there appears to be little difference between them.
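For reference, MAPE (mean absolute percentage error) is commonly computed as the mean of |y_true - y_pred| / |y_true|, expressed in percent. The function below is a sketch of that standard definition. The article does not show the author's own implementation, so the function name and the sample values are assumptions for illustration.

```python
import torch


def mape(y_true: torch.Tensor, y_pred: torch.Tensor) -> float:
    """Mean absolute percentage error in percent. Assumes y_true contains no zeros."""
    return (torch.abs((y_true - y_pred) / y_true).mean() * 100).item()


# Made-up values for illustration: relative errors are 10%, 10%, and 0%.
y_true = torch.tensor([100.0, 200.0, 400.0])
y_pred = torch.tensor([110.0, 180.0, 400.0])

print(mape(y_true, y_pred))  # about 6.67
```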

IV. Source code and data

The source code and data are on GitHub. If you download them, please consider giving a follow and a star. Thanks!

LSTM-Load-Forecasting

Copyright notice

This article was written by [Cyril_ KI]. Please include a link to the original when reposting. Thanks.

https://yzsam.com/2022/04/202204220508203870.html
