
PyTorch Deep Learning Practice_11: Convolutional Neural Network (Advanced)

2022-04-23 05:32:00 【Muxi dare】

Notes on Mr. Liu Er's 《PyTorch Deep Learning Practice》 course on Bilibili, Lecture_11: GoogLeNet + Deep Residual Learning

Lecture_11 Advanced Convolutional Neural Network

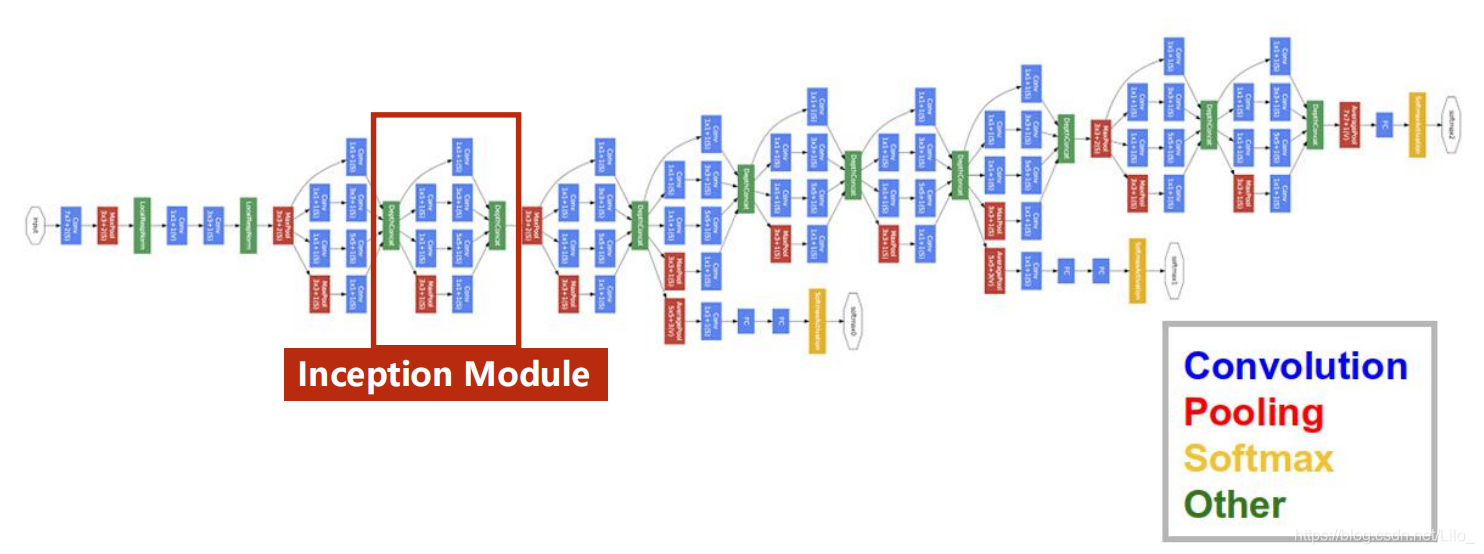

GoogLeNet

When code gets complex, look for structures that repeat and wrap them in a function or class. In GoogLeNet the repeated structure is the Inception Module.

Inception Module

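We don't know in advance which kind of convolution works best, so the Inception Module runs several of them in parallel and stacks their outputs. Through training, the useful branches get larger weights and the less useful ones get smaller weights.

In effect, the module brute-force enumerates candidate hyperparameters (such as kernel sizes) and lets gradient descent pick the most suitable combination.

Note that all branches must produce outputs with the same height and width, so that they can be concatenated along the channel dimension.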
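The branches keep the same height and width because of their padding choices. The sketch below applies the usual output-size formula for convolution and pooling, floor((size + 2·padding − kernel) / stride) + 1 with dilation 1, to each branch. The helper name `conv_out_size` is hypothetical. The 12×12 input is an assumption: it is what the first Inception module sees for a 28×28 image in the network further down.

```python
def conv_out_size(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of Conv2d / pooling with dilation 1."""
    return (size + 2 * padding - kernel) // stride + 1


# (kernel, stride, padding) of the layers used in the InceptionA branches
branches = {
    "1x1 conv": (1, 1, 0),
    "5x5 conv, padding=2": (5, 1, 2),
    "3x3 conv, padding=1": (3, 1, 1),
    "3x3 avg pool, stride=1, padding=1": (3, 1, 1),
}

for name, (k, s, p) in branches.items():
    # every branch maps a 12x12 feature map back to 12x12
    print(f"{name}: 12 -> {conv_out_size(12, k, s, p)}")
```

A 5×5 kernel needs padding 2 and a 3×3 kernel needs padding 1 (padding = (k − 1) / 2 for odd k at stride 1). That is exactly what the implementation uses.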

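What is a 1x1 convolution for?

Multi-channel information fusion: at each pixel, a 1x1 convolution combines the values of all input channels into each output channel. It can also change the number of channels, for example to shrink the input before an expensive 5x5 convolution.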
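To make "channel fusion" concrete, the following sketch checks numerically that a 1x1 `nn.Conv2d` computes the same thing as a per-pixel weighted sum over input channels plus a bias. The sum is written with `torch.einsum`. The tensor sizes are arbitrary choices for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

x = torch.randn(2, 3, 4, 4)                   # (N, C_in=3, H, W)
conv1x1 = nn.Conv2d(3, 5, kernel_size=1)      # 3 input channels -> 5 output channels

out = conv1x1(x)                              # (2, 5, 4, 4): H and W unchanged

# Same computation by hand: at each pixel, each output channel is a
# weighted sum over all input channels, plus that channel's bias.
w = conv1x1.weight.view(5, 3)                 # drop the 1x1 spatial dimensions
manual = torch.einsum("oc,nchw->nohw", w, x) + conv1x1.bias.view(1, 5, 1, 1)

print(out.shape)                              # torch.Size([2, 5, 4, 4])
print(torch.allclose(out, manual, atol=1e-5))
```

The spatial pattern is never mixed, only the channels. That is why the 1x1 convolution is cheap and why it can be used to change the channel count.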

Implementation of the Inception Module

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class InceptionA(nn.Module):
    """Inception module: four parallel branches whose outputs are concatenated."""

    def __init__(self, in_channels):
        super(InceptionA, self).__init__()
        # Branch 1: 1x1 conv -> 16 channels
        self.branch1x1 = nn.Conv2d(in_channels, 16, kernel_size=1)
        # Branch 2: 1x1 conv (16) -> 5x5 conv (24); padding=2 keeps H and W
        self.branch5x5_1 = nn.Conv2d(in_channels, 16, kernel_size=1)
        self.branch5x5_2 = nn.Conv2d(16, 24, kernel_size=5, padding=2)
        # Branch 3: 1x1 conv (16) -> 3x3 conv (24) -> 3x3 conv (24); padding=1 keeps H and W
        self.branch3x3_1 = nn.Conv2d(in_channels, 16, kernel_size=1)
        self.branch3x3_2 = nn.Conv2d(16, 24, kernel_size=3, padding=1)
        self.branch3x3_3 = nn.Conv2d(24, 24, kernel_size=3, padding=1)
        # Branch 4: 3x3 average pooling (in forward) -> 1x1 conv (24)
        self.branch_pool = nn.Conv2d(in_channels, 24, kernel_size=1)

    def forward(self, x):
        branch1x1 = self.branch1x1(x)

        branch5x5 = self.branch5x5_1(x)
        branch5x5 = self.branch5x5_2(branch5x5)

        branch3x3 = self.branch3x3_1(x)
        branch3x3 = self.branch3x3_2(branch3x3)
        branch3x3 = self.branch3x3_3(branch3x3)

        branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
        branch_pool = self.branch_pool(branch_pool)

        outputs = [branch1x1, branch5x5, branch3x3, branch_pool]
        # Concatenate along dim=1, the channel dimension of (N, C, H, W):
        # 16 + 24 + 24 + 24 = 88 output channels
        return torch.cat(outputs, dim=1)
```
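The 1x1 convolutions in front of the 5x5 and 3x3 branches cut the number of channels before the expensive kernel. The arithmetic below counts multiplications (bias ignored) for a stride-1, same-padded convolution. The sizes are assumed purely for illustration: a 192-channel 28×28 input mapped to 32 channels, optionally through a 16-channel 1x1 bottleneck. The helper `conv_mults` is hypothetical.

```python
def conv_mults(k: int, h: int, w: int, c_in: int, c_out: int) -> int:
    """Multiplications of a stride-1, same-padded k x k convolution (bias ignored)."""
    return k * k * h * w * c_in * c_out


H = W = 28
direct = conv_mults(5, H, W, 192, 32)                                  # 5x5 straight on 192 channels
bottleneck = conv_mults(1, H, W, 192, 16) + conv_mults(5, H, W, 16, 32)  # 1x1 to 16, then 5x5

print(f"5x5 directly:      {direct:,}")       # 120,422,400
print(f"1x1 (16) then 5x5: {bottleneck:,}")   # 12,443,648
```

Under these assumed sizes the bottleneck version needs roughly a tenth of the multiplications, while the output shape is the same.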

Using the Inception Module

```python
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = nn.Conv2d(88, 20, kernel_size=5)  # 88 = channels output by InceptionA
        self.incep1 = InceptionA(in_channels=10)
        self.incep2 = InceptionA(in_channels=20)
        self.mp = nn.MaxPool2d(2)
        self.fc = nn.Linear(1408, 10)  # 1408 = 88 channels * 4 * 4 for a 1x28x28 input

    def forward(self, x):
        in_size = x.size(0)                  # batch size
        x = F.relu(self.mp(self.conv1(x)))   # (N, 10, 12, 12)
        x = self.incep1(x)                   # (N, 88, 12, 12)
        x = F.relu(self.mp(self.conv2(x)))   # (N, 20, 4, 4)
        x = self.incep2(x)                   # (N, 88, 4, 4)
        x = x.view(in_size, -1)              # flatten to (N, 1408)
        x = self.fc(x)
        return x
```
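The numbers 88 and 1408 in `Net` follow from tracking the shape layer by layer. The following sketch does that tracking in plain Python, assuming a 1×28×28 (MNIST-sized) input. The helper `conv_out` is hypothetical.

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """floor((size + 2 * padding - kernel) / stride) + 1, as for Conv2d / MaxPool2d."""
    return (size + 2 * padding - kernel) // stride + 1


INCEPTION_OUT = 16 + 24 + 24 + 24    # channels produced by torch.cat in InceptionA

size = 28                            # assumed input: 1 x 28 x 28
size = conv_out(size, 5)             # conv1, 5x5, no padding: 28 -> 24 (10 channels)
size = conv_out(size, 2, stride=2)   # MaxPool2d(2): 24 -> 12
channels = INCEPTION_OUT             # incep1 keeps 12 x 12 and outputs 88 channels
size = conv_out(size, 5)             # conv2, 88 -> 20 channels: 12 -> 8
size = conv_out(size, 2, stride=2)   # MaxPool2d(2): 8 -> 4
channels = INCEPTION_OUT             # incep2 keeps 4 x 4 and outputs 88 channels

flat = channels * size * size
print(channels, size, flat)          # 88 4 1408
```

If the input resolution changes, only this trace needs redoing to find the new `nn.Linear` input size.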

Reproducing the code (training curve)

Watch the test accuracy to decide how many epochs to train. Whenever the accuracy on the test set reaches a new high, save the model parameters.
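One way to "save the parameters whenever test accuracy reaches a new high" is sketched below. The class `BestCheckpoint` is a hypothetical helper, not part of the original code. The accuracy values are made-up placeholders standing in for per-epoch test results. `state_dict()` and `copy.deepcopy` are standard. The deep copy matters because later optimizer steps would otherwise modify the same tensors.

```python
import copy

import torch.nn as nn


class BestCheckpoint:
    """Hypothetical helper: keep the parameters of the epoch with the best test accuracy."""

    def __init__(self):
        self.best_acc = float("-inf")
        self.best_epoch = None
        self.best_state = None

    def update(self, model: nn.Module, test_acc: float, epoch: int) -> bool:
        if test_acc > self.best_acc:
            self.best_acc = test_acc
            self.best_epoch = epoch
            # deepcopy so later training steps don't overwrite the saved tensors
            self.best_state = copy.deepcopy(model.state_dict())
            return True
        return False


model = nn.Linear(4, 2)   # stand-in for the CNN above
tracker = BestCheckpoint()
for epoch, acc in enumerate([0.95, 0.97, 0.96, 0.98, 0.975]):  # placeholder accuracies
    tracker.update(model, acc, epoch)

print(tracker.best_epoch, tracker.best_acc)   # 3 0.98
```

After training, `model.load_state_dict(tracker.best_state)` restores the best epoch. Alternatively, `torch.save(tracker.best_state, path)` writes it to disk.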

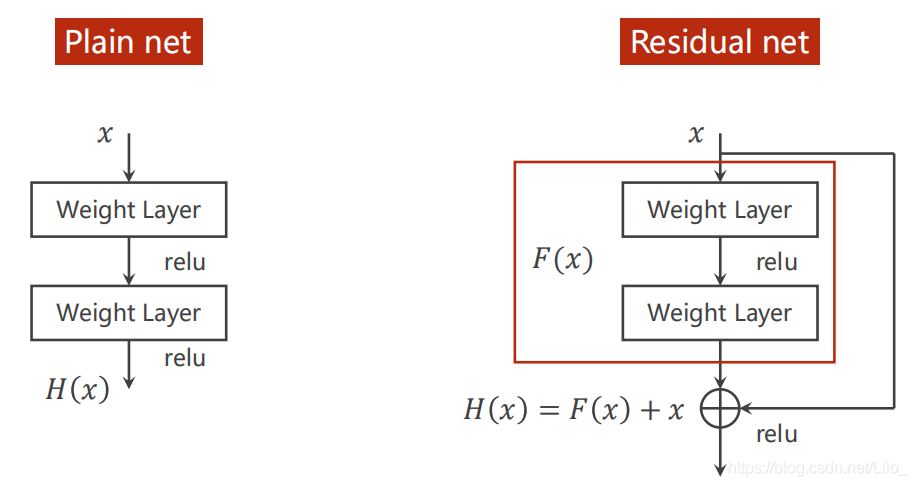

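Simply stacking more and more layers causes vanishing gradients! During backpropagation the gradient reaching an early layer is a product of many per-layer derivatives. When those derivatives are smaller than 1, the product shrinks toward zero and the early layers barely learn.

Deep Residual Learning (Residual Network)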
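A deliberately simplified scalar picture of the chain rule shows why depth hurts. The sketch assumes every layer contributes the same local derivative, 0.25, which is the maximum derivative of a sigmoid. Real networks involve weight matrices, so this illustrates the trend rather than modelling an actual network.

```python
def chain_gradient(local_derivs):
    """Chain rule through a stack of layers: the gradient is the product of local derivatives."""
    grad = 1.0
    for d in local_derivs:
        grad *= d
    return grad


# Assumption: each layer's local derivative is 0.25 (the sigmoid's best case).
for depth in (2, 10, 30):
    print(depth, chain_gradient([0.25] * depth))
```

Even in this best case, the gradient at depth 30 is below 1e-18, far too small to update the first layers meaningfully.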

Residual Block
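The idea behind the block, written as a worked equation: the layers learn a residual $\mathcal{F}(x)$, and the block adds the input back before the final activation. Differentiating the sum gives an extra identity term:

$$
H(x) = \mathcal{F}(x) + x, \qquad \frac{\partial H}{\partial x} = \frac{\partial \mathcal{F}}{\partial x} + 1
$$

Even when $\partial \mathcal{F} / \partial x$ is close to 0, the gradient through the block stays close to 1. This avoids the shrinking product of the plain stack. For the addition to be valid, $x$ and $\mathcal{F}(x)$ must have the same shape. That is why both convolutions keep the channel count and use `padding=1`.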

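```python
import torch.nn as nn
import torch.nn.functional as F


class ResidualBlock(nn.Module):
    """Two 3x3 convolutions plus a skip connection: output = relu(x + F(x))."""

    def __init__(self, channels):
        super(ResidualBlock, self).__init__()
        self.channels = channels
        # Same in/out channels and padding=1 keep the shape, so x and y can be added
        self.conv1 = nn.Conv2d(channels, channels, kernel_size=3, padding=1)
        self.conv2 = nn.Conv2d(channels, channels, kernel_size=3, padding=1)

    def forward(self, x):
        y = F.relu(self.conv1(x))
        y = self.conv2(y)        # no activation here: ReLU is applied after the addition
        return F.relu(x + y)
```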
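A quick experiment makes the skip connection visible. The sketch zeroes every weight and bias of a residual block on purpose, so the residual branch outputs 0. It then checks that the block passes a positive input straight through and that the gradient reaching the input is exactly 1. The block is redefined here so the snippet runs on its own. The channel count and input size are arbitrary.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class ResidualBlock(nn.Module):
    def __init__(self, channels):
        super().__init__()
        self.conv1 = nn.Conv2d(channels, channels, kernel_size=3, padding=1)
        self.conv2 = nn.Conv2d(channels, channels, kernel_size=3, padding=1)

    def forward(self, x):
        y = F.relu(self.conv1(x))
        y = self.conv2(y)
        return F.relu(x + y)


block = ResidualBlock(4)
with torch.no_grad():
    for p in block.parameters():
        p.zero_()                                  # residual branch now outputs 0

x = (torch.rand(1, 4, 8, 8) + 0.1).requires_grad_()  # strictly positive input
out = block(x)
out.sum().backward()

print(out.shape)                                   # torch.Size([1, 4, 8, 8]), same as x
print(torch.equal(out, x.detach()))                # True: block reduces to relu(x) = x
print(torch.all(x.grad == 1).item())               # True: gradient flows via the identity path
```

In a trained network the branch is not zero, but the identity term is always there. That keeps the gradient from vanishing as blocks are stacked.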

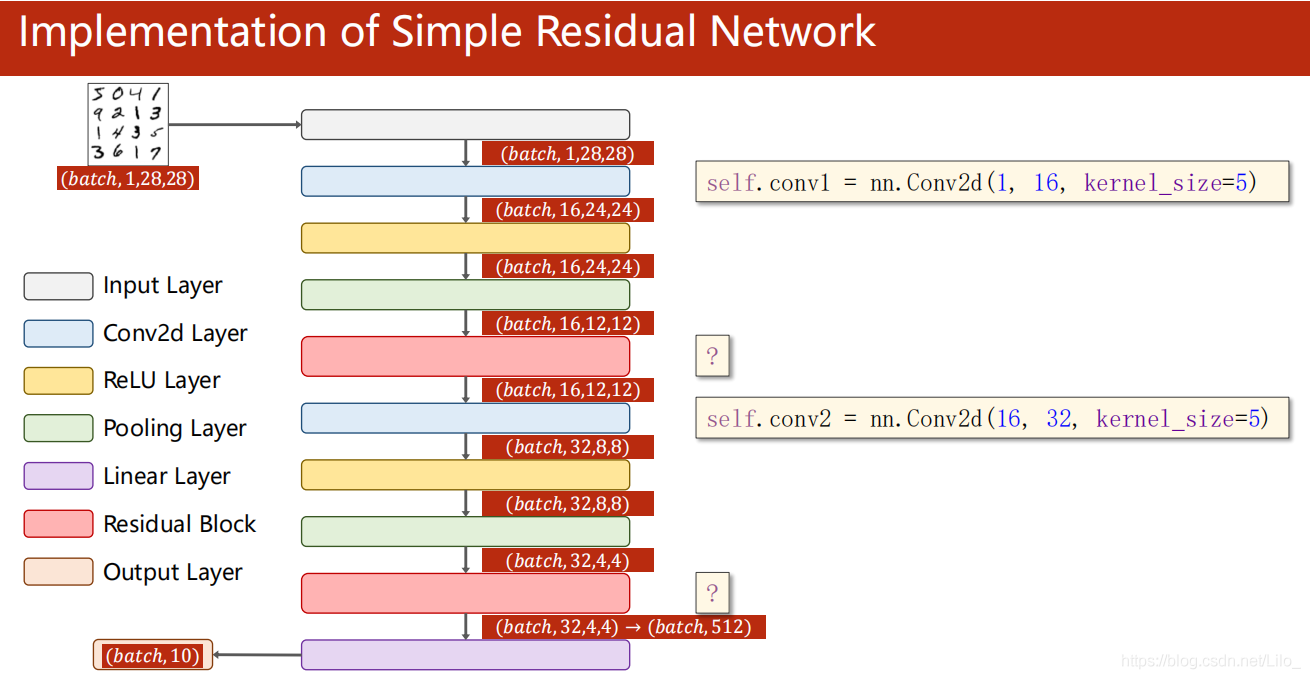

Implementation of a Simple Residual Network

```python
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 16, kernel_size=5)
        self.conv2 = nn.Conv2d(16, 32, kernel_size=5)
        self.mp = nn.MaxPool2d(2)
        self.rblock1 = ResidualBlock(16)
        self.rblock2 = ResidualBlock(32)
        self.fc = nn.Linear(512, 10)  # 512 = 32 channels * 4 * 4 for a 1x28x28 input

    def forward(self, x):
        in_size = x.size(0)                   # batch size
        x = self.mp(F.relu(self.conv1(x)))    # (N, 16, 12, 12)
        x = self.rblock1(x)                   # residual blocks keep the shape
        x = self.mp(F.relu(self.conv2(x)))    # (N, 32, 4, 4)
        x = self.rblock2(x)
        x = x.view(in_size, -1)               # flatten to (N, 512)
        x = self.fc(x)
        return x
```
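Before training, it is worth pushing a dummy batch through the model to confirm that the `nn.Linear(512, 10)` size matches. This catches shape mistakes immediately. The sketch redefines both classes so it runs standalone. It assumes MNIST-sized 1×28×28 inputs and an arbitrary batch of 3. `flatten(1)` is used as an equivalent of `view(in_size, -1)`.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class ResidualBlock(nn.Module):
    def __init__(self, channels):
        super().__init__()
        self.conv1 = nn.Conv2d(channels, channels, kernel_size=3, padding=1)
        self.conv2 = nn.Conv2d(channels, channels, kernel_size=3, padding=1)

    def forward(self, x):
        y = F.relu(self.conv1(x))
        return F.relu(x + self.conv2(y))


class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 16, kernel_size=5)
        self.conv2 = nn.Conv2d(16, 32, kernel_size=5)
        self.mp = nn.MaxPool2d(2)
        self.rblock1 = ResidualBlock(16)
        self.rblock2 = ResidualBlock(32)
        self.fc = nn.Linear(512, 10)

    def forward(self, x):
        x = self.rblock1(self.mp(F.relu(self.conv1(x))))  # (N, 16, 12, 12)
        x = self.rblock2(self.mp(F.relu(self.conv2(x))))  # (N, 32, 4, 4)
        return self.fc(x.flatten(1))                      # (N, 512) -> (N, 10)


net = Net()
dummy = torch.randn(3, 1, 28, 28)   # assumed MNIST-sized batch
print(net(dummy).shape)             # torch.Size([3, 10])
```

If the flattened size were wrong, this call would raise a shape-mismatch error in `fc` right away instead of partway through training.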

Copyright notice

This article was written by [Muxi dare]. Please include a link to the original when reposting. Thanks.

https://yzsam.com/2022/04/202204220535577592.html

边栏推荐

- Why can't V-IF and V-for be used together

- Excel 2016 打开文件第一次打不开,有时空白,有时很慢要打开第二次才行

- The QT debug version runs normally and the release version runs crash

- Watch depth monitoring mode

- Usage and difference of shellexecute, shellexecuteex and winexec in QT

- Basic knowledge of redis

- Redis的基本知识

- Processus d'exécution du programme exécutable

- Multiple mainstream SQL queries only take the latest one of the data

- If the route reports an error after deployment according to the framework project

猜你喜欢

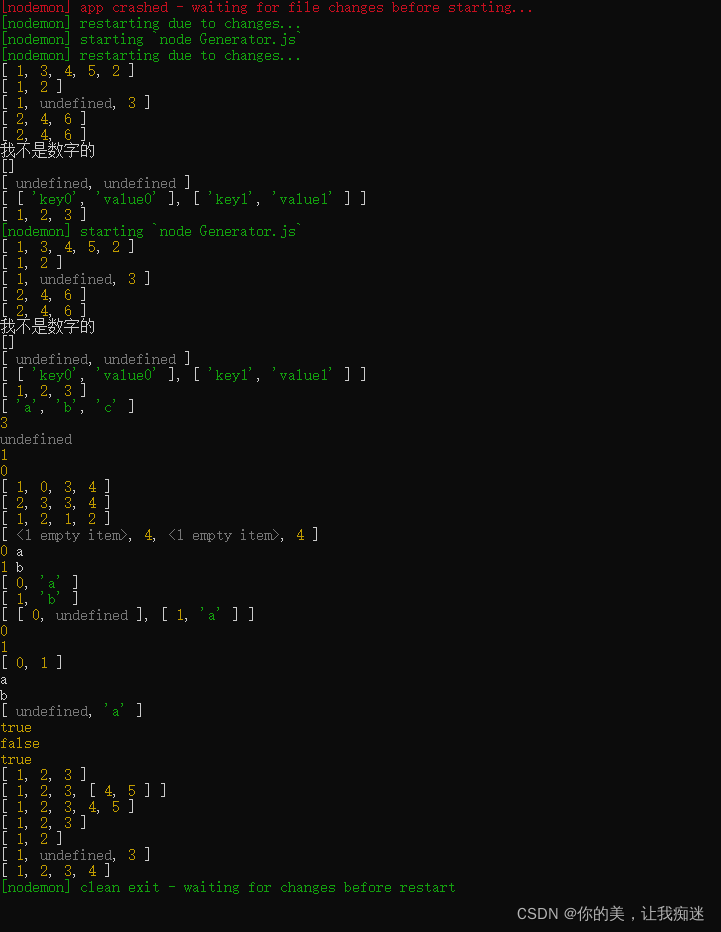

Use of ES6 array

es6数组的使用

Excel 2016 打开文件第一次打不开,有时空白,有时很慢要打开第二次才行

转置卷积(Transposed Convolution)

CPT 104_TTL 09

![[untitled] Notepad content writing area](/img/0a/4a3636025c3e0441f45c99e3c67b67.png)

[untitled] Notepad content writing area

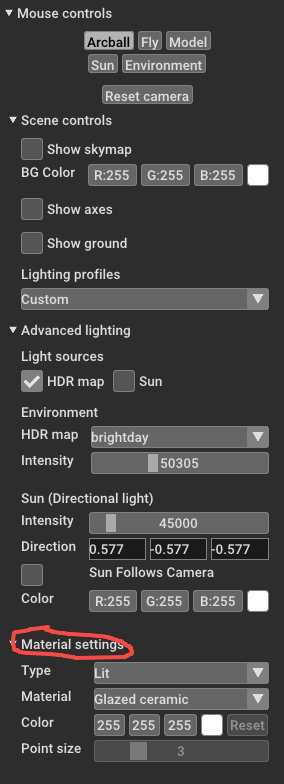

Parameter analysis of open3d material setting

The title bar will be pushed to coincide with the status bar

Camera imaging + homography transformation + camera calibration + stereo correction

Understand the relationship between promise async await

随机推荐

STL learning notes 0x0001 (container classification)

点击添加按钮--出现一个框框(类似于添加学习经历-本科-研究生)

Qwebsocket communication

JSON.

SQL语句简单优化

Necessity of selenium preloading cookies

Laravel routing settings

双击.jar包无法运行解决方法

Three methods of list rendering

创建进程内存管理copy_mm - 进程与线程(九)

Processus d'exécution du programme exécutable

The prefix of static of egg can be modified, including boots

QSS, qdateedit, qcalendarwidget custom settings

es6数组的使用

CORS and proxy (づ  ̄ 3  ̄) in egg ~ the process of stepping on the pit and filling the pit ~ tot~

d. TS --- for more detailed knowledge, please refer to the introduction on the official website (chapter of declaration document)

Phlli in a VM node

巴普洛夫与兴趣爱好

Laravel routing job

Create process memory management copy_ Mm - processes and threads (IX)