Segmentation Learning (loss and Evaluation)

2022-08-11 09:22:00 【Ferry fifty-six】

Reference

Loss function for semantic segmentation

(cross entropy loss function)

- Pixel-wise cross-entropy

- Class imbalance also needs to be considered (in some cases)

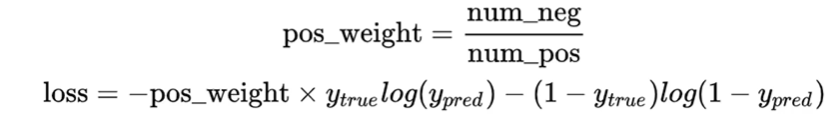

The cross-entropy loss function here adds a pos_weight parameter. Foreground and background occupy different proportions of the image, so individual pixels are not equally important. In general, this parameter is set according to the ratio of positive to negative examples.
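As a rough illustration of how such a weight can enter the loss, here is a minimal pure-Python sketch. It assumes pos_weight multiplies only the positive-class term (the convention used by PyTorch's `BCEWithLogitsLoss`), and it sets the weight to the negative-to-positive pixel count, which is one common heuristic rather than something the text prescribes. The function name `weighted_bce` and the sample values are hypothetical.

```python
import math

def weighted_bce(y_true, y_pred, pos_weight=1.0, eps=1e-7):
    """Mean pixel-wise binary cross-entropy with a weight on the positive term."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(pos_weight * t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)

# Hypothetical flattened mask: 1 foreground pixel, 3 background pixels.
y_true = [1, 0, 0, 0]
y_pred = [0.3, 0.1, 0.2, 0.1]  # predicted foreground probabilities

# Assumed heuristic: weight positives by (#negatives / #positives).
n_pos = sum(y_true)
n_neg = len(y_true) - n_pos
pos_weight = n_neg / n_pos

unweighted = weighted_bce(y_true, y_pred)
weighted = weighted_bce(y_true, y_pred, pos_weight=pos_weight)
print(f"pos_weight={pos_weight}, unweighted={unweighted:.4f}, weighted={weighted:.4f}")
```

With the weight applied, the single poorly predicted foreground pixel contributes more to the mean loss than it does in the unweighted version.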

The following explains the binary cross-entropy loss function (with pos_weight removed):

The smaller the loss, the better.

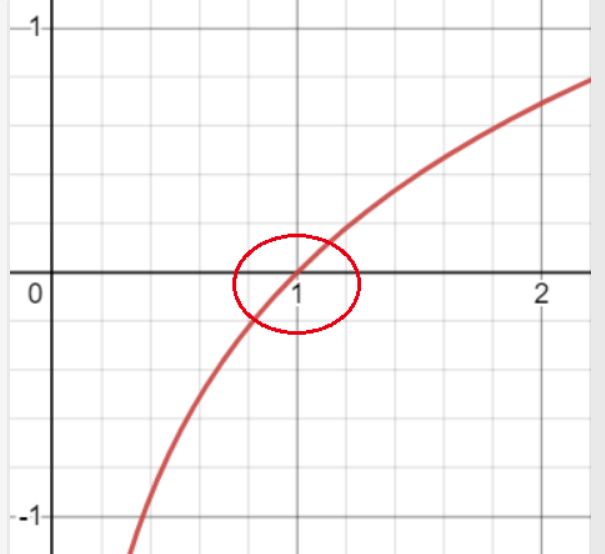

- The log function behaves as follows:

As its graph shows, for x in (0, 1], the closer x is to 1, the smaller the absolute value of y; the closer x is to 0, the larger the absolute value of y.

For example:

y_true: the true label of a sample, 0 or 1

y_pred: the predicted value for a sample, between 0 and 1

| | y_true=0 | y_true=1 |
|---|---|---|
| y_pred=0 | Substituting into the loss function gives loss=0. When y_true=0, the closer y_pred is to 0, the smaller the loss. | When y_true=1, the closer y_pred is to 0, the larger the loss. |
| y_pred=1 | When y_true=0, the closer y_pred is to 1, the larger the loss. | When y_true=1, the closer y_pred is to 1, the smaller the loss. |

This shows that the closer y_pred is to the true label y_true, the smaller the loss, so the loss can distinguish the foreground (label 1) from the background (label 0).

Note also that the term y_true · log(y_pred) accounts for the loss on pixels whose true label is 1. When the true label is 0, this term is exactly 0, so the other term, (1 − y_true) · log(1 − y_pred), accounts for the loss on pixels whose true label is 0.
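The behaviour described above can be checked numerically. The sketch below assumes the standard per-pixel binary cross-entropy, −[y_true · log(y_pred) + (1 − y_true) · log(1 − y_pred)], and clamps y_pred slightly away from 0 and 1 so the logarithm stays finite. The helper name `bce_pixel` is hypothetical.

```python
import math

def bce_pixel(y_true, y_pred, eps=1e-7):
    """Binary cross-entropy for a single pixel (no pos_weight)."""
    p = min(max(y_pred, eps), 1.0 - eps)  # clamp to avoid log(0)
    # Only one of the two terms is non-zero for a hard label of 0 or 1.
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1.0 - p))

# Sweep a few predictions for each true label to see the trend.
for y_true in (0, 1):
    for y_pred in (0.01, 0.5, 0.99):
        loss = bce_pixel(y_true, y_pred)
        print(f"y_true={y_true} y_pred={y_pred:.2f} loss={loss:.4f}")
```

For each true label, the loss shrinks as y_pred moves toward that label and grows as it moves away, matching the four cells of the table.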

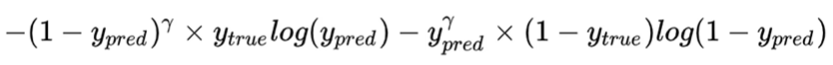

(Focal loss)

Idea: account for how hard each pixel is to classify. The harder the sample, the larger its contribution to the loss.

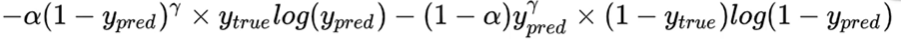

Implementation: starting from the cross-entropy loss function, raise the difference between the true label (0 or 1) and the predicted value (between 0 and 1) to the power $\gamma$ and use it as a weighting factor.

Assume $\gamma = 2$.

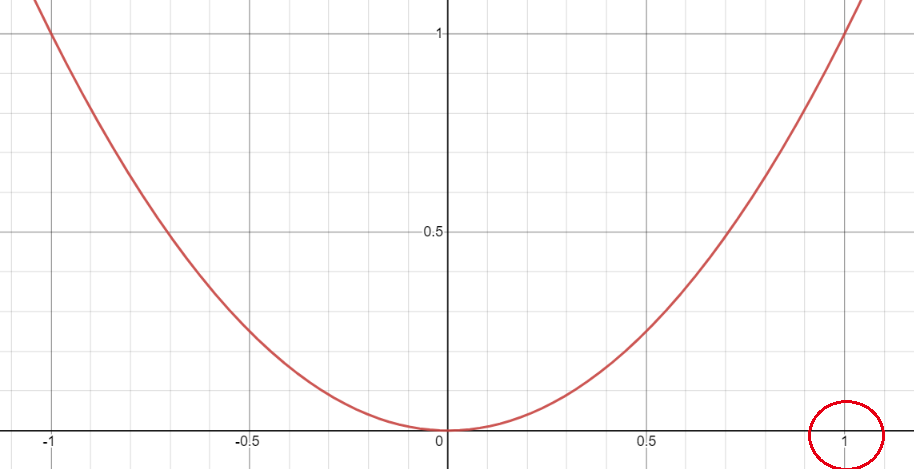

The graph of the quadratic function $y = x^2$ is as follows:

The function is increasing on [0, 1]. When the difference between the true label y_true and the predicted value y_pred is large, it is close to 1, and its square stays close to 1 as well. When the difference is close to 0, its square is very small. So samples that are easy to classify receive a very small loss, and samples that are hard to classify receive a large loss. This distinguishes hard samples (or pixels) from easy ones.

Combining this with the class-balancing weight $\alpha$ (the ratio of positive and negative samples, i.e. the pos_weight mentioned above) gives the complete Focal Loss function.
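To make the effect of the power term concrete, here is a minimal per-pixel sketch. It assumes the commonly used form −α_t · (1 − p_t)^γ · log(p_t), where p_t is the probability assigned to the true class, so that 1 − p_t equals the difference between the true label and the prediction. The function name `focal_loss_pixel` and the default α = 0.25 are assumptions for illustration, not values taken from the text.

```python
import math

def focal_loss_pixel(y_true, y_pred, gamma=2.0, alpha=0.25, eps=1e-7):
    """Focal loss for one pixel; y_pred is the predicted foreground probability."""
    p = min(max(y_pred, eps), 1.0 - eps)             # clamp to avoid log(0)
    p_t = p if y_true == 1 else 1.0 - p              # probability of the true class
    alpha_t = alpha if y_true == 1 else 1.0 - alpha  # class-balancing weight
    # (1 - p_t) is |y_true - y_pred|: near 0 for easy pixels, near 1 for hard ones.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)

easy = focal_loss_pixel(1, 0.9)  # foreground pixel predicted confidently
hard = focal_loss_pixel(1, 0.1)  # foreground pixel predicted badly
print(f"easy={easy:.6f} hard={hard:.6f} ratio={hard / easy:.1f}")
```

Setting gamma=0 removes the difficulty term and leaves an α-weighted cross-entropy, which is a convenient sanity check when experimenting with the parameters.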

Evaluation Criteria

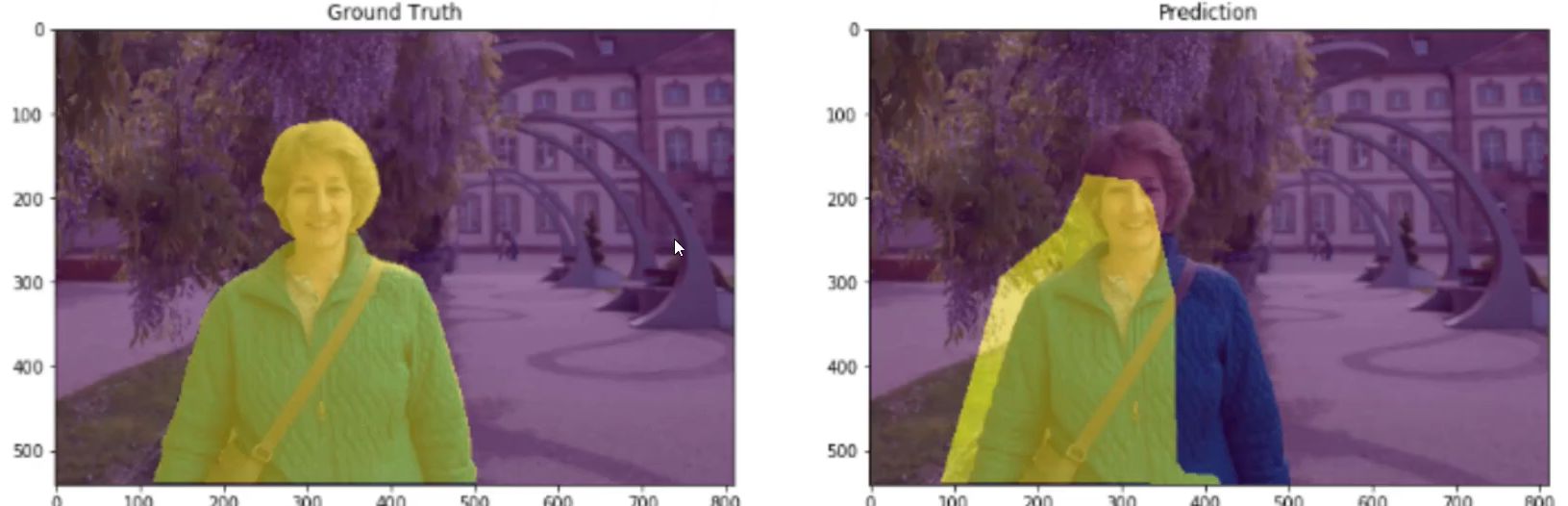

IoU: Intersection over Union (the intersection of the yellow regions in the figure divided by their union)

MIoU: the mean of the IoU over all classes, generally used as the evaluation metric for segmentation tasks
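Both metrics can be sketched in a few lines. The example below works on flattened label lists and, as an assumed convention, skips any class that appears in neither the ground truth nor the prediction when averaging. The function names and the sample labels are hypothetical.

```python
def iou_per_class(y_true, y_pred, num_classes):
    """Return a list of per-class IoU values (None if a class never appears)."""
    ious = []
    for c in range(num_classes):
        pairs = list(zip(y_true, y_pred))
        inter = sum(1 for t, p in pairs if t == c and p == c)  # both say class c
        union = sum(1 for t, p in pairs if t == c or p == c)   # either says class c
        ious.append(inter / union if union else None)
    return ious

def mean_iou(y_true, y_pred, num_classes):
    """Average IoU over the classes that appear in y_true or y_pred."""
    valid = [v for v in iou_per_class(y_true, y_pred, num_classes) if v is not None]
    return sum(valid) / len(valid) if valid else float("nan")

# Hypothetical flattened masks with two classes (0 = background, 1 = foreground).
y_true = [0, 0, 1, 1, 1, 0]
y_pred = [0, 1, 1, 1, 0, 0]

print(iou_per_class(y_true, y_pred, num_classes=2))  # [0.5, 0.5]
print(mean_iou(y_true, y_pred, num_classes=2))       # 0.5
```

Here each class has 2 pixels in the intersection and 4 in the union, so both per-class IoU values and their mean are 0.5.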

边栏推荐

- 代码签名证书可以解决软件被杀毒软件报毒提醒吗?

- pycharm cancel msyql expression highlighting

- idea插件自动填充setter

- DataGrip配置OceanBase

- 【系统梳理】当我们在说服务治理的时候,其实我们说的是什么?

- MySQL性能调优,必须掌握这一个工具!!!(1分钟系列)

- Software custom development - the advantages of enterprise custom development of app software

- Adobe LiveCycle Designer 报表设计器

- 前几天,小灰去贵州了

- UNITY gameobject代码中setacvtive(false)与面板中直接去掉勾 效果不一样

猜你喜欢

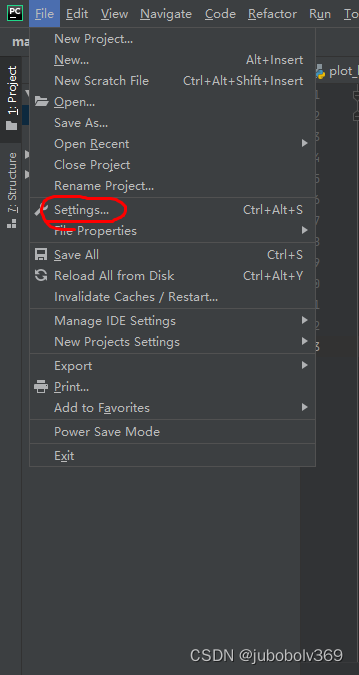

pycharm中绘图,显示不了figure窗口的问题

数组、字符串、日期笔记【蓝桥杯】

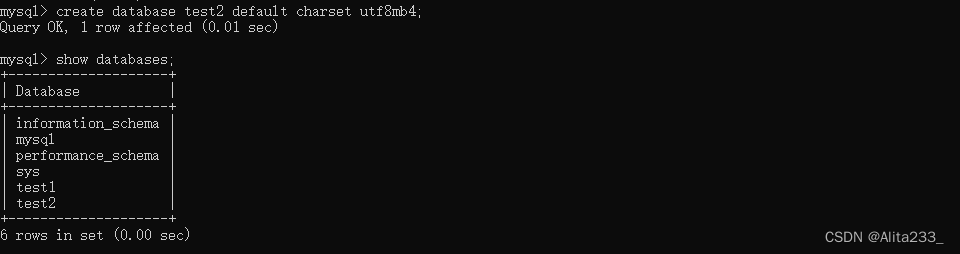

基础SQL——DDL

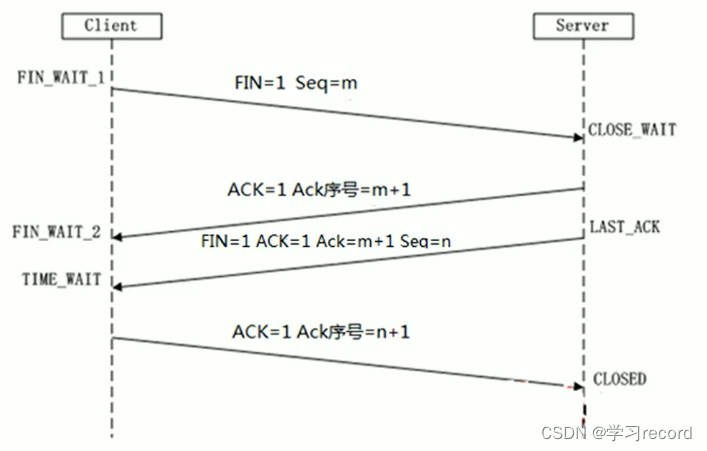

Three handshakes and four waves

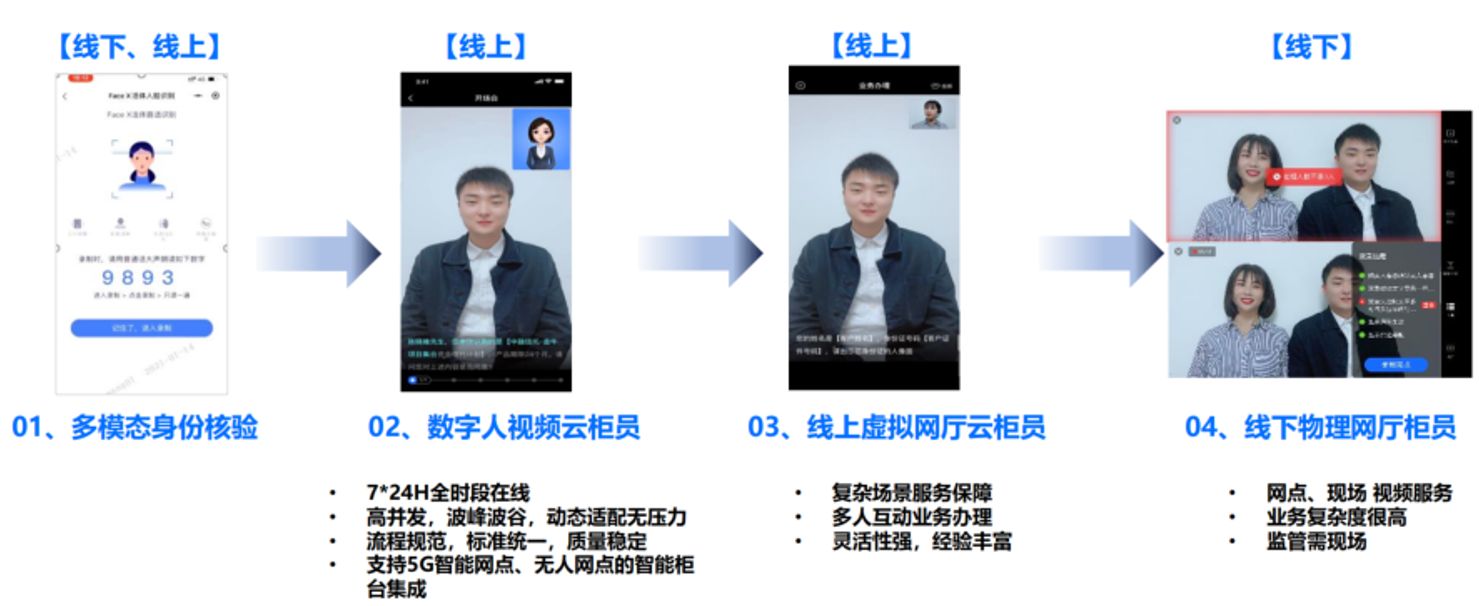

音视频+AI,中关村科金助力某银行探索发展新路径 | 案例研究

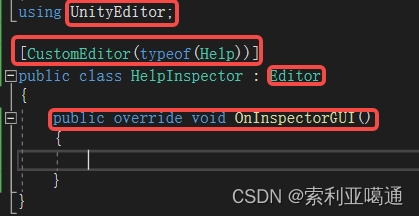

Unity3D - modification of the Inspector panel of the custom class

Redis的客户端连接的可视化管理工具

Software custom development - the advantages of enterprise custom development of app software

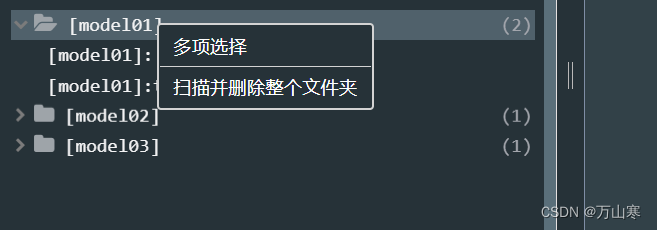

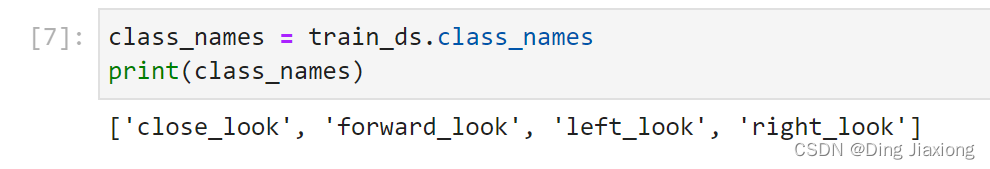

深度学习100例 —— 卷积神经网络(CNN)识别眼睛状态

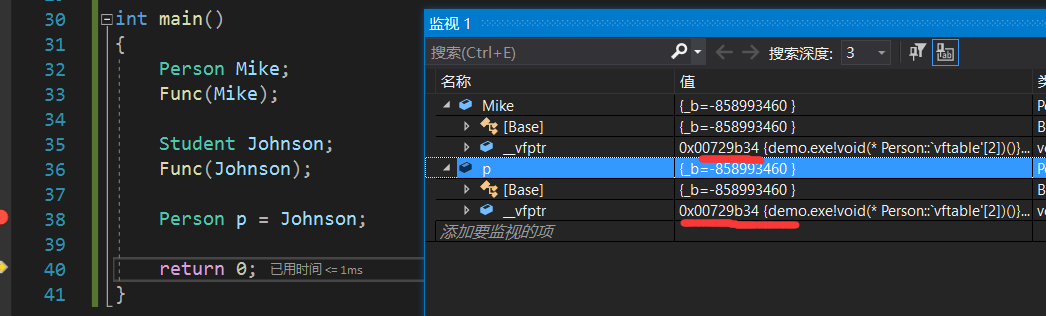

万字长文带你了解多态的底层原理,这一篇就够了

随机推荐

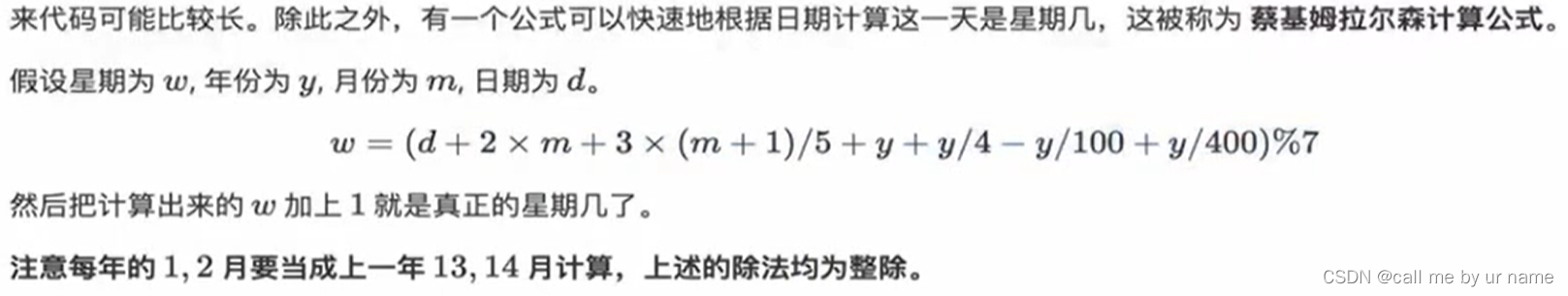

数组、字符串、日期笔记【蓝桥杯】

基于hydra库实现yaml配置文件的读取(支持命令行参数)

框架外的PHP读取.env文件(php5.6、7.3可用版)

基于consul的注册发现的微服务架构迁移到servicemesh

Typescrip编译选项

Quickly submit a PR (Web) for OpenHarmony in 5 minutes

2022-08-10:为了给刷题的同学一些奖励,力扣团队引入了一个弹簧游戏机, 游戏机由 N 个特殊弹簧排成一排,编号为 0 到 N-1, 初始有一个小球在编号

小程序组件不能修改ui组件样式

Oracle database use problems

The no-code platform helps Zhongshan Hospital build an "intelligent management system" to realize smart medical care

2022-08-09 顾宇佳 学习笔记

海信自助机-HV530刷机教程

Getting Started with Kotlin Algorithm to Calculate the Number of Daffodils

Initial use of IDEA

SQL语句

万字长文带你了解多态的底层原理,这一篇就够了

gRPC系列(二) 如何用Protobuf组织内容

idea 方法注释:自定义修改method的return和params,void不显示

验证拦截器的执行流程

基于 VIVADO 的 AM 调制解调(2)工程实现