
PyTorch learning record (III): neural network structure + defining models with Sequential and Module

2022-04-23 05:54:00 【Zuo Xiaotian ^ o^】

A fully connected (linear) layer is created with:

nn.Linear(in, out)

For example, a layer with 4 input nodes and 2 output nodes is written nn.Linear(4, 2). The bias term can be omitted with nn.Linear(in, out, bias=False); the default is bias=True.
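A quick way to see what nn.Linear(4, 2) holds is to inspect its parameters. A minimal sketch; the input batch x is made-up data:

```python
import torch
from torch import nn

# Fully connected layer: 4 input nodes -> 2 output nodes, computes x @ W.T + b
layer = nn.Linear(4, 2)
print(layer.weight.shape)  # torch.Size([2, 4]): (out_features, in_features)
print(layer.bias.shape)    # torch.Size([2])

# The same layer without the bias term
layer_no_bias = nn.Linear(4, 2, bias=False)
print(layer_no_bias.bias)  # None

# A batch of 3 made-up samples with 4 features each
x = torch.randn(3, 4)
out = layer(x)
print(out.shape)           # torch.Size([3, 2])
```

Note that the weight is stored as (out, in), which is why the layer computes x @ W.T rather than x @ W.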

When counting the layers of an N-layer neural network, the input layer is not included.

Therefore a single-layer neural network has no hidden layer: it consists only of an input layer and an output layer.

Logistic regression is a single-layer neural network.
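Counted this way, logistic regression feeds its inputs straight into one linear layer and squashes the resulting score with a sigmoid. A minimal sketch, assuming 2 input features and random made-up samples:

```python
import torch
from torch import nn

torch.manual_seed(0)

# Logistic regression with 2 input features: a single linear layer, no hidden layer
linear = nn.Linear(2, 1)

x = torch.randn(5, 2)            # 5 made-up samples
prob = torch.sigmoid(linear(x))  # sigmoid maps each score into (0, 1)
print(prob.shape)                # torch.Size([5, 1])
```

In training code the sigmoid is often left out of the model and folded into the loss (for example nn.BCEWithLogitsLoss), which matches the convention that the output layer itself has no activation.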

The output layer usually has no activation function, because its outputs typically represent class scores or a real-valued regression target, so they may be any real number.
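In PyTorch this fits how the classification loss is built: nn.CrossEntropyLoss applies log-softmax internally and therefore expects the raw, unbounded scores of the output layer. A minimal sketch; the sizes (4 inputs, 8 hidden nodes, 3 classes) and the data are made up:

```python
import torch
from torch import nn
import torch.nn.functional as F

torch.manual_seed(0)

hidden = nn.Linear(4, 8)
output = nn.Linear(8, 3)  # output layer: 3 class scores, no activation

x = torch.randn(6, 4)     # 6 made-up samples
target = torch.tensor([0, 2, 1, 0, 1, 2])

scores = output(torch.tanh(hidden(x)))  # raw scores (logits), any real value
# nn.CrossEntropyLoss applies log-softmax itself, so it takes the raw scores
loss = nn.CrossEntropyLoss()(scores, target)
manual = F.nll_loss(F.log_softmax(scores, dim=1), target)
print(loss.item(), manual.item())  # the two values agree
```

Adding a softmax to the output layer before this loss would apply the normalization twice.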

Representational power and capacity of the model

The three figures show the results of binary classification with three network models. Each model has one hidden layer, but the number of hidden nodes differs: from left to right, 3, 6 and 20. After training, the three models give completely different results, which shows that models with more hidden nodes can represent more complex functions. However, for the result we actually want, the model on the far left is the best: although the model on the far right produces a more complex decision boundary, it ignores the underlying relationship in the data and amplifies the interference of noise. This effect is called overfitting.
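The difference in capacity between the three models can be made concrete by counting trainable parameters. A minimal sketch, assuming the models take 2 input features and produce 1 output score (the text does not give these sizes):

```python
from torch import nn

def count_params(num_hidden, num_input=2, num_output=1):
    # One hidden layer: input -> hidden -> output (the activation has no parameters)
    layers = [nn.Linear(num_input, num_hidden), nn.Linear(num_hidden, num_output)]
    return sum(p.numel() for layer in layers for p in layer.parameters())

for h in (3, 6, 20):
    print(h, count_params(h))
# 3 13
# 6 25
# 20 81
```

With 2 inputs and 1 output, a hidden layer of h nodes gives 2h + h weights and biases in the first layer and h + 1 in the output layer, i.e. 4h + 1 parameters in total.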

The loss function of a neural network is generally non-convex. Networks with small capacity are more likely to get stuck in poor local minima and fail to reach the best result, and the loss values at these local minima vary widely: some minima are much better than others, so training the same network 10 times may give very different results. For networks with larger capacity, the variance of the loss at the local minima is small: repeated training runs may end in different local minima, but their losses differ very little, so the outcome does not depend so heavily on the random initialization.
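This claim can be probed empirically by training the same architecture from several random initializations and looking at the spread of the final losses. A minimal sketch; the circle-shaped dataset, the hidden sizes 2 and 20, the loss nn.BCEWithLogitsLoss, the learning rate and the step count are all assumptions. Whether the spread really shrinks for the larger network depends on these choices, so treat the printout as an experiment rather than a proof:

```python
import torch
from torch import nn

def make_data(n=200, seed=123):
    # Hypothetical 2-D binary classification data: label 1 inside the unit circle
    g = torch.Generator().manual_seed(seed)
    x = torch.randn(n, 2, generator=g)
    y = (x.pow(2).sum(dim=1, keepdim=True) < 1.0).float()
    return x, y

def final_loss(num_hidden, seed, x, y, steps=300):
    torch.manual_seed(seed)  # only the random initialization changes between runs
    net = nn.Sequential(nn.Linear(2, num_hidden), nn.Tanh(), nn.Linear(num_hidden, 1))
    criterion = nn.BCEWithLogitsLoss()
    optim = torch.optim.SGD(net.parameters(), lr=0.5)
    for _ in range(steps):
        loss = criterion(net(x), y)
        optim.zero_grad()
        loss.backward()
        optim.step()
    with torch.no_grad():
        return criterion(net(x), y).item()  # training loss after the last update

x, y = make_data()
spread = {}
for h in (2, 20):
    spread[h] = torch.tensor([final_loss(h, seed, x, y) for seed in range(10)])
    print('hidden={}: mean {:.4f}, std {:.4f}'.format(
        h, spread[h].mean().item(), spread[h].std().item()))
```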

Sequential and Module

**Sequential** lets us build a model as a sequence of modules. It is an ordered container: the modules are added to the computation graph in the order they are passed to the constructor. An ordered dictionary (OrderedDict) whose values are the modules can also be passed as the argument.

In short, Sequential stores the layers of the neural network in order.
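Passing an OrderedDict gives each layer a name while keeping the order. A minimal sketch; the layer names hidden, act and output are hypothetical:

```python
from collections import OrderedDict

import torch
from torch import nn

# Same structure as an index-based Sequential, but every layer has a name
seq_named = nn.Sequential(OrderedDict([
    ('hidden', nn.Linear(2, 4)),
    ('act', nn.Tanh()),
    ('output', nn.Linear(4, 1)),
]))

print(seq_named.hidden)  # access by name...
print(seq_named[0])      # ...or still by position
out = seq_named(torch.randn(3, 2))
print(out.shape)         # torch.Size([3, 1])
```

The names also become the prefixes of the keys in the model's state_dict (for example hidden.weight), which makes saved parameter files easier to read.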

```python
import torch
from torch import nn

# Sequential
seq_net = nn.Sequential(
    nn.Linear(2, 4),  # PyTorch linear layer: wx + b
    nn.Tanh(),
    nn.Linear(4, 1)
)

# Each layer of a Sequential module can be accessed by index
seq_net[0]  # the first layer
```

Output:

```
Linear(in_features=2, out_features=4)
```

```python
# Print the weights of the first layer
w0 = seq_net[0].weight
print(w0)
```

Result (printed by an older PyTorch version; the values come from random initialization):

```
Parameter containing:
-0.4964  0.3581
-0.0705  0.4262
 0.0601  0.1988
 0.6683 -0.4470
[torch.FloatTensor of size 4x2]
```

The model's parameters can be obtained with parameters() and passed directly to the constructor of an optimizer.
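To see what parameters() yields, named_parameters() lists each tensor together with its name. A minimal sketch that rebuilds the 2-4-1 network from above:

```python
import torch
from torch import nn

seq_net = nn.Sequential(nn.Linear(2, 4), nn.Tanh(), nn.Linear(4, 1))

# named_parameters() yields (name, tensor) pairs; Tanh has no parameters
for name, p in seq_net.named_parameters():
    print(name, tuple(p.shape))
# 0.weight (4, 2)
# 0.bias (4,)
# 2.weight (1, 4)
# 2.bias (1,)

# parameters() returns a generator that is exhausted after one pass,
# so it is simplest to create it right where the optimizer needs it
optim = torch.optim.SGD(seq_net.parameters(), lr=1.)
```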

```python
# parameters() returns the parameters of the model
param = seq_net.parameters()

# Define the optimizer (SGD, learning rate 1.0)
optim = torch.optim.SGD(param, 1.)

# Train for 10000 iterations
# x, y (training data) and criterion (loss function) are assumed to be defined earlier in this series
for e in range(10000):
    out = seq_net(x)
    loss = criterion(out, y)
    optim.zero_grad()
    loss.backward()
    optim.step()
    if (e + 1) % 1000 == 0:
        print('epoch: {}, loss: {}'.format(e + 1, loss.item()))
```

Result:

```
epoch: 1000, loss: 0.2839296758174896
epoch: 2000, loss: 0.2716798782348633
epoch: 3000, loss: 0.2647360861301422
epoch: 4000, loss: 0.26001378893852234
epoch: 5000, loss: 0.2566395103931427
epoch: 6000, loss: 0.2541380524635315
epoch: 7000, loss: 0.25222381949424744
epoch: 8000, loss: 0.2507193386554718
epoch: 9000, loss: 0.24951006472110748
epoch: 10000, loss: 0.2485194206237793
```

As you can see, after 10000 iterations the loss is lower than with our earlier hand-written implementation. This is because PyTorch's built-in modules are more stable than the ones we wrote ourselves.
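The loop above relies on x, y and criterion from earlier in this series. To run it on its own, here is a minimal sketch in which the data (two Gaussian blobs), the loss nn.BCEWithLogitsLoss, the learning rate 0.1 and the 1000 iterations are assumptions rather than the author's original setup, so the printed losses will differ from the ones above:

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical data: two Gaussian blobs labelled 0 and 1
n = 100
x = torch.cat([torch.randn(n, 2) + 2.0, torch.randn(n, 2) - 2.0])
y = torch.cat([torch.zeros(n, 1), torch.ones(n, 1)])

seq_net = nn.Sequential(nn.Linear(2, 4), nn.Tanh(), nn.Linear(4, 1))
criterion = nn.BCEWithLogitsLoss()  # assumed loss: sigmoid + binary cross-entropy on raw outputs
optim = torch.optim.SGD(seq_net.parameters(), lr=0.1)

losses = []
for e in range(1000):
    out = seq_net(x)
    loss = criterion(out, y)
    optim.zero_grad()
    loss.backward()
    optim.step()
    losses.append(loss.item())
    if (e + 1) % 200 == 0:
        print('epoch: {}, loss: {}'.format(e + 1, loss.item()))
```

Since PyTorch 0.4, tensors carry gradients themselves, so wrapping inputs in Variable is no longer needed, and loss.item() replaces loss.data[0] for reading a scalar loss.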

Saving the model

The parameters are w and b, and the model is the seq_net defined above.

Saving the parameters and the model together:

```python
# Save the parameters and the model together
torch.save(seq_net, 'save_seq_net.pth')
```

torch.save takes two arguments: the first is the object to save (here the whole model), the second is the path to save it to.

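Reading the saved model:

```python
# Read the saved model
# On PyTorch 2.6+, torch.load defaults to weights_only=True and refuses to unpickle a whole
# module; pass weights_only=False (available since PyTorch 1.13) for files you trust
seq_net1 = torch.load('save_seq_net.pth', weights_only=False)
```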
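A quick round trip confirms that saving the whole model preserves both the structure and the weights. A minimal sketch that writes to a temporary directory instead of the working directory, and assumes PyTorch 1.13 or later for the weights_only argument:

```python
import os
import tempfile

import torch
from torch import nn

seq_net = nn.Sequential(nn.Linear(2, 4), nn.Tanh(), nn.Linear(4, 1))

path = os.path.join(tempfile.mkdtemp(), 'save_seq_net.pth')
torch.save(seq_net, path)  # pickles the whole module: structure + parameters

# weights_only=False is needed on PyTorch 2.6+ to unpickle a whole module
seq_net1 = torch.load(path, weights_only=False)

x = torch.randn(3, 2)
print(torch.equal(seq_net(x), seq_net1(x)))  # True: same structure and weights
```

Because the whole object is pickled, loading it also requires the class definitions (here only built-in layers) to be importable, which is one reason this route is less portable.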

Saving only the model parameters:

```python
# Save the model parameters
torch.save(seq_net.state_dict(), 'save_seq_net_params.pth')
```

To read the parameters back in, we first have to redefine the model and then load the parameters into it, as follows:

```python
seq_net2 = nn.Sequential(
    nn.Linear(2, 4),
    nn.Tanh(),
    nn.Linear(4, 1)
)

# Load the parameters
seq_net2.load_state_dict(torch.load('save_seq_net_params.pth'))

seq_net2
```

Output:

```
Sequential(
  (0): Linear(in_features=2, out_features=4)
  (1): Tanh()
  (2): Linear(in_features=4, out_features=1)
)
```

```python
print(seq_net2[0].weight)
```

Output:

```
Parameter containing:
-0.5532  -1.9916
 0.0446   7.9446
10.3188 -12.9290
10.0688  11.7754
[torch.FloatTensor of size 4x2]
```

In this way we have read the same model back in: printing the parameters of the first layer shows the same values as with the first method. So there are two ways to save and read a model; the second (saving only the parameters) is recommended because it is more portable.
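The state_dict route can be checked the same way. A minimal sketch, assuming an in-memory io.BytesIO buffer in place of the file save_seq_net_params.pth; the helper make_net is hypothetical:

```python
import io

import torch
from torch import nn

def make_net():
    # The architecture must be rebuilt before a state_dict can be loaded into it
    return nn.Sequential(nn.Linear(2, 4), nn.Tanh(), nn.Linear(4, 1))

seq_net = make_net()
buffer = io.BytesIO()  # in-memory file; a path works the same way
torch.save(seq_net.state_dict(), buffer)
buffer.seek(0)

seq_net2 = make_net()  # freshly initialized, different random weights
seq_net2.load_state_dict(torch.load(buffer))

# Every parameter tensor now matches the saved model
same = all(torch.equal(a, b) for a, b in zip(seq_net.state_dict().values(),
                                              seq_net2.state_dict().values()))
print(same)  # True
```

A state_dict holds only tensors keyed by name, so it loads with the default weights_only=True of recent PyTorch versions and does not depend on pickling the model class.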

Module is a more flexible way to define a model. Below, the network defined above with Sequential is defined again with Module.

Template for defining a model with Module:

```python
class NetworkName(nn.Module):
    def __init__(self, some_parameters):
        super(NetworkName, self).__init__()
        # Define the network layers that will be used
        self.layer1 = nn.Linear(num_input, num_hidden)
        self.layer2 = nn.Sequential(...)
        ...

    def forward(self, x):  # Define forward propagation
        x1 = self.layer1(x)
        x2 = self.layer2(x)
        x = x1 + x2
        ...
        return x
```
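forward can express things a plain Sequential cannot, such as feeding the same input to two branches and adding their outputs, as the template does. A minimal runnable instance of the template; the class name TwoBranchNet and the choice of branches (a Linear layer and a Linear + ReLU stack) are hypothetical:

```python
import torch
from torch import nn

class TwoBranchNet(nn.Module):
    # Hypothetical instance of the template: two branches whose outputs are summed
    def __init__(self, num_input, num_hidden):
        super(TwoBranchNet, self).__init__()
        self.layer1 = nn.Linear(num_input, num_hidden)
        self.layer2 = nn.Sequential(
            nn.Linear(num_input, num_hidden),
            nn.ReLU(),
        )

    def forward(self, x):
        x1 = self.layer1(x)  # branch 1
        x2 = self.layer2(x)  # branch 2, same input x
        return x1 + x2       # both are (batch, num_hidden), so they can be added

net = TwoBranchNet(num_input=2, num_hidden=4)
x = torch.randn(5, 2)
out = net(x)
print(out.shape)  # torch.Size([5, 4])
```

The two branches must produce tensors of the same shape for the addition to work, which is why both map num_input to num_hidden.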

Example:

```python
import torch
from torch import nn

class module_net(nn.Module):
    def __init__(self, num_input, num_hidden, num_output):
        super(module_net, self).__init__()
        self.layer1 = nn.Linear(num_input, num_hidden)   # input -> hidden layer
        self.layer2 = nn.Tanh()                          # activation function
        self.layer3 = nn.Linear(num_hidden, num_output)  # hidden -> output layer; in_features must match layer1's out_features

    def forward(self, x):
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        return x

mo_net = module_net(2, 4, 1)  # 2 inputs, 4 hidden nodes, 1 output
```

A layer of the model can be accessed directly by its name:

```python
# Access a layer of the model directly by its attribute name
# First layer
l1 = mo_net.layer1
print(l1)
```

Output:

```
Linear(in_features=2, out_features=4)
```

```python
# Print the weights of the first layer
print(l1.weight)
```

Output (older PyTorch version; randomly initialized values):

```
Parameter containing:
 0.1492  0.4150
 0.3403 -0.4084
-0.3114 -0.0584
 0.5668  0.2063
[torch.FloatTensor of size 4x2]
```
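Because nn.Module registers every submodule assigned as an attribute, the layers can also be listed by name with named_children(). A minimal sketch that repeats the module_net definition so it runs on its own; the expected printout assumes a recent PyTorch version, which includes bias=True in the Linear repr:

```python
import torch
from torch import nn

class module_net(nn.Module):
    # Same network as above: 2 -> 4 -> 1 with a tanh activation
    def __init__(self, num_input, num_hidden, num_output):
        super(module_net, self).__init__()
        self.layer1 = nn.Linear(num_input, num_hidden)
        self.layer2 = nn.Tanh()
        self.layer3 = nn.Linear(num_hidden, num_output)

    def forward(self, x):
        return self.layer3(self.layer2(self.layer1(x)))

mo_net = module_net(2, 4, 1)

# Submodules are registered under their attribute names
for name, layer in mo_net.named_children():
    print(name, layer)
# layer1 Linear(in_features=2, out_features=4, bias=True)
# layer2 Tanh()
# layer3 Linear(in_features=4, out_features=1, bias=True)
```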

```python
# Define the optimizer
optim = torch.optim.SGD(mo_net.parameters(), 1.)

# Train for 10000 iterations
# x, y (training data) and criterion (loss function) are assumed to be defined earlier in this series
for e in range(10000):
    out = mo_net(x)
    loss = criterion(out, y)
    optim.zero_grad()
    loss.backward()
    optim.step()
    if (e + 1) % 1000 == 0:
        print('epoch: {}, loss: {}'.format(e + 1, loss.item()))
```

Output:

```
epoch: 1000, loss: 0.2618132531642914
epoch: 2000, loss: 0.2421271800994873
epoch: 3000, loss: 0.23346386849880219
epoch: 4000, loss: 0.22809192538261414
epoch: 5000, loss: 0.224302738904953
epoch: 6000, loss: 0.2214415818452835
epoch: 7000, loss: 0.21918588876724243
epoch: 8000, loss: 0.21736061573028564
epoch: 9000, loss: 0.21585838496685028
epoch: 10000, loss: 0.21460506319999695
```

```python
# Save the model (its parameters, via state_dict)
torch.save(mo_net.state_dict(), 'module_net.pth')
```
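The keys of a state_dict come from the attribute names (or, for Sequential, the indices) of the layers, which is why the parameters must be loaded into a model with the same structure. A minimal sketch showing the keys of both definitions and copying the Sequential weights into module_net; the rename mapping is a hypothetical helper, not a PyTorch API:

```python
import torch
from torch import nn

class module_net(nn.Module):
    def __init__(self, num_input, num_hidden, num_output):
        super(module_net, self).__init__()
        self.layer1 = nn.Linear(num_input, num_hidden)
        self.layer2 = nn.Tanh()
        self.layer3 = nn.Linear(num_hidden, num_output)

    def forward(self, x):
        return self.layer3(self.layer2(self.layer1(x)))

seq_net = nn.Sequential(nn.Linear(2, 4), nn.Tanh(), nn.Linear(4, 1))
mo_net = module_net(2, 4, 1)

print(list(seq_net.state_dict().keys()))  # ['0.weight', '0.bias', '2.weight', '2.bias']
print(list(mo_net.state_dict().keys()))   # ['layer1.weight', 'layer1.bias', 'layer3.weight', 'layer3.bias']

# Hypothetical mapping from Sequential indices to module_net attribute names
rename = {'0': 'layer1', '2': 'layer3'}
converted = {}
for key, value in seq_net.state_dict().items():
    index, param_name = key.split('.')  # e.g. '0', 'weight'
    converted[rename[index] + '.' + param_name] = value
mo_net.load_state_dict(converted)

x = torch.randn(3, 2)
print(torch.equal(seq_net(x), mo_net(x)))  # True: both definitions compute the same function
```

Loading seq_net's state_dict into mo_net without the renaming would fail with missing and unexpected keys under the default strict=True.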

Copyright notice

This article was written by [Zuo Xiaotian ^ o^]. Please include a link to the original when reposting. Thanks.

https://yzsam.com/2022/04/202204230543244247.html

边栏推荐

- Font shape `OMX/cmex/m/n‘ in size <10.53937> not available (Font) size <10.95> substituted.

- 字符串(String)笔记

- 多线程与高并发(1)——线程的基本知识(实现,常用方法,状态)

- Insert picture in freemark

- 图像恢复论文简记——Uformer: A General U-Shaped Transformer for Image Restoration

- Pytorch——数据加载和处理

- MySQL事务

- 你不能访问此共享文件夹,因为你组织的安全策略阻止未经身份验证的来宾访问

- 手动删除eureka上已经注册的服务

- JDBC工具类封装

猜你喜欢

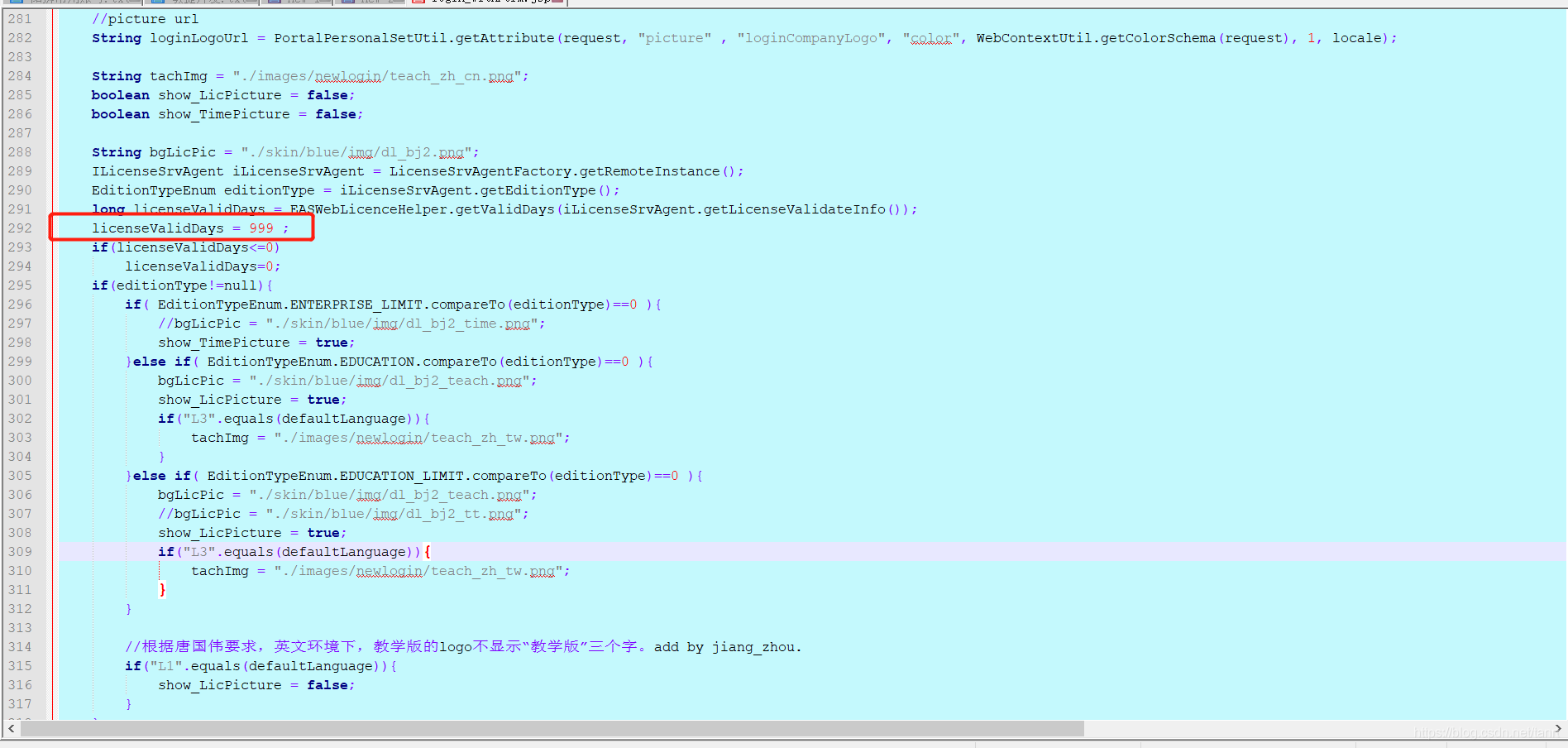

开发环境 EAS登录 license 许可修改

opensips(1)——安装opensips详细流程

LDCT图像重建论文——Eformer: Edge Enhancement based Transformer for Medical Image Denoising

手动删除eureka上已经注册的服务

PyQy5学习(三):QLineEdit+QTextEdit

图像恢复论文简记——Uformer: A General U-Shaped Transformer for Image Restoration

建表到页面完整实例演示—联表查询

Pytorch learning record (XII): learning rate attenuation + regularization

Multithreading and high concurrency (3) -- synchronized principle

Pytorch学习记录(十一):数据增强、torchvision.transforms各函数讲解

随机推荐

The official website of UMI yarn create @ umijs / UMI app reports an error: the syntax of file name, directory name or volume label is incorrect

opensips(1)——安装opensips详细流程

Postfix变成垃圾邮件中转站后的补救

mysql如何将存储的秒转换为日期

Pytorch学习记录(四):参数初始化

excel获取两列数据的差异数据

filebrowser实现私有网盘

Ptorch learning record (XIII): recurrent neural network

关于二叉树的遍历

Pyqy5 learning (III): qlineedit + qtextedit

MySQL lock mechanism

Pytorch——数据加载和处理

编程记录——图片旋转函数scipy.ndimage.rotate()的简单使用和效果观察

类的加载与ClassLoader的理解

Write your own redistemplate

CONDA virtual environment management (create, delete, clone, rename, export and import)

SQL基础:初识数据库与SQL-安装与基本介绍等—阿里云天池

Solution record of slow access speed of SMB service in redhat6

PyQy5学习(三):QLineEdit+QTextEdit

Pytorch learning record (IX): convolutional neural network in pytorch