当前位置:网站首页>Sklearn data preprocessing

Sklearn data preprocessing

2022-08-09 07:02:00 【Anakin6174】

sklearn.preprocessingThe package provides some common utility functions to preprocess the data.一般来说,Machine learning algorithms can achieve better results on preprocessed data.

1, 标准化

数据集的标准化是许多在scikit-learn中实现的Machine Learning Estimator的普遍要求.If the individual features look more or less like standard normally distributed data,then their performance may be poor:The mean and unit variance are zero高斯.

在实践中,We often ignore the shape of the distribution,Instead just transform the data to center it by removing the mean of each feature,It is then scaled by dividing the non-constant features by their standard deviation.

函数scaleProvides a quick and easy way to do this on a single array-like dataset;该preprocessingThe module also provides a utility classStandardScaler,The utility class 实现了TransformerAPI,to calculate the mean and standard deviation on the training set,To be able to reapply the same transformation on the test set later.

Another normalization method is to scale the features between a given minimum and maximum value,Usually between zero and one,Or scale the maximum absolute value of each feature to unit size.可以分别使用MinMaxScaler或MaxAbsScaler来实现.This method mainly targets sparse data,Because centralization destroys the properties of sparse data.

If your data contains many outliers,Then scaling using the mean and variance of the data may not work well.在这些情况下,您可以使用 robust_scale和RobustScaler作为替代产品.They use more reliable estimates of the center and extent of the data.

API接口: sklearn.preprocessing.robust_scale(X, axis=0, with_centering=True, with_scaling=True, quantile_range=(25.0, 75.0), copy=True)

2,非线性变换

有两种类型的转换:Quantile conversion and power conversion.Both quantile and power transformations are based on monotonic transformations of features,The ranks of the values along each feature are thus preserved.

Quantile transforms can smooth unusual distributions,And compared to the scaling method,Outliers have less influence.但是,It does distort correlations and distances within and between features.A power transformation is a set of parametric transformations,Aims to map data from any distribution to a near Gaussian distribution.

QuantileTransformer并quantile_transformProvides non-parametric conversions,to map data to values between0和1之间的均匀分布.

in many modeling scenarios,Normality of features in the dataset is required.Power transformations are a set of parameterizations,Monotonic transformation,Aims to map data from any distribution to as close as possible to a Gaussian distribution,to stabilize variance and minimize skew.'PowerTransformer` Two such power transformations are currently provided,即Yeo-Johnson变换和Box-Cox变换.

3, 归一化(normalization)

归一化是将A single sample is scaled to a process with unit norm.If you plan to use the dot product or any other quadratic form of the kernel to quantify the similarity of any pair of samples,then this procedure may be useful.该假设是向量空间模型的基础,该向量空间模型Often used in text classification and clustering contexts.

函数normalize提供了一种快速简便的方法,可以使用l1or或l2 nors Do this on a single array-like dataset;preprocessingThe module also provides a utility classNormalizer,The utility class 使用TransformerAPI 来实现相同的操作;

4, Encoding categorical features(encoding categorical features)

有时候,Features are not continuous values but categorical values,For example, people are divided into gendermale和femal;To convert categorical features to such integer codes,可以使用 OrdinalEncoder.This estimator converts each categorical feature to an integer(0到n_categories-1)的新特征.

但是,Such integer representations cannot be directly associated with allscikit-learn估计器一起使用,Because they expect continuous input,And will interpret the categories as ordered,This is usually not desired(That is, the set of browsers is arbitrarily ordered).Convert categorical features to scikit-learnAnother possibility for the features used with the estimator is to use K的一,Also known as one-hot or pseudo-encoding.can be used to obtain this type of encodingOneHotEncoder,it will come withn_categoriesPossible values for each categorical feature 转换为n_categories二进制特征,其中一个为1,所有其他为0.

5, 离散化(Discretization)

离散化 (Also known as quantization or consolidation)Provides a way to divide continuous features into discrete values.Certain datasets with continuous features may benefit from discretization,Because discretization can transform a dataset of continuous attributes into a dataset with only nominal attributes.

KBinsDiscretizerDiscretize the features to K 个bin中.

6,缺失值处理

Missing values can be used with before and after values,或均值,Or complement it as a constant1或0;Specifically combined with business processing;

7, 生成多项式特征(generating polynomial features)

通常,Taking into account the nonlinear characteristics of the input data increases the complexity of the model.Polynomial features are a simple and commonly used method,It can obtain higher order and interaction terms of features.它在PolynomialFeaturesThe following locations are implemented.

边栏推荐

- 字节也开始缩招了...

- mysql summary

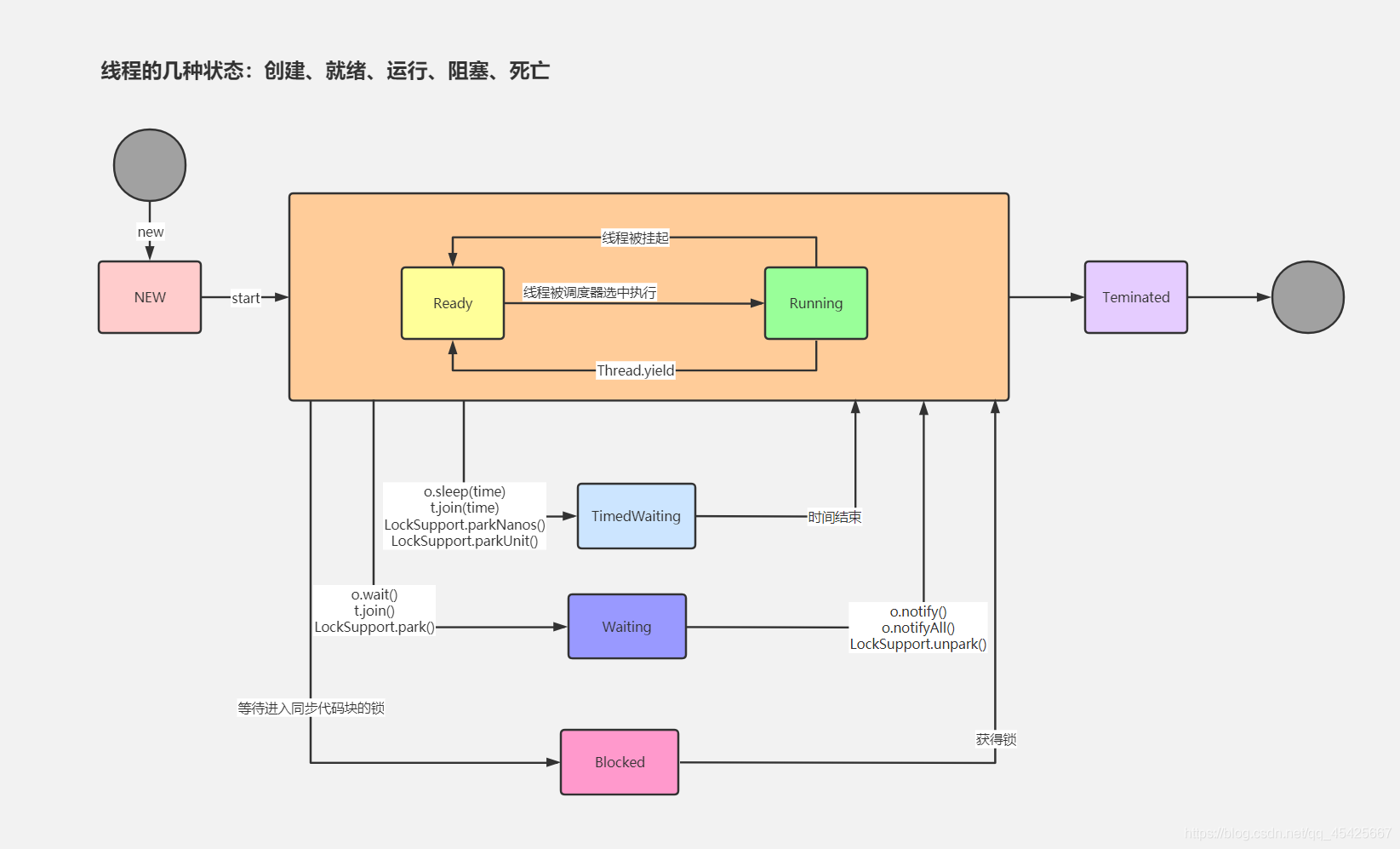

- The JVM thread state

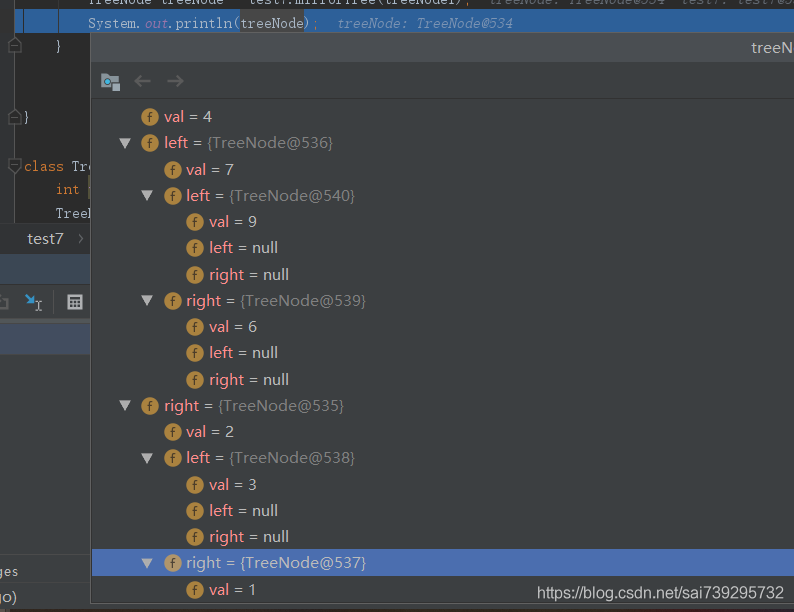

- 字节跳动面试题之镜像二叉树2020

- AD picture PCB tutorial 20 minutes clear label shop operation process, copper network

- 【Docker】Docker安装MySQL

- 常用测试用例设计方法之正交实验法详解

- 【修电脑】系统重装但IP不变后VScode Remote SSH连接失败解决

- 虚拟机网卡报错:Bringing up interface eth0: Error: No suitable device found: no device found for connection

- Transaction concluded

猜你喜欢

随机推荐

长沙学院2022暑假训练赛(一)六级阅读

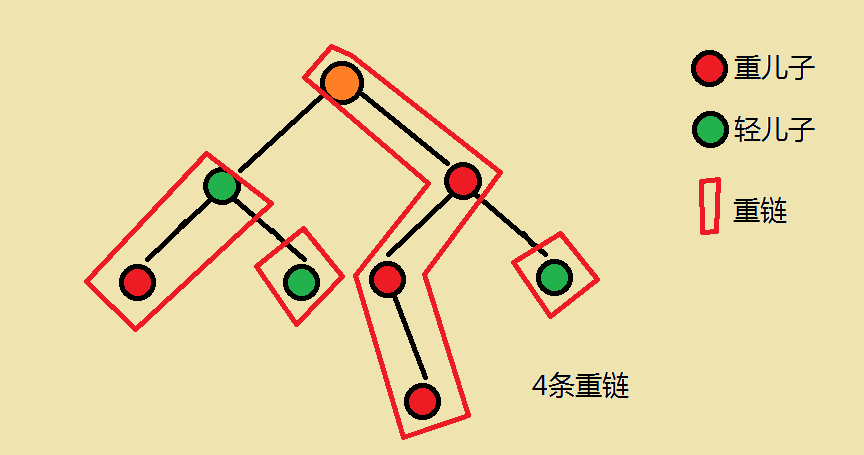

HDU - 3183 A Magic Lamp 线段树

The AD in the library of library file suffix. Intlib. Schlib. Pcblib difference

集合内之部原理总结

常用测试用例设计方法之正交实验法详解

高项 04 项目变更管理

bzoj 5333 [Sdoi2018]荣誉称号

shardingsphere data sharding configuration item description and example

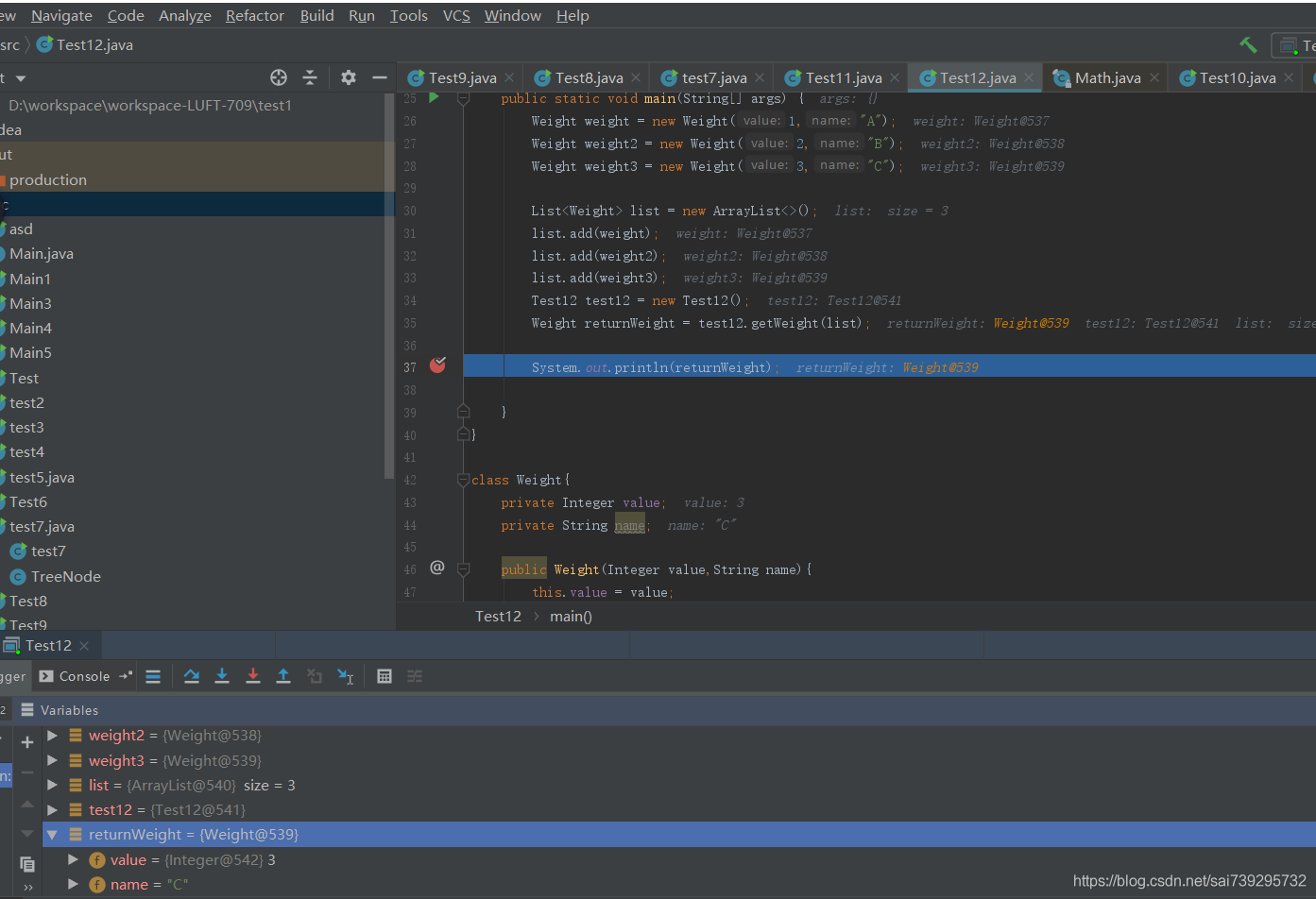

Variable used in lambda expression should be final or effectively final报错解决方案

SIGINT,SIGKILL,SIGTERM信号区别,各类信号总结

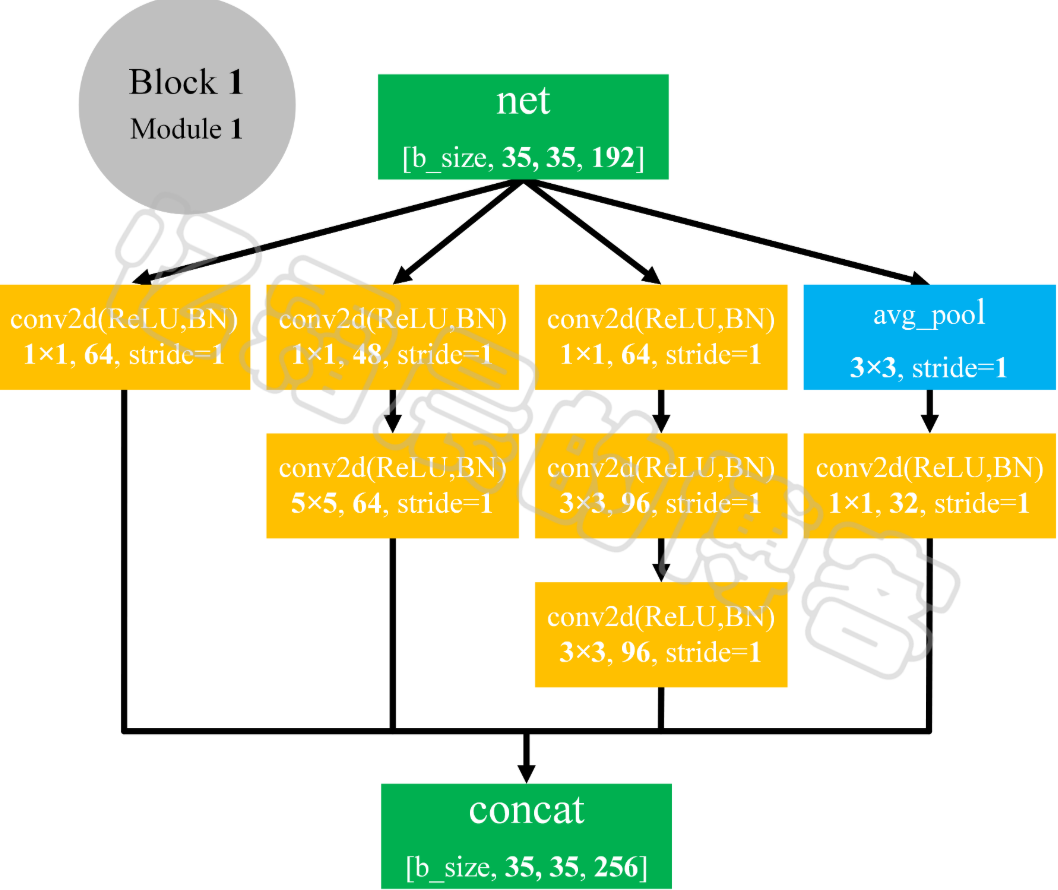

Inception V3 闭眼检测

日期处理,字符串日期格式转换

The Integer thread safe

XILINX K7 FPGA+RK3399 PCIE驱动调试

composer 内存不足够

Thread Pool Summary

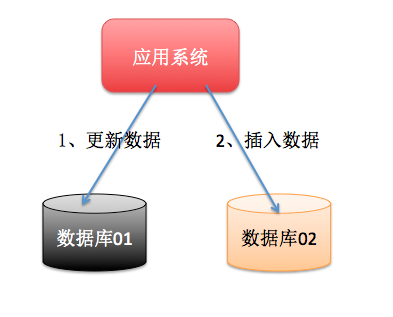

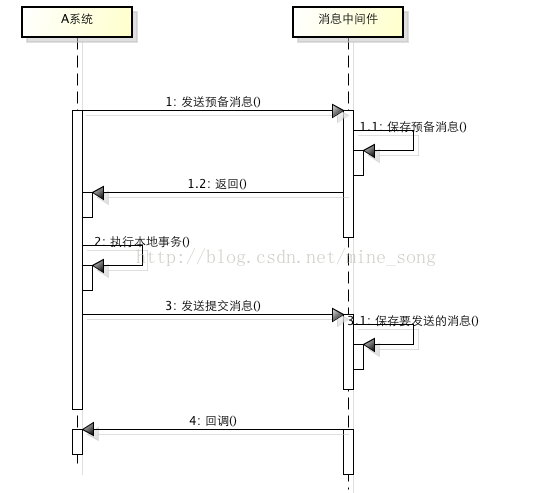

分布式事务的应用场景

eyb:Redis学习(2)

Simple Factory Pattern

线程池总结