当前位置:网站首页>16、 Anomaly detection

16、 Anomaly detection

2022-04-23 02:26:00 【Dragon Fly】

List of articles

1、 Anomaly detection (Anormly Detection) Introduce

\qquad Anomaly detection refers to a given set of unlabeled data sets { x ( 1 ) , x ( 2 ) , . . . , x ( m ) } \{x(1), x(2),..., x(m)\} {

x(1),x(2),...,x(m)}, Train a model for this data set p ( x ) p(x) p(x), To determine the similarity between a certain data and most data in the data set ( The probability that a data falls in the central area of a given data set ), If a data x t e s t x_{test} xtest Very similar to most given data , be p ( x t e s t ) ≥ ϵ p(x_{test})\geq\epsilon p(xtest)≥ϵ, It indicates that there is no obvious abnormality in the data ; otherwise p ( x t e s t ) < ϵ p(x_{test})<\epsilon p(xtest)<ϵ, Explain the data x t e s t x_{test} xtest And most of the data , It indicates that the given data may be an abnormal data .

\qquad That is, if a certain data does not fall within the range of most data , Then the probability of such data is relatively small , Treat the detected data as abnormal data .

2、 Anomaly detection algorithm

\qquad First, for m m m Select... From data samples n n n There are two features that may be abnormal x j , j ∈ n x_{j},j\in n xj,j∈n; Then calculate the mean value of each feature for all samples μ j , j ∈ n \mu_{j},j \in n μj,j∈n And variance σ j 2 , j ∈ n \sigma_{j}^2,j \in n σj2,j∈n; For a new given data x x x Calculation p ( x ) p(x) p(x), if p ( x ) < ϵ p(x)<\epsilon p(x)<ϵ, Then it is determined that the data is abnormal data . The flow of anomaly detection algorithm is as follows :

\qquad The visual representation of anomaly detection of two-dimensional features is as follows :

3、 Evaluate anomaly detection algorithms

\qquad The evaluation method of anomaly detection algorithm is illustrated by an example of aircraft engine anomaly detection :

\qquad First, divide the training data set into , Cross validation data sets and test data sets ; Divide a small part of the known abnormal data into cross validation data set and test data set , therefore CV set and test set It can be seen as having data with labels .

\qquad Then according to 2 The Gaussian model introduced in this paper uses the selected features to detect the anomaly of the training data set p ( x ) p(x) p(x) Fitting of ; Then the fitted model p ( x ) p(x) p(x) in the light of CV set Carry out model inspection , This test is similar to skewed data testing standard , Then according to the calculated F 1 F_1 F1 Value to determine the quality of the model , At the same time, you can adjust the selected feature types and parameters of the model ϵ \epsilon ϵ Value size . Finally, the trained model will use the test set test set To verify the quality of the model .

3.1 Use anomaly detection or supervised learning

\qquad Usually when the number of abnormal samples is small (e.g., 0-20), But when the number of normal samples is large , Suitable for anomaly detection ; At the same time, when the characteristics of the abnormality cannot be determined , Anomaly detection is usually used , Such as abnormal parts detection , Data center computer supervision ; When the number of normal samples and abnormal samples is large , The sample contains sufficient abnormal sample information , It is suitable to use supervised learning , Such as spam detection , Weather forecast , Disease detection, etc .

4、 Handle the feature vector of exception detection

\qquad To use gaussian Distribution to fit the anomaly detection model , It is necessary to ensure that the data distribution of the eigenvector satisfies the approximate Gaussian distribution , If the eigenvector of the initial data does not satisfy the Gaussian distribution , The data needs to be transformed , Make it approximately satisfy the Gaussian distribution , So that the algorithm can achieve better results . The processing method can take logarithm , take α ∈ ( 0 , 1 ) \alpha \in(0,1) α∈(0,1) Power, etc .

\qquad How to select features , Yes, you can first select some features , According to the training data, an anomaly detection model is trained , Then the model is validated on the cross validation data set , Increase or decrease the number of features by verifying the effect . meanwhile , Usually select those features with larger or smaller values at outliers .

5、 multivariate (multivariate) Gaussian distribution

\qquad If there is n Dimension eigenvector x ∈ R n x \in R^n x∈Rn, Multivariate Gaussian distribution does not fit each one-dimensional eigenvector separately p ( x 1 ) p(x_1) p(x1) and p ( x 2 ) p(x_2) p(x2), Instead, all eigenvectors are fitted into a probability function p ( x ) p(x) p(x). The set form Gaussian anomaly detection model needs to use parameters μ ∈ R n \mu \in R^n μ∈Rn, Σ ∈ R ( n ∗ n ) ( Association Fang Bad Moment front ) \Sigma \in R^{(n*n)}( Covariance matrix ) Σ∈R(n∗n)( Association Fang Bad Moment front ), be p ( x ; μ , Σ ) = 1 ( 2 π ) n 2 ∣ Σ ∣ 1 2 e x p − 1 2 ( x − μ ) T Σ − 1 ( x − μ ) p(x;\mu, \Sigma)=\frac{1}{(2\pi)^\frac{n}{2}|\Sigma|^{\frac{1}{2}}}exp^{-\frac{1}{2}(x-\mu)^T\Sigma^{-1}(x-\mu)} p(x;μ,Σ)=(2π)2n∣Σ∣211exp−21(x−μ)TΣ−1(x−μ)

\qquad Several images with multivariate Gaussian distribution μ \mu μ and Σ \Sigma Σ The changes are as follows :

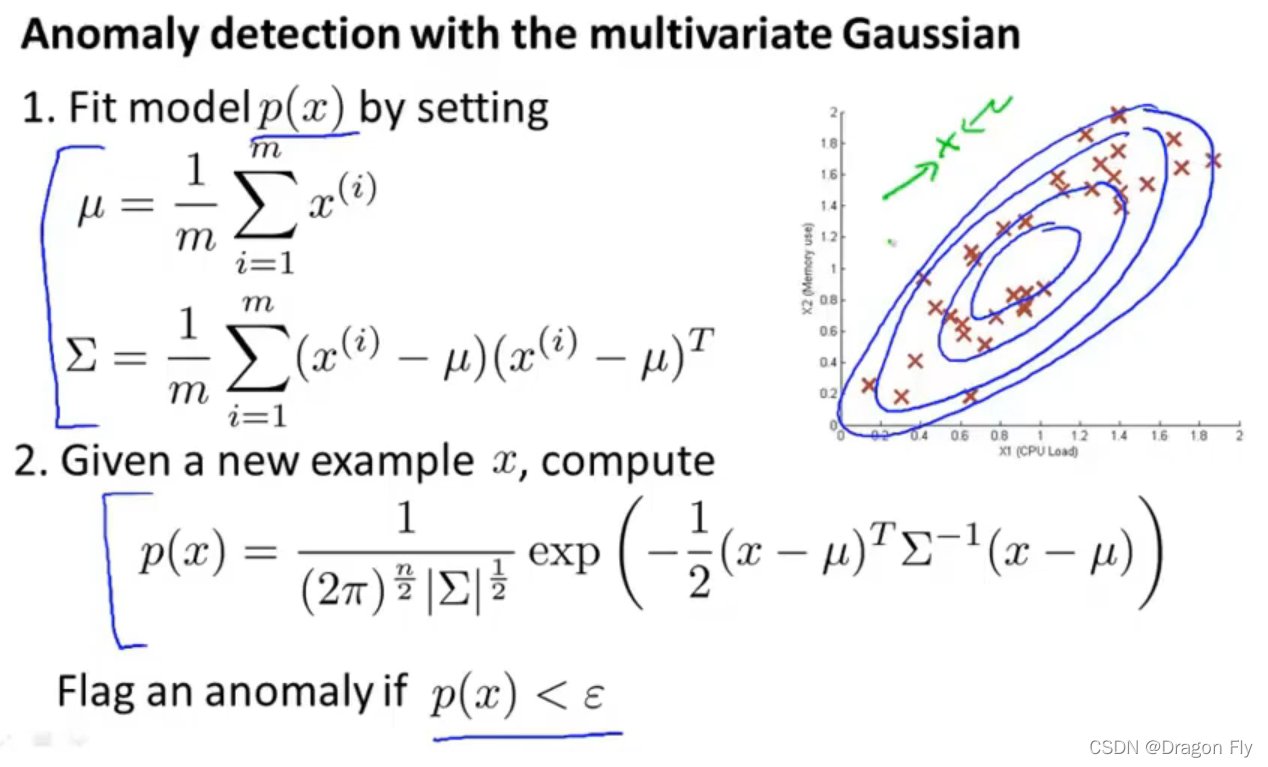

5.1 Using multivariate Gaussian distribution to develop anomaly detection model

\qquad Given training set x ( 1 ) , x ( 2 ) , . . . , x ( m ) x^{(1)},x^{(2)},...,x^{(m)} x(1),x(2),...,x(m), The method of constructing anomaly detection model using multivariate Gaussian distribution is as follows :

5.2 The difference between original model and multivariate Gaussian distribution model

\qquad Set the covariance matrix of multivariate Gaussian distribution model to... Except diagonal elements 0 after , Multivariate Gaussian model is the original model .

5.3 Choose to use the original model / Multivariate Gaussian model

\qquad The original model needs to manually select the combined values between some features , However, multivariate Gaussian model can automatically capture the relationship between features ; The original model is more efficient than multivariate Gaussian model ; Multivariate Gaussian model must meet the number of training data m m m Greater than the number of features n n n, In this way, the covariance matrix Σ \Sigma Σ Is reversible .

\qquad The covariance matrix is irreversible in the following two cases : If the number of training data in the training set is less than the number of features ; If there is a linear correlation between features , That is, there are redundant features .

THE END

版权声明

本文为[Dragon Fly]所创,转载请带上原文链接,感谢

https://yzsam.com/2022/04/202204230222184783.html

边栏推荐

- Common formatting problems after word writing

- The importance of ERP integration to the improvement of the company's system

- 【无标题】

- New book recommendation - IPv6 technology and application (Ruijie version)

- Program design: l1-49 ladder race, allocation of seats (simulation), Buxiang pill hot

- 010_ StringRedisTemplate

- The 16th day of sprint to the big factory, noip popularization Group Three Kingdoms game

- Dynamic memory management

- 一、序列模型-sequence model

- How many steps are there from open source enthusiasts to Apache directors?

猜你喜欢

010_StringRedisTemplate

Open3d point cloud processing

This is how the power circuit is designed

Halo open source project learning (I): project launch

Dynamic batch processing and static batch processing of unity

Latin goat (20204-2022) - daily question 1

001_redis设置存活时间

Network jitter tool clumsy

![Handwritten memory pool and principle code analysis [C language]](/img/9e/fdddaa628347355b9bcf9780779fa4.png)

Handwritten memory pool and principle code analysis [C language]

Usage of vector common interface

随机推荐

Go language ⌈ mutex and state coordination ⌋

SQL server2019无法下载所需文件,这可能表示安装程序的版本不再受支持,怎么办了

每日一题(2022-04-21)——山羊拉丁文

Latin goat (20204-2022) - daily question 1

Global, exclusive and local routing guard

Flink real-time data warehouse project - Design and implementation of DWS layer

On LAN

012_ Access denied for user ‘root‘@‘localhost‘ (using password: YES)

校园转转二手市场源码

Unicorn bio raised $3.2 million to turn prototype equipment used to grow meat into commercial products

中金财富跟中金公司是一家公司吗,安全吗

They are all intelligent in the whole house. What's the difference between aqara and homekit?

go语言打怪通关之 ⌈互斥锁和状态协程⌋

PTA: praise the crazy devil

013_基于Session实现短信验证码登录流程分析

Day18 -- stack queue

LeetCode 349. Intersection of two arrays (simple, array) Day12

006_ redis_ Sortedset type

Program design: l1-49 ladder race, allocation of seats (simulation), Buxiang pill hot

007_ Redis_ Jedis connection pool