Pytorch learning record (7): skills in processing data and training models

2022-04-23 05:54:00 【Zuo Xiaotian ^ o^】

Good data preprocessing and parameter initialization let you get twice the result with half the effort, and a few training techniques can help a model reach state-of-the-art results. This section covers techniques for processing data and training models.

Data preprocessing

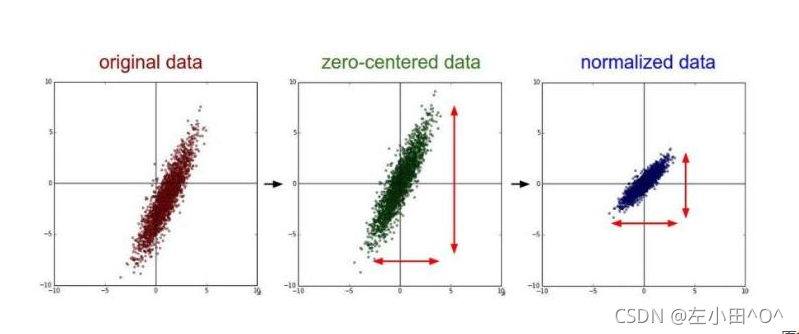

1. Centering

Centering subtracts the corresponding mean from each feature dimension, so that the data has zero mean. For image data in particular, it is common, for convenience, to subtract the same value from all of the data.

What is zero mean

In deep learning, the training images fed to a network are usually preprocessed, and the most common method is zero-mean centering: the pixel value range is shifted from [0, 255] to [-128, 127], so that it is centered at 0.

Effect

The advantage is that it speeds up the convergence of each layer's weights during backpropagation.

It also avoids zig-zag updates. When every input to a layer is positive, the gradients of all weights of a neuron share the same sign, so those weights can only increase or decrease together. Zero-centered inputs remove this constraint, which speeds up the convergence of the network.
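The two centering variants described above can be sketched with NumPy. This is a minimal illustration, not the author's code: the toy image batch, its shape, and the variable names are all assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical toy batch: 4 RGB images of 8x8 pixels with 8-bit values in [0, 255].
images = rng.integers(0, 256, size=(4, 8, 8, 3)).astype(np.float32)

# Variant A: subtract one constant from everything, mapping [0, 255] to [-128, 127].
centered_const = images - 128.0

# Variant B: subtract the mean of each feature dimension (here each pixel position
# and channel), computed over the batch axis.
per_feature_mean = images.mean(axis=0)
centered = images - per_feature_mean

print(centered_const.min(), centered_const.max())  # stays inside [-128, 127]
print(np.abs(centered.mean(axis=0)).max())         # close to 0
```

Variant A is the cheap shortcut often used for images; Variant B is the per-feature definition of centering.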

2. Standardization

After the data has been made zero-mean, standardization is used to give the different feature dimensions the same scale.

There are two common methods. One is to divide by the standard deviation, so that every dimension of the new data has unit variance and its distribution is close to a standard Gaussian. The other is to rescale each feature dimension so that its minimum and maximum map to -1 and 1.
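Both standardization methods can be sketched in a few lines of NumPy. The data below is synthetic, and the small epsilon added to the standard deviation is an assumption to guard against division by zero; neither comes from the original article.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical data: 200 samples, 3 features with very different scales.
X = rng.normal(loc=[5.0, -2.0, 100.0], scale=[1.0, 10.0, 0.1], size=(200, 3))

# Method 1: zero mean, then divide by the per-feature standard deviation.
mean = X.mean(axis=0)
std = X.std(axis=0)
X_std = (X - mean) / (std + 1e-8)  # epsilon guards against division by zero

# Method 2: rescale each feature so its minimum maps to -1 and its maximum to 1.
x_min = X.min(axis=0)
x_max = X.max(axis=0)
X_minmax = 2.0 * (X - x_min) / (x_max - x_min) - 1.0

print(X_std.std(axis=0))                            # each close to 1
print(X_minmax.min(axis=0), X_minmax.max(axis=0))   # -1 and 1 per feature
```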

3. PCA (principal component analysis)

For a detailed explanation, see https://blog.csdn.net/program_developer/article/details/80632779

4. Whitening

Whitening first projects the data into the eigenbasis, as in PCA, and then divides each dimension by the square root of its eigenvalue (its standard deviation along that direction) to normalize the scale. Intuitively, a multivariate Gaussian distribution is transformed into a multivariate Gaussian with zero mean and an identity covariance matrix.
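A minimal NumPy sketch of PCA followed by whitening, under the assumption of synthetic correlated 2-D data. The mixing matrix `A` and the `1e-5` stabilizer are illustrative choices, not values from the article.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical correlated 2-D Gaussian data.
A = np.array([[2.0, 0.0], [1.5, 0.5]])
X = rng.normal(size=(5000, 2)) @ A.T

X = X - X.mean(axis=0)                   # centering comes first
cov = X.T @ X / X.shape[0]               # covariance matrix of the features
eigvals, eigvecs = np.linalg.eigh(cov)   # eigendecomposition (cov is symmetric)

X_rot = X @ eigvecs                          # PCA: project onto the eigenbasis
X_white = X_rot / np.sqrt(eigvals + 1e-5)    # whitening: unit variance per dimension

cov_white = X_white.T @ X_white / X_white.shape[0]
print(np.round(cov_white, 3))  # approximately the identity matrix
```

Dividing by the square root of the eigenvalue is what makes each rotated dimension have unit variance, since the eigenvalue is the variance along that direction.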

In practice, centering and standardization are the most important steps. Statistics such as the mean are computed on the training set only and are then applied to the validation and test sets. PCA and whitening, however, are generally not used with convolutional networks, because the network can learn to extract such features by itself without manual intervention.
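The rule of computing statistics on the training set and reusing them elsewhere can be sketched as follows. The splits are synthetic and `standardize` is a hypothetical helper name introduced only for this illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical splits drawn from the same distribution.
X_train = rng.normal(loc=3.0, scale=2.0, size=(1000, 4))
X_val = rng.normal(loc=3.0, scale=2.0, size=(200, 4))
X_test = rng.normal(loc=3.0, scale=2.0, size=(200, 4))

# Statistics come from the training set only.
train_mean = X_train.mean(axis=0)
train_std = X_train.std(axis=0)

def standardize(x):
    """Apply the training-set mean and standard deviation to any split."""
    return (x - train_mean) / train_std

X_train_n = standardize(X_train)
X_val_n = standardize(X_val)    # not exactly zero-mean, and that is expected
X_test_n = standardize(X_test)
```

The validation and test sets end up only approximately standardized, which is the intended behavior: recomputing statistics on them would leak information the model will not have at inference time.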

Weight initialization

For a detailed explanation of initialization methods, see https://zhuanlan.zhihu.com/p/72374385

Preprocess parameters

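1. All-zero initialization

Initializing all parameters to 0 must be avoided. If every weight is the same, every neuron in a layer computes the same output and receives the same gradient, so the neurons stay identical and the symmetry is never broken.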
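The symmetry problem can be observed directly in PyTorch. The sketch below assumes a hypothetical tiny network (4 inputs, 3 hidden units, 1 output) and random toy data. With all-zero weights, every hidden unit receives the same gradient, and in this setup that gradient is in fact zero.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
# Hypothetical tiny network: 4 inputs -> 3 hidden units -> 1 output.
model = nn.Sequential(nn.Linear(4, 3), nn.Tanh(), nn.Linear(3, 1))
for p in model.parameters():
    nn.init.zeros_(p)  # all-zero initialization (not recommended)

x = torch.randn(16, 4)
y = torch.randn(16, 1)
loss = nn.functional.mse_loss(model(x), y)
loss.backward()

w1_grad = model[0].weight.grad  # shape (3, 4): one row per hidden unit
# Every hidden unit receives the same gradient, so the units can never diverge.
rows_identical = bool(torch.all(w1_grad == w1_grad[0]))
print(rows_identical)
```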

2. Random initialization

We want the initial weights to be as close to 0 as possible, but they cannot all be equal to 0. The weights can therefore be initialized to small random numbers close to 0, which breaks the symmetry. Common strategies are Gaussian randomization and uniform randomization. Note that smaller random values do not necessarily give better results: the gradient that flows back through a layer is proportional to the size of its weights, so very small initial weights produce very small gradients. This greatly weakens the gradient signal and becomes a hidden danger when training neural networks.
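A short PyTorch sketch of Gaussian and uniform initialization, followed by a small experiment on how the initialization scale affects the gradient signal. The layer sizes, the `std` values, the uniform bounds, and the helper `input_grad_norm` are all illustrative assumptions rather than recommended settings.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
layer = nn.Linear(256, 128)
# Gaussian randomization: small values around 0 (std=0.01 is an illustrative choice).
nn.init.normal_(layer.weight, mean=0.0, std=0.01)

other = nn.Linear(256, 128)
# Uniform randomization in a small symmetric interval (bounds are illustrative).
nn.init.uniform_(other.weight, a=-0.05, b=0.05)

def input_grad_norm(std):
    """Gradient norm reaching the input of a 5-layer linear stack initialized with `std`."""
    torch.manual_seed(0)
    layers = [nn.Linear(64, 64, bias=False) for _ in range(5)]
    for lin in layers:
        nn.init.normal_(lin.weight, mean=0.0, std=std)
    x = torch.randn(8, 64, requires_grad=True)
    out = nn.Sequential(*layers)(x)
    out.sum().backward()
    return x.grad.norm().item()

# Smaller initial weights give a much weaker gradient signal at the input.
print(input_grad_norm(0.1), input_grad_norm(0.001))
```

Because the gradient passes through every weight matrix on its way back, shrinking the weights shrinks the gradient once per layer, which is why the effect compounds with depth.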

3. Sparse initialization

Sparse initialization sets all weights to 0 and then, to break the symmetry, randomly selects some of them and assigns random values. The advantage is that the parameters take less memory because most of them are 0, but the method is rarely used in practice.
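PyTorch ships a helper for this, `torch.nn.init.sparse_`, which works on 2-D tensors. The sketch below uses it with an assumed tensor shape and an assumed sparsity of 0.9. Internally it fills the tensor with Gaussian values and then zeroes a fraction of each column, which gives the same end result as the description above.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
weight = torch.empty(100, 20)
# sparsity=0.9: about 90% of the entries in each column are set to 0;
# the remaining ones are drawn from a normal distribution with std=0.01.
nn.init.sparse_(weight, sparsity=0.9, std=0.01)

zero_fraction = (weight == 0).float().mean().item()
zeros_per_column = (weight == 0).sum(dim=0)
print(zero_fraction)
```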

4. Bias initialization

The bias is usually initialized to 0. Because the weights already break the symmetry, initializing the bias to 0 is the simplest choice.
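In PyTorch this amounts to one call per layer. The layer size and the weight `std` below are assumptions chosen only for the example.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
layer = nn.Linear(8, 4)
nn.init.normal_(layer.weight, mean=0.0, std=0.01)  # random weights break the symmetry
nn.init.zeros_(layer.bias)                          # the bias simply starts at 0

print(layer.bias)
```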

5. Batch normalization

The core idea of batch normalization is that standardization is a differentiable operation, so it can be applied inside every layer of the network during both forward and backward propagation. This reduces many of the problems caused by poor initialization. Batch normalization is usually placed after a fully connected layer and before the nonlinearity.
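The placement described above (fully connected layer, then batch normalization, then the nonlinearity) can be sketched with `nn.BatchNorm1d`. The layer sizes and the deliberately badly scaled input are assumptions made for the illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
# Hypothetical layer sizes; the order is fully connected -> batch norm -> nonlinearity.
model = nn.Sequential(
    nn.Linear(20, 50),
    nn.BatchNorm1d(50),
    nn.ReLU(),
    nn.Linear(50, 10),
)

x = torch.randn(32, 20) * 5.0 + 3.0  # deliberately badly scaled input
model.train()                        # in training mode, batch statistics are used
pre_activation = model[1](model[0](x))
output = model(x)

print(pre_activation.mean(dim=0).abs().max().item())            # close to 0
print(pre_activation.var(dim=0, unbiased=False).mean().item())  # close to 1
```

In evaluation mode (`model.eval()`), the layer switches to the running statistics accumulated during training instead of the statistics of the current batch.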

Preventing overfitting

1. Regularization

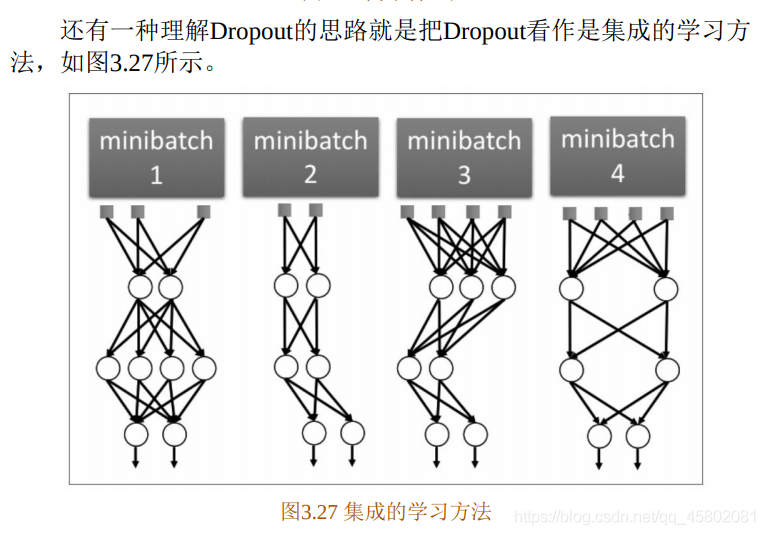

2. Dropout

Copyright notice

This article was written by [Zuo Xiaotian ^ o^]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204230543244206.html

边栏推荐

- Latex quick start

- 解决报错:ImportError: IProgress not found. Please update jupyter and ipywidgets

- RedHat realizes keyword search in specific text types under the directory and keyword search under VIM mode

- Common status codes

- Dva中在effects中获取state的值

- Pytorch学习记录(十一):数据增强、torchvision.transforms各函数讲解

- 线程的底部实现原理—静态代理模式

- Traitement des séquelles du flux de Tensor - exemple simple d'enregistrement de torche. Utils. Données. Dataset. Problème de dimension de l'image lors de la réécriture de l'ensemble de données

- 图解HashCode存在的意义

- Pytorch学习记录(十):数据预处理+Batch Normalization批处理(BN)

猜你喜欢

Ptorch learning record (XIII): recurrent neural network

JVM系列(3)——内存分配与回收策略

框架解析1.系统架构简介

一文读懂当前常用的加密技术体系(对称、非对称、信息摘要、数字签名、数字证书、公钥体系)

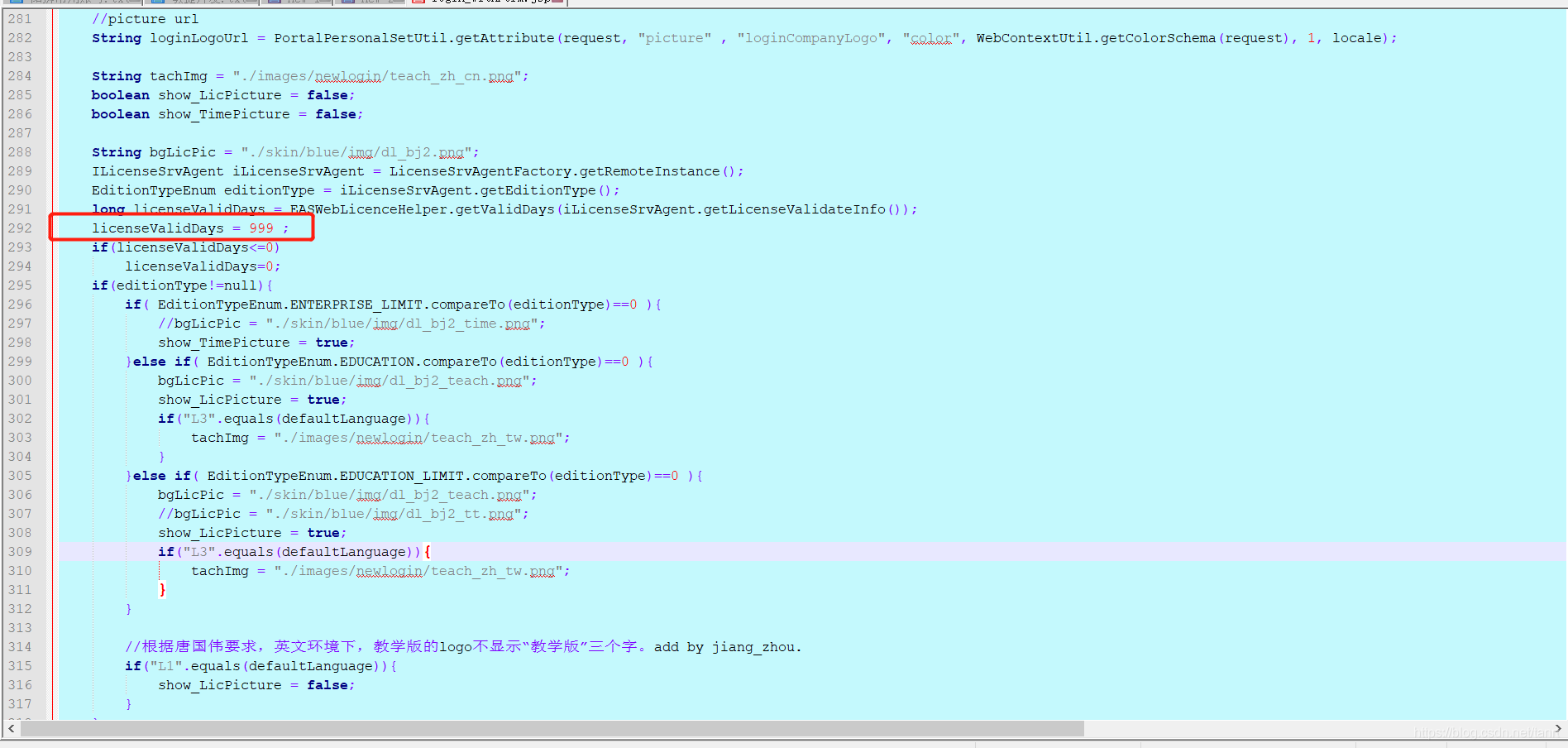

Development environment EAS login license modification

容器

sklearn之 Gaussian Processes

建表到页面完整实例演示—联表查询

PyQy5学习(四):QAbstractButton+QRadioButton+QCheckBox

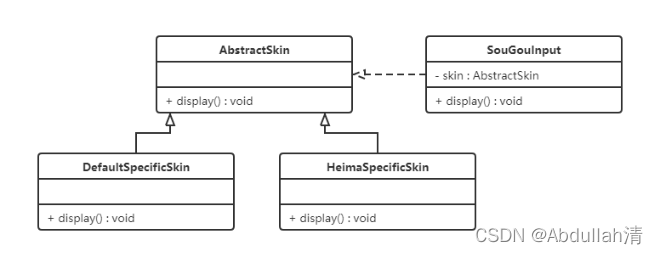

2 - software design principles

随机推荐

ValueError: loaded state dict contains a parameter group that doesn‘t match the size of optimizer‘s

线性规划问题中可行解,基本解和基本可行解有什么区别?

lambda表达式

Pytorch learning record (XII): learning rate attenuation + regularization

开发环境 EAS登录 license 许可修改

一文读懂当前常用的加密技术体系(对称、非对称、信息摘要、数字签名、数字证书、公钥体系)

Pytorch学习记录(七):处理数据和训练模型的技巧

Excel obtains the difference data of two columns of data

SQL注入

DBCP使用

MySQL realizes master-slave replication / master-slave synchronization

常用编程记录——parser = argparse.ArgumentParser()

图解numpy数组矩阵

Latex快速入门

JVM系列(3)——内存分配与回收策略

MySQL triggers, stored procedures, stored functions

图解HashCode存在的意义

Pytorch学习记录(十一):数据增强、torchvision.transforms各函数讲解

interviewter:介绍一下MySQL日期函数

多线程与高并发(2)——synchronized用法详解