
Variational Inference with Normalizing Flows

2022-08-08 06:50:00 【Chen 1】

Introduction

- Variational inference for large datasets: intractable posterior distributions are approximated by a class of known probability distributions.

- Development: variational inference now lies at the core of large-scale topic models of text (Hoffman et al., 2013), underpins semi-supervised classification, drives the models that currently produce the most realistic images, and is a default tool for studying many physical and chemical systems. However, the limited choice of posterior approximation still restricts its wider adoption.

- Mean field: the variational density is assumed to factorize into a product of independent variational densities, one for each random variable (parameter).

- Drawbacks: mean-field approximations underestimate the posterior variance, and the limitations of the posterior approximation bias the MAP (maximum a posteriori) estimates.

- Richer approximations have been proposed, such as Deep Autoregressive Networks (DARN).

- Mixture models are another option, but their computational cost limits scalability.

Amortized Variational Inference

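- Given observed variables $x$, latent variables $z$ and model parameters $\theta$, introduce an approximate posterior $q_\phi(z|x)$ and apply the variational principle to obtain a bound on the marginal likelihood, usually referred to as the negative free energy $\mathcal{F}$ or the ELBO. Bound (3) is the objective: $\log p_\theta(x) \ge \mathbb{E}_{q}[\log p_\theta(x|z)] - \mathbb{D}_{KL}[q_\phi(z|x)\,\|\,p(z)] = -\mathcal{F}(x)$.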

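- Current best practice for large datasets is mini-batch variational inference optimized with stochastic gradient descent. Two problems mainly need to be solved:

- Efficient computation of the derivatives of the expected log-likelihood, $\nabla_\phi \mathbb{E}_{q_\phi(z)}[\log p_\theta(x|z)]$

- Choosing the richest approximate posterior that is still computationally tractable (the focus of this article)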

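As a minimal illustration of bound (3), the sketch below estimates $-\mathcal{F}(x)$ by Monte Carlo for a hypothetical one-dimensional model, assuming $p(z) = \mathcal{N}(0, 1)$, $p(x|z) = \mathcal{N}(x|z, 1)$ and a Gaussian $q(z|x) = \mathcal{N}(m, s^2)$; the function names are illustrative only. For this toy model the true posterior is $\mathcal{N}(x/2, 1/2)$ and the exact evidence is $p(x) = \mathcal{N}(x|0, 2)$, so the bound can be checked directly.

```python
import math
import random

def log_normal(x, mean, var):
    """Log density of the univariate Gaussian N(x | mean, var)."""
    return -0.5 * (math.log(2.0 * math.pi * var) + (x - mean) ** 2 / var)

def elbo_estimate(x, m, s, n_samples=10_000, seed=0):
    """Monte Carlo estimate of -F(x) = E_q[log p(x, z) - log q(z|x)] for the
    toy model p(z) = N(0, 1), p(x|z) = N(x | z, 1) and q(z|x) = N(m, s^2)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        z = m + s * rng.gauss(0.0, 1.0)                      # sample from q
        log_joint = log_normal(z, 0.0, 1.0) + log_normal(x, z, 1.0)
        total += log_joint - log_normal(z, m, s ** 2)
    return total / n_samples

x = 1.2
log_evidence = log_normal(x, 0.0, 2.0)                       # exact log p(x)
tight = elbo_estimate(x, m=x / 2, s=math.sqrt(0.5))          # q = true posterior
loose = elbo_estimate(x, m=-1.0, s=1.0)                      # a poor q
```

When $q$ equals the true posterior, every sample yields exactly $\log p(x)$, so `tight` matches `log_evidence`; for the poor $q$, the estimate falls below the evidence by roughly the KL divergence to the posterior.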

Stochastic Backpropagation

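- Reparameterization: the latent variables are reparameterized using a known base distribution and a differentiable transformation (such as a location-scale transformation or a cumulative distribution function), e.g. $z \sim \mathcal{N}(z|\mu, \sigma^2) \Leftrightarrow z = \mu + \sigma\epsilon,\ \epsilon \sim \mathcal{N}(0, 1)$.

- Backpropagation with Monte Carlo: gradients with respect to the parameters of the variational distribution are obtained by differentiating a Monte Carlo approximation built from draws of the base distribution: $\nabla_\phi \mathbb{E}_{q_\phi(z)}[f_\theta(z)] \Leftrightarrow \mathbb{E}_{\mathcal{N}(\epsilon|0,1)}[\nabla_\phi f_\theta(\mu + \sigma\epsilon)]$.

- Monte Carlo control variate (MCCV) estimators exist as an alternative to stochastic backpropagation and allow the latent variables to be continuous or discrete. For models with continuous latent variables, however, stochastic backpropagation has the lowest variance among competing estimators.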

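The reparameterized Monte Carlo gradient can be sketched in plain Python. This assumes a hypothetical test function $f(z) = z^2$ under $q = \mathcal{N}(\mu, \sigma^2)$, chosen because $\mathbb{E}[z^2] = \mu^2 + \sigma^2$ gives the exact gradients $2\mu$ and $2\sigma$ for comparison; the chain rule is applied by hand instead of with an autodiff library.

```python
import random

def reparam_grad(mu, sigma, n_samples=100_000, seed=0):
    """Monte Carlo estimate of the gradient of E_{z ~ N(mu, sigma^2)}[f(z)]
    with respect to (mu, sigma) for f(z) = z**2, using z = mu + sigma * eps."""
    rng = random.Random(seed)
    g_mu = 0.0
    g_sigma = 0.0
    for _ in range(n_samples):
        eps = rng.gauss(0.0, 1.0)   # draw from the base distribution N(0, 1)
        z = mu + sigma * eps        # location-scale transformation
        df_dz = 2.0 * z             # f'(z) for f(z) = z**2
        g_mu += df_dz               # chain rule: dz/dmu = 1
        g_sigma += df_dz * eps      # chain rule: dz/dsigma = eps
    return g_mu / n_samples, g_sigma / n_samples

g_mu, g_sigma = reparam_grad(mu=1.5, sigma=0.5)
# Exact values: E[z^2] = mu^2 + sigma^2, so the gradients are 2*mu = 3.0 and 2*sigma = 1.0.
```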

Inference Networks: a model that learns the inverse mapping from observations to latent variables

- The approximate posterior is represented by a recognition model or inference network $q_\phi(z|x)$. With this, only a single set of global variational parameters $\phi$ has to be learned, and it is valid at both training and test time.

- Taking the Gaussian density as an example: $q_\phi(z|x) = \mathcal{N}(z|\mu_\phi(x), \mathrm{diag}(\sigma_\phi^2(x)))$,

where the mean function $\mu_\phi(x)$ and the standard deviation function $\sigma_\phi(x)$ are specified by deep neural networks.

- Deep Latent Gaussian Models (DLGMs): deep directed graphical models with $L$ layers of Gaussian latent variables $z_l$, one set per layer.

Each layer of latent variables depends nonlinearly on the layer above, with the nonlinear dependencies specified by deep neural networks. The Gaussian distribution of the top ($L$-th) layer does not depend on any other random variable.

- The prior over the latent variables is a unit Gaussian, $p(z_l) = \mathcal{N}(0, I)$, and the observation likelihood $p_\theta(x|z)$ is any appropriate distribution that is conditioned on $z_1$ and parameterized by a deep neural network.

- This can be viewed as a variational autoencoder.

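A toy inference network for the Gaussian case can be sketched without any deep learning library. Everything here is an illustrative assumption: a single tanh hidden layer, small random weights, and a log-sigma output head so that $\sigma_\phi(x) > 0$. The point is amortization: one parameter set $\phi$ maps every $x$ to its own $\mu_\phi(x)$ and $\sigma_\phi(x)$.

```python
import math
import random

def affine(W, b, v):
    """Compute W v + b for a list-of-rows matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]

class GaussianInferenceNetwork:
    """Toy amortized encoder q_phi(z|x) = N(mu_phi(x), diag(sigma_phi(x)^2)).

    One tanh hidden layer; the sizes and random initialization are
    hypothetical. The same parameters phi are shared by every data point."""

    def __init__(self, x_dim, hidden_dim, z_dim, seed=0):
        rng = random.Random(seed)

        def init(rows, cols):
            return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.W_h, self.b_h = init(hidden_dim, x_dim), [0.0] * hidden_dim
        self.W_mu, self.b_mu = init(z_dim, hidden_dim), [0.0] * z_dim
        self.W_ls, self.b_ls = init(z_dim, hidden_dim), [0.0] * z_dim  # log-sigma head

    def __call__(self, x):
        h = [math.tanh(a) for a in affine(self.W_h, self.b_h, x)]
        mu = affine(self.W_mu, self.b_mu, h)
        sigma = [math.exp(a) for a in affine(self.W_ls, self.b_ls, h)]  # exp keeps sigma > 0
        return mu, sigma

    def sample(self, x, rng):
        """Reparameterized draw z = mu + sigma * eps, eps ~ N(0, I)."""
        mu, sigma = self(x)
        return [m + s * rng.gauss(0.0, 1.0) for m, s in zip(mu, sigma)]

encoder = GaussianInferenceNetwork(x_dim=4, hidden_dim=8, z_dim=2)
mu, sigma = encoder([0.5, -1.0, 0.3, 2.0])
z = encoder.sample([0.5, -1.0, 0.3, 2.0], random.Random(1))
```

In a real DLGM the encoder would be trained jointly with the generative model by maximizing the bound with reparameterized samples such as `z`.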

Normalizing Flows

- Examining bound (3), the bound is tight (the KL divergence between $q_\phi(z|x)$ and the true posterior is zero) exactly when $q_\phi(z|x) = p_\theta(z|x)$, i.e. when $q$ matches the true posterior. This is impossible with uncorrelated Gaussians or other mean-field approximations, so we want a flexible family of variational distributions that can contain the true posterior. One way to achieve this ideal is based on the principle of normalizing flows.

Finite Flows

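- Consider an invertible, smooth mapping $f: \mathbb{R}^d \to \mathbb{R}^d$ with inverse $f^{-1} = g$, i.e. $g \circ f(z) = z$. If we use it to transform a random variable $z$ with distribution $q(z)$, the resulting random variable $z' = f(z)$ has the distribution $q(z') = q(z)\left|\det \frac{\partial f^{-1}}{\partial z'}\right| = q(z)\left|\det \frac{\partial f}{\partial z}\right|^{-1}$ (5). In high dimensions, the change in density is captured by the Jacobian determinant of $f$.

- Successively transforming a random variable $z_0$ with distribution $q_0$ through a chain of $K$ transformations $f_k$ gives $z_K = f_K \circ \dots \circ f_2 \circ f_1(z_0)$ (6), whose density $q_K(z)$ satisfies $\ln q_K(z_K) = \ln q_0(z_0) - \sum_{k=1}^{K} \ln\left|\det \frac{\partial f_k}{\partial z_{k-1}}\right|$ (7). The path formed by the successive distributions $q_k$ is a normalizing flow.

- With these transformations, expectations under $q_K$ can be computed without knowing $q_K$ explicitly: any expectation $\mathbb{E}_{q_K}[h(z)]$ can be written as an expectation under $q_0$, $\mathbb{E}_{q_K}[h(z)] = \mathbb{E}_{q_0}[h(f_K \circ f_{K-1} \circ \dots \circ f_1(z_0))]$. When $h(z)$ does not depend on $q_K$, the log-det-Jacobian terms do not need to be computed.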

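The bookkeeping of a finite flow can be checked in one dimension, where each Jacobian is just a derivative. The two flow steps below are hypothetical (an affine map, then the monotone map $z + 0.5\tanh(z)$) with a standard normal $q_0$; the sketch tracks $\ln q_K$ via Eq. (7), checks that $q_K$ integrates to about 1, and estimates $\mathbb{E}_{q_K}[z]$ using only samples from $q_0$.

```python
import math
import random

def log_std_normal(z):
    """ln N(z | 0, 1), the base density q_0."""
    return -0.5 * (math.log(2.0 * math.pi) + z * z)

# Hypothetical 1-D flow: each step is a pair (f_k, f_k') of a smooth
# invertible map and its derivative (the 1x1 Jacobian).
FLOWS = [
    (lambda z: 2.0 * z + 1.0, lambda z: 2.0),
    (lambda z: z + 0.5 * math.tanh(z),
     lambda z: 1.0 + 0.5 * (1.0 - math.tanh(z) ** 2)),  # > 0, so monotone
]

def push_forward(z0, flows=FLOWS):
    """Return z_K = f_K o ... o f_1(z0) and ln q_K(z_K) from Eq. (7)."""
    z, log_q = z0, log_std_normal(z0)
    for f, df in flows:
        log_q -= math.log(abs(df(z)))  # Jacobian evaluated at z_{k-1}
        z = f(z)
    return z, log_q

# Sanity check: q_K integrates to ~1 (trapezoid rule on the image grid).
grid = [-8.0 + 16.0 * i / 4000 for i in range(4001)]
pts = [push_forward(z0) for z0 in grid]
mass = sum(0.5 * (math.exp(a[1]) + math.exp(b[1])) * (b[0] - a[0])
           for a, b in zip(pts, pts[1:]))

# Expectation under q_K estimated with samples from q_0 only.
rng = random.Random(0)
mean_zK = sum(push_forward(rng.gauss(0.0, 1.0))[0] for _ in range(20_000)) / 20_000
```

Note that `mean_zK` never evaluates a log-determinant, matching the remark that expectations of $h(z)$ do not need them.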

Infinitesimal Flows

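- The evolution of the initial density $q_0(z)$ over time is described by a partial differential equation, $\frac{\partial}{\partial t} q_t(z) = \mathcal{T}_t[q_t(z)]$, where $\mathcal{T}_t$ describes the continuous-time dynamics.

- Langevin flow: given by the Langevin stochastic differential equation (SDE) $dz(t) = F(z(t), t)\,dt + G(z(t), t)\,d\xi(t)$ (9).

If a random variable $z$ with initial density $q_0(z)$ is transformed by the Langevin flow (9), the density transformation is governed by the Fokker-Planck equation (also known as the Kolmogorov forward equation), where $d\xi(t)$ is a Wiener process with $\mathbb{E}[\xi_i(t)] = 0$ and $\mathbb{E}[\xi_i(t)\xi_j(t')] = \delta_{ij}\delta(t - t')$.

- (Wiener process: a classic continuous-time Markov stochastic process.)

- $F$ is the drift vector and $D = GG^\top$ is the diffusion matrix.

- The density $q_t(z)$ of the transformed samples at time $t$ evolves as: $\frac{\partial}{\partial t} q_t(z) = -\sum_i \frac{\partial}{\partial z_i}[F_i(z, t)\, q_t(z)] + \frac{1}{2}\sum_{i,j} \frac{\partial^2}{\partial z_i \partial z_j}[D_{ij}(z, t)\, q_t(z)]$

- In machine learning, one usually takes $F(z, t) = -\nabla_z L(z)$ and $G(z, t) = \sqrt{2}\,\delta_{ij}$, where $L(z)$ is the unnormalized negative log-density (an energy) of the model.

- The stationary solution is then the Boltzmann distribution: starting from an initial density $q_0(z)$ and evolving its samples $z_0$ through the Langevin SDE, the resulting points $z_\infty$ are distributed according to $q_\infty(z) \propto e^{-L(z)}$, i.e. the true posterior.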

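The Langevin flow can be simulated with an Euler-Maruyama discretization, which is an approximation of the SDE rather than something the text prescribes. This sketch assumes the energy $L(z) = z^2/2$, so $q_\infty(z) \propto e^{-L(z)}$ is the standard normal, and starts the particles from a narrow $q_0 = \mathcal{N}(3, 0.1^2)$.

```python
import math
import random

def langevin_flow(grad_L, z0_samples, dt=0.05, n_steps=300, seed=0):
    """Euler-Maruyama simulation of dz = -grad L(z) dt + sqrt(2) dxi(t).

    Each particle starts at a draw from q_0; for small dt and many steps it
    ends approximately distributed as q_inf(z) proportional to exp(-L(z))."""
    rng = random.Random(seed)
    zs = list(z0_samples)
    noise_scale = math.sqrt(2.0 * dt)  # sqrt(2) times a Wiener increment of variance dt
    for _ in range(n_steps):
        zs = [z - grad_L(z) * dt + noise_scale * rng.gauss(0.0, 1.0) for z in zs]
    return zs

# Energy L(z) = z^2 / 2, so grad L(z) = z and exp(-L) is proportional to N(0, 1).
init_rng = random.Random(1)
z0 = [3.0 + 0.1 * init_rng.gauss(0.0, 1.0) for _ in range(2000)]  # q_0 = N(3, 0.1^2)
z_inf = langevin_flow(lambda z: z, z0)
mean = sum(z_inf) / len(z_inf)
var = sum((z - mean) ** 2 for z in z_inf) / len(z_inf)
```

The discretization introduces an O(dt) bias (for this quadratic energy the stationary variance is $2/(2 - dt)$ instead of 1), which is why practical samplers often add a Metropolis correction.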

- Hamiltonian flow: the dynamics of Hamiltonian Monte Carlo, defined by a Hamiltonian $H(z, \omega)$ on the augmented space $\tilde{z} = (z, \omega)$, can also be viewed as a normalizing flow.

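One way to see why Hamiltonian dynamics fits the flow framework is that the leapfrog integrator is volume preserving, so its Jacobian determinant is 1 and the log-det term vanishes. The sketch assumes a unit mass, a hypothetical energy $U(z) = z^4/4$ written as $H(z, \omega) = U(z) + \omega^2/2$, and checks the determinant by finite differences.

```python
def leapfrog(z, w, grad_U, eps=0.1, n_steps=20):
    """Leapfrog integration of Hamiltonian dynamics on the augmented space
    (z, w), assuming H(z, w) = U(z) + w**2 / 2 with unit mass."""
    for _ in range(n_steps):
        w -= 0.5 * eps * grad_U(z)  # half step in momentum
        z += eps * w                # full step in position
        w -= 0.5 * eps * grad_U(z)  # half step in momentum
    return z, w

def jacobian_det(fn, z, w, h=1e-5):
    """Central finite-difference determinant of the 2x2 Jacobian of fn at (z, w)."""
    z_p, w_p = fn(z + h, w)
    z_m, w_m = fn(z - h, w)
    dz_dz, dw_dz = (z_p - z_m) / (2 * h), (w_p - w_m) / (2 * h)
    z_p, w_p = fn(z, w + h)
    z_m, w_m = fn(z, w - h)
    dz_dw, dw_dw = (z_p - z_m) / (2 * h), (w_p - w_m) / (2 * h)
    return dz_dz * dw_dw - dz_dw * dw_dz

def U(z):
    return 0.25 * z ** 4  # hypothetical non-Gaussian energy

def grad_U(z):
    return z ** 3

def flow(z, w):
    return leapfrog(z, w, grad_U)

det = jacobian_det(flow, 1.0, 0.5)
z1, w1 = flow(1.0, 0.5)
energy_drift = (U(z1) + 0.5 * w1 ** 2) - (U(1.0) + 0.5 * 0.5 ** 2)
```

Each leapfrog sub-step is a shear with determinant 1, so `det` stays at 1 up to finite-difference error, while the energy is only approximately conserved.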

Inference with Normalizing Flows

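- Cost of general flows: for general invertible neural networks, computing the log-det-Jacobian scales as $O(LD^3)$, where $D$ is the hidden-layer dimension and $L$ is the number of hidden layers. Computing the gradients of the Jacobian determinant involves several additional $O(LD^3)$ operations, including matrix inverses that can be numerically unstable. We therefore look for transformations whose log-det-Jacobian is cheap to compute.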

Invertible Linear-time Transformations:

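- Planar flows compute the log-det-Jacobian term in $O(D)$ time (using the matrix determinant lemma). Consider the family of transformations $f(z) = z + u\,h(w^\top z + b)$ (10),

- where $\lambda = \{w \in \mathbb{R}^D, u \in \mathbb{R}^D, b \in \mathbb{R}\}$ are free parameters and $h(\cdot)$ is a smooth element-wise nonlinearity with derivative $h'(\cdot)$.

- For this mapping, with $\psi(z) = h'(w^\top z + b)\,w$ (11), the log-det-Jacobian term follows from $\left|\det \frac{\partial f}{\partial z}\right| = |\det(I + u\,\psi(z)^\top)| = |1 + u^\top \psi(z)|$ (12).

- Equation (7) can then be rewritten as $\ln q_K(z_K) = \ln q_0(z) - \sum_{k=1}^{K} \ln|1 + u_k^\top \psi_k(z_{k-1})|$ (13).

- The flow (10) modifies the initial density $q_0$ by applying a series of contractions and expansions in the direction perpendicular to the hyperplane $w^\top z + b = 0$, hence the name planar flow.

- Alternatively, a family of transformations modifies the initial density $q_0$ around a reference point $z_0$: $f(z) = z + \beta h(\alpha, r)(z - z_0)$, with $\left|\det \frac{\partial f}{\partial z}\right| = [1 + \beta h(\alpha, r)]^{D-1}[1 + \beta h(\alpha, r) + \beta h'(\alpha, r)\,r]$ (14), where $r = |z - z_0|$, $h(\alpha, r) = 1/(\alpha + r)$, and the parameters are $\lambda = \{z_0 \in \mathbb{R}^D, \alpha \in \mathbb{R}, \beta \in \mathbb{R}\}$.

- This family also allows the determinant to be computed in linear time. It applies radial contractions and expansions around the reference point, hence the name radial flow.

- Figure: the effect of these expansions and contractions on a spherical (unit) Gaussian initial density; the spherical Gaussian is transformed into a bimodal distribution.

- Figure: the inference and generative (input/output) models.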

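The linear-time log-determinants of the planar flow (with $h = \tanh$) and the radial flow can be sanity-checked against a brute-force 2-D finite-difference determinant. The parameter values are hypothetical and were chosen to satisfy $u^\top w \ge -1$ and $\beta \ge -\alpha$, which are sufficient conditions for invertibility of these two families.

```python
import math

def planar(z, u, w, b):
    """Planar flow f(z) = z + u * tanh(w.z + b) and log|det J| via the
    matrix determinant lemma: |det(I + u psi^T)| = |1 + u.psi|."""
    a = sum(wi * zi for wi, zi in zip(w, z)) + b
    out = [zi + ui * math.tanh(a) for zi, ui in zip(z, u)]
    psi = [(1.0 - math.tanh(a) ** 2) * wi for wi in w]  # h'(w.z + b) w
    log_det = math.log(abs(1.0 + sum(ui * pi for ui, pi in zip(u, psi))))
    return out, log_det

def radial(z, z_ref, alpha, beta):
    """Radial flow f(z) = z + beta h(alpha, r)(z - z_ref) with h = 1/(alpha + r);
    log|det J| = (D-1) log|1 + beta h| + log|1 + beta h + beta h' r|."""
    d = len(z)
    diff = [zi - ri for zi, ri in zip(z, z_ref)]
    r = math.sqrt(sum(x * x for x in diff))
    h = 1.0 / (alpha + r)
    dh = -1.0 / (alpha + r) ** 2
    out = [zi + beta * h * di for zi, di in zip(z, diff)]
    log_det = ((d - 1) * math.log(abs(1.0 + beta * h))
               + math.log(abs(1.0 + beta * h + beta * dh * r)))
    return out, log_det

def numeric_log_det_2d(fn, z, h=1e-6):
    """Reference check: log|det J| from central finite differences (2-D only)."""
    cols = []
    for j in range(2):
        zp, zm = list(z), list(z)
        zp[j] += h
        zm[j] -= h
        fp, fm = fn(zp)[0], fn(zm)[0]
        cols.append([(p - m) / (2 * h) for p, m in zip(fp, fm)])  # cols[j][i] = df_i/dz_j
    det = cols[0][0] * cols[1][1] - cols[1][0] * cols[0][1]
    return math.log(abs(det))

z = [0.3, -1.2]
params_p = dict(u=[1.0, 0.5], w=[0.8, -0.4], b=0.1)      # u.w = 0.6 >= -1
params_r = dict(z_ref=[0.0, 0.0], alpha=1.0, beta=0.5)   # beta >= -alpha
_, ld_planar = planar(z, **params_p)
_, ld_radial = radial(z, **params_r)
check_planar = numeric_log_det_2d(lambda v: planar(v, **params_p), z)
check_radial = numeric_log_det_2d(lambda v: radial(v, **params_r), z)
```

The closed forms cost $O(D)$ per step, while the brute-force check would need an $O(D^3)$ determinant in general.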

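- Flow-based free energy bound: for a flow of length $K$, the bound (3) expands to $\mathcal{F}(x) = \mathbb{E}_{q_0(z_0)}[\ln q_0(z_0)] - \mathbb{E}_{q_0(z_0)}[\log p(x, z_K)] - \mathbb{E}_{q_0(z_0)}\left[\sum_{k=1}^{K} \ln|1 + u_k^\top \psi_k(z_{k-1})|\right]$ (15).

- Algorithm summary and complexity: jointly sampling from the inference model and computing its log-det-Jacobian terms has algorithmic complexity $O(LN^2) + O(KD)$,

where $L$ is the number of deterministic layers used to map the data to the flow parameters, $N$ is the average hidden-layer size, $K$ is the flow length, and $D$ is the dimension of the latent variables. The parameters $\theta$ and $\phi$ are then updated by gradient steps on $\mathcal{F}(x)$.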

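A Monte Carlo estimate of the flow-based free energy (15) can be sketched for a hypothetical 1-D toy model, assuming $p(z) = \mathcal{N}(0, 1)$, $p(x|z) = \mathcal{N}(x|z, 1)$, a Gaussian $q_0 = \mathcal{N}(m, s^2)$ and 1-D planar flows with $h = \tanh$. The flow parameters are fixed by hand here; in the paper's setting they would come from the inference network and be trained by gradient descent.

```python
import math
import random

def log_normal(x, mean, var):
    return -0.5 * (math.log(2.0 * math.pi * var) + (x - mean) ** 2 / var)

def log_joint(x, z):
    """Toy model (assumption): p(z) = N(0, 1), p(x|z) = N(x | z, 1)."""
    return log_normal(z, 0.0, 1.0) + log_normal(x, z, 1.0)

def flow_free_energy(x, m, s, flows, n_samples=5000, seed=0):
    """Monte Carlo estimate of F(x) = E_q0[ln q0(z0) - log p(x, z_K)
    - sum_k ln|1 + u_k psi_k(z_{k-1})|] for 1-D planar flows (u, w, b)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        z = m + s * rng.gauss(0.0, 1.0)          # z0 ~ q0 = N(m, s^2)
        f = log_normal(z, m, s * s)              # ln q0(z0)
        for u, w, b in flows:
            a = w * z + b
            psi = (1.0 - math.tanh(a) ** 2) * w  # h'(w z + b) w
            f -= math.log(abs(1.0 + u * psi))    # log-det term of step k
            z = z + u * math.tanh(a)             # z_k = f_k(z_{k-1})
        total += f - log_joint(x, z)
    return total / n_samples

x = 1.2
neg_log_evidence = -log_normal(x, 0.0, 2.0)
f_exact = flow_free_energy(x, x / 2, math.sqrt(0.5), flows=[])  # q0 = true posterior, K = 0
f_flow = flow_free_energy(x, 0.0, 1.0, flows=[(0.5, 1.0, 0.0), (-0.3, 2.0, 0.5)])
```

Because $\mathcal{F}(x) \ge -\log p(x)$, any choice of flow parameters gives an estimate at or above `neg_log_evidence` (up to Monte Carlo noise); training would lower `f_flow` toward it.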

Alternative Flow-based Posteriors

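- A finite volume-preserving flow: the neural-network transformation of NICE, $f(z) = (z_A, z_B + h_\lambda(z_A))$, with inverse $g(z') = (z'_A, z'_B - h_\lambda(z'_A))$,

- where $z = (z_A, z_B)$ is a partition of the vector $z$ and $h_\lambda$ is a neural network with parameters $\lambda$.

- The Jacobian of this transformation has a zero upper-triangular part and a unit diagonal, so its determinant is 1.

- The density resulting from the forward and inverse transformations is therefore $\ln q_K(f_K \circ \dots \circ f_1(z)) = \ln q_0(z)$.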

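The additive coupling used by NICE can be sketched in a few lines. The fixed function `h_lambda` below is a hypothetical stand-in for a trained neural network, and the split into the first and second halves of a 4-D vector is an illustrative choice. The checks confirm that the inverse is exact and that the Jacobian is block triangular with a unit diagonal, hence volume preserving.

```python
import math

def h_lambda(z_a):
    """Stand-in for the coupling network h_lambda (hypothetical fixed function)."""
    return [math.tanh(0.7 * z_a[0] - 0.2 * z_a[1]), math.sin(z_a[0] * z_a[1])]

def nice_forward(z):
    """Additive coupling f(z) = (z_A, z_B + h(z_A)) with z_A = z[:2], z_B = z[2:]."""
    z_a, z_b = z[:2], z[2:]
    return z_a + [zb + hb for zb, hb in zip(z_b, h_lambda(z_a))]

def nice_inverse(y):
    """Exact inverse g(y) = (y_A, y_B - h(y_A)); no iterative solve needed."""
    y_a, y_b = y[:2], y[2:]
    return y_a + [yb - hb for yb, hb in zip(y_b, h_lambda(y_a))]

def numeric_jacobian(fn, z, h=1e-6):
    """J[i][j] = d fn_i / d z_j by central finite differences."""
    cols = []
    for j in range(len(z)):
        zp, zm = list(z), list(z)
        zp[j] += h
        zm[j] -= h
        cols.append([(p - m) / (2 * h) for p, m in zip(fn(zp), fn(zm))])
    return [list(row) for row in zip(*cols)]

z = [0.4, -1.1, 2.0, 0.3]
round_trip = nice_inverse(nice_forward(z))
J = numeric_jacobian(nice_forward, z)
```

A single coupling never changes $z_A$, so in practice successive layers alternate which part of $z$ is updated in order to mix all components.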

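Results: evaluating normalizing-flow-based posterior approximations for inference in DLGMs

- Training: the model parameters $\theta$ and variational parameters $\phi$ are trained with stochastic backpropagation by following the gradient of an annealed version of the free energy, where

$\beta_t \in [0, 1]$ is an inverse temperature annealed from 0.01 to 1 over 10,000 iterations.

- A single sample of the latent variables is computed per data point and parameter update. The deterministic layers have 400 hidden units with Maxout nonlinearities over windows of 4 variables, $\mathrm{Maxout}(z)_k = \max_{i \in \{\Delta k, \dots, \Delta(k+1)-1\}} z_i$, where:

- $z$ is the input vector

- $\Delta$ is the Maxout window size

- We use mini-batches of 100 data points and RMSprop optimization (learning rate $= 1 \times 10^{-5}$, momentum $= 0.9$); results were collected after 500,000 parameter updates. Each experiment was repeated 100 times with different random seeds, and we report mean scores and standard errors.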

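The Maxout nonlinearity and the annealing schedule are easy to state in code. The schedule below assumes a linear rise of $\beta_t$ from 0.01 to 1 over 10,000 iterations, and the annealed per-sample term assumes $\beta_t$ scales the log-joint $\log p(x, z_K)$; both forms are assumptions made for illustration, not confirmed details of the experiments.

```python
def maxout(z, window):
    """Maxout(z)_k = max(z[window*k : window*(k+1)]) for k = 0 .. len(z)/window - 1."""
    if len(z) % window != 0:
        raise ValueError("len(z) must be a multiple of the window size")
    return [max(z[i:i + window]) for i in range(0, len(z), window)]

def beta_schedule(t, beta_0=0.01, n_anneal=10_000):
    """Inverse temperature beta_t, assumed to rise linearly from beta_0 to 1
    over n_anneal iterations and then stay at 1."""
    return min(1.0, beta_0 + (1.0 - beta_0) * t / n_anneal)

def annealed_free_energy_term(log_q_K, log_joint, beta):
    """Single-sample annealed term ln q_K(z_K) - beta_t * log p(x, z_K) (assumed form)."""
    return log_q_K - beta * log_joint
```

Early in training, a small $\beta_t$ down-weights the model term, which keeps the approximate posterior from collapsing before the generative model has learned much.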

Representative Power of Normalizing Flows

- Setup: unnormalized 2D densities $p(z) \propto \exp[-U(z)]$

- Results: the line plot summarizes the KL divergence between the true and approximate densities for the first 3 test energies as a function of the flow length $K$; the flows use the mapping (10).

- The initial density is a diagonal Gaussian $q_0(z) = \mathcal{N}(z|\mu, \sigma^2 I)$.

- We find that NICE and the planar flow (13) reach the same asymptotic performance as the flow length grows, but the planar flow (13) needs far fewer parameters.

- This is probably because all parameters of the flow (13) are learned, whereas NICE needs an additional mechanism for mixing components, which is randomly initialized but not learned.

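For an unnormalized target $p(z) \propto \exp[-U(z)]$, the quantity that can be optimized is $\mathbb{E}_q[\ln q(z) + U(z)] = \mathrm{KL}(q\|p) - \ln Z$, so the unknown normalizer $Z$ only shifts it by a constant. The sketch evaluates this for a diagonal Gaussian $q_0$. The ring-shaped, two-mode energy `U1` is assumed here to follow the paper's first test energy, and its constants should be checked against the original table before reuse.

```python
import math
import random

def U1(z1, z2):
    """Two-mode ring energy, assumed to match the paper's first test energy U_1."""
    ring = 0.5 * ((math.hypot(z1, z2) - 2.0) / 0.4) ** 2
    a = -0.5 * ((z1 - 2.0) / 0.6) ** 2
    b = -0.5 * ((z1 + 2.0) / 0.6) ** 2
    m = max(a, b)
    log_modes = m + math.log(math.exp(a - m) + math.exp(b - m))  # stable log-sum-exp
    return ring - log_modes

def kl_objective(mu, sigma, energy, n_samples=5000, seed=0):
    """Estimate E_q[ln q(z) + U(z)] for q = N(mu, diag(sigma^2)).

    For p(z) = exp(-U(z)) / Z this equals KL(q || p) - ln Z, so it can be
    minimized over q's parameters without knowing Z."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        eps = [rng.gauss(0.0, 1.0) for _ in mu]
        z = [m + s * e for m, s, e in zip(mu, sigma, eps)]
        log_q = sum(-0.5 * math.log(2.0 * math.pi) - math.log(s) - 0.5 * e * e
                    for s, e in zip(sigma, eps))
        total += log_q + energy(*z)
    return total / n_samples

at_mode = kl_objective([2.0, 0.0], [0.3, 0.3], U1)
at_origin = kl_objective([0.0, 0.0], [1.0, 1.0], U1)
```

A single diagonal Gaussian can cover at most one of the two modes well, which is the gap that longer flows close in the experiments.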

- MNIST and CIFAR-10 images: MNIST consists of 60,000 training images of the 10 handwritten digits.

- DLGMs with 40 latent variables were trained for 500,000 parameter updates.

- Comparative analysis of DLGM+NF and DLGM+NICE with different flow lengths $K$:

- NF performs better,

- achieving better log-likelihoods.


- Unofficial code: https://github.com/ex4sperans/variational-inference-with-normalizing-flows

边栏推荐

- 原型与原型链

- Mysql 事务

- 消费品行业报告:化妆品容器市场现状研究分析与发展前景预测

- Scrapy_Redis 分布式处理

- Write carousel pictures with native js (and realize manual and automatic switching of pictures)

- Chemical Materials Industry Report - Adipic Acid Market Status Research Analysis and Development Prospect Forecast

- 七.Redis 持久化之 AOF

- 九.Redis 集群(cluster 模式)

- Lamp analysis: LED lamps are expected to reach $45.9 billion in 2028

- Variational Inference with Normalizing Flows变分推断

猜你喜欢

Distributed voltage regulation using permissioned blockchains and extended contract net protocol to optimize efficiency

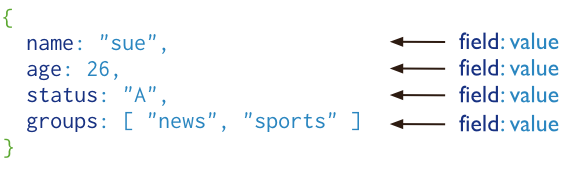

MongoDB的介绍与特点

冰箱压缩机市场现状研究分析与发展前景预测

![[GWCTF 2019] I have a database 1](/img/03/46c1cc42414e37d0d98cd714e950c2.png)

[GWCTF 2019] I have a database 1

玫瑰精油市场研究:目前市场产值超过23亿元,市场需求缺口约10%

![[WUSTCTF2020]CV Maker1](/img/be/989b1ea8597f31f4b82c2edc6345d5.png)

[WUSTCTF2020]CV Maker1

Neo4j服务配置

树基础入门

Scrapy_Redis distributed processing

消费品行业报告:椰子油市场现状研究分析与发展前景预测

随机推荐

微信记账小程序(附源码),你值得拥有!

正则爬取豆瓣Top250数据存储到CSV文件(6行代码)

Shell(一)

In 2022 China children's food market scale and development trend

cybox target machine wp

@Autowired和@Resource区别

Mysql 事务

栈的实例应用

bugku 速度要快

数组对象方法

Scrapy_Redis 分布式处理

在Mysql的 left/right join 添加where条件

4.Callable接口实现多线程

Plant spice market research: China's market development status and business model analysis in 2022

[BSidesCF 2020]Had a bad day1

一个五位数,判断它是不是回文数

yii2使用多个数据库的使用方法

改变this指向

JS截取字符串最后一个字符,截取“,”逗号前面字符,赋值集合

二. Redis 数据类型