当前位置:网站首页>Detailed explanation of slam monocular dense reconstruction

Detailed explanation of slam monocular dense reconstruction

2022-04-22 00:13:00 【Blue feather bird】

The depth of monocular calculation is complex , It can be used in general RGB-D The camera gets the depth directly , But practice .

Because it's dense reconstruction , Calculate the depth for each pixel , So it's not feature extraction .

Depth cannot be estimated from one image alone , Use images from different perspectives to estimate .

In the feature point matching method , It is to triangulate and estimate the depth according to the different positions of the same feature point in different viewing angles ,

But dense reconstruction does not need feature points , To match every pixel , Then triangulate . How to match , Use epipolar search and block matching .

What is epipolar search , as follows ( Post a picture of someone else ):

First of all, god horse is the polar line , The camera 1 A pixel in p1, Its possible depth is , such as [0.1, 5]( The range is... On the graph d), then p1 The pixel coordinates of combine this minimum and maximum depth (d On both sides of the road ), Formed two positions , These two positions are mapped to the camera 2 Two points of , The line segment formed by these two points is the polar line .

First of all, god horse is the polar line , The camera 1 A pixel in p1, Its possible depth is , such as [0.1, 5]( The range is... On the graph d), then p1 The pixel coordinates of combine this minimum and maximum depth (d On both sides of the road ), Formed two positions , These two positions are mapped to the camera 2 Two points of , The line segment formed by these two points is the polar line .

What about search , It's on the polar line , Search and p1 The same point , How to judge the same , Is to use various similarity functions , This is used here. NCC( Later said ), Searching only one point is not robust , Just search for a block , The size of the block is defined by itself .

Search for p2 Point and p1 After the point matching degree is high , Estimate by triangulation p The depth of the ,

Now put the picture 1 As p1 Picture of , To estimate the picture 1 The depth of all pixels in the , At present, there are pictures taken from different perspectives 2 To match p2, Estimated depth .

Just one picture 2, The depth must not be stable enough ,

So let's take several pictures from different perspectives 3,4,5…,

Each one is related to p1 matching , Then estimate the picture 1 The depth of all pixels in the , So I got a lot of different depth maps .

So many estimated depth maps , Always merge into a depth map , After all, just estimate a picture 1 The depth of the .

Here is Gaussian fusion .

Gaussian fusion is the assumption of depth d Is subject to Gaussian distribution , The initial mean is μ \mu μ, The variance is σ \sigma σ,

Then according to the picture 2,3,4… A new depth mean variance is obtained : μ o b s \mu_{obs} μobs, σ o b s \sigma_{obs} σobs,

According to the product of Gaussian distribution , Get the fusion formula

σ o b s 2 μ + σ 2 μ o b s σ 2 + σ o b s 2 \frac{ \sigma ^{2}_{obs}\mu + \sigma ^{2}\mu_{obs} }{\sigma ^{2} + \sigma ^{2}_{obs}} σ2+σobs2σobs2μ+σ2μobs

Be careful , Because each pixel has to calculate the depth , So this average , Variance is a matrix of image size .

final μ \mu μ Is to get the depth map .

The next question is , μ o b s \mu_{obs} μobs and σ o b s \sigma_{obs} σobs How to calculate .

μ o b s \mu_{obs} μobs This is the depth estimate , Because the estimation is the average value of ( The following code will say )

σ o b s \sigma_{obs} σobs According to the uncertainty formula ,

Come to the conclusion first : σ o b s = ∥ p ∥ − ∥ p ′ ∥ \sigma_{obs} = \left \| p \right \| - \left \| p' \right \| σobs=∥p∥−∥p′∥,

Come to the conclusion first : σ o b s = ∥ p ∥ − ∥ p ′ ∥ \sigma_{obs} = \left \| p \right \| - \left \| p' \right \| σobs=∥p∥−∥p′∥,

among p It refers to... In the figure O 1 P O_{1}P O1P vector ,p’ refer to O 1 P ′ O_{1}P' O1P′ vector

So the problem comes back to P and P’ How to calculate the two points .

First p1 Corresponding p2 The point is searched through the epipolar line , adopt p1, p2 Two point triangulation p1 Estimated location of P,

And then put p2 Move one pixel along the epipolar line , obtain p2’,

According to p1, p2’ These two points estimate another location P’.

In fact, you don't need to estimate twice , In mobile p2 obtain p2’ after ,

According to some edges and corners marked on the figure , Get the following relationship

a = p − t a = p - t a=p−t ( Vector minus )

α = a r c c o s < p , t > \alpha = arccos<p, t> α=arccos<p,t>

β = a r c c o s < p , − t > \beta = arccos<p, -t> β=arccos<p,−t>

β ′ = a r c c o s < O 2 p 2 ′ , − t > \beta' = arccos<O_{2}p_{2}', -t> β′=arccos<O2p2′,−t>

γ = π − α − β \gamma = \pi - \alpha - \beta γ=π−α−β ( The sum of the interior angles of a triangle =180 degree )

Then according to the sine theorem :

∥ p ′ ∥ s i n β ′ = ∥ t ∥ s i n γ \frac{\left \| p' \right \| }{sin\beta'} = \frac{\left \| t \right \| }{sin\gamma} sinβ′∥p′∥=sinγ∥t∥

Got it.

∥ p ′ ∥ = ∥ t ∥ s i n β ′ s i n γ \left \| p' \right \| = \left \| t \right \|\frac{sin\beta' }{sin\gamma} ∥p′∥=∥t∥sinγsinβ′

Pay attention to this t It's camera pose transformation T The translation part of .

As for triangulation, how to calculate , This is annotated in the code .

Next, complete the above process step by step .

First initialize the above μ \mu μ and σ \sigma σ matrix

double init_depth = 3.0; // Initial value of depth

double init_cov2 = 3.0; // Initial value of variance

Mat depth(height, width, CV_64F, init_depth); // Depth map

Mat depth_cov2(height, width, CV_64F, init_cov2); // Depth map variance

from p1 It is estimated that p2 It needs the pose of the camera , This pose from data Read from

This is the camera pose of the first picture .

SE3d pose_ref_TWC = poses_TWC[0];

First image

Mat ref = imread(color_image_files[0], 0); // grayscale

The next step is to traverse all the images ( Pictures taken in different positions )

We call the first image ref, TWC From current Camera coordinate system to world Transformation matrix of coordinate system T,

that p1 To world Coordinate system , Until then p2, You need a handle ref The coordinate system is transformed to current Transformation of camera coordinate system T_C_R.

It is from ref Of T_W_C(current to world) Left by camera 2 Of T_C_W(world to current).

for(int index = 1; index < color_image_files.size(); index ++) {

cout << "*** loop " << index << " ***" << endl;

Mat curr = imread(color_image_files[index], 0);

if(curr.data == nullptr) continue;

SE3d pose_curr_TWC = poses_TWC[index]; // Transformation matrix from the current coordinate system to the world coordinate system T

SE3d pose_T_C_R = pose_curr_TWC.inverse() * pose_ref_TWC; // Left multiplication ,ref->world->cur: T_C_W * T_W_R = T_C_R

update(ref, curr, pose_T_C_R, depth, depth_cov2);

}

All right, the pose is solved , The following is update The epipolar search and Gaussian fusion that need to be done in .

Vector2d pt_curr; // The current position

Vector2d epipolar_direction; // Polar direction

bool ret = epipolarSearch(

ref,

curr,

T_C_R,

Vector2d(x, y),

depth.ptr<double>(y)[x],

sqrt(depth_cov2.ptr<double>(y)[x]),

pt_curr,

epipolar_direction

);

if(ret == false) continue; // Matching failure

// The match is successful , Update depth filter

updateDepthFilter(Vector2d(x, y), pt_curr, T_C_R, epipolar_direction, depth, depth_cov2);

Epipolar search , The details are written in the notes

// Epipolar search

bool epipolarSearch(

const Mat &ref, const Mat &curr,

const SE3d &T_C_R, const Vector2d &pt_ref,

const double &depth_mu, const double &depth_cov,

Vector2d &pt_curr, Vector2d &epipolar_direction) {

Vector3d f_ref = px2cam(pt_ref); // Convert to unified camera coordinates (X/Z, Y/Z, 1)

f_ref.normalize(); // Unit vector

Vector3d P_ref = f_ref * depth_mu; //(X/Z, Y/Z, 1) * Z = (X, Y, Z), Reference frame P vector

Vector2d px_mean_curr = cam2px(T_C_R * P_ref); // Cast to the second 2 Pixel coordinates of a camera , In the book p2 spot

double d_min = depth_mu - 3 * depth_cov; // Small probability event left mu - 3*sigma

double d_max = depth_mu + 3 * depth_cov; // Small probability events mu + 3*sigma

if(d_min < 0.1) d_min = 0.1; // Considering the actual situation , It won't be any closer

// Because you know the posture , hold P The point is projected to... According to the depth range p2, You can get the polar line

Vector2d px_min_curr = cam2px(T_C_R * (f_ref * d_min)); // By depth d_min Projective p2 spot

Vector2d px_max_curr = cam2px(T_C_R * (f_ref * d_max)); // By depth d_max Projective p2 spot

Vector2d epipolar_line = px_max_curr - px_min_curr; // Polar line

epipolar_direction = epipolar_line; // Vector form

epipolar_direction.normalize(); // Normalized by length , Get the unit vector

double half_length = 0.5 * epipolar_line.norm(); // The half length of a polar line segment

if(half_length > 100) half_length = 100; // You don't need to search too much

// Search on the polar line , Point by depth mean (p2) Centered , Take half the length on the left and right

double best_ncc = -1.0;

Vector2d best_px_curr;

for(double l = -half_length; l <= half_length; l += 0.7) {

// l+=sqrt(2)/2

Vector2d px_curr = px_mean_curr + l * epipolar_direction; //px_mean_curr: Calculated according to the average depth p1 The point is projected directly onto p2

if(!inside(px_curr)) continue;

// Calculation NCC

double ncc = NCC(ref, curr, pt_ref, px_curr);

if(ncc > best_ncc) {

best_ncc = ncc;

best_px_curr = px_curr;

}

}

if(best_ncc < 0.85f) return false; // Greater than the threshold is considered a match

pt_curr = best_px_curr; // Pass matching points by referencing formal parameters

return true;

}

Block matching at NCC In the function

double NCC(

const Mat &ref, const Mat &curr,

const Vector2d &pt_ref, const Vector2d &pt_curr) {

double mean_ref = 0;

double mean_curr = 0;

vector<double> values_ref, values_curr; // The mean value of the reference frame and the current frame

// Take the reference point p1, p2 Centered , Block scan , Pixel value normalization

for(int x = -ncc_window_size; x <= ncc_window_size; x ++) {

for(int y = -ncc_window_size; y <= ncc_window_size; y ++) {

double value_ref = double(ref.ptr<uchar>(int(pt_ref(1, 0) + y))[int(pt_ref(0, 0) + x)]) / 255.0;

mean_ref += value_ref;

//pt_curr Is the coordinates of the polar search , Is not an integer , Use bilinear interpolation

double value_curr = getBilinearInterpolatedValue(curr, pt_curr + Vector2d(x, y));

mean_curr += value_curr;

values_ref.push_back(value_ref); // The value of each pixel in the block area

values_curr.push_back(value_curr);

}

}

// The average value of pixels in the block area

mean_ref /= ncc_area;

mean_curr /= ncc_area;

// Calculate the de mean NCC

double numerator = 0, demoniator1 = 0, demoniator2 = 0;

for(int i = 0; i < values_ref.size(); i++) {

// set NCC The formula , It uses de averaging NCC

double n = (values_ref[i] - mean_ref) * (values_curr[i] - mean_curr);

numerator += n;

demoniator1 += (values_ref[i] - mean_ref) * (values_ref[i] - mean_ref);

demoniator2 += (values_curr[i] - mean_curr) * (values_curr[i] - mean_curr);

}

return numerator / sqrt(demoniator1 * demoniator2 + 1e-10); // Prevent denominator from being 0

}

Gaussian fusion , It contains triangulation , The derivation of the triangulation formula is written in the notes .

bool updateDepthFilter(

const Vector2d &pt_ref,

const Vector2d &pt_curr,

const SE3d &T_C_R,

const Vector2d &epipolar_direction,

Mat &depth,

Mat &depth_cov2) {

// Triangulation calculation depth

SE3d T_R_C = T_C_R.inverse();

Vector3d f_ref = px2cam(pt_ref); //p1 Normalized camera coordinates

f_ref.normalize(); //O1P Unit direction vector

Vector3d f_curr = px2cam(pt_curr); //p2 Normalized camera coordinates

f_curr.normalize(); //O2P Unit direction vector

// Triangulation is used to calculate the depth

// equation

// d_ref * f_ref = d_cur * ( R_RC * f_cur ) + t_RC

// f2 = R_RC * f_cur

// Into the following matrix equations

// => [ f_ref^T f_ref, -f_ref^T f2 ] [d_ref] [f_ref^T t]

// [ f_2^T f_ref, -f2^T f2 ] [d_cur] = [f2^T t ]

// Here I would like to comment on how it is transformed , What is the basis

// The triangulation formula is s2x2 = s1Rx1 + t, It's estimated by two cameras p Same position ,s2 by p2 depth ,s1 by p1 depth ,x1,x2 For feature points p1,p2 Normalized coordinates of ( Book 177 page )

// Now take the camera 2 As a ref, Push the camera backwards 1 Next p The depth of the ,

// therefore d_ref * f_ref = d_cur * ( R_RC * f_cur ) + t_RC

// Multiply left on both sides of the equation f_ref^T, obtain d_ref * f_ref^T * f_ref = d_cur * f_ref^T * f2 + f_ref^T * t_RC, among f2 = R_RC * f_cur

// Multiply left on both sides of the equation f2^T, obtain d_ref * f2^T * f_ref = d_cur * f2^T * f2 + f2^T * t_RC, among f2 = R_RC * f_cur

// Sort it out and get the above matrix equations

Vector3d t = T_R_C.translation(); // The first 2 A camera to the 1 The translation of a camera , In the triangulation formula t, At the same time, it is also the translation of two cameras

Vector3d f2 = T_R_C.so3() * f_curr; //O2P Rotate the direction of to the 1 A camera , Represents the in the triangulation formula Rx1

Vector2d b = Vector2d(t.dot(f_ref), t.dot(f2));

Matrix2d A;

A(0, 0) = f_ref.dot(f_ref); // Point multiplication , amount to f_ref^T * f_ref

A(0, 1) = -f_ref.dot(f2);

A(1, 0) = -A(0, 1);

A(1, 1) = -f2.dot(f2);

Vector2d ans = A.inverse() * b; // The matrix is small , Direct inversion

Vector3d xm = ans[0] * f_ref; // The camera 1 Estimate of the p Location

Vector3d xn = ans[1] * f2 + t; //T_R_C.so3() * (ans[1] * f_curr) + t, The camera 2 Estimate of the p Change the position back to the camera 1 In the coordinate system of

Vector3d p_esti = (xm + xn) / 2.0; //p Take the average of the two positions

double depth_estimation = p_esti.norm(); // Depth value ,2 norm , Square sum square , namely mu_obs

// Calculation uncertainty ( Take one pixel as the error )

Vector3d p = f_ref * depth_estimation; //p The location of ,O1P vector

Vector3d a = p - t; //O2P vector

double t_norm = t.norm();

double a_norm = a.norm();

double alpha = acos(f_ref.dot(t)/t_norm); //acos The required value is [-1,1], So we have to use the unit direction vector to calculate acos<p,t>

double beta = acos(-a.dot(t)/(a_norm * t_norm)); //acos<a, -t>

Vector3d f_curr_prime = px2cam(pt_curr + epipolar_direction); //p2 Move one pixel along the polar direction to get p2'

f_curr_prime.normalize(); // Get the unit direction vector

double beta_prime = acos(f_curr_prime.dot(-t) / t_norm); //acos<O2p2', -t>

double gamma = M_PI - alpha - beta_prime;

double p_prime = t_norm * sin(beta_prime) / sin(gamma); // Move a pixel to create a new p Depth estimation of

double d_cov = p_prime - depth_estimation;

double d_cov2 = d_cov * d_cov;

// Gaussian fusion

double mu = depth.ptr<double>(int(pt_ref(1, 0)))[int(pt_ref(0, 0))]; // Depth value in depth file

double sigma2 = depth_cov2.ptr<double>(int(pt_ref(1, 0)))[int(pt_ref(0, 0))];

double mu_fuse = (d_cov2 * mu + sigma2 * depth_estimation) / (sigma2 + d_cov2); // After fusion mu

double sigma_fuse2 = (sigma2 * d_cov2) / (sigma2 + d_cov2); // After fusion sigma

depth.ptr<double>(int(pt_ref(1, 0)))[int(pt_ref(0, 0))] = mu_fuse;

depth_cov2.ptr<double>(int(pt_ref(1, 0)))[int(pt_ref(0, 0))] = sigma_fuse2;

return true;

}

Full code reference link

版权声明

本文为[Blue feather bird]所创,转载请带上原文链接,感谢

https://yzsam.com/2022/04/202204220007509741.html

边栏推荐

- 容器雲系列之容器技術相關概念介紹

- 华为Routing & Switching切换为Datacom,究竟变了些什么

- Deeptech released seven trends of advanced computing in 2022, breaking the shackles of Moore and winning the era of computing power

- Activity preview | on April 23, a number of wonderful openmldb sharing came, which lived up to the good time of the weekend!

- 开关电源中开关管与二极管EMI抑制方法分析

- Analysis of EMI suppression methods of switches and diodes in switching power supply

- 【无标题】2022煤炭生产经营单位(机电运输安全管理人员)上岗证题目模拟考试平台操作

- Busybox overview

- B. Vlad and Candies

- Main parameters and structure of LED

猜你喜欢

R语言广义线性模型GLM:线性最小二乘、对数变换、泊松、二项式逻辑回归分析冰淇淋销售时间序列数据和模拟

Why do requirements change during MES implementation? How to solve it?

09. Raspberry pie ASP Net environment configuration

Introduction à la technologie des conteneurs de la série Container Cloud

AI helps to monitor the wearing of labor protection equipment and escort the safe production of enterprises

Ascript Foundation: event flow and common event attributes

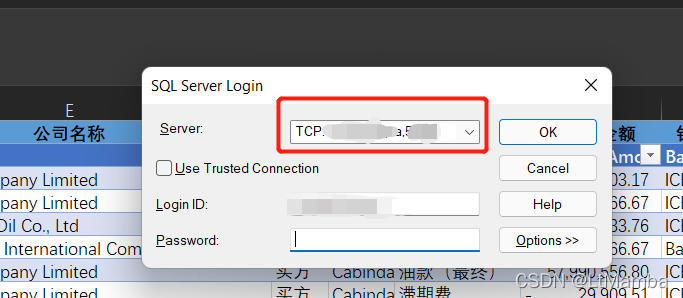

SqlServer——Excel连接数据库相关知识

CODESYS讀取csv文件的方法(非excel)

When the notebook expands the external display, the mouse cannot move to another screen outside the main display

应用层(一)

随机推荐

09. Raspberry pie ASP Net environment configuration

Very powerful time and date plug-in --- jedate js

TCP的三次握手详解

LeetCode刷题——字符串

What kind of sports headphones should you choose and what style of headphones are comfortable to wear

Fansea 4W single coil transmitting wireless charging 5W module

R语言广义线性模型GLM:线性最小二乘、对数变换、泊松、二项式逻辑回归分析冰淇淋销售时间序列数据和模拟

【无标题】2022煤炭生产经营单位(机电运输安全管理人员)上岗证题目模拟考试平台操作

Mobile power IC scheme

RT-Thread 应用篇 — 在STM32L051上使用 RT-Thread (一、无线温湿度传感器 之 新建项目)

go操作mysql

R language generalized linear model GLM: linear least squares, logarithmic transformation, Poisson, binomial logistic regression analysis, ice cream sales time series data and simulation

C# 利用委托事件进行窗体间的传值

Go operation MySQL

What is the process of complete data analysis

DeepTech发布《2022先进计算七大趋势》,破局摩尔桎梏,决胜算力时代

容器雲系列之容器技術相關概念介紹

How to choose the current limiting resistor of Zener diode

CoDeSys method of reading CSV file (non Excel)

L1-020 帅到没朋友