Implementation of ResNet-34 CNN with Keras

2022-04-23 05:15:00 【You have to smile when you see me】

Let's implement ResNet-34.

1. Create a ResidualUnit layer.

    from functools import partial

    import tensorflow as tf
    from tensorflow import keras

    # 3x3 convolution with stride 1, "SAME" padding and no bias term.
    DefaultConv2D = partial(keras.layers.Conv2D, kernel_size=3, strides=1,
                            padding="SAME", use_bias=False)

    class ResidualUnit(keras.layers.Layer):
        def __init__(self, filters, strides=1, activation="relu", **kwargs):
            super().__init__(**kwargs)
            self.activation = keras.activations.get(activation)
            # Main path: conv -> batch norm -> activation -> conv -> batch norm.
            self.main_layers = [
                DefaultConv2D(filters, strides=strides),
                keras.layers.BatchNormalization(),
                self.activation,
                DefaultConv2D(filters),
                keras.layers.BatchNormalization()]
            # Skip path: identity, unless the stride changes the output shape.
            self.skip_layers = []
            if strides > 1:
                self.skip_layers = [
                    DefaultConv2D(filters, kernel_size=1, strides=strides),
                    keras.layers.BatchNormalization()]

        def call(self, inputs):
            Z = inputs
            for layer in self.main_layers:
                Z = layer(Z)
            skip_Z = inputs
            for layer in self.skip_layers:
                skip_Z = layer(skip_Z)
            # Add the two paths, then apply the activation function.
            return self.activation(Z + skip_Z)

In the constructor, we create all the layers we need: the main layers and the skip layers (needed only when the stride is greater than 1).

In the call() method, we pass the inputs through the main layers and through the skip layers (if any), then add the two outputs and apply the activation function.
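To see why the skip layers are needed only when the stride is greater than 1, it can help to trace the shapes by hand. The following is a minimal pure-Python sketch and not part of the original code: the helper names `same_conv_shape` and `residual_unit_shapes` are hypothetical, and it assumes "SAME" padding, where a convolution's output size is ceil(input size / stride).

```python
import math


def same_conv_shape(shape, filters, strides=1):
    """Output (height, width, channels) of a Conv2D with padding="SAME"."""
    height, width, _ = shape
    return (math.ceil(height / strides), math.ceil(width / strides), filters)


def residual_unit_shapes(input_shape, filters, strides=1):
    """Return the (main path, skip path) output shapes of one residual unit."""
    # Main path: a strided 3x3 convolution followed by a stride-1 3x3 convolution.
    # Batch normalization and the activation do not change the shape.
    main = same_conv_shape(input_shape, filters, strides)
    main = same_conv_shape(main, filters)
    # Skip path: identity when strides == 1, otherwise a strided 1x1 convolution.
    if strides == 1:
        skip = input_shape
    else:
        skip = same_conv_shape(input_shape, filters, strides)
    return main, skip


# Stride 1: the identity shortcut already matches the main path.
print(residual_unit_shapes((56, 56, 64), filters=64, strides=1))
# -> ((56, 56, 64), (56, 56, 64))

# Stride 2: the 1x1 convolution brings the shortcut to the same shape.
print(residual_unit_shapes((56, 56, 64), filters=128, strides=2))
# -> ((28, 28, 128), (28, 28, 128))
```

Note that with strides=1 the shortcut is the unchanged input, so the addition only works when the input already has `filters` channels. In this model that holds, because the number of filters changes only in units that also use a stride of 2.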

2. Build ResNet-34 with a Sequential model.

This model is really just a very long sequence of layers.

With the ResidualUnit class above, we can treat each residual unit as a single layer.

    model = keras.models.Sequential()
    model.add(DefaultConv2D(64, kernel_size=7, strides=2,
                            input_shape=[224, 224, 3]))
    model.add(keras.layers.BatchNormalization())
    model.add(keras.layers.Activation("relu"))
    model.add(keras.layers.MaxPool2D(pool_size=3, strides=2, padding="SAME"))
    prev_filters = 64
    for filters in [64] * 3 + [128] * 4 + [256] * 6 + [512] * 3:
        strides = 1 if filters == prev_filters else 2
        model.add(ResidualUnit(filters, strides=strides))
        prev_filters = filters
    model.add(keras.layers.GlobalAvgPool2D())
    model.add(keras.layers.Flatten())
    model.add(keras.layers.Dense(10, activation="softmax"))

The loop adds the ResidualUnit layers to the model:

The first 3 RUs (residual units) have 64 filters, the next 4 have 128, and so on.

When the number of filters is the same as in the previous RU, the stride is set to 1; otherwise it is set to 2.

We then add the ResidualUnit and finally update prev_filters.
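The stride rule can be checked without building the model. The sketch below reuses the same filter list and the same rule as the loop, but collects the result into a plain list; the names `filter_plan` and `schedule` are introduced here for illustration only.

```python
# Same filter list and stride rule as the model-building loop,
# collected into a list of (filters, strides) pairs instead of layers.
filter_plan = [64] * 3 + [128] * 4 + [256] * 6 + [512] * 3

schedule = []
prev_filters = 64  # the first convolution outputs 64 feature maps
for filters in filter_plan:
    strides = 1 if filters == prev_filters else 2
    schedule.append((filters, strides))
    prev_filters = filters

for index, (filters, strides) in enumerate(schedule):
    print(f"residual unit {index:2d}: filters={filters:3d}, strides={strides}")
```

Only units 3, 7 and 13 get a stride of 2, which are exactly the units where the number of filters doubles. The list has 16 residual units with two 3x3 convolutions each; together with the first convolution and the final dense layer, that gives the 34 weighted layers the name refers to (the 1x1 skip convolutions are not counted).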

3. Print the model summary to check the result.

    model.summary()

Result:

    Model: "sequential"
    ___________________________________________________________________________________
    Layer (type)                                        Output Shape            Param #
    ===================================================================================
    conv2d (Conv2D)                                     (None, 112, 112, 64)    9408
    batch_normalization (BatchNormalization)            (None, 112, 112, 64)    256
    activation (Activation)                             (None, 112, 112, 64)    0
    max_pooling2d (MaxPooling2D)                        (None, 56, 56, 64)      0
    residual_unit (ResidualUnit)                        (None, 56, 56, 64)      74240
    residual_unit_1 (ResidualUnit)                      (None, 56, 56, 64)      74240
    residual_unit_2 (ResidualUnit)                      (None, 56, 56, 64)      74240
    residual_unit_3 (ResidualUnit)                      (None, 28, 28, 128)     230912
    residual_unit_4 (ResidualUnit)                      (None, 28, 28, 128)     295936
    residual_unit_5 (ResidualUnit)                      (None, 28, 28, 128)     295936
    residual_unit_6 (ResidualUnit)                      (None, 28, 28, 128)     295936
    residual_unit_7 (ResidualUnit)                      (None, 14, 14, 256)     920576
    residual_unit_8 (ResidualUnit)                      (None, 14, 14, 256)     1181696
    residual_unit_9 (ResidualUnit)                      (None, 14, 14, 256)     1181696
    residual_unit_10 (ResidualUnit)                     (None, 14, 14, 256)     1181696
    residual_unit_11 (ResidualUnit)                     (None, 14, 14, 256)     1181696
    residual_unit_12 (ResidualUnit)                     (None, 14, 14, 256)     1181696
    residual_unit_13 (ResidualUnit)                     (None, 7, 7, 512)       3676160
    residual_unit_14 (ResidualUnit)                     (None, 7, 7, 512)       4722688
    residual_unit_15 (ResidualUnit)                     (None, 7, 7, 512)       4722688
    global_average_pooling2d (GlobalAveragePooling2D)   (None, 512)             0
    flatten (Flatten)                                   (None, 512)             0
    dense (Dense)                                       (None, 10)              5130
    ===================================================================================
    Total params: 21,306,826
    Trainable params: 21,289,802
    Non-trainable params: 17,024
    ___________________________________________________________________________________
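The parameter counts in the summary can be reproduced with a little arithmetic. The sketch below is a hand calculation, not Keras code: the helper name `residual_unit_params` is hypothetical, and it assumes what the code above sets up, namely convolutions without bias and batch normalization layers that hold four values per channel (scale, offset, moving mean and moving variance).

```python
def residual_unit_params(in_channels, filters, strides=1):
    """Parameter count of one ResidualUnit (convolutions have no bias)."""
    conv1 = 3 * 3 * in_channels * filters
    conv2 = 3 * 3 * filters * filters
    batch_norms = 2 * 4 * filters  # two batch norm layers, 4 values per channel
    total = conv1 + conv2 + batch_norms
    if strides > 1:
        # Skip path: 1x1 convolution plus its batch normalization layer.
        total += in_channels * filters + 4 * filters
    return total


# First layers: 7x7 convolution on 3 input channels, then batch normalization.
total = 7 * 7 * 3 * 64 + 4 * 64

prev_filters = 64
for filters in [64] * 3 + [128] * 4 + [256] * 6 + [512] * 3:
    strides = 1 if filters == prev_filters else 2
    total += residual_unit_params(prev_filters, filters, strides)
    prev_filters = filters

# Dense output layer: 512 inputs, 10 outputs, with bias.
total += 512 * 10 + 10

print(residual_unit_params(64, 64))              # -> 74240
print(residual_unit_params(64, 128, strides=2))  # -> 230912
print(total)                                     # -> 21306826
```

The per-unit values and the grand total agree with the "Param #" column and the "Total params" line of the summary.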

Reference:

Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow, 2nd Edition, by Aurélien Géron (France), published by O'Reilly, ISBN 978-1-492-03264-9.

Copyright notice

This article was written by [You have to smile when you see me]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204230512051635.html

边栏推荐

- Servlet3 0 + event driven for high performance long polling

- Data security has become a hidden danger. Let's see how vivo can make "user data" armor again

- Redis data type usage scenario

- 深度学习笔记 —— 语义分割和数据集

- [2022 ICLR] Pyramid: low complexity pyramid attention for long range spatiotemporal sequence modeling and prediction

- At pgconf Asia Chinese technology forum, listen to Tencent cloud experts' in-depth understanding of database technology

- 工具在数字化转型中扮演了什么样的角色?

- Golang select priority execution

- The applet calls the function of scanning QR code and jumps to the path specified by QR code

- Nacos source code startup error report solution

猜你喜欢

低代码和无代码的注意事项

引入精益管理方式,需要提前做到这九点

Power consumption parameters of Jinbei household mute box series

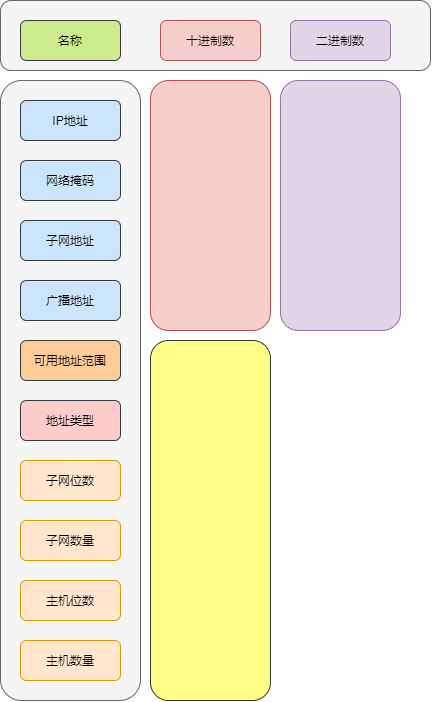

DIY is an excel version of subnet calculator

Making message board with PHP + MySQL

SQLyog的基本使用

MySQL foreign key constraint

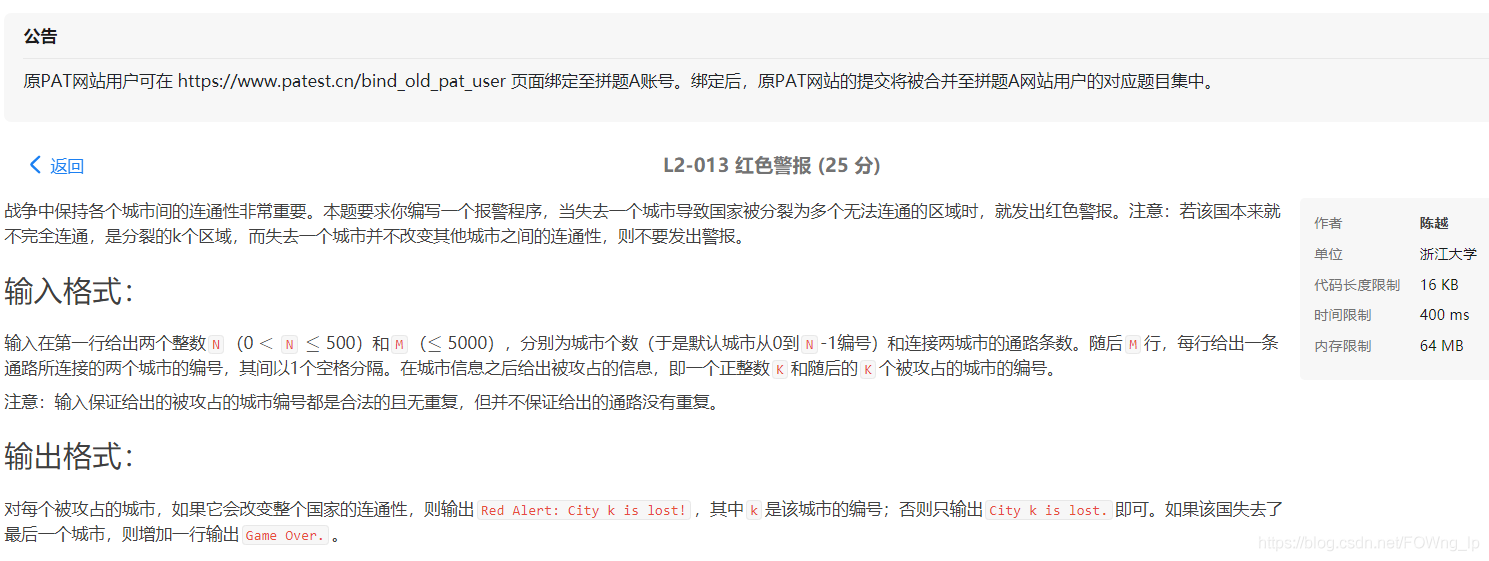

Simple application of parallel search set (red alarm)

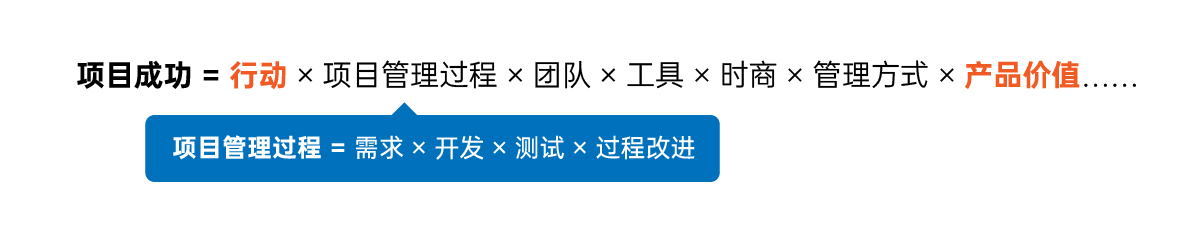

项目经理值得一试的思维方式:项目成功方程式

《2021多多阅读报告》发布,95后、00后图书消费潜力攀升

随机推荐

Publish your own wheel - pypi packaging upload practice

Swing display time (click once to display once)

At pgconf Asia Chinese technology forum, listen to Tencent cloud experts' in-depth understanding of database technology

C. Tree infection (simulation + greed)

Mariadb的半同步复制

HRegionServer的详解

Day. JS common methods

Redis persistence

Locks and transactions in MySQL

机器学习---线性回归

PHP counts the number of files in the specified folder

Redis lost key and bigkey

Transaction isolation level of MySQL transactions

Knowledge points sorting: ES6

4 个最常见的自动化测试挑战及应对措施

好的测试数据管理,到底要怎么做?

Harmonious dormitory (linear DP / interval DP)

Various ways of writing timed tasks of small programs

Grpc long connection keepalive

Redis data type usage scenario