
Summary of White-Box Adversarial Attack Techniques for Image Classification

2022-04-23 07:18:00 【Breeze_】

Contents

- 1. Adversarial attack background
- 2. White-box attack techniques
  - 2.1 Attack methods based on direct optimization
  - 2.2 Attack methods based on gradient optimization
    - 2.2.1 FGSM attack (adversarial example generation from a single gradient step)
    - 2.2.2 I-FGSM attack (iterative FGSM)
    - 2.2.3 PGD attack (iterative FGSM variant similar to I-FGSM)
    - 2.2.4 MI-FGSM attack (momentum iterative FGSM)
  - 2.3 Attack methods based on decision boundary analysis
  - 2.4 Attack methods based on generative neural networks
  - 2.5 Other attack methods
- Summary of adversarial attack algorithms

Author & editor: WYH, Breeze_

1. Adversarial attack background

In an image classification task, consider a deep learning model $y=f(x)$, where $x \in \mathbf{R}^{m}$ is the model input and $y \in Y$ is the model's output for the current input $x$. The model $f(\cdot)$ generally also contains a set of trained weight parameters $\theta$; for convenience, this parameter is omitted when describing the model. An adversarial attack looks for a small noise $r$ for the input $x$ of the target model $f(\cdot)$ such that, when $r$ is added to $x$ and the result is fed into the target model, $f(x+r) \neq f(x)$. In a targeted attack, the goal is $f(x+r)=y^{t}$, where $y^{t}$ is the target class the model should be made to output.

When the $l_2$ norm is used to constrain the size of the perturbation, generating an adversarial example in a targeted attack can be written as the following optimization problem:

$$
\begin{aligned}
&\text{Minimize } \|r\|_{2} \\
&\text{s.t. } 1.\ f(x+r)=y^{t} \\
&\phantom{\text{s.t. }} 2.\ x+r \in \mathbf{R}^{m}
\end{aligned}
$$

where $y^{t}$ is the target class, $x$ is the original input sample, $r$ is the perturbation noise, and $x+r$ is the resulting adversarial example, written $x^{A}$ in the rest of this article.

2. White-box attack techniques

2.1 Attack methods based on direct optimization

Characteristics: these methods optimize the objective function directly. The adversarial perturbations they generate are relatively small, but optimization is slow, and finding suitable hyperparameters takes a lot of time.

2.1.1 Box-constrained L-BFGS attack

Principle: Lagrangian relaxation turns the constraint $f(x+r)=y^{t}$ into a loss term $\operatorname{loss}_{f}(x+r, y^{t})$ that is optimized together with the perturbation size, where $\operatorname{loss}_{f}$ is the cross-entropy loss. The final optimization objective is:

$$
\text{Minimize } c\|r\|_{2}+\operatorname{loss}_{f}\left(x+r, y^{t}\right) \quad \text{s.t. } x+r \in [0,1]^{m}
$$

Here the input image is normalized to $[0,1]$, which provides the box constraint that lets the objective be solved with the box-constrained L-BFGS algorithm.

Solution approach: this is a targeted attack. First fix the hyperparameter $c$ and find the optimal solution for that value, then run a line search over $c$ to find the optimal adversarial perturbation $r$ that satisfies $f(x+r)=y^{t}$. The final adversarial example is $x+r$.

Characteristics: it was the first adversarial attack algorithm to be designed, and the first to formulate the generation of adversarial examples as an optimization problem. It is an important optimization-based attack.
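The fix-$c$-then-search procedure can be sketched in plain Python on a toy model. Everything here is an assumption for illustration: a 2-input, 3-class linear softmax classifier (`W`, `b`), a coarse grid of `c` values standing in for the line search, and projected gradient descent standing in for L-BFGS so that the sketch needs no dependencies (with SciPy, `scipy.optimize.minimize(..., method="L-BFGS-B", bounds=...)` would be the closer match).

```python
import math

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def logits(W, b, x):
    return [sum(wj * xj for wj, xj in zip(row, x)) + bk for row, bk in zip(W, b)]

def predict(W, b, x):
    z = logits(W, b, x)
    return z.index(max(z))

def objective_grad(W, b, x, r, t, c):
    """Gradient w.r.t. r of c*||r||_2 + CE(softmax(W(x + r) + b), t)."""
    p = softmax(logits(W, b, [xi + ri for xi, ri in zip(x, r)]))
    p[t] -= 1.0  # dCE/dz = p - onehot(t)
    g_ce = [sum(p[k] * W[k][j] for k in range(len(W))) for j in range(len(x))]
    norm = math.sqrt(sum(ri * ri for ri in r))
    g_norm = [ri / norm for ri in r] if norm > 0 else [0.0] * len(r)  # subgradient 0 at r = 0
    return [c * gn + gc for gn, gc in zip(g_norm, g_ce)]

def solve_fixed_c(W, b, x, t, c, lr=0.1, steps=500):
    """Minimize the objective for a fixed c; after each step project x + r into [0, 1]^m."""
    r = [0.0] * len(x)
    for _ in range(steps):
        g = objective_grad(W, b, x, r, t, c)
        r = [min(max(xi + ri - lr * gi, 0.0), 1.0) - xi for xi, ri, gi in zip(x, r, g)]
    return r

def box_constrained_attack(W, b, x, t, cs=(0.01, 0.1, 1.0, 10.0)):
    """Try each c; keep (norm, c, r) for the smallest r with predict(x + r) == t, else None."""
    best = None
    for c in cs:
        r = solve_fixed_c(W, b, x, t, c)
        if predict(W, b, [xi + ri for xi, ri in zip(x, r)]) == t:
            n = math.sqrt(sum(ri * ri for ri in r))
            if best is None or n < best[0]:
                best = (n, c, r)
    return best

# Toy usage: x is classified as class 0; ask for target class t = 1.
W = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
b = [0.0, 0.0, 0.5]
x = [0.9, 0.2]
result = box_constrained_attack(W, b, x, t=1)
```

A larger `c` weights the perturbation norm more heavily, so the grid trades attack success against perturbation size; the sketch simply keeps the smallest successful perturbation.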

2.1.2 C&W attack

Characteristics: the attack can constrain the perturbation with the $l_0$, $l_2$, or $l_\infty$ norm and is currently one of the stronger targeted attack algorithms. It is a direct-optimization attack and an improved version of the box-constrained L-BFGS algorithm.

Improvement 1: the C&W attack takes the relationship between the target class and the other classes into account and uses a better loss function:

$$
\operatorname{loss}_{f, t}\left(x^{A}\right)=\max\left(\max\left\{Z\left(x^{A}\right)_{i}: i \neq t\right\}-Z\left(x^{A}\right)_{t},\,-k\right)
$$

where $Z(x^{A})=\operatorname{Logits}(x^{A})$ is the output of the layer before the Softmax of the target network, $i$ is a class label, $t$ is the target class of the attack, and $k$ is a confidence parameter: the larger $k$ is, the higher the attack success rate of the generated adversarial example.
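A minimal sketch of the margin loss above, operating on a plain list of logits $Z(x^A)$; the function name `cw_loss` is hypothetical. The loss stops decreasing once the target logit beats every other logit by $k$, which is why a larger $k$ yields higher-confidence adversarial examples.

```python
def cw_loss(logits, t, k=0.0):
    """C&W targeted margin loss: max(max_{i != t} Z_i - Z_t, -k)."""
    best_other = max(z for i, z in enumerate(logits) if i != t)
    return max(best_other - logits[t], -k)

# Target class 1 is not yet the top logit: the positive loss pushes Z_1 up.
print(cw_loss([2.0, 1.0, 0.5], t=1))          # 1.0
# Target already wins by 2.0 > k = 0.5: the loss is clamped at -k.
print(cw_loss([0.0, 3.0, 1.0], t=1, k=0.5))   # -0.5
```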

Improvement 2: the box constraint in the formula above is removed, which turns the problem into an unconstrained optimization problem that can be solved with gradient descent, momentum gradient descent, Adam, and similar algorithms. Two effective ways to achieve this are:

- Method ①: following the idea of projected gradient descent, clip $x+r$ into $[0,1]^{m}$ after each update, which removes the interval constraint on $x+r$.
  - Advantage: the generated adversarial examples have strong attack capability.
  - Disadvantage: the method that introduces a new variable (method ②) produces smaller perturbations, and clipping $x+r$ loses gradient information.
- Method ②: introduce a new variable $\omega \in (-\infty,+\infty)$ and construct a mapping function from $(-\infty,+\infty)$ to $[0,1]$. Optimizing over $\omega$ removes the optimization error that method ① incurs from the condition $x+r \in [0,1]^{m}$.
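Method ② is usually implemented with the tanh substitution from the C&W paper, $x+r=\tfrac{1}{2}(\tanh(\omega)+1)$, which maps any real $\omega$ into $[0,1]$. The sketch below (plain Python; the helper names and the toy loss are assumptions) shows the mapping, its inverse, and the chain-rule factor that turns a gradient with respect to the image into a gradient with respect to $\omega$.

```python
import math

def to_image(w):
    """Map unconstrained w to the box: x + r = 0.5 * (tanh(w) + 1), always within [0, 1]."""
    return [0.5 * (math.tanh(wi) + 1.0) for wi in w]

def to_w(x, eps=1e-6):
    """Inverse map via atanh; x is clamped away from 0 and 1 so atanh stays finite."""
    return [math.atanh(2.0 * min(max(xi, eps), 1.0 - eps) - 1.0) for xi in x]

def grad_wrt_w(grad_x, w):
    """Chain rule: dL/dw = dL/dx * dx/dw, where dx/dw = 0.5 * (1 - tanh(w)^2)."""
    return [gx * 0.5 * (1.0 - math.tanh(wi) ** 2) for gx, wi in zip(grad_x, w)]

# Toy loss L(x) = sum((x_i - 1.5)^2), whose unconstrained minimum lies outside [0, 1].
x0 = [0.2, 0.9]
w = to_w(x0)
for _ in range(200):
    x = to_image(w)
    grad_x = [2.0 * (xi - 1.5) for xi in x]
    w = [wi - 1.0 * gi for wi, gi in zip(w, grad_wrt_w(grad_x, w))]
x_final = to_image(w)  # moves toward 1.0 but never leaves [0, 1]; no clipping needed
```

Because the box constraint is built into the parameterization, any unconstrained optimizer (gradient descent, momentum, Adam) can be applied to $\omega$ directly.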

When choosing the optimization algorithm, gradient descent, momentum gradient descent, and Adam all generate adversarial examples of the same quality, but Adam converges faster than the other two.

Advantage: it generates adversarial examples that remain effective against networks protected by defensive distillation. It is currently one of the stronger white-box attacks and one of the main benchmark algorithms used to evaluate model robustness.

2.2 Attack methods based on gradient optimization

Core idea: perturb the input sample along the direction in which the model's loss function changes, so that the model misclassifies the sample or assigns it to a specified incorrect target class.

Advantage: these methods are simple to implement and achieve high success rates in the white-box setting; they are currently the mainstream adversarial attack techniques.

2.2.1 FGSM attack (adversarial example generation from a single gradient step)

Principle: align the adversarial perturbation with the direction of the model's loss gradient. Specifically, in an untargeted attack, the perturbation moves the input $x$ in the direction that increases the loss function, which causes the model to misclassify it.

Algorithm: FGSM is described as:

$$
x^{A}=x+\alpha \operatorname{sign}\left(\nabla_{x} J(\theta, x, y)\right)
$$

where the hyperparameter $\alpha$ is the step size of the single gradient step and $\operatorname{sign}(\cdot)$ is the sign function. Every coordinate therefore changes by at most $\alpha$, so the perturbation generated by this method is an adversarial perturbation under an $l_{\infty}$ norm constraint.
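A dependency-free sketch of the FGSM update. The model is an assumption chosen so the input gradient has a closed form: binary logistic regression $p(y=1\mid x)=\sigma(w^\top x+b)$ with cross-entropy loss $J$, for which $\nabla_x J=(\sigma(w^\top x+b)-y)\,w$. The names `fgsm` and `bce_input_grad` are hypothetical.

```python
import math

def sign(v):
    """Sign of a scalar: +1, -1, or 0."""
    return (v > 0) - (v < 0)

def fgsm(x, grad, alpha):
    """One-step FGSM: x_A = x + alpha * sign(grad), where grad = dJ/dx evaluated at x."""
    return [xi + alpha * sign(gi) for xi, gi in zip(x, grad)]

def bce_input_grad(w, b, x, y):
    """Toy model (assumption): logistic regression p(y=1|x) = sigmoid(w.x + b) with
    binary cross-entropy J; its input gradient is dJ/dx = (sigmoid(w.x + b) - y) * w."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    p = 1.0 / (1.0 + math.exp(-z))
    return [(p - y) * wi for wi in w]

w, b = [1.0, -2.0], 0.0
x, y = [0.5, 0.1], 1            # w.x + b = 0.3 > 0, so x is classified as 1
x_adv = fgsm(x, bce_input_grad(w, b, x, y), alpha=0.2)
# x_adv is about [0.3, 0.3]; w.x_adv + b is about -0.3, so the prediction flips to 0.
```

Each coordinate moves by exactly $\alpha$ unless its gradient component is zero, which is what bounds the perturbation in the $l_\infty$ norm.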

Advantage: the generated adversarial examples transfer well to other models. The work was highly influential: most later gradient-based attacks are variants of FGSM.

Disadvantage: because the gradient is computed only once, its attack capability is limited, and the method only works reliably if the loss function is approximately linear in a local neighborhood. Where the loss is nonlinear, a large step along the gradient direction does not guarantee a successful attack.

2.2.2 I-FGSM attack (iterative FGSM)

Principle: an iterative version of FGSM that takes several small steps, so that the linearity assumption approximately holds within each step.

Algorithm: the untargeted I-FGSM attack is described as:

$$
x_{0}^{A}=x,\quad x_{i+1}^{A}=\operatorname{Clip}\left(x_{i}^{A}+\alpha \operatorname{sign}\left(\nabla_{x} J\left(\theta, x_{i}^{A}, y\right)\right)\right)
$$

where $x_{i+1}^{A}$ is the adversarial example obtained after the $(i+1)$-th iteration, the hyperparameter $\alpha$ is the step size of each gradient step, and $\operatorname{Clip}(\cdot)$ clips any $x^{A}$ that leaves the allowed range back into that range.
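A sketch of the I-FGSM loop that takes the gradient as a callback `grad_fn(x)`, so any differentiable model can be plugged in. $\operatorname{Clip}$ is assumed here to be the usual choice: stay within an $l_\infty$ ball of radius `eps` around $x$ and inside the pixel range $[0,1]$ (the text above only says "the allowed range"). The toy logistic-regression gradient is likewise an assumption.

```python
import math

def sign(v):
    return (v > 0) - (v < 0)

def clip(x_adv, x, eps, lo=0.0, hi=1.0):
    """Assumed Clip(.): stay within eps of x per coordinate and inside [lo, hi]."""
    return [min(max(a, xi - eps, lo), xi + eps, hi) for a, xi in zip(x_adv, x)]

def i_fgsm(x, grad_fn, alpha, eps, steps):
    """Untargeted I-FGSM: x_{i+1} = Clip(x_i + alpha * sign(grad_fn(x_i)))."""
    x_adv = list(x)
    for _ in range(steps):
        g = grad_fn(x_adv)
        x_adv = clip([a + alpha * sign(gi) for a, gi in zip(x_adv, g)], x, eps)
    return x_adv

def make_bce_grad(w, b, y):
    """Toy model (assumption): logistic regression; returns x -> (sigmoid(w.x + b) - y) * w."""
    def grad_fn(x):
        z = sum(wi * xi for wi, xi in zip(w, x)) + b
        p = 1.0 / (1.0 + math.exp(-z))
        return [(p - y) * wi for wi in w]
    return grad_fn

x = [0.5, 0.1]
x_adv = i_fgsm(x, make_bce_grad([1.0, -2.0], 0.0, y=1), alpha=0.05, eps=0.2, steps=10)
# Ten steps of 0.05 would move 0.5 per coordinate, but Clip stops at eps = 0.2: x_adv is about [0.3, 0.3].
```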

Advantage: the adversarial examples it generates are stronger than those of FGSM.

Disadvantage: the generated adversarial examples transfer less well than those of FGSM.

2.2.3 PGD attack (iterative FGSM variant similar to I-FGSM)

Principle: compared with I-FGSM, PGD runs more iterations and randomly initializes $x^{A}$ with noise before iterating, to avoid saddle points that may be encountered during optimization.
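A sketch of $l_\infty$ PGD as described: a uniformly random start inside the $\epsilon$-ball around $x$ (the noise distribution is an assumption), followed by signed-gradient steps, each projected back onto the ball and the $[0,1]$ range. The toy linear loss with a constant gradient is also an assumption, chosen so the result can be checked by hand.

```python
import random

def sign(v):
    return (v > 0) - (v < 0)

def project(x_adv, x, eps, lo=0.0, hi=1.0):
    """Project onto the l_inf ball of radius eps around x, intersected with [lo, hi]."""
    return [min(max(a, xi - eps, lo), xi + eps, hi) for a, xi in zip(x_adv, x)]

def pgd(x, grad_fn, alpha, eps, steps, seed=0):
    """l_inf PGD sketch: random start inside the eps-ball, then projected signed-gradient ascent."""
    rng = random.Random(seed)
    x_adv = project([xi + rng.uniform(-eps, eps) for xi in x], x, eps)  # random initialization
    for _ in range(steps):
        g = grad_fn(x_adv)
        x_adv = project([a + alpha * sign(gi) for a, gi in zip(x_adv, g)], x, eps)
    return x_adv

# Toy linear loss J(x) = -x_0 + 2 * x_1 (assumption), so its gradient is constant.
x = [0.5, 0.1]
x_adv = pgd(x, lambda z: [-1.0, 2.0], alpha=0.05, eps=0.2, steps=20)
# The random start depends on the seed, but 20 steps reach the corner of the box: about [0.3, 0.3].
```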

Advantage: it is currently regarded as the strongest white-box attack and is one of the benchmark algorithms for evaluating model robustness. Adversarial examples generated by PGD are stronger than those of I-FGSM.

Disadvantage: like I-FGSM, it transfers poorly.

2.2.4 MI-FGSM attack (momentum iterative FGSM)

Principle: momentum is introduced into the iterations of I-FGSM. A velocity vector accumulated along the loss-gradient direction stabilizes the update direction, so the optimization is less likely to get stuck in a poor local optimum.

Algorithm: the algorithm is described as:

$$
\begin{aligned}
g_{i+1} &= \mu g_{i}+\frac{\nabla_{x} J\left(x_{i}^{A}, y\right)}{\left\|\nabla_{x} J\left(x_{i}^{A}, y\right)\right\|_{1}} \\
x_{i+1}^{A} &= x_{i}^{A}+\alpha \operatorname{sign}\left(g_{i+1}\right)
\end{aligned}
$$

where $g_{i+1}$ is the accumulated gradient momentum after the $(i+1)$-th iteration and $\mu$ is the decay factor of the momentum term; when $\mu=0$, the update reduces to I-FGSM. Because the gradients obtained in different iterations are not on the same scale, the current gradient $\nabla_{x} J(x_{i}^{A}, y)$ is normalized by its own $l_{1}$ norm in each iteration.
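A sketch of the MI-FGSM update with a toy gradient that flips sign partway through, which makes the effect of momentum visible. The `clip` step is an assumption borrowed from the I-FGSM formula (the update above omits it), and `osc_grad` is a hypothetical stand-in for a real model's input gradient.

```python
def sign(v):
    return (v > 0) - (v < 0)

def clip(x_adv, x, eps, lo=0.0, hi=1.0):
    return [min(max(a, xi - eps, lo), xi + eps, hi) for a, xi in zip(x_adv, x)]

def mi_fgsm(x, grad_fn, alpha, eps, steps, mu=1.0):
    """g_{i+1} = mu * g_i + grad / ||grad||_1 ; x_{i+1} = Clip(x_i + alpha * sign(g_{i+1}))."""
    g_acc = [0.0] * len(x)
    x_adv = list(x)
    for _ in range(steps):
        grad = grad_fn(x_adv)
        l1 = sum(abs(v) for v in grad) or 1.0  # avoid dividing by zero for a zero gradient
        g_acc = [mu * ga + v / l1 for ga, v in zip(g_acc, grad)]
        x_adv = clip([a + alpha * sign(ga) for a, ga in zip(x_adv, g_acc)], x, eps)
    return x_adv

# Toy gradient (assumption): its first component flips sign once x_0 passes 0.55.
def osc_grad(x):
    return [1.0 if x[0] < 0.55 else -0.4, 1.0]

with_momentum = mi_fgsm([0.5, 0.5], osc_grad, alpha=0.1, eps=0.3, steps=2, mu=1.0)
no_momentum = mi_fgsm([0.5, 0.5], osc_grad, alpha=0.1, eps=0.3, steps=2, mu=0.0)
# with_momentum[0] is about 0.7 (the accumulated direction is kept);
# no_momentum[0] falls back to about 0.5, as plain I-FGSM would.
```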

Advantage: it keeps strong attack capability while retaining a degree of transferability, and is a commonly used white-box attack.

2.3 Attack methods based on decision boundary analysis

Core idea: a special kind of adversarial attack that makes the model misclassify a sample by gradually reducing the distance between the sample and the model's decision boundary. The generated perturbations are therefore generally small, but these methods cannot perform targeted attacks.

2.3.1 DeepFool attack (generates an adversarial perturbation for a single input image)

Characteristics: the perturbation generated by DeepFool is very small and is generally regarded as an approximation of the minimal perturbation.

Algorithm:

- For a linear binary classification problem, take the classifier $\hat{k}(x)=\operatorname{sign}(f(x))$ with $f(x)=\omega^{\top} x+b$. Its decision boundary is $F=\{x: f(x)=0\}$, as shown in the figure. To push the current data point $x_0$ to the other side of the decision boundary, the minimal perturbation is the orthogonal projection of $x_0$ onto $F$, $r_{*}(x_{0}) \equiv \arg\min_{r}\|r\|_{2}$ subject to $\operatorname{sign}(f(x_0+r)) \neq \operatorname{sign}(f(x_0))$. From $f(x)=\omega^{\top} x+b$ it follows that $r_{*}(x_{0})=-\frac{f(x_{0})}{\|\omega\|_{2}^{2}}\,\omega$; this is the minimal perturbation when the model's decision boundary is linear.
- For a nonlinear binary classification problem, the minimal perturbation $r_{*}(x_0)$ of $x_0$ is approximated iteratively: at each step $f$ is linearized around the current point and the linear-case step is applied.
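A sketch of binary DeepFool: each iteration applies the closed-form linear step $-\frac{f(x_i)}{\|\nabla f(x_i)\|_2^2}\nabla f(x_i)$ at the current point. The small overshoot factor (0.02 here, an assumed value) scales the accumulated step so that the point actually crosses the boundary instead of landing exactly on it. The function name and both toy classifiers are illustrative assumptions.

```python
import math

def deepfool_binary(f, grad_f, x0, max_iter=50, overshoot=0.02):
    """DeepFool sketch for a binary classifier sign(f(x)).

    Each iteration linearizes f at the current point and adds the closed-form
    minimal step; the accumulated step is scaled by (1 + overshoot)."""
    side = f(x0) > 0
    r_total = [0.0] * len(x0)
    x = list(x0)
    for _ in range(max_iter):
        if (f(x) > 0) != side:
            break
        g = grad_f(x)
        scale = -f(x) / sum(v * v for v in g)
        r_total = [rt + scale * v for rt, v in zip(r_total, g)]
        x = [xi + (1.0 + overshoot) * rt for xi, rt in zip(x0, r_total)]
    return [(1.0 + overshoot) * rt for rt in r_total]

# Linear case f(x) = w.x + b: one step, with ||r|| = |f(x0)| / ||w|| before overshoot.
w, b = [3.0, 4.0], -5.0
f_lin = lambda x: sum(wi * xi for wi, xi in zip(w, x)) + b
r_lin = deepfool_binary(f_lin, lambda x: w, [1.0, 1.0])
# Nonlinear case: the unit circle f(x) = x_0^2 + x_1^2 - 1, starting outside at (2, 0).
f_circ = lambda x: x[0] ** 2 + x[1] ** 2 - 1.0
r_circ = deepfool_binary(f_circ, lambda x: [2.0 * x[0], 2.0 * x[1]], [2.0, 0.0])
```

For the linear case the perturbation has norm $|f(x_0)|/\|\omega\|_2 = 2/5$ before overshoot, matching the closed form; for the circle the iterations converge to a step of length about 1, the distance from $(2,0)$ to the boundary.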

2.3.2 UAPs attack (generates a universal adversarial perturbation for a target model and an entire dataset)

Principle: Moosavi-Dezfooli et al. found that deep learning models admit universal adversarial perturbations that do not depend on the input sample; such a perturbation depends on the structure of the target model and the characteristics of the dataset. Adding the universal perturbation to the samples of the dataset yields adversarial examples, most of which successfully attack the model.

Algorithm: UAPs computes the universal perturbation iteratively over a small number of sampled data points, using DeepFool to compute the perturbation update in each iteration. After many iterations, the data points are pushed to the other side of the model's decision boundary, which achieves the attack.
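A compressed sketch of the UAPs loop for a binary classifier $\operatorname{sign}(f(x))$, using a DeepFool step for each point that the current perturbation does not yet fool, as the text describes. The $l_2$ projection radius `radius` and the stopping fooling rate `target_rate` are assumed parameter names (the UAP paper calls them $\xi$ and $1-\delta$); the toy data and linear model are illustrative.

```python
import math

def deepfool_binary(f, grad_f, x0, max_iter=50, overshoot=0.02):
    """Minimal binary DeepFool: perturbation that pushes x0 across f = 0."""
    side, r, x = f(x0) > 0, [0.0] * len(x0), list(x0)
    for _ in range(max_iter):
        if (f(x) > 0) != side:
            break
        g = grad_f(x)
        scale = -f(x) / sum(v * v for v in g)
        r = [ri + scale * v for ri, v in zip(r, g)]
        x = [xi + (1.0 + overshoot) * ri for xi, ri in zip(x0, r)]
    return [(1.0 + overshoot) * ri for ri in r]

def project_l2(v, radius):
    """Scale v back onto the l2 ball of the given radius if it lies outside."""
    n = math.sqrt(sum(vi * vi for vi in v))
    return v if n <= radius else [vi * radius / n for vi in v]

def fooling_rate(f, data, v):
    """Fraction of points whose side of f = 0 changes when v is added."""
    fooled = sum((f([a + b for a, b in zip(x, v)]) > 0) != (f(x) > 0) for x in data)
    return fooled / len(data)

def universal_perturbation(f, grad_f, data, radius, target_rate=0.8, max_epochs=10):
    v = [0.0] * len(data[0])
    for _ in range(max_epochs):
        for x in data:
            xv = [a + b for a, b in zip(x, v)]
            if (f(xv) > 0) == (f(x) > 0):            # v does not fool x yet
                dr = deepfool_binary(f, grad_f, xv)   # minimal extra push for x + v
                v = project_l2([a + b for a, b in zip(v, dr)], radius)
        if fooling_rate(f, data, v) >= target_rate:
            break
    return v

# Toy linear model f(x) = x_0 (assumption); every point starts on the positive side.
f = lambda x: x[0]
grad_f = lambda x: [1.0, 0.0]
data = [[0.2, 0.0], [0.5, 1.0], [0.3, -1.0], [0.1, 0.5]]
v = universal_perturbation(f, grad_f, data, radius=1.0, target_rate=1.0)
```

If `radius` is too small to fool every point, the loop runs for `max_epochs` and returns the last perturbation it reached, which is why UAPs cannot guarantee success on any particular data point.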

2.4 Attack methods based on generative neural networks

Characteristics: these methods train a generative neural network in a self-supervised manner to generate adversarial examples. Once the generative model has been trained, it can efficiently generate large numbers of adversarial examples with good transferability.

2.4.1 ATN attack (adversarial examples generated by a generative neural network)

Algorithm: an ATN (adversarial transformation network) converts an input sample into an adversarial example against the target model. It is defined as $g_{f, \theta}(x): x \in X \rightarrow x^{A}$, where $\theta$ are the parameters of the neural network $g$ and $f$ is the target network under attack. For a targeted attack, training the ATN $g$ can be described as the following optimization objective:

$$
\underset{\theta}{\arg\min} \sum_{x_{i} \in X} \beta L_{X}\left(g_{f, \theta}(x_{i}), x_{i}\right)+L_{Y}\left(f\left(g_{f, \theta}(x_{i})\right), f(x_{i})\right)
$$

where $\beta$ weights the two terms, $L_X$ is the visual loss, commonly the $l_2$ norm, and $L_Y$ is the class loss, defined as $L_{Y}=L_{2}\left(y^{\prime}, r(y, t)\right)$ with $y=f(x)$ and $y^{\prime}=f(g_{f}(x))$. Here $t$ is the target class and $r(\cdot)$ is a reranking function that modifies $y$ so that $y_{k}<y_{t}$ for all $k \neq t$.
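The reranking function is the least obvious part of $L_Y$. Below is a minimal sketch of the form proposed in the ATN paper, as we understand it: raise the target entry to $\gamma\cdot\max(y)$ with $\gamma>1$ and renormalize, so the target becomes the top class while the other classes keep their relative order. The name `gamma` (chosen to avoid clashing with $\alpha$ elsewhere in this article) and the squared-error form of $L_2$ are assumptions.

```python
def rerank(y, t, gamma=1.5):
    """r(y, t): set y_t to gamma * max(y) (gamma > 1), then renormalize to sum to 1."""
    z = [gamma * max(y) if k == t else yk for k, yk in enumerate(y)]
    s = sum(z)
    return [v / s for v in z]

def class_loss(y_adv, y, t, gamma=1.5):
    """L_Y = L2(y', r(y, t)), taken here as the squared Euclidean distance."""
    target = rerank(y, t, gamma)
    return sum((a - b) ** 2 for a, b in zip(y_adv, target))

y = [0.7, 0.2, 0.1]        # model output f(x) on a clean input (toy numbers)
r = rerank(y, t=2)         # about [0.359, 0.103, 0.538]: class 2 is now on top
```

Because $r(y,t)$ stays close to $y$ apart from the target entry, $L_Y$ asks the ATN for the smallest change in the model's output that makes $t$ the top class.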

Advantage: it generates adversarial examples quickly, and they have strong attack capability.

Disadvantage: weak transferability.

2.4.2 UAN attack (universal adversarial perturbations generated by a neural network)

Principle: UAN trains a simple deconvolutional neural network to turn random noise sampled from the natural distribution $N(0,1)^{100}$ into a universal adversarial perturbation.

Algorithm: Hayes et al. designed the following objective for training the deconvolutional network:

$$
L_{t}=\max\left\{\max_{i \neq y^{\prime}} \log\left[f\left(\delta^{\prime}+x\right)\right]_{i}-\log\left[f\left(\delta^{\prime}+x\right)\right]_{y^{\prime}},\,-k\right\}+\alpha\left\|\delta^{\prime}\right\|_{p}
$$

where the model loss is the same margin loss as in the C&W attack, $\delta^{\prime}$ is the generated perturbation, $y^{\prime}$ is the class chosen as the target of the attack, and $\alpha$ controls the relative importance of the perturbation size and the attack term.
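A sketch of $L_t$ for one sample, assuming $f$ outputs softmax probabilities computed from a list of logits (`logits_adv` is the model's output on $x+\delta'$). Because the log-softmax subtracts the same constant from every entry, the log-probability margin equals the logit margin, which is why this term behaves like the C&W loss. The function names are hypothetical.

```python
import math

def log_softmax(z):
    m = max(z)
    lse = m + math.log(sum(math.exp(v - m) for v in z))
    return [v - lse for v in z]

def lp_norm(v, p):
    if p == math.inf:
        return max(abs(x) for x in v)
    return sum(abs(x) ** p for x in v) ** (1.0 / p)

def uan_targeted_loss(logits_adv, delta, target, k=0.0, alpha=0.1, p=2):
    """max{ max_{i != y'} log f_i - log f_{y'}, -k } + alpha * ||delta||_p."""
    logp = log_softmax(logits_adv)
    margin = max(v for i, v in enumerate(logp) if i != target) - logp[target]
    return max(margin, -k) + alpha * lp_norm(delta, p)

# Target 0 already wins by 1.0 > k = 0.5, so only the norm term remains above -k.
loss_l2 = uan_targeted_loss([2.0, 1.0, 0.0], delta=[3.0, 4.0], target=0, k=0.5, alpha=0.1, p=2)
loss_inf = uan_targeted_loss([2.0, 1.0, 0.0], delta=[3.0, 4.0], target=0, k=0.5, alpha=0.1, p=math.inf)
# loss_l2 is about -0.5 + 0.1 * 5 = 0.0; loss_inf is about -0.5 + 0.1 * 4 = -0.1.
```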

Advantage: in practice, UAN generates universal adversarial perturbations with good attack capability under either the $l_2$ or the $l_\infty$ norm, and it is stronger than the earlier UAPs attack.

2.4.3 AdvGAN attack

Principle: Xiao et al. were the first to bring the idea of generative adversarial networks into generative-network-based attacks. They proposed AdvGAN, a network consisting of a generator, a discriminator, and the target model under attack. AdvGAN uses the adversarial loss term of GANs to keep the adversarial examples realistic.

Algorithm: the overall objective for training AdvGAN is:

$$
\mathcal{L}=\mathcal{L}_{\text{adv}}^{f}+\alpha \mathcal{L}_{\mathrm{GAN}}+\beta \mathcal{L}_{\text{hinge}}
$$

where $\alpha$ and $\beta$ control the relative importance of each term. The $\mathcal{L}_{\mathrm{GAN}}$ term makes the generated adversarial examples look like real samples, the $\mathcal{L}_{\text{adv}}^{f}$ term drives the attack on the target model $f$, and the $\mathcal{L}_{\text{hinge}}$ term bounds the magnitude of the perturbation.
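A sketch of how the three terms combine for one generator update on a batch. The concrete forms are assumptions based on the AdvGAN paper as we understand it: the generator's part of $\mathcal{L}_{\mathrm{GAN}}$ is the mean of $\log(1-D(x+G(x)))$, and $\mathcal{L}_{\text{hinge}}$ is the mean of $\max(0,\|G(x)\|_2-c)$ for a user-chosen bound `c`. $\mathcal{L}_{\text{adv}}^f$ is passed in as a precomputed number (for example a C&W-style margin loss); all names are hypothetical.

```python
import math

def hinge_loss(perturbations, c):
    """Mean over the batch of max(0, ||G(x)||_2 - c): penalize only norms above the bound c."""
    norms = [math.sqrt(sum(v * v for v in p)) for p in perturbations]
    return sum(max(0.0, n - c) for n in norms) / len(norms)

def gan_generator_term(d_scores_fake):
    """Generator's part of L_GAN: mean of log(1 - D(x + G(x))), which G minimizes."""
    return sum(math.log(1.0 - d) for d in d_scores_fake) / len(d_scores_fake)

def advgan_objective(adv_loss, d_scores_fake, perturbations, alpha=1.0, beta=1.0, c=0.3):
    """Generator-side L = L_adv^f + alpha * L_GAN + beta * L_hinge."""
    return (adv_loss
            + alpha * gan_generator_term(d_scores_fake)
            + beta * hinge_loss(perturbations, c))

# One perturbation of l2 norm 0.5 exceeds c = 0.2 by 0.3; the other is within the bound.
h = hinge_loss([[0.3, 0.4], [0.0, 0.1]], c=0.2)   # about (0.3 + 0.0) / 2 = 0.15
total = advgan_objective(1.0, [0.5], [[0.3, 0.4]], alpha=1.0, beta=2.0, c=0.2)
```

The hinge form lets the generator use perturbations up to norm `c` for free, so $\beta$ only matters once that budget is exceeded.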

Advantage: once trained, the AdvGAN generator produces effective adversarial perturbations for new inputs efficiently, as shown in the figure.

2.5 Other attack methods

2.5.1 JSMA attack

Type: JSMA is an attack under an $l_0$ norm constraint; it makes the model misclassify the input sample by modifying a few pixels of the image.

Principle: JSMA uses a saliency map to express how strongly each input feature influences the prediction. It modifies a clean image one pixel at a time: at each step it computes the partial derivatives of the output of the model's last layer with respect to every input feature (the forward derivative), builds the saliency map from them, and uses the map to find the input features with the greatest influence on the model output. Modifying these high-impact features yields an effective adversarial example.
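A minimal sketch of one common form of the saliency map (for increasing feature values), $S(x,t)[i]=0$ if $J_{t,i}<0$ or $\sum_{k\neq t}J_{k,i}>0$, else $J_{t,i}\,\lvert\sum_{k\neq t}J_{k,i}\rvert$, together with the greedy loop. The model is an assumed toy linear 3-class classifier whose Jacobian with respect to the input is simply its weight matrix `W`. Modifying one feature per step follows the description above (the original JSMA paper searches over pixel pairs); setting a chosen feature to 1.0 and all names are assumptions.

```python
def logits(W, x):
    return [sum(wj * xj for wj, xj in zip(row, x)) for row in W]

def predict(W, x):
    z = logits(W, x)
    return z.index(max(z))

def saliency_map(jac, t, candidates):
    """S[i] = 0 if J[t][i] < 0 or sum_{k != t} J[k][i] > 0, else J[t][i] * |sum_{k != t} J[k][i]|."""
    scores = []
    for i in range(len(jac[0])):
        a = jac[t][i]                                          # effect on the target class
        b = sum(jac[k][i] for k in range(len(jac)) if k != t)  # effect on all other classes
        ok = i in candidates and a >= 0 and b <= 0
        scores.append(a * abs(b) if ok else 0.0)
    return scores

def jsma(W, x, t, max_pixels):
    """Greedily set the most salient remaining feature to 1.0 until the prediction is t."""
    x = list(x)
    candidates = set(range(len(x)))
    for _ in range(max_pixels):
        if predict(W, x) == t:
            break
        s = saliency_map(W, t, candidates)   # linear model: the Jacobian of the logits is W
        i = max(range(len(s)), key=s.__getitem__)
        if s[i] <= 0.0:
            break                            # no remaining feature helps
        x[i] = 1.0
        candidates.discard(i)
    return x

W = [[1.0, -0.5, 0.0, 0.0],
     [0.0, 1.0, 0.5, 0.0],
     [0.0, 0.0, -0.2, 1.0]]
x = [0.6, 0.1, 0.1, 0.0]          # predicted class 0
x_adv = jsma(W, x, t=1, max_pixels=2)
```

Feature 1 raises the target logit while lowering the others, so it gets the highest score; changing that single feature is enough to flip the prediction here.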

2.5.2 Single-pixel attack

Type: the single-pixel attack is based on the differential evolution algorithm.

Principle: the algorithm modifies only 1 pixel of a sample at a time in an attempt to make the model misclassify it.

Characteristics: in practice this is an extreme form of attack. It works well on simple datasets such as MNIST. When the pixel space of the input image is large, changing 1 pixel rarely affects the classification result, and as the image grows, the algorithm's search space grows rapidly and its performance degrades.
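A simplified sketch of the search: each candidate is a (row, column, value) triple, new candidates come from DE/rand/1 mutation $a+F(b-c)$, and a child replaces its parent only if it lowers the true-class probability. Omitting crossover, the population size, the generation count, and the toy 3x3 "model" are all assumptions chosen for illustration, not the published settings.

```python
import math
import random

def one_pixel_attack(image, true_prob, pop_size=40, generations=30, F=0.5, seed=0):
    """Simplified one-pixel attack: DE/rand/1 mutation, greedy selection, no crossover.

    image: list of rows with values in [0, 1].
    true_prob(img): probability of the true class, which the attacker minimizes."""
    rng = random.Random(seed)
    h, w = len(image), len(image[0])

    def repair(cand):
        r, c, v = cand
        return (min(max(r, 0.0), h - 1e-9), min(max(c, 0.0), w - 1e-9), min(max(v, 0.0), 1.0))

    def apply(cand):
        r, c, v = cand
        img = [row[:] for row in image]
        img[int(r)][int(c)] = v
        return img

    pop = [repair((rng.uniform(0, h), rng.uniform(0, w), rng.random())) for _ in range(pop_size)]
    fit = [true_prob(apply(p)) for p in pop]
    history = [min(fit)]
    for _ in range(generations):
        for i in range(pop_size):
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            child = repair(tuple(pa + F * (pb - pc) for pa, pb, pc in zip(pop[a], pop[b], pop[c])))
            f_child = true_prob(apply(child))
            if f_child <= fit[i]:                 # greedy selection: never get worse
                pop[i], fit[i] = child, f_child
        history.append(min(fit))
    best = min(range(pop_size), key=fit.__getitem__)
    r, c, v = pop[best]
    return (int(r), int(c), v), fit[best], history

# Toy 3x3 "model" (assumption): only pixels with (row + col) even carry full weight.
WEIGHTS = [[1.0 if (r + c) % 2 == 0 else 0.3 for c in range(3)] for r in range(3)]

def true_prob(img):
    s = sum(WEIGHTS[r][c] * img[r][c] for r in range(3) for c in range(3))
    return 1.0 / (1.0 + math.exp(10.0 * (s - 0.5)))  # the true class loses once s > 0.5

pixel, score, history = one_pixel_attack([[0.0] * 3 for _ in range(3)], true_prob)
```

On a real image the same loop searches over many more positions, which is the search-space growth that makes the attack harder on large inputs.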

2.5.3 stAdv attack

Type: stAdv generates adversarial examples by applying spatial transformations to image samples.

Principle: the algorithm performs a spatial-transformation attack on the input sample through operations such as translating and distorting local image features.

Characteristics: adversarial examples generated by stAdv look more realistic than those generated under traditional $l_p$ norm distance metrics, and stAdv attacks models trained with adversarial training effectively.

2.5.4 BPDA attack

Type: an attack on adversarial defenses that use the shattered-gradients strategy to defend against gradient-based attacks such as FGSM and I-FGSM.

Principle: the shattered-gradients strategy preprocesses input samples with a non-differentiable function $g(x)$, so that the model $f(g(x))$ is not differentiable with respect to $x$ and the attacker cannot compute the gradients needed to generate adversarial examples. BPDA (Backward Pass Differentiable Approximation) gets around this by running $g$ normally on the forward pass and substituting a differentiable approximation of $g$, such as the identity, on the backward pass.
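A minimal sketch of the identity approximation, assuming a quantization step as the non-differentiable preprocessing $g$ and a toy logistic-regression classifier as $f$. Because quantization is piecewise constant, the exact gradient of the defended model with respect to $x$ is zero almost everywhere; the sketch instead evaluates $\partial J/\partial z$ at $z=g(x)$ and uses it as the gradient with respect to $x$ inside an I-FGSM loop. All names, levels, and step sizes are assumptions.

```python
import math

def quantize(x, levels=4):
    """Non-differentiable preprocessing g(x): round each value to a multiple of 1/levels."""
    return [round(v * levels) / levels for v in x]

def sign(v):
    return (v > 0) - (v < 0)

def bpda_attack(loss_grad, predict, x, y, alpha=0.05, eps=0.3, steps=20):
    """I-FGSM against the defended model f(g(x)), with BPDA's approximation dg/dx ~= I."""
    x_adv = list(x)
    for _ in range(steps):
        if predict(quantize(x_adv)) != y:      # the defended model is already fooled
            break
        g = loss_grad(quantize(x_adv), y)      # forward through g, backward as if g were identity
        x_adv = [a + alpha * sign(gi) for a, gi in zip(x_adv, g)]
        x_adv = [min(max(a, xi - eps, 0.0), xi + eps, 1.0) for a, xi in zip(x_adv, x)]
    return x_adv

# Toy undefended model (assumption): logistic regression with weights w and bias b.
w, b = [1.0, -2.0], 0.1

def predict(z):
    return int(sum(wi * zi for wi, zi in zip(w, z)) + b > 0)

def loss_grad(z, y):
    """Cross-entropy input gradient (sigmoid(w.z + b) - y) * w at the preprocessed point z."""
    p = 1.0 / (1.0 + math.exp(-(sum(wi * zi for wi, zi in zip(w, z)) + b)))
    return [(p - y) * wi for wi in w]

x = [0.5, 0.1]
x_adv = bpda_attack(loss_grad, predict, x, y=1)
```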

Summary of adversarial attack algorithms

| Attack algorithm | Perturbation norm | Attack type | Advantages | Disadvantages |
|---|---|---|---|---|
| L-BFGS | $l_2$ | Single step | Adversarial examples transfer well; the first adversarial attack algorithm to be proposed | Optimizing the hyperparameter $c$ takes a lot of time |
| C&W | $l_0, l_2, l_\infty$ | Iterative | Strong against most defensive-distillation models, with small perturbations | Low attack efficiency; finding suitable hyperparameters takes time |
| FGSM | $l_\infty$ | Single step | Very efficient to generate; perturbations transfer well | A single gradient computation produces the adversarial example, so the perturbation is large |
| I-FGSM | $l_\infty$ | Iterative | Multi-step iteration gives strong attacks | Prone to overfitting; poor transferability |
| PGD | $l_\infty$ | Iterative | Stronger than I-FGSM | Poor transferability |
| MI-FGSM | $l_\infty$ | Iterative | Good attack strength and good transferability; converges faster | Weaker than PGD |
| DeepFool | $l_2, l_\infty$ | Iterative | Precise computation yields smaller perturbations | No targeted attack capability |
| UAPs | $l_2, l_\infty$ | Iterative | The universal perturbation transfers well | Success on any specific data point is not guaranteed |
| ATN | $l_\infty$ | Iterative | Can attack several target models at once; more diverse adversarial examples | Training the generator requires finding suitable hyperparameters |
| UAN | $l_2, l_\infty$ | Iterative | Generates perturbations quickly; stronger than UAPs | Training the generative model takes time |
| AdvGAN | $l_2$ | Iterative | Adversarial examples are visually very similar to real samples | Adversarial (GAN) training is unstable |
| JSMA | $l_0$ | Iterative | Adversarial examples are highly similar to real samples | Adversarial examples do not transfer |
| Single-pixel attack | $l_0$ | Iterative | Can attack by modifying only one pixel | Computationally expensive; only practical on small datasets |
| stAdv | - | Iterative | Effective against adversarial-training defenses | Effective only against specific defense strategies |
| BPDA | $l_\infty$ | Iterative | Effectively attacks models defended by gradient obfuscation | Works only against gradient-obfuscation defenses |

Reference: Wei Jiaxuan, "Overview of White-Box Adversarial Attack Techniques in Image Classification".

Copyright notice

This article was written by [Breeze_]. Please include a link to the original when reposting. Thanks.

https://yzsam.com/2022/04/202204230610322927.html

边栏推荐

猜你喜欢

【2021年新书推荐】Red Hat RHCSA 8 Cert Guide: EX200

![Android interview Online Economic encyclopedia [constantly updating...]](/img/48/dd1abec83ec0db7d68812f5fa9dcfc.png)

Android interview Online Economic encyclopedia [constantly updating...]

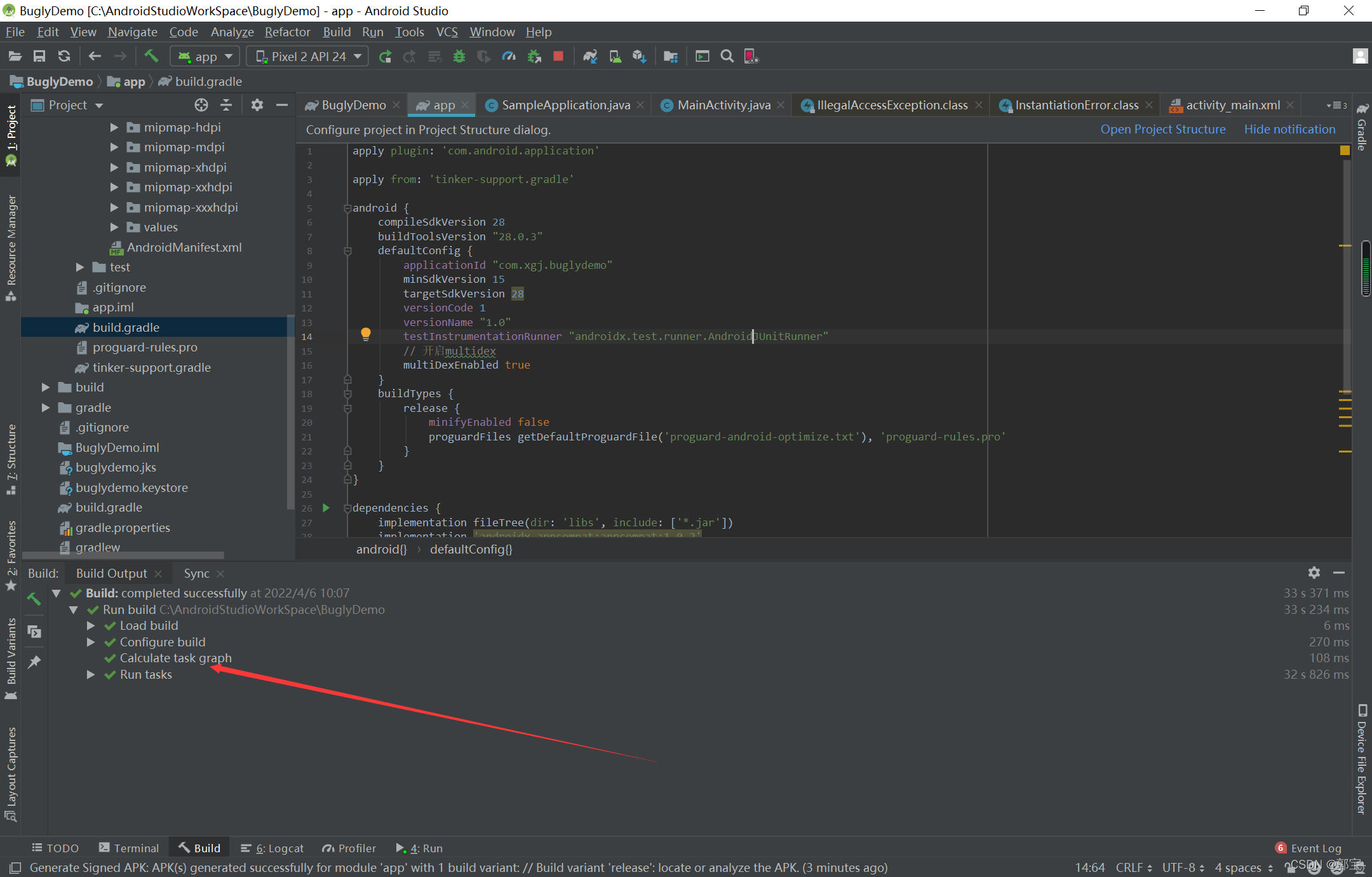

Project, how to package

PaddleOCR 图片文字提取

Cause: dx. jar is missing

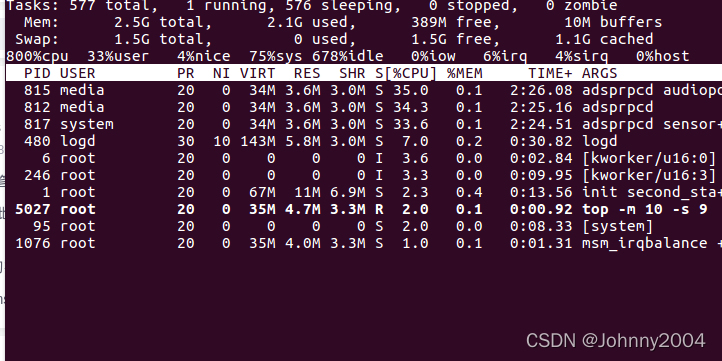

adb shell top 命令详解

第5 章 机器学习基础

免费使用OriginPro学习版

机器学习 二:基于鸢尾花(iris)数据集的逻辑回归分类

Viewpager2 realizes Gallery effect. After notifydatasetchanged, pagetransformer displays abnormal interface deformation

随机推荐

[Andorid] 通过JNI实现kernel与app进行spi通讯

【动态规划】杨辉三角

Android exposed components - ignored component security

取消远程依赖,用本地依赖

[recommendation for new books in 2021] professional azure SQL managed database administration

机器学习 三: 基于逻辑回归的分类预测

Bottom navigation bar based on bottomnavigationview

torch. mm() torch. sparse. mm() torch. bmm() torch. Mul () torch The difference between matmul()

BottomSheetDialogFragment + ViewPager+Fragment+RecyclerView 滑动问题

Component learning (2) arouter principle learning

Component learning

1.2 初试PyTorch神经网络

Reading notes - activity

Component based learning (3) path and group annotations in arouter

MySQL笔记4_主键自增长(auto_increment)

Cancel remote dependency and use local dependency

组件化学习(1)思想及实现方式

Pytorch模型保存与加载(示例)

MySQL笔记2_数据表

Fill the network gap