PyTorch Notes: Learning How to Build Networks by Implementing ResNet (Full Code)

2022-04-23 05:44:00 【umbrellalalalala】

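Reference: 《深度学习框架PyTorch:入门与实践》 (Deep Learning Framework PyTorch: Introduction and Practice)

Note: the book's author stresses writing concise code, so the way the network is assembled here is a pattern worth borrowing.

Full code

Parts of the code are explained in the comments: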

from torch import nn
import torch as t
from torch.nn import functional as F


# Sub-module: the Residual Block
class ResidualBlock(nn.Module):
    def __init__(self, inchannel, outchannel, stride=1, shortcut=None):
        super().__init__()
        self.left = nn.Sequential(
            # padding=1 pads one row/column on each of the four sides.
            # padding=(1, 1) does the same, written as (height, width):
            # one row on top and bottom, one column on left and right.
            nn.Conv2d(inchannel, outchannel, 3, stride, 1, bias=False),
            nn.BatchNorm2d(outchannel),
            # inplace=True modifies the incoming tensor directly instead of
            # allocating a new one, which saves memory.
            nn.ReLU(inplace=True),
            nn.Conv2d(outchannel, outchannel, 3, 1, 1, bias=False),
            nn.BatchNorm2d(outchannel))
        self.right = shortcut

    def forward(self, x):
        out = self.left(x)
        # Identity shortcut unless a projection module was supplied
        residual = x if self.right is None else self.right(x)
        out += residual
        return F.relu(out)
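The addition `out += residual` only works when both branches produce tensors of the same shape. The following is a minimal sketch of why a strided block needs a projection shortcut, and of the `padding=1` versus `padding=(1, 1)` equivalence mentioned in the comments. The input size (64 channels, 56×56) and the variable names are illustrative assumptions, not taken from the book.

```python
import torch
from torch import nn

# Hypothetical input: batch of 1, 64 channels, 56x56 feature map
x = torch.randn(1, 64, 56, 56)

# padding=1 and padding=(1, 1) pad identically, so the output shapes agree
conv_int = nn.Conv2d(64, 128, 3, stride=2, padding=1, bias=False)
conv_tuple = nn.Conv2d(64, 128, 3, stride=2, padding=(1, 1), bias=False)
left_shape = tuple(conv_int(x).shape)
tuple_shape = tuple(conv_tuple(x).shape)
print(left_shape)   # (1, 128, 28, 28)

# A 1x1 convolution with the same stride brings x to the matching shape,
# so it can be added to the output of the main branch
projection = nn.Conv2d(64, 128, 1, stride=2, bias=False)
right_shape = tuple(projection(x).shape)
print(right_shape)  # (1, 128, 28, 28)

# The raw input has a different shape and could not be added directly
print(tuple(x.shape) == left_shape)  # False
```

This is the role `shortcut` plays for the first block of each layer; later blocks keep both the channel count and the spatial size, so the plain identity is enough.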

class ResNet(nn.Module):
    def __init__(self, num_classes=1000):
        super().__init__()
        self.pre = nn.Sequential(
            nn.Conv2d(3, 64, 7, 2, 3, bias=False),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            # kernel_size, stride, padding
            nn.MaxPool2d(3, 2, 1))
        self.layer1 = self._make_layer(64, 128, 3)
        self.layer2 = self._make_layer(128, 256, 4, stride=2)
        self.layer3 = self._make_layer(256, 512, 6, stride=2)
        self.layer4 = self._make_layer(512, 512, 3, stride=2)
        self.fc = nn.Linear(512, num_classes)

    def _make_layer(self, inchannel, outchannel, block_num, stride=1):
        # Projection shortcut: 1x1 conv so the residual matches the
        # channel count and spatial size of the main branch
        shortcut = nn.Sequential(
            nn.Conv2d(inchannel, outchannel, 1, stride, bias=False),
            nn.BatchNorm2d(outchannel))
        layers = []
        # The first block changes the channel count from inchannel to outchannel
        layers.append(ResidualBlock(inchannel, outchannel, stride, shortcut))
        for _ in range(1, block_num):
            layers.append(ResidualBlock(outchannel, outchannel))
        # Given a list a = [1, 2, 3], *a unpacks it into the arguments 1, 2, 3
        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.pre(x)
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)
        x = F.avg_pool2d(x, 7)
        # Flatten to [batch, ...]: one row per sample
        x = x.view(x.size(0), -1)
        return self.fc(x)
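`_make_layer` collects its blocks in a Python list and hands them to `nn.Sequential` with the `*` operator. Below is a small sketch of that unpacking on its own. The three layers are arbitrary placeholders chosen for illustration, not part of the network above.

```python
from torch import nn

# Placeholder modules, only to illustrate argument unpacking
layers = [
    nn.Conv2d(3, 8, 3, padding=1),
    nn.ReLU(),
    nn.Conv2d(8, 8, 3, padding=1),
]

# *layers passes each list element as a separate positional argument ...
seq_unpacked = nn.Sequential(*layers)
# ... which is the same call as listing the modules by hand
seq_explicit = nn.Sequential(layers[0], layers[1], layers[2])

print(len(seq_unpacked))               # 3
print(seq_unpacked[0] is layers[0])    # True
```

Building the list first is what lets the number of blocks per layer (`block_num`) be a parameter instead of being hard-coded.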

Testing the forward pass

model = ResNet()
# Simulate a 224×224 image with 3 channels; the leading 1 is the batch size
input = t.randn(1, 3, 224, 224)
o = model(input)
o.shape

Output:

torch.Size([1, 1000])

The 1000 outputs correspond to the 1000 classes of the classification problem.
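To see why `F.avg_pool2d(x, 7)` and `nn.Linear(512, num_classes)` fit a 224×224 input, the spatial size can be traced through the network by hand. The sketch below uses the standard output-size formula for convolution and pooling layers, floor((size + 2·padding − kernel) / stride) + 1. The helper `conv_out` is a hypothetical name introduced here, and only the first convolution of each layer is traced, since the remaining ones keep the size unchanged.

```python
def conv_out(size, kernel, stride, padding):
    """Output size of a conv/pool layer along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1

sizes = [224]
sizes.append(conv_out(sizes[-1], 7, 2, 3))  # pre: 7x7 conv, stride 2
sizes.append(conv_out(sizes[-1], 3, 2, 1))  # pre: 3x3 max pool, stride 2
sizes.append(conv_out(sizes[-1], 3, 1, 1))  # layer1: stride 1
sizes.append(conv_out(sizes[-1], 3, 2, 1))  # layer2: stride 2
sizes.append(conv_out(sizes[-1], 3, 2, 1))  # layer3: stride 2
sizes.append(conv_out(sizes[-1], 3, 2, 1))  # layer4: stride 2
sizes.append(conv_out(sizes[-1], 7, 7, 0))  # avg_pool2d(x, 7), stride defaults to 7

print(sizes)  # [224, 112, 56, 56, 28, 14, 7, 1]

# layer4 outputs 512 channels, so flattening gives 512 * 1 * 1 features
flat_features = 512 * sizes[-1] * sizes[-1]
print(flat_features)  # 512
```

With a different input resolution the final feature map would no longer be 7×7, so the fixed pooling window and the 512-feature linear layer are tied to the 224×224 input used here.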

Copyright notice

This article was written by [umbrellalalalala]. Please include a link to the original when reposting. Thanks.

https://blog.csdn.net/umbrellalalalala/article/details/119981166

边栏推荐

- POI exports to excel, and the same row of data is automatically merged into cells

- 多个一维数组拆分合并为二维数组

- MySQL realizes master-slave replication / master-slave synchronization

- MySQL transaction

- Pytorch——数据加载和处理

- Multithreading and high concurrency (3) -- synchronized principle

- SQL注入

- filebrowser实现私有网盘

- mysql如何将存储的秒转换为日期

- 治療TensorFlow後遺症——簡單例子記錄torch.utils.data.dataset.Dataset重寫時的圖片維度問題

猜你喜欢

Configure domestic image accelerator for yarn

Anaconda installed pyqt5 and pyqt5 tools without designer Exe problem solving

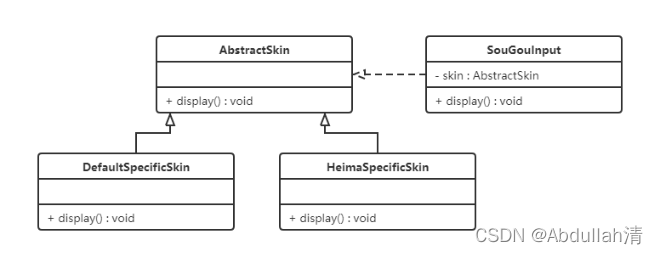

2-軟件設計原則

你不能访问此共享文件夹,因为你组织的安全策略阻止未经身份验证的来宾访问

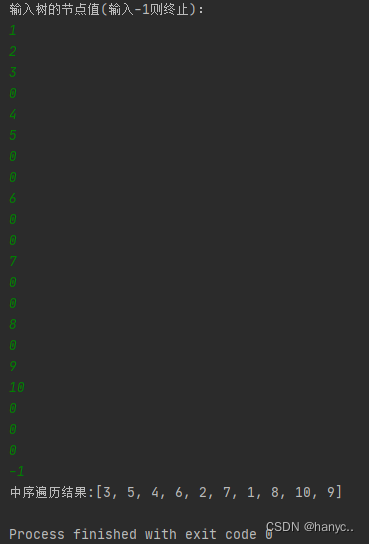

创建二叉树

lambda表达式

Latex quick start

SQL注入

Navicate连接oracle(11g)时ORA:28547 Connection to server failed probable Oeacle Net admin error

数字图像处理基础(冈萨雷斯)二:灰度变换与空间滤波

随机推荐

多线程与高并发(3)——synchronized原理

治療TensorFlow後遺症——簡單例子記錄torch.utils.data.dataset.Dataset重寫時的圖片維度問題

umi官网yarn create @umijs/umi-app 报错:文件名、目录名或卷标语法不正确

DBCP使用

jdbc入门\获取数据库连接\使用PreparedStatement

Hotkeys, interface visualization configuration (interface interaction)

编程记录——图片旋转函数scipy.ndimage.rotate()的简单使用和效果观察

异常的处理:抓抛模型

opensips(1)——安装opensips详细流程

Solve the error: importerror: iprogress not found Please update jupyter and ipywidgets

Pytorch学习记录(四):参数初始化

Pytorch学习记录(五):反向传播+基于梯度的优化器(SGD,Adagrad,RMSporp,Adam)

EditorConfig

mysql实现主从复制/主从同步

2 - principes de conception de logiciels

interviewter:介绍一下MySQL日期函数

手动删除eureka上已经注册的服务

Write your own redistemplate

Solution record of slow access speed of SMB service in redhat6

自定义异常类