[Code analysis (1)] Communication-Efficient Learning of Deep Networks from Decentralized Data

2022-04-23 13:47:00 【Silent city of the sky】

sampling.py

#!/usr/bin/env python
# -*- coding: utf-8 -*-
# Python version: 3.6

import numpy as np
from torchvision import datasets, transforms

# datasets: loaders for common datasets. Every dataset class inherits
# from torch.utils.data.Dataset; the module includes MNIST, CIFAR10/100,
# ImageNet, COCO, etc.
# transforms: common data-preprocessing operations, mainly conversion
# to tensors and operations on PIL Image objects.
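The `__main__` block at the end of the file loads MNIST with `transforms.ToTensor()` followed by `transforms.Normalize((0.1307,), (0.3081,))`, where 0.1307 and 0.3081 are the commonly used mean and standard deviation of MNIST pixel values. As a rough NumPy sketch of what that pair computes, assuming a single-channel H x W uint8 image (the function name is hypothetical and this is not torchvision code):

```python
import numpy as np

def to_tensor_then_normalize(img_uint8, mean=0.1307, std=0.3081):
    """Hypothetical NumPy stand-in for ToTensor() + Normalize((mean,), (std,))
    on one grayscale H x W uint8 image."""
    x = img_uint8.astype(np.float32) / 255.0  # ToTensor: scale pixels to [0, 1]
    x = x[np.newaxis, :, :]                   # ToTensor: add channel axis -> 1 x H x W
    return (x - mean) / std                   # Normalize: (x - mean) / std per channel

img = np.array([[0, 255], [128, 64]], dtype=np.uint8)
out = to_tensor_then_normalize(img)
print(out.shape)  # (1, 2, 2)
```

With these statistics a black pixel maps to about -0.424 and a white pixel to about 2.82, so the network sees inputs with roughly zero mean and unit variance.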

def mnist_iid(dataset, num_users):
    """
    Sample I.I.D. client data from MNIST dataset
    (IID = independent and identically distributed)
    :param dataset:
    :param num_users:
    :return: dict of image index
    """
    num_items = int(len(dataset)/num_users)
    # Number of samples per user. Because int() floors the quotient,
    # num_items * num_users <= len(dataset); any remainder is never assigned.
    dict_users, all_idxs = {}, [i for i in range(len(dataset))]
    # dict_users starts empty; all_idxs holds every sample index
    # [0, 1, ..., len(dataset)-1].
    for i in range(num_users):
        dict_users[i] = set(np.random.choice(all_idxs, num_items,
                                             replace=False))
        # Draw num_items distinct indices (replace=False) from the indices
        # not yet assigned, so every user gets a disjoint random subset.
        # numpy.random.choice(a, size=None, replace=True, p=None):
        # if a is a 1-D array, samples are drawn from its elements;
        # if a is an int, samples are drawn from range(a), i.e. 0 .. a-1.
        # After this step, e.g. dict_users == {0: {1, 3, 4, ...}}.
        all_idxs = list(set(all_idxs) - dict_users[i])
        # Remove the indices just assigned. After the last user, all_idxs
        # is empty when len(dataset) is divisible by num_users.
    return dict_users
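The set-difference loop in `mnist_iid` rebuilds a set of the remaining indices once per user. A minimal alternative sketch, assuming a seeded NumPy `Generator` is acceptable (this is a swapped-in technique, not the author's code, and `iid_split` is a hypothetical name), shuffles all indices once and slices the permutation:

```python
import numpy as np

def iid_split(num_samples, num_users, seed=0):
    """Hypothetical alternative to mnist_iid: shuffle all indices once and slice."""
    rng = np.random.default_rng(seed)
    num_items = num_samples // num_users    # same floor as int(len(dataset)/num_users)
    perm = rng.permutation(num_samples)     # one random ordering of 0..num_samples-1
    return {i: set(perm[i * num_items:(i + 1) * num_items].tolist())
            for i in range(num_users)}

parts = iid_split(60000, 100)
print(len(parts), len(parts[0]))  # 100 600
```

Each user still receives a disjoint, equally sized random subset, and the result has the same dict-of-sets shape as `mnist_iid`.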

def mnist_noniid(dataset, num_users):
    """
    Sample non-I.I.D client data from MNIST dataset
    :param dataset:
    :param num_users:
    :return:
    """
    # 60,000 training imgs --> 300 imgs/shard X 200 shards
    # A shard is a contiguous slice of the label-sorted index list.
    num_shards, num_imgs = 200, 300
    idx_shard = [i for i in range(num_shards)]  # [0, 1, 2, ..., 199]
    dict_users = {i: np.array([]) for i in range(num_users)}
    # Every user starts with an empty (float64) index array.
    idxs = np.arange(num_shards*num_imgs)
    # idxs: [    0     1     2 ... 59997 59998 59999]
    labels = dataset.targets.numpy()
    # labels: [5 0 4 ... 5 6 8], a 1-D array with one digit label (0-9) per
    # training image. Older torchvision versions expose this as
    # dataset.train_labels, which newer versions deprecate in favor of targets.

    # sort labels
    idxs_labels = np.vstack((idxs, labels))
    # Stack the two 1-D arrays into a 2 x 60000 array (just as
    # np.array([[1, 2], [3, 4]]) is a 2 x 2 array):
    # row 0 holds the sample indices, row 1 the labels.
    # [[    0     1     2 ... 59997 59998 59999]
    #  [    5     0     4 ...     5     6     8]]
    idxs_labels = idxs_labels[:, idxs_labels[1, :].argsort()]
    # Reorder the columns so that row 1 (the labels) is in ascending order;
    # row 0 moves with it, so each index stays paired with its label.
    idxs = idxs_labels[0, :]
    # idxs now lists sample indices grouped by label:
    # [30207  5662 55366 ... 23285 15728 11924]

    # divide and assign 2 shards/client
    for i in range(num_users):
        rand_set = set(np.random.choice(idx_shard, 2, replace=False))
        # Pick 2 distinct shard ids from the remaining ones, e.g. {8, 19}.
        idx_shard = list(set(idx_shard) - rand_set)
        # Remove them so that no shard is given to two users.
        for rand in rand_set:
            dict_users[i] = np.concatenate(
                (dict_users[i], idxs[rand*num_imgs:(rand+1)*num_imgs]), axis=0)
            # Append the 300 indices of shard rand; e.g. rand = 8 gives
            # idxs[2400:2700]. Each user ends up with 2 * 300 = 600 indices.
            # They are not in ascending order, and because they were
            # appended to an empty float64 array, they are stored as floats.
    return dict_users
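The sort-then-shard idea in `mnist_noniid` can be checked on toy data. The sketch below is a compact re-implementation under stated assumptions, not the author's code: `shard_split` is a hypothetical name, it uses a seeded NumPy `Generator`, a stable `argsort`, and one permutation to hand out shards, and the labels are synthetic (10 classes x 30 samples, cut into 20 shards of 15).

```python
import numpy as np

def shard_split(labels, num_users, num_shards, shard_size, seed=0):
    """Hypothetical helper mirroring mnist_noniid: sort by label,
    cut into shards, give each user 2 random shards."""
    assert 2 * num_users <= num_shards, "need two shards per user"
    rng = np.random.default_rng(seed)
    order = np.argsort(labels, kind="stable")  # sample indices ordered by label
    shard_ids = rng.permutation(num_shards)    # random shard order, no reuse
    return {u: np.concatenate([order[s * shard_size:(s + 1) * shard_size]
                               for s in shard_ids[2 * u:2 * u + 2]])
            for u in range(num_users)}

labels = np.repeat(np.arange(10), 30)  # toy labels: 30 samples of each class
parts = shard_split(labels, num_users=10, num_shards=20, shard_size=15)
for u in range(3):
    print(u, sorted(set(labels[parts[u]].tolist())))  # classes seen by user u
```

Because each toy shard lies inside a single class, every user sees at most two classes. With real MNIST the class sizes are not multiples of 300, so a few shards straddle two digits. Building each user's array from integer slices also keeps the indices as integers rather than floats.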

def mnist_noniid_unequal(dataset, num_users):
    """
    Sample non-I.I.D client data from MNIST dataset s.t clients
    have unequal amount of data
    (i.e. each client receives a different number of images)
    :param dataset:
    :param num_users:
    :returns a dict of clients with each clients assigned certain
    number of training imgs
    """
    # 60,000 training imgs --> 50 imgs/shard X 1200 shards
    num_shards, num_imgs = 1200, 50
    idx_shard = [i for i in range(num_shards)]  # [0, 1, 2, ..., 1199]
    dict_users = {i: np.array([]) for i in range(num_users)}
    idxs = np.arange(num_shards*num_imgs)  # [    0     1 ... 59999]
    labels = dataset.targets.numpy()  # formerly dataset.train_labels

    # sort labels (same as in mnist_noniid)
    idxs_labels = np.vstack((idxs, labels))
    idxs_labels = idxs_labels[:, idxs_labels[1, :].argsort()]
    idxs = idxs_labels[0, :]

    # Minimum and maximum shards assigned per client:
    min_shard = 1
    max_shard = 30

    # Divide the shards into random chunks for every client
    # s.t the sum of these chunks = num_shards
    # (only approximately: the rounding below can make the total differ
    # from 1200). The example outputs come from a test with num_users = 10.
    random_shard_size = np.random.randint(min_shard, max_shard+1,
                                          size=num_users)
    # num_users random integers in [min_shard, max_shard] = [1, 30]
    # (randint excludes its upper bound, hence max_shard+1), e.g.
    # np.random.randint(1, 30+1, size=10) -> [ 4 11 17  4 15 28 20 14  4 12]
    random_shard_size = np.around(random_shard_size /
                                  sum(random_shard_size) * num_shards)
    # Divide each value by the sum of all values and multiply by
    # num_shards (1200), then round to the nearest whole number
    # (np.around still returns floats), e.g.
    # [161. 134. 152. 116. 188. 125.  36.  99.  63. 125.]
    random_shard_size = random_shard_size.astype(int)  # convert to integers

    # Assign the shards randomly to each client
    if sum(random_shard_size) > num_shards:
        # Rounding pushed the total above num_shards.

        for i in range(num_users):
            # First assign each client 1 shard to ensure every client has
            # at least one shard of data
            rand_set = set(np.random.choice(idx_shard, 1, replace=False))
            idx_shard = list(set(idx_shard) - rand_set)
            for rand in rand_set:
                dict_users[i] = np.concatenate(
                    (dict_users[i], idxs[rand*num_imgs:(rand+1)*num_imgs]),
                    axis=0)
            # Each client now holds num_imgs = 50 indices, e.g.
            # [10422., 10426., 10061., 55610., 55611., 3502., 57174., ...]

        random_shard_size = random_shard_size-1
        # Subtract the shard every client already received. With 10 clients:
        # random_shard_size -> [181  43 130 203  14  94 217 101 101 108]
        # len(idx_shard)    -> 1190

        # Next, randomly assign the remaining shards
        for i in range(num_users):
            if len(idx_shard) == 0:
                continue
            shard_size = random_shard_size[i]
            if shard_size > len(idx_shard):
                shard_size = len(idx_shard)
            # The requested sizes add up to more than the shards left (in the
            # example, 1192 > 1190), so the last client(s) can ask for more
            # than remain; cap the request at what is left.
            rand_set = set(np.random.choice(idx_shard, shard_size,
                                            replace=False))
            # shard_size distinct shard ids from the remaining ones:
            # 181 for client 0, 43 for client 1, 130 for client 2, ...
            idx_shard = list(set(idx_shard) - rand_set)
            # Client 0 takes 181 of the 1190 ids, leaving 1190 - 181 for
            # client 1, and so on; after the loop idx_shard is empty.
            for rand in rand_set:
                dict_users[i] = np.concatenate(
                    (dict_users[i], idxs[rand*num_imgs:(rand+1)*num_imgs]),
                    axis=0)
                # Shard id 801, for example, contributes idxs[801*50:802*50].
            # In the example, client 0's rand_set holds 181 ids such as
            # {801, 614, 204, 556, 721, 211, 188, ...}, so it ends with
            # 50 + 181*50 indices (the initial shard plus 181 more).
    else:
        # sum(random_shard_size) <= num_shards

        for i in range(num_users):
            shard_size = random_shard_size[i]
            rand_set = set(np.random.choice(idx_shard, shard_size,
                                            replace=False))
            idx_shard = list(set(idx_shard) - rand_set)
            for rand in rand_set:
                dict_users[i] = np.concatenate(
                    (dict_users[i], idxs[rand*num_imgs:(rand+1)*num_imgs]),
                    axis=0)
            # No initial shard is given in this branch, so a client with
            # shard_size = 181 ends with 181*50 indices.

        if len(idx_shard) > 0:
            # Shards are left over whenever the sum was below num_shards.
            # Add the leftover shards to the client with minimum images:
            shard_size = len(idx_shard)
            # Add the remaining shard to the client with lowest data
            k = min(dict_users, key=lambda x: len(dict_users.get(x)))
            # k: id of the client currently holding the fewest indices
            rand_set = set(np.random.choice(idx_shard, shard_size,
                                            replace=False))
            # shard_size == len(idx_shard), so this takes every remaining
            # shard id, e.g. {873, 267, 354}
            idx_shard = list(set(idx_shard) - rand_set)
            for rand in rand_set:
                dict_users[k] = np.concatenate(
                    (dict_users[k], idxs[rand*num_imgs:(rand+1)*num_imgs]),
                    axis=0)
            # Client k gains len(rand_set)*50 indices. Because idxs is
            # ordered by label, these indices are not in ascending order.

    return dict_users
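The two branches in `mnist_noniid_unequal` exist because rounding the scaled sizes rarely lands exactly on 1200. A small sketch of just the size computation, assuming a seeded NumPy `Generator` in place of the global `np.random` state (`random_shard_sizes` is a hypothetical name), followed by the "client with the fewest indices" lookup on toy data:

```python
import numpy as np

def random_shard_sizes(num_users, num_shards=1200, min_shard=1, max_shard=30, seed=0):
    """Hypothetical helper reproducing the shard-size computation."""
    rng = np.random.default_rng(seed)
    raw = rng.integers(min_shard, max_shard + 1, size=num_users)  # values in [1, 30]
    scaled = np.around(raw / raw.sum() * num_shards)              # rounded, still float
    return raw, scaled.astype(int)

raw, sizes = random_shard_sizes(10)
print(sizes, sizes.sum())  # the sum can land slightly above or below 1200
print("if-branch" if sizes.sum() > 1200 else "else-branch")

# Leftover step of the else-branch on toy data: pick the client with the fewest indices
dict_users = {0: np.arange(100), 1: np.arange(50), 2: np.arange(75)}
k = min(dict_users, key=lambda x: len(dict_users.get(x)))
print(k)  # 1
```

Each value is rounded by at most 0.5, so with 10 clients the total can be off from 1200 by at most 5 shards, which is the gap the two branches absorb.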

def cifar_iid(dataset, num_users):
    """
    Sample I.I.D. client data from CIFAR10 dataset
    (same logic as mnist_iid above)
    :param dataset:
    :param num_users:
    :return: dict of image index
    """
    num_items = int(len(dataset)/num_users)
    dict_users, all_idxs = {}, [i for i in range(len(dataset))]
    for i in range(num_users):
        dict_users[i] = set(np.random.choice(all_idxs, num_items,
                                             replace=False))
        all_idxs = list(set(all_idxs) - dict_users[i])
    return dict_users
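When `len(dataset)` is not divisible by `num_users`, the floor in `int(len(dataset)/num_users)` leaves a few indices unassigned. The sketch below runs the same loop as `cifar_iid` on a bare index count instead of a dataset object (`iid_sets` is a hypothetical name, and the fixed seed is added only for repeatability):

```python
import numpy as np

def iid_sets(n, num_users, seed=0):
    # Same loop as cifar_iid / mnist_iid, applied to an index count n
    np.random.seed(seed)
    num_items = int(n / num_users)
    dict_users, all_idxs = {}, list(range(n))
    for i in range(num_users):
        dict_users[i] = set(np.random.choice(all_idxs, num_items, replace=False))
        all_idxs = list(set(all_idxs) - dict_users[i])
    return dict_users, all_idxs

parts, leftover = iid_sets(50000, 3)  # CIFAR-10 has 50,000 training images
print([len(p) for p in parts.values()], len(leftover))  # [16666, 16666, 16666] 2
```

The two leftover images are simply never used for training; with `num_users = 100` the division is exact and nothing is lost.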

def cifar_noniid(dataset, num_users):
    """
    Sample non-I.I.D client data from CIFAR10 dataset
    Same as mnist_noniid above, except for the shard size
    (250 images per shard, since CIFAR-10 has 50,000 training images)
    and the label line: labels = np.array(dataset.targets).
    The example outputs below come from a test with num_users = 10.
    :param dataset:
    :param num_users:
    :return:
    """
    # 50,000 training imgs --> 250 imgs/shard X 200 shards
    num_shards, num_imgs = 200, 250
    idx_shard = [i for i in range(num_shards)]
    dict_users = {i: np.array([]) for i in range(num_users)}
    idxs = np.arange(num_shards*num_imgs)
    # labels = dataset.train_labels.numpy()
    labels = np.array(dataset.targets)
    # Both labels = dataset.train_labels.numpy() and
    # labels = np.array(dataset.train_labels) raise
    # AttributeError: 'CIFAR10' object has no attribute 'train_labels'
    # Replacing "train_labels" with "targets" everywhere fixes it.
    # (CIFAR10.targets is a plain Python list, hence np.array(...).)
    # labels: [6 9 9 ... 9 1 1]

    # sort labels
    idxs_labels = np.vstack((idxs, labels))
    # len(idxs) == len(labels) == 50000
    # [[    0     1     2 ... 49997 49998 49999]
    #  [    6     9     9 ...     9     1     1]]
    idxs_labels = idxs_labels[:, idxs_labels[1, :].argsort()]
    # Sorting by the label row (row 1) moves the index row (row 0) along
    # with it. Toy example:
    # before: [[ 0  1  2  3  4  5  6  7]
    #          [ 6 10 11  3  4  2  9  1]]
    # after:  [[ 7  5  3  4  0  6  1  2]
    #          [ 1  2  3  4  6  9 10 11]]
    # The index row is no longer sequential; the label row of the real
    # data is now [0 0 0 ... 9 9 9].
    idxs = idxs_labels[0, :]
    # idxs: [29513 16836 32316 ... 36910 21518 25648]

    # divide and assign (same as mnist_noniid)
    for i in range(num_users):
        rand_set = set(np.random.choice(idx_shard, 2, replace=False))
        idx_shard = list(set(idx_shard) - rand_set)
        for rand in rand_set:
            dict_users[i] = np.concatenate(
                (dict_users[i], idxs[rand*num_imgs:(rand+1)*num_imgs]), axis=0)
            # num_imgs = 250 here, so e.g. rand = 8 gives idxs[2000:2250];
            # each user ends up with 2 * 250 = 500 indices, not in
            # ascending order.
    return dict_users
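The column reordering used in both non-IID samplers can be run directly on small arrays. This sketch uses toy labels (not CIFAR-10 classes) and also shows `kind="stable"`: NumPy's default `argsort` is not guaranteed to keep tied labels in their original order, which is consistent with the indices inside class 0 coming out as `[29513 16836 32316 ...]` rather than ascending.

```python
import numpy as np

idxs = np.arange(8)
labels = np.array([6, 10, 11, 3, 4, 2, 9, 1])  # toy labels
idxs_labels = np.vstack((idxs, labels))         # row 0: indices, row 1: labels
idxs_labels = idxs_labels[:, idxs_labels[1, :].argsort()]
print(idxs_labels)
# [[ 7  5  3  4  0  6  1  2]
#  [ 1  2  3  4  6  9 10 11]]

# With repeated labels, a stable sort keeps tied indices in their original order
ties = np.array([1, 0, 1, 0])
print(np.argsort(ties, kind="stable"))  # [1 3 0 2]
```

Either ordering within a class is fine for shard splitting, since each shard is later assigned at random.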

if __name__ == '__main__':
    dataset_train = datasets.MNIST('./data/mnist/', train=True, download=True,
                                   transform=transforms.Compose([
                                       transforms.ToTensor(),
                                       transforms.Normalize((0.1307,),
                                                            (0.3081,))
                                   ]))
    num = 100
    d = mnist_noniid(dataset_train, num)
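One way to inspect the resulting split `d` is to count how many samples of each class every client holds. A minimal sketch, assuming `labels` is a 1-D integer NumPy array (for MNIST, `dataset_train.targets.numpy()`); `label_histogram` is a hypothetical helper, and the data below is a toy example:

```python
import numpy as np

def label_histogram(dict_users, labels, num_classes=10):
    """Hypothetical helper: per-client class counts for any dict_users split."""
    hist = {}
    for u, idx in dict_users.items():
        # Values may be sets (IID samplers) or float arrays (non-IID samplers)
        idx = np.asarray(list(idx)).astype(int)
        hist[u] = np.bincount(labels[idx], minlength=num_classes)
    return hist

labels = np.repeat(np.arange(10), 6)  # toy data: 60 samples, six of each class
toy_users = {0: np.array([0., 1., 6.]), 1: {12, 13}}
hist = label_histogram(toy_users, labels)
print(hist[0].tolist())  # [2, 1, 0, 0, 0, 0, 0, 0, 0, 0]
```

On the real split this would be `label_histogram(d, dataset_train.targets.numpy())`. For `mnist_noniid`, each client's counts should be concentrated in very few digits, since its two shards come from the label-sorted index list.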

Copyright notice

This article was written by [Silent city of the sky]. Please include a link to the original article when reposting. Thank you.

https://yzsam.com/2022/04/202204230556365877.html

边栏推荐

- Basic SQL query and learning

- 零拷贝技术

- Oracle modify default temporary tablespace

- MySQL and PgSQL time related operations

- OSS cloud storage management practice (polite experience)

- Leetcode | 38 appearance array

- 【视频】线性回归中的贝叶斯推断与R语言预测工人工资数据|数据分享

- Database transactions

- Double pointer instrument panel reading (I)

- Innobackupex incremental backup

猜你喜欢

Dolphin scheduler integrates Flink task pit records

零拷贝技术

Isparta is a tool that generates webp, GIF and apng from PNG and supports the transformation of webp, GIF and apng

顶级元宇宙游戏Plato Farm,近期动作不断利好频频

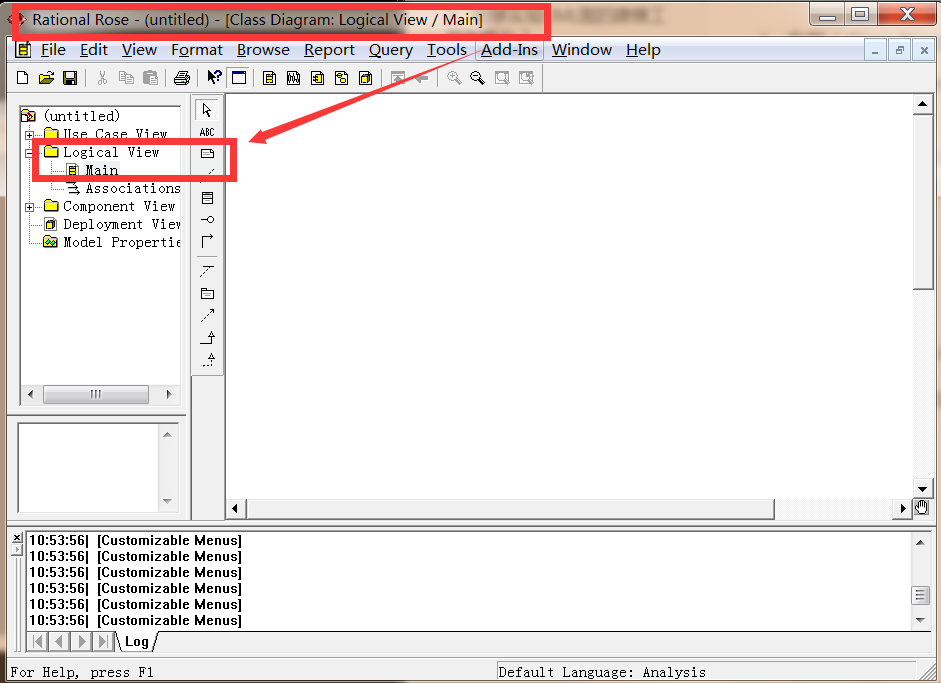

UML统一建模语言

PG SQL intercepts the string to the specified character position

Small case of web login (including verification code login)

Lenovo Saver y9000x 2020

Technologie zéro copie

![MySQL [SQL performance analysis + SQL tuning]](/img/71/2ca1a5799a2c7a822158d8b73bd539.png)

MySQL [SQL performance analysis + SQL tuning]

随机推荐

Move blog to CSDN

UNIX final exam summary -- for direct Department

Leetcode brush question 897 incremental sequential search tree

这个SQL语名是什么意思

Es introduction learning notes

Detailed explanation of constraints of Oracle table

The difference between is and as in Oracle stored procedure

Leetcode | 38 appearance array

Innobackupex incremental backup

Oracle lock table query and unlocking method

软考系统集成项目管理工程师全真模拟题(含答案、解析)

Leetcode brush question 𞓜 13 Roman numeral to integer

Oracle kills the executing SQL

自动化的艺术

Test the time required for Oracle library to create an index with 7 million data in a common way

Part 3: docker installing MySQL container (custom port)

Oracle modify default temporary tablespace

Dynamic subset division problem

Why do you need to learn container technology to engage in cloud native development

Detailed explanation and usage of with function in SQL