
BPF program of type XDP

2022-04-23 07:00:00 【Wei Yanhua】


The previous section covered loading the BPF program and its maps into the kernel. When does the BPF program actually take effect?

The subsystem that uses BPF must attach the program to an appropriate hook. When execution reaches the code path where the hook sits, the hook fires and the BPF program runs.

The subsystems that currently use BPF fall into two categories:

- tracing: kprobe, tracepoint, perf_event

- filter: sk_filter, sched_cls, sched_act, xdp, cg_skb

The rest of this article analyzes how a BPF program of type XDP is attached and triggered.

1. Loading via iproute2 ip

It does seem overkill to write a C program to simply load and attach a specific BPF-program. However, we still include this in the tutorial since it will help you integrate BPF into other Open Source projects.

As an alternative to writing a new loader, the standard iproute2 tool also contains a BPF ELF loader. However, this loader is not based on libbpf, which unfortunately makes it incompatible when starting to use BPF maps.

The iproute2 loader can be used with the standard ip tool; so in this case you can actually load our ELF-file xdp_pass_kern.o (where we named our ELF section “xdp”) like this:

    ip link set dev lo xdpgeneric obj xdp_pass_kern.o sec xdp

Listing the device via ip link show also shows the XDP info:

    $ ip link show dev lo
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 xdpgeneric qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        prog/xdp id 220 tag 3b185187f1855c4c jited

Removing the XDP program again from the device:

    ip link set dev lo xdpgeneric off
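For orientation, an object such as xdp_pass_kern.o could be built from a source file along the following lines. This is a minimal sketch, not the file used in the article, which does not show its contents: the function name `xdp_prog_simple` and the local `SEC()` macro (a stand-in for the one in libbpf's bpf_helpers.h) are assumptions. The only detail taken from the text is that the program lives in an ELF section named "xdp".

```c
#include <linux/bpf.h>

/* Local stand-in for the SEC() macro from libbpf's bpf_helpers.h,
 * defined here so the sketch has no libbpf dependency (assumption). */
#define SEC(name) __attribute__((section(name), used))

/* Hypothetical contents of xdp_pass_kern.c: accept every packet. */
SEC("xdp")
int xdp_prog_simple(struct xdp_md *ctx)
{
    (void)ctx;          /* the packet is not inspected */
    return XDP_PASS;    /* hand the packet on to the normal stack */
}

/* License string placed in its own ELF section. */
char _license[] SEC("license") = "GPL";
```

Such a file is typically compiled with something like `clang -O2 -target bpf -c xdp_pass_kern.c -o xdp_pass_kern.o`; the exact include paths needed depend on the distribution.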

2. BPF program binding

User space communicates with the kernel through netlink messages, sending the program fd, the device information (ifindex), and the flags. The netlink socket uses the NETLINK_ROUTE protocol.

    int bpf_set_link_xdp_fd(int ifindex, int fd, __u32 flags)
    {
        int sock, seq = 0, ret;
        struct nlattr *nla, *nla_xdp;
        struct {
            struct nlmsghdr nh;
            struct ifinfomsg ifinfo;
            char attrbuf[64];
        } req;
        __u32 nl_pid;

        sock = libbpf_netlink_open(&nl_pid);
        if (sock < 0)
            return sock;

        memset(&req, 0, sizeof(req));
        req.nh.nlmsg_len = NLMSG_LENGTH(sizeof(struct ifinfomsg));
        req.nh.nlmsg_flags = NLM_F_REQUEST | NLM_F_ACK;
        req.nh.nlmsg_type = RTM_SETLINK;
        req.nh.nlmsg_pid = 0;
        req.nh.nlmsg_seq = ++seq;
        req.ifinfo.ifi_family = AF_UNSPEC;
        req.ifinfo.ifi_index = ifindex;

        /* started nested attribute for XDP */
        nla = (struct nlattr *)(((char *)&req)
                                + NLMSG_ALIGN(req.nh.nlmsg_len));
        nla->nla_type = NLA_F_NESTED | IFLA_XDP;
        nla->nla_len = NLA_HDRLEN;

        /* add XDP fd */
        nla_xdp = (struct nlattr *)((char *)nla + nla->nla_len);
        nla_xdp->nla_type = IFLA_XDP_FD;
        nla_xdp->nla_len = NLA_HDRLEN + sizeof(int);
        memcpy((char *)nla_xdp + NLA_HDRLEN, &fd, sizeof(fd));
        nla->nla_len += nla_xdp->nla_len;

        /* if user passed in any flags, add those too */
        if (flags) {
            nla_xdp = (struct nlattr *)((char *)nla + nla->nla_len);
            nla_xdp->nla_type = IFLA_XDP_FLAGS;
            nla_xdp->nla_len = NLA_HDRLEN + sizeof(flags);
            memcpy((char *)nla_xdp + NLA_HDRLEN, &flags, sizeof(flags));
            nla->nla_len += nla_xdp->nla_len;
        }

        req.nh.nlmsg_len += NLA_ALIGN(nla->nla_len);

        if (send(sock, &req, req.nh.nlmsg_len, 0) < 0) {
            ret = -errno;
            goto cleanup;
        }
        ret = bpf_netlink_recv(sock, nl_pid, seq, NULL, NULL, NULL);

    cleanup:
        close(sock);
        return ret;
    }
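To make the message layout easier to see, the sketch below builds the same RTM_SETLINK request in a buffer without opening a socket or sending anything. It is an illustration only: `struct xdp_req`, `nest_put_u32`, and `build_xdp_request` are hypothetical names, and it assumes the Linux UAPI headers that define `IFLA_XDP`, `IFLA_XDP_FD`, and `IFLA_XDP_FLAGS` are installed.

```c
#include <stdint.h>
#include <string.h>
#include <sys/socket.h>
#include <linux/netlink.h>
#include <linux/rtnetlink.h>
#include <linux/if_link.h>

/* Same layout as the request in bpf_set_link_xdp_fd. */
struct xdp_req {
    struct nlmsghdr nh;
    struct ifinfomsg ifinfo;
    char attrbuf[64];
};

/* Hypothetical helper: append one 4-byte attribute inside the nested
 * attribute `nest` and grow the nest's length accordingly. */
static void nest_put_u32(struct nlattr *nest, uint16_t type, const void *val)
{
    struct nlattr *a = (struct nlattr *)((char *)nest + nest->nla_len);

    a->nla_type = type;
    a->nla_len = NLA_HDRLEN + 4;
    memcpy((char *)a + NLA_HDRLEN, val, 4);
    nest->nla_len += a->nla_len;
}

/* Fill `req` the way bpf_set_link_xdp_fd does, but do not send it.
 * Returns the final nlmsg_len. */
static uint32_t build_xdp_request(struct xdp_req *req, int ifindex,
                                  int fd, uint32_t flags)
{
    struct nlattr *nla;

    memset(req, 0, sizeof(*req));
    req->nh.nlmsg_len = NLMSG_LENGTH(sizeof(struct ifinfomsg));
    req->nh.nlmsg_flags = NLM_F_REQUEST | NLM_F_ACK;
    req->nh.nlmsg_type = RTM_SETLINK;
    req->nh.nlmsg_seq = 1;
    req->ifinfo.ifi_family = AF_UNSPEC;
    req->ifinfo.ifi_index = ifindex;

    /* The nested IFLA_XDP attribute starts right after the ifinfomsg. */
    nla = (struct nlattr *)((char *)req + NLMSG_ALIGN(req->nh.nlmsg_len));
    nla->nla_type = NLA_F_NESTED | IFLA_XDP;
    nla->nla_len = NLA_HDRLEN;

    nest_put_u32(nla, IFLA_XDP_FD, &fd);
    if (flags)
        nest_put_u32(nla, IFLA_XDP_FLAGS, &flags);

    req->nh.nlmsg_len += NLA_ALIGN(nla->nla_len);
    return req->nh.nlmsg_len;
}
```

With both attributes present the nest is 20 bytes (a 4-byte header plus two 8-byte attributes), so the 64-byte `attrbuf` leaves ample room.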

    int libbpf_netlink_open(__u32 *nl_pid)
    {
        struct sockaddr_nl sa;
        socklen_t addrlen;
        int one = 1, ret;
        int sock;

        memset(&sa, 0, sizeof(sa));
        sa.nl_family = AF_NETLINK;

        sock = socket(AF_NETLINK, SOCK_RAW, NETLINK_ROUTE);
        if (sock < 0)
            return -errno;

        if (setsockopt(sock, SOL_NETLINK, NETLINK_EXT_ACK,
                       &one, sizeof(one)) < 0) {
            fprintf(stderr, "Netlink error reporting not supported\n");
        }

        if (bind(sock, (struct sockaddr *)&sa, sizeof(sa)) < 0) {
            ret = -errno;
            goto cleanup;
        }

        addrlen = sizeof(sa);
        if (getsockname(sock, (struct sockaddr *)&sa, &addrlen) < 0) {
            ret = -errno;
            goto cleanup;
        }

        if (addrlen != sizeof(sa)) {
            ret = -LIBBPF_ERRNO__INTERNAL;
            goto cleanup;
        }

        *nl_pid = sa.nl_pid;
        return sock;

    cleanup:
        close(sock);
        return ret;
    }

The handler for this NETLINK_ROUTE message is registered in the kernel as follows:

    rtnl_register(PF_UNSPEC, RTM_SETLINK, rtnl_setlink, NULL, 0);

rtnl_setlink in turn calls do_setlink:

    static int do_setlink(const struct sk_buff *skb,
                          struct net_device *dev, struct ifinfomsg *ifm,
                          struct netlink_ext_ack *extack,
                          struct nlattr **tb, char *ifname, int status)
    {
        const struct net_device_ops *ops = dev->netdev_ops;
        int err;

        err = validate_linkmsg(dev, tb);
        if (err < 0)
            return err;

        if (tb[IFLA_NET_NS_PID] || tb[IFLA_NET_NS_FD] || tb[IFLA_IF_NETNSID]) {
            struct net *net = rtnl_link_get_net_capable(skb, dev_net(dev),
                                                        tb, CAP_NET_ADMIN);
            if (IS_ERR(net)) {
                err = PTR_ERR(net);
                goto errout;
            }

            err = dev_change_net_namespace(dev, net, ifname);
            put_net(net);
            if (err)
                goto errout;
            status |= DO_SETLINK_MODIFIED;
        }

        if (tb[IFLA_MAP]) {
            struct rtnl_link_ifmap *u_map;
            struct ifmap k_map;

            if (!ops->ndo_set_config) {
                err = -EOPNOTSUPP;
                goto errout;
            }

            if (!netif_device_present(dev)) {
                err = -ENODEV;
                goto errout;
            }

            u_map = nla_data(tb[IFLA_MAP]);
            k_map.mem_start = (unsigned long) u_map->mem_start;
            k_map.mem_end = (unsigned long) u_map->mem_end;
            k_map.base_addr = (unsigned short) u_map->base_addr;
            k_map.irq = (unsigned char) u_map->irq;
            k_map.dma = (unsigned char) u_map->dma;
            k_map.port = (unsigned char) u_map->port;

            err = ops->ndo_set_config(dev, &k_map);
            if (err < 0)
                goto errout;

            status |= DO_SETLINK_NOTIFY;
        }

        if (tb[IFLA_ADDRESS]) {
            struct sockaddr *sa;
            int len;

            len = sizeof(sa_family_t) + max_t(size_t, dev->addr_len,
                                              sizeof(*sa));
            sa = kmalloc(len, GFP_KERNEL);
            if (!sa) {
                err = -ENOMEM;
                goto errout;
            }
            sa->sa_family = dev->type;
            memcpy(sa->sa_data, nla_data(tb[IFLA_ADDRESS]),
                   dev->addr_len);
            err = dev_set_mac_address(dev, sa);
            kfree(sa);
            if (err)
                goto errout;
            status |= DO_SETLINK_MODIFIED;
        }

        .........................

        if (tb[IFLA_XDP]) {
            struct nlattr *xdp[IFLA_XDP_MAX + 1];
            u32 xdp_flags = 0;

            err = nla_parse_nested(xdp, IFLA_XDP_MAX, tb[IFLA_XDP],
                                   ifla_xdp_policy, NULL);
            if (err < 0)
                goto errout;

            if (xdp[IFLA_XDP_ATTACHED] || xdp[IFLA_XDP_PROG_ID]) {
                err = -EINVAL;
                goto errout;
            }

            if (xdp[IFLA_XDP_FLAGS]) {
                xdp_flags = nla_get_u32(xdp[IFLA_XDP_FLAGS]);
                if (xdp_flags & ~XDP_FLAGS_MASK) {
                    err = -EINVAL;
                    goto errout;
                }
                if (hweight32(xdp_flags & XDP_FLAGS_MODES) > 1) {
                    err = -EINVAL;
                    goto errout;
                }
            }

            if (xdp[IFLA_XDP_FD]) {
                err = dev_change_xdp_fd(dev, extack,
                                        nla_get_s32(xdp[IFLA_XDP_FD]),
                                        xdp_flags);
                if (err)
                    goto errout;
                status |= DO_SETLINK_NOTIFY;
            }
        }

        ...............

        }

        return err;
    }
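The two flag checks in the IFLA_XDP branch can be tried out in user space. The following is a small sketch, assuming the installed UAPI header linux/if_link.h provides `XDP_FLAGS_MASK` and `XDP_FLAGS_MODES`; `popcount32` and `xdp_flags_valid` are hypothetical names, and `__builtin_popcount` (GCC/Clang) stands in for the kernel's `hweight32`.

```c
#include <stdbool.h>
#include <stdint.h>
#include <linux/if_link.h>

/* User-space stand-in for the kernel's hweight32(): count set bits. */
static unsigned int popcount32(uint32_t v)
{
    return (unsigned int)__builtin_popcount(v);
}

/* Returns true if `flags` would pass the two checks in do_setlink. */
static bool xdp_flags_valid(uint32_t flags)
{
    /* Reject any bit the kernel does not know about. */
    if (flags & ~XDP_FLAGS_MASK)
        return false;

    /* At most one of the SKB/DRV/HW mode bits may be set. */
    if (popcount32(flags & XDP_FLAGS_MODES) > 1)
        return false;

    return true;
}
```

For example, `XDP_FLAGS_SKB_MODE` alone is accepted, while `XDP_FLAGS_SKB_MODE | XDP_FLAGS_DRV_MODE` is rejected because it requests two modes at once; in the kernel both failures surface as -EINVAL.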

When the IFLA_XDP_FD attribute is present, do_setlink calls dev_change_xdp_fd:

    int dev_change_xdp_fd(struct net_device *dev, struct netlink_ext_ack *extack,
                          int fd, u32 flags)
    {
        const struct net_device_ops *ops = dev->netdev_ops;
        enum bpf_netdev_command query;
        struct bpf_prog *prog = NULL;
        bpf_op_t bpf_op, bpf_chk;
        int err;

        ASSERT_RTNL();

        query = flags & XDP_FLAGS_HW_MODE ? XDP_QUERY_PROG_HW : XDP_QUERY_PROG;

        bpf_op = bpf_chk = ops->ndo_bpf;
        /* XDP has three modes: 1. generic XDP, 2. native XDP,
         * 3. offloaded XDP. The latter two require driver or
         * hardware support.
         */
        if (!bpf_op && (flags & (XDP_FLAGS_DRV_MODE | XDP_FLAGS_HW_MODE)))
            return -EOPNOTSUPP;
        if (!bpf_op || (flags & XDP_FLAGS_SKB_MODE))
            bpf_op = generic_xdp_install;
        if (bpf_op == bpf_chk)
            bpf_chk = generic_xdp_install;

        if (fd >= 0) {
            if (__dev_xdp_query(dev, bpf_chk, XDP_QUERY_PROG) ||
                __dev_xdp_query(dev, bpf_chk, XDP_QUERY_PROG_HW))
                return -EEXIST;
            if ((flags & XDP_FLAGS_UPDATE_IF_NOEXIST) &&
                __dev_xdp_query(dev, bpf_op, query))
                return -EBUSY;

            prog = bpf_prog_get_type_dev(fd, BPF_PROG_TYPE_XDP,
                                         bpf_op == ops->ndo_bpf);
            if (IS_ERR(prog))
                return PTR_ERR(prog);

            if (!(flags & XDP_FLAGS_HW_MODE) &&
                bpf_prog_is_dev_bound(prog->aux)) {
                NL_SET_ERR_MSG(extack, "using device-bound program without HW_MODE flag is not supported");
                bpf_prog_put(prog);
                return -EINVAL;
            }
        }

        err = dev_xdp_install(dev, bpf_op, extack, flags, prog);
        if (err < 0 && prog)
            bpf_prog_put(prog);

        return err;
    }
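The way dev_change_xdp_fd picks between the driver hook and the generic hook can be summarized in a few lines. The sketch below is a user-space model only: `enum install_path` and `pick_install_path` are hypothetical names, and the boolean `has_ndo_bpf` stands in for "the driver provides `ops->ndo_bpf`".

```c
#include <stdbool.h>
#include <stdint.h>
#include <linux/if_link.h>

enum install_path {
    PATH_UNSUPPORTED,   /* dev_change_xdp_fd would return -EOPNOTSUPP */
    PATH_DRIVER,        /* bpf_op = ops->ndo_bpf (native or offloaded) */
    PATH_GENERIC,       /* bpf_op = generic_xdp_install */
};

/* Mirrors the first three checks on bpf_op in dev_change_xdp_fd. */
static enum install_path pick_install_path(bool has_ndo_bpf, uint32_t flags)
{
    /* Native or offloaded mode was requested, but the driver has no hook. */
    if (!has_ndo_bpf && (flags & (XDP_FLAGS_DRV_MODE | XDP_FLAGS_HW_MODE)))
        return PATH_UNSUPPORTED;

    /* No driver hook, or generic (SKB) mode explicitly requested. */
    if (!has_ndo_bpf || (flags & XDP_FLAGS_SKB_MODE))
        return PATH_GENERIC;

    return PATH_DRIVER;
}
```

Note that with no mode flag at all, a driver that implements `ndo_bpf` gets the native path and any other device silently falls back to generic XDP.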

    static int dev_xdp_install(struct net_device *dev, bpf_op_t bpf_op,
                               struct netlink_ext_ack *extack, u32 flags,
                               struct bpf_prog *prog)
    {
        struct netdev_bpf xdp;

        memset(&xdp, 0, sizeof(xdp));
        if (flags & XDP_FLAGS_HW_MODE)
            xdp.command = XDP_SETUP_PROG_HW;
        else
            xdp.command = XDP_SETUP_PROG;
        xdp.extack = extack;
        xdp.flags = flags;
        xdp.prog = prog;

        return bpf_op(dev, &xdp);
    }
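The pattern here is a command structure handed to a function pointer. As a rough user-space model of that dispatch, and of the bookkeeping done by the generic handler generic_xdp_install, consider the sketch below. All types and names in it (`struct prog`, `struct dev`, `struct request`, `generic_install`, `install`, the `generic_xdp_needed` counter) are hypothetical simplifications; the kernel uses RCU pointer updates, program reference counts, and a static branch instead of plain assignments and an integer.

```c
#include <stddef.h>

/* Hypothetical stand-ins for the kernel objects. */
struct prog { int id; };
struct dev { struct prog *xdp_prog; };

/* Plays the role of the generic_xdp_needed_key static branch:
 * number of devices that currently have a generic XDP program. */
static int generic_xdp_needed;

enum cmd { SETUP_PROG, QUERY_PROG };

struct request {
    enum cmd command;
    struct prog *prog;   /* in:  program to install (NULL detaches) */
    int prog_id;         /* out: id reported by QUERY_PROG */
};

typedef int (*op_t)(struct dev *dev, struct request *req);

/* Model of generic_xdp_install: swap the pointer, adjust the counter. */
static int generic_install(struct dev *dev, struct request *req)
{
    struct prog *old = dev->xdp_prog;

    switch (req->command) {
    case SETUP_PROG:
        dev->xdp_prog = req->prog;
        if (old && !req->prog)
            generic_xdp_needed--;      /* last program removed */
        else if (req->prog && !old)
            generic_xdp_needed++;      /* first program attached */
        return 0;
    case QUERY_PROG:
        req->prog_id = old ? old->id : 0;
        return 0;
    }
    return -1;
}

/* Model of dev_xdp_install: wrap the program in a request, call the op. */
static int install(struct dev *dev, op_t op, struct prog *prog)
{
    struct request req = { .command = SETUP_PROG, .prog = prog };

    return op(dev, &req);
}
```

Replacing one program with another leaves the counter unchanged; only the transitions between "no program" and "some program" touch it, which is what keeps the receive-path check cheap when no device uses generic XDP.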

    static int generic_xdp_install(struct net_device *dev, struct netdev_bpf *xdp)
    {
        struct bpf_prog *old = rtnl_dereference(dev->xdp_prog);
        struct bpf_prog *new = xdp->prog;
        int ret = 0;

        switch (xdp->command) {
        case XDP_SETUP_PROG:
            /* update dev->xdp_prog so that it points to the new prog */
            rcu_assign_pointer(dev->xdp_prog, new);
            if (old)
                bpf_prog_put(old);

            if (old && !new) {
                static_branch_dec(&generic_xdp_needed_key);
            } else if (new && !old) {
                static_branch_inc(&generic_xdp_needed_key);
                dev_disable_lro(dev);
                dev_disable_gro_hw(dev);
            }
            break;

        case XDP_QUERY_PROG:
            xdp->prog_id = old ? old->aux->id : 0;
            break;

        default:
            ret = -EINVAL;
            break;
        }

        return ret;
    }

3. BPF program trigger

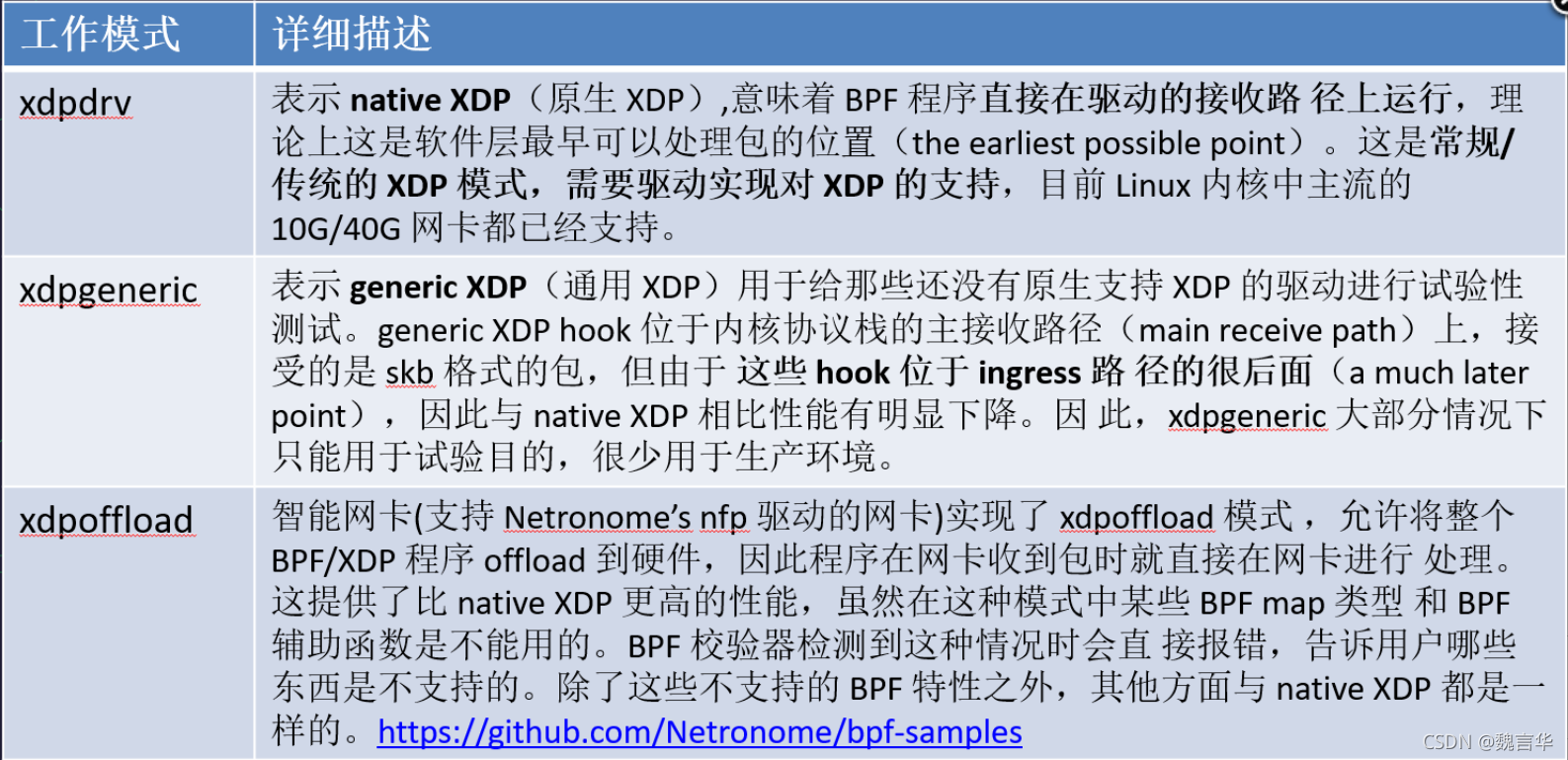

XDP has three working modes: generic XDP, native XDP, and offloaded XDP. The latter two require driver or hardware support.

Only the trigger path of generic XDP is covered here. On the receive path, __netif_receive_skb (through __netif_receive_skb_core) checks whether an xdp_prog is attached to the device and, if one is, calls do_xdp_generic:

    static int __netif_receive_skb_core(struct sk_buff **pskb, bool pfmemalloc,
                                        struct packet_type **ppt_prev)
    {
        ....................

        if (static_branch_unlikely(&generic_xdp_needed_key)) {
            int ret2;

            preempt_disable();
            ret2 = do_xdp_generic(rcu_dereference(skb->dev->xdp_prog), skb);
            preempt_enable();

            if (ret2 != XDP_PASS) {
                ret = NET_RX_DROP;
                goto out;
            }
            skb_reset_mac_len(skb);
        }

        ....................
    }

    int do_xdp_generic(struct bpf_prog *xdp_prog, struct sk_buff *skb)
    {
        if (xdp_prog) {
            struct xdp_buff xdp;
            u32 act;
            int err;

            act = netif_receive_generic_xdp(skb, &xdp, xdp_prog);
            if (act != XDP_PASS) {
                switch (act) {
                case XDP_REDIRECT:
                    err = xdp_do_generic_redirect(skb->dev, skb,
                                                  &xdp, xdp_prog);
                    if (err)
                        goto out_redir;
                    break;
                case XDP_TX:
                    generic_xdp_tx(skb, xdp_prog);
                    break;
                }
                return XDP_DROP;
            }
        }
        return XDP_PASS;

    out_redir:
        kfree_skb(skb);
        return XDP_DROP;
    }
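From the point of view of __netif_receive_skb_core, do_xdp_generic has only two outcomes. The sketch below spells out that mapping; `generic_xdp_result` is a hypothetical name, and the action constants come from the UAPI header linux/bpf.h.

```c
#include <linux/bpf.h>

/* What do_xdp_generic reports back for a given program verdict:
 * only XDP_PASS lets the packet continue up the network stack. */
static int generic_xdp_result(int act)
{
    switch (act) {
    case XDP_PASS:
        return XDP_PASS;
    case XDP_TX:        /* already sent back out by generic_xdp_tx() */
    case XDP_REDIRECT:  /* already handed to xdp_do_generic_redirect() */
    default:            /* XDP_DROP, XDP_ABORTED, unknown: skb was freed */
        return XDP_DROP;
    }
}
```

So for XDP_TX and XDP_REDIRECT the XDP_DROP result does not mean the packet was discarded; it means the skb has been consumed elsewhere and the normal receive path must not touch it again.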

    static u32 netif_receive_generic_xdp(struct sk_buff *skb,
                                         struct xdp_buff *xdp,
                                         struct bpf_prog *xdp_prog)
    {
        struct netdev_rx_queue *rxqueue;
        void *orig_data, *orig_data_end;
        u32 metalen, act = XDP_DROP;
        __be16 orig_eth_type;
        struct ethhdr *eth;
        bool orig_bcast;
        int hlen, off;
        u32 mac_len;

        /* Reinjected packets coming from act_mirred or similar should
         * not get XDP generic processing.
         */
        if (skb_is_tc_redirected(skb))
            return XDP_PASS;

        /* XDP packets must be linear and must have sufficient headroom
         * of XDP_PACKET_HEADROOM bytes. This is the guarantee that also
         * native XDP provides, thus we need to do it here as well.
         */
        if (skb_cloned(skb) || skb_is_nonlinear(skb) ||
            skb_headroom(skb) < XDP_PACKET_HEADROOM) {
            int hroom = XDP_PACKET_HEADROOM - skb_headroom(skb);
            int troom = skb->tail + skb->data_len - skb->end;

            /* In case we have to go down the path and also linearize,
             * then lets do the pskb_expand_head() work just once here.
             */
            if (pskb_expand_head(skb,
                                 hroom > 0 ? ALIGN(hroom, NET_SKB_PAD) : 0,
                                 troom > 0 ? troom + 128 : 0, GFP_ATOMIC))
                goto do_drop;
            if (skb_linearize(skb))
                goto do_drop;
        }

        /* The XDP program wants to see the packet starting at the MAC
         * header.
         */
        mac_len = skb->data - skb_mac_header(skb);
        hlen = skb_headlen(skb) + mac_len;
        xdp->data = skb->data - mac_len;
        xdp->data_meta = xdp->data;
        xdp->data_end = xdp->data + hlen;
        xdp->data_hard_start = skb->data - skb_headroom(skb);
        orig_data_end = xdp->data_end;
        orig_data = xdp->data;
        eth = (struct ethhdr *)xdp->data;
        orig_bcast = is_multicast_ether_addr_64bits(eth->h_dest);
        orig_eth_type = eth->h_proto;

        rxqueue = netif_get_rxqueue(skb);
        xdp->rxq = &rxqueue->xdp_rxq;

        act = bpf_prog_run_xdp(xdp_prog, xdp);

        /* check if bpf_xdp_adjust_head was used */
        off = xdp->data - orig_data;
        if (off) {
            if (off > 0)
                __skb_pull(skb, off);
            else if (off < 0)
                __skb_push(skb, -off);

            skb->mac_header += off;
            skb_reset_network_header(skb);
        }

        /* check if bpf_xdp_adjust_tail was used. it can only "shrink"
         * pckt.
         */
        off = orig_data_end - xdp->data_end;
        if (off != 0) {
            skb_set_tail_pointer(skb, xdp->data_end - xdp->data);
            skb->len -= off;
        }

        /* check if XDP changed eth hdr such SKB needs update */
        eth = (struct ethhdr *)xdp->data;
        if ((orig_eth_type != eth->h_proto) ||
            (orig_bcast != is_multicast_ether_addr_64bits(eth->h_dest))) {
            __skb_push(skb, ETH_HLEN);
            skb->protocol = eth_type_trans(skb, skb->dev);
        }

        switch (act) {
        case XDP_REDIRECT:
        case XDP_TX:
            __skb_push(skb, mac_len);
            break;
        case XDP_PASS:
            metalen = xdp->data - xdp->data_meta;
            if (metalen)
                skb_metadata_set(skb, metalen);
            break;
        default:
            bpf_warn_invalid_xdp_action(act);
            /* fall through */
        case XDP_ABORTED:
            trace_xdp_exception(skb->dev, xdp_prog, act);
            /* fall through */
        case XDP_DROP:
        do_drop:
            kfree_skb(skb);
            break;
        }

        return act;
    }
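The head and tail fix-ups after bpf_prog_run_xdp are easier to follow on a toy buffer. The sketch below is a simplified model, not kernel code: `struct fake_skb` keeps only an offset and a length, and `apply_head_adjust` and `apply_tail_adjust` are hypothetical helpers that imitate what `__skb_pull`, `__skb_push`, and the `skb->len -= off` step do to those two fields.

```c
#include <stddef.h>

/* Hypothetical, simplified stand-in for the sk_buff fields involved. */
struct fake_skb {
    size_t data;   /* offset of the current data pointer in the buffer */
    size_t len;    /* number of bytes from data to the tail */
};

/* Apply a head move reported by the program: off > 0 means the program
 * advanced xdp->data (bytes removed), off < 0 means it grew the head. */
static void apply_head_adjust(struct fake_skb *skb, long off)
{
    if (off > 0) {            /* like __skb_pull(skb, off) */
        skb->data += (size_t)off;
        skb->len -= (size_t)off;
    } else if (off < 0) {     /* like __skb_push(skb, -off) */
        skb->data -= (size_t)(-off);
        skb->len += (size_t)(-off);
    }
}

/* Apply a tail shrink, where off = orig_data_end - xdp->data_end. */
static void apply_tail_adjust(struct fake_skb *skb, size_t off)
{
    skb->len -= off;
}
```

For instance, a program that strips a 14-byte header with bpf_xdp_adjust_head moves `data` forward by 14 and shortens `len` by 14; a program that prepends 8 bytes does the reverse, which is why the headroom guarantee of XDP_PACKET_HEADROOM bytes matters.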

Copyright notice

This article was written by [Wei Yanhua]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204230557207788.html

边栏推荐

- 【ES6快速入门】

- TP5 uses redis

- Oracle net service: listener and service name resolution method

- 【代码解析(4)】Communication-Efficient Learning of Deep Networks from Decentralized Data

- 异常记录-7

- How to use DBA_ hist_ active_ sess_ History analysis database history performance problems

- 数据库基本概念:OLTP/OLAP/HTAP、RPO/RTO、MPP

- LeetCode刷题|897递增顺序搜索树

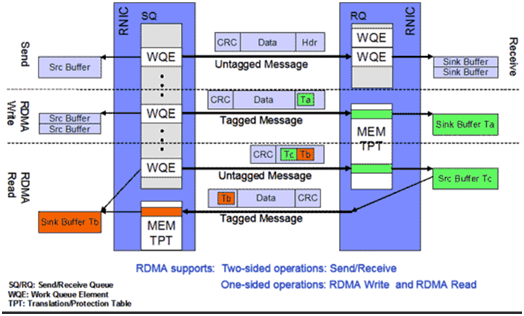

- rdma 介绍

- 修改Jupyter Notebook样式

猜你喜欢

随机推荐

Unix期末考试总结--针对直系

Imitation scallop essay reading page

[fish in the net] ansible awx calls playbook to transfer parameters

Oracle性能分析工具:OSWatcher

【MySQL基础篇】数据导出导入权限与local_infile参数

多线程

JS implementation of web page rotation map

rdam 原理解析

Openvswitch compilation and installation

【MySQL基础篇】启动选项、系统变量、状态变量

The arithmetic square root of X in leetcode

Typescript (top)

JS regular matching first assertion and last assertion

Number of stair climbing methods of leetcode

Passerelle haute performance pour l'interconnexion entre VPC et IDC basée sur dpdk

Ansible基本命令、角色、内置变量与tests判断

Redis 详解(基础+数据类型+事务+持久化+发布订阅+主从复制+哨兵+缓存穿透、击穿、雪崩)

Thanos如何为不同租户配置不同的数据保留时长

fdfs启动

【MySQL基础篇】启动选项与配置文件