(6) Writing Kafka data to MySQL with FlinkSQL: Method 1

2022-08-08 13:02:00 【NBI big data】

Installing and configuring ZooKeeper and Kafka is not covered here.

(1) First, start ZooKeeper and Kafka
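Before moving on, it can help to confirm that both services are actually accepting connections. The following is a minimal sketch using only the JDK; the class name `PortCheck` is made up for illustration. Port 9092 is the broker port used in this article, while 2181 is ZooKeeper's default client port and is an assumption about your setup.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

class PortCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMs. */
    static boolean isListening(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Connection refused or timed out: nothing is listening there
            return false;
        }
    }

    public static void main(String[] args) {
        // 2181 is an assumed ZooKeeper port; 9092 matches bootstrap.servers in this article
        System.out.println("ZooKeeper reachable: " + isListening("127.0.0.1", 2181, 1000));
        System.out.println("Kafka reachable: " + isListening("127.0.0.1", 9092, 1000));
    }
}
```

An open port only shows that a process is listening; it does not prove that the broker is healthy.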

(2) Define a Kafka producer

package com.producers;

import com.alibaba.fastjson.JSONObject;
import com.pojo.WaterSensor;
import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerRecord;
import org.apache.kafka.clients.producer.RecordMetadata;

import java.util.Properties;
import java.util.Random;

/**
 * Created by lj on 2022-07-09.
 */
public class Kafaka_Producer {

    public final static String bootstrapServers = "127.0.0.1:9092";

    public static void main(String[] args) {
        Properties props = new Properties();
        // Kafka broker address
        props.put("bootstrap.servers", bootstrapServers);
        // Serializer class for the record key
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        // Serializer class for the record value
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

        // try-with-resources closes the producer if the loop exits with an exception
        try (KafkaProducer<String, String> producer = new KafkaProducer<>(props)) {
            int i = 0;
            Random r = new Random(); // no seed passed in
            String[] lang = {"flink", "spark", "hadoop", "hive", "hbase", "impala", "presto", "superset", "nbi"};
            while (true) {
                // Send one message every 2 seconds
                Thread.sleep(2000);
                // Random id; ts and vc both take the value of the counter
                WaterSensor waterSensor = new WaterSensor(lang[r.nextInt(lang.length)], i, i);
                i++;

                String msg = JSONObject.toJSONString(waterSensor);
                System.out.println(msg);
                // The consumer in step (4) must subscribe to this same topic.
                // get() blocks until the broker acknowledges the record.
                RecordMetadata recordMetadata = producer.send(new ProducerRecord<>("kafka_data_waterSensor", null, null, msg)).get();
                // System.out.println("recordMetadata: {"+ recordMetadata +"}");
            }
        } catch (Exception e) {
            System.out.println(e.getMessage());
        }
    }
}

(3) Define a message object

package com.pojo;

import java.io.Serializable;

/**
 * Created by lj on 2022-07-05.
 */
public class WaterSensor implements Serializable {

    private String id;
    private long ts;
    private int vc;

    // No-argument constructor, required for JSON and Flink POJO handling
    public WaterSensor() {
    }

    public WaterSensor(String id, long ts, int vc) {
        this.id = id;
        this.ts = ts;
        this.vc = vc;
    }

    public int getVc() {
        return vc;
    }

    public void setVc(int vc) {
        this.vc = vc;
    }

    public String getId() {
        return id;
    }

    public void setId(String id) {
        this.id = id;
    }

    public long getTs() {
        return ts;
    }

    public void setTs(long ts) {
        this.ts = ts;
    }
}

(4) Read data from Kafka and write it to MySQL
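The job in this step writes into a MySQL table named `flinksink`, which has to exist before the job starts. The article does not show its definition, so the following is only a sketch: the column names mirror the Flink sink schema, while the MySQL column types and the class name `CreateSinkTable` are assumptions. Running `main` needs the MySQL Connector/J driver on the classpath and a reachable `testdb` database.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;
import java.sql.Statement;

class CreateSinkTable {

    // Column names follow the Flink sink schema; the MySQL types are assumptions
    static final String DDL =
            "CREATE TABLE IF NOT EXISTS flinksink ("
            + "componentname VARCHAR(255), "
            + "componentcount BIGINT NOT NULL, "
            + "componentsum BIGINT"
            + ")";

    /** Opens a JDBC connection and creates the sink table if it is missing. */
    static void createTable(String url, String user, String password) throws SQLException {
        try (Connection conn = DriverManager.getConnection(url, user, password);
             Statement stmt = conn.createStatement()) {
            stmt.executeUpdate(DDL);
        }
    }

    public static void main(String[] args) throws SQLException {
        // Host, database and credentials mirror the values used by the Flink job
        createTable(
                "jdbc:mysql://localhost:3306/testdb?useSSL=false&serverTimezone=Asia/Shanghai",
                "root",
                "root");
    }
}
```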

import com.alibaba.fastjson.JSONObject;
import com.pojo.WaterSensor;
import org.apache.flink.api.common.functions.MapFunction;
import org.apache.flink.api.common.serialization.SimpleStringSchema;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;
import org.apache.flink.table.api.Table;
import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import java.util.Properties;

import static org.apache.flink.table.api.Expressions.$;

// The original shows only main(); the class name here is a placeholder.
public class KafkaToMysqlJob {

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);
        StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

        // Read data from Kafka
        Properties properties = new Properties();
        properties.setProperty("bootstrap.servers", "127.0.0.1:9092");
        properties.setProperty("group.id", "consumer-group");
        properties.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        properties.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        properties.setProperty("auto.offset.reset", "latest");

        DataStreamSource<String> streamSource = env.addSource(
                new FlinkKafkaConsumer<String>(
                        // Must match the topic the producer in step (2) writes to
                        "kafka_data_waterSensor",
                        new SimpleStringSchema(),
                        properties)
        );

        // Parse each JSON string into a WaterSensor
        SingleOutputStreamOperator<WaterSensor> waterDS = streamSource.map(new MapFunction<String, WaterSensor>() {
            @Override
            public WaterSensor map(String s) throws Exception {
                JSONObject json = (JSONObject) JSONObject.parse(s);
                return new WaterSensor(json.getString("id"), json.getLong("ts"), json.getInteger("vc"));
            }
        });

        // Convert the stream to a table, adding a processing-time attribute pt
        Table table = tableEnv.fromDataStream(waterDS,
                $("id"),
                $("ts"),
                $("vc"),
                $("pt").proctime());
        tableEnv.createTemporaryView("EventTable", table);

        // Register the MySQL sink table; flush to MySQL after every 3 buffered rows
        tableEnv.executeSql("CREATE TABLE flinksink (" +
                "componentname STRING," +
                "componentcount BIGINT NOT NULL," +
                "componentsum BIGINT" +
                ") WITH (" +
                "'connector.type' = 'jdbc'," +
                "'connector.url' = 'jdbc:mysql://localhost:3306/testdb?characterEncoding=UTF-8&useUnicode=true&useSSL=false&tinyInt1isBit=false&allowPublicKeyRetrieval=true&serverTimezone=Asia/Shanghai'," +
                "'connector.table' = 'flinksink'," +
                "'connector.driver' = 'com.mysql.cj.jdbc.Driver'," +
                "'connector.username' = 'root'," +
                "'connector.password' = 'root'," +
                "'connector.write.flush.max-rows'='3'" +
                ")"
        );

        Table mysql_user = tableEnv.from("flinksink");
        mysql_user.printSchema();

        // Per id, count the records and sum ts in 10-second tumbling processing-time windows
        Table result = tableEnv.sqlQuery(
                "SELECT " +
                        "id as componentname, " + // window_start, window_end,
                        "COUNT(ts) as componentcount ,SUM(ts) as componentsum " +
                        "FROM TABLE( " +
                        "TUMBLE( TABLE EventTable , " +
                        "DESCRIPTOR(pt), " +
                        "INTERVAL '10' SECOND)) " +
                        "GROUP BY id , window_start, window_end"
        );

        // Option 1: write to the database
        // result.executeInsert("flinksink").print(); //;.insertInto("flinksink");

        // Option 2: write to the database
        tableEnv.createTemporaryView("ResultTable", result);
        tableEnv.executeSql("insert into flinksink SELECT * FROM ResultTable").print();

        // tableEnv.toAppendStream(result, Row.class).print("toAppendStream"); // append mode

        env.execute();
    }
}

(5) Result demo
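To make the expected rows concrete, here is a rough plain-Java sketch (no Flink involved) of what the windowed query computes: for each 10-second window and each id, the number of records and the sum of `ts`. The class name `TumbleSketch` and the input data are hypothetical, and explicit arrival timestamps stand in for Flink's processing time.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class TumbleSketch {

    static final long WINDOW_MS = 10_000L;

    /**
     * Groups records by (window start, id) and returns {count, sum of ts} per group.
     * Arrival times are assumed to be non-negative milliseconds.
     */
    static Map<String, long[]> aggregate(String[] ids, long[] ts, long[] arrivalMs) {
        Map<String, long[]> out = new LinkedHashMap<>();
        for (int i = 0; i < ids.length; i++) {
            // Tumbling windows: each record belongs to exactly one fixed 10 s bucket
            long windowStart = arrivalMs[i] - (arrivalMs[i] % WINDOW_MS);
            String key = windowStart + "|" + ids[i];
            long[] acc = out.computeIfAbsent(key, k -> new long[2]);
            acc[0] += 1;      // COUNT(ts)
            acc[1] += ts[i];  // SUM(ts)
        }
        return out;
    }

    public static void main(String[] args) {
        // Hypothetical input: five records arriving 2 s apart, ts = 0..4
        String[] ids = {"flink", "spark", "flink", "hive", "flink"};
        long[] ts = {0, 1, 2, 3, 4};
        long[] arrival = {0, 2000, 4000, 6000, 8000};
        aggregate(ids, ts, arrival).forEach((key, v) ->
                System.out.println(key + " -> count=" + v[0] + ", sum=" + v[1]));
    }
}
```

With this input all five records fall into the first window, so the `flink` group ends up with a count of 3 and a sum of 6. Since the producer sends one record every 2 seconds, each 10-second window in the real job should hold roughly five records spread across the ids.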

边栏推荐

- LeetCode 219. Repeating Elements II (2022.08.07)

- 【SSR服务端渲染+CSR客户端渲染+post请求+get请求+总结】三种开启服务器的方法总结

- 别再到处乱放配置文件了!试试我司使用 7 年的这套解决方案,稳的一秕

- 动图图解!既然IP层会分片,为什么TCP层也还要分段?

- odps sql被删除了,能找回来吗

- 北京 北京超大旧货二手市场开集了,上千种产品随便选,来的人还真不少

- 请问如何实现两个不同环境的MySQL数据库实时同步

- What is the IP SSL certificate, how to apply for?

- SQL的INSERT INTO和INSERT INTO SELECT语句

- MeterSphere - open source test platform

猜你喜欢

随机推荐

leetcode 1584. 连接所有点的最小费用

office安装出现了“office对安装源的访问被拒绝30068-4(5)”错误

宏任务和微任务——三目算符与加号优先级——原生的js如何禁用button——0xff ^ 33 的结果是——in的用法——正则匹配网址

day02 -DOM - advanced events (register events, event listeners, delete events, DOM event flow, event objects, prevent default behavior, prevent event bubbling, event delegation) - commonly used mouse

各位,我想知道,既然数据全部读取过来存放内存,我flink sql窗口关闭之后再次查询这个cdc映射

C语言的三个经典题目:三步翻转法、杨氏矩阵、辗转相除法

STM32入门开发 制作红外线遥控器(智能居家-万能遥控器)

MYSQL 的 MASTER到MASTER的主主循环同步

vim /etc/profile 写入时 出现 E121:无法打开并写入文件解决方案

易周金融分析 | 互联网系小贷平台密集增资;上半年银行理财子公司综合评价指数发布

【C语言】动态内存管理

深度剖析-class的几个对象(utlis,component)-瀑布流-懒加载(概念,作用,原理,实现步骤)

Redis的那些事:一文入门Redis的基础操作

Jenkins - Introduction to Continuous Integration (1)

RT-Thread记录(三、RT-Thread 线程操作函数及线程管理与FreeRTOS的比较)

动图图解!既然IP层会分片,为什么TCP层也还要分段?

宝塔实测-TinkPHP5.1框架小程序商城源码

字节跳动资深架构师整理2022年秋招最新面试题汇总:208页核心体系

爱可可AI前沿推介(8.8)

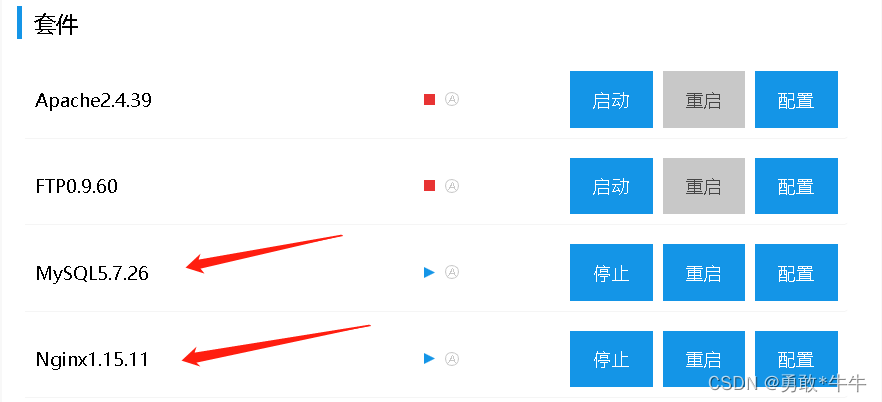

MySQL安装及使用