
Introduction to OpenVINO

2022-04-22 14:45:00 【Bamboo leaf green LVYE】

Continuing the experiments from my previous posts in this series, this article introduces OpenVINO, mainly following the official website. As mentioned earlier, good things deserve a look: the official documentation is very detailed (I find it better than TensorRT's official site), and there are many sample programs.

Get Started (OpenVINO documentation): https://docs.openvino.ai/latest/get_started.html

My software environment at the time:

Ubuntu 20.04

python3.6.13 (Anaconda)

cuda version: 11.2

cudnn version: cudnn-11.2-linux-x64-v8.1.1.33

Because this work involves model conversion and training on my own dataset, I install the OpenVINO Development Tools. Later, when deploying on a Raspberry Pi, I will try installing only the OpenVINO Runtime.

I. Installation

1. To avoid disturbing the environment configured in my earlier posts (that work was also done in a virtual environment), create a new virtual environment named testOpenVINO. For details on creating virtual environments with Anaconda, see my previous blog posts.

conda create -n testOpenVINO python=3.6

Next, upgrade pip:

pip install --upgrade pip


2. Run the following command. My earlier posts used TensorFlow 2.6.2; to verify some things, the frameworks specified here are tensorflow2 and onnx.

pip install "openvino-dev[tensorflow2,onnx]"

When it finishes, run mo -h to verify the installation.

All the development tools listed on the openvino-dev PyPI page are installed as well.

II. Converting a TensorFlow 2 model with Model Optimizer

Converting a TensorFlow* Model — OpenVINO documentation

The TensorFlow version here is 2.5.2. A pretrained model can be saved directly in SavedModel format:

```python
import tensorflow as tf
from tensorflow.keras.applications import resnet50

# Enable GPU memory growth on the first GPU, if one is present
physical_devices = tf.config.list_physical_devices('GPU')
if physical_devices:
    tf.config.experimental.set_memory_growth(physical_devices[0], True)

# Load the pretrained ResNet50 model (ImageNet weights)
model = resnet50.ResNet50(weights='imagenet')

# Save the model in SavedModel format
tf.saved_model.save(model, "resnet/1/")
```

After running it, you can see the generated model:

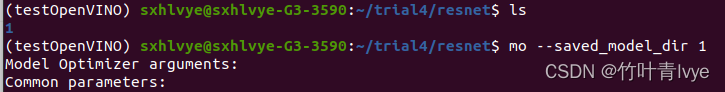

Run the following command from inside the resnet/ directory to convert the model to OpenVINO IR:

mo --saved_model_dir 1

Three files are generated, including the IR pair saved_model.xml and saved_model.bin.

III. Running inference with OpenVINO in Python

Integrate OpenVINO with Your Application — OpenVINO documentation

Load the model converted above and use it to classify a picture of a kitten.

The code is as follows:

```python
import time

import numpy as np
import openvino.runtime as ov
from tensorflow.keras.preprocessing import image
from tensorflow.keras.applications.resnet50 import preprocess_input, decode_predictions

core = ov.Core()
# Compile the IR produced by mo; "AUTO" lets OpenVINO choose the device
compiled_model = core.compile_model("./resnet/saved_model.xml", "AUTO")
infer_request = compiled_model.create_infer_request()

# Same preprocessing as the TensorFlow version: 224x224 RGB + ResNet50 preprocess_input
img = image.load_img('2008_002682.jpg', target_size=(224, 224))
img = image.img_to_array(img)
img = preprocess_input(img)
img = np.expand_dims(img, axis=0)  # NHWC: (1, 224, 224, 3)

# Create a tensor from the NumPy array (shared_memory=False copies the data)
input_tensor = ov.Tensor(array=img, shared_memory=False)
infer_request.set_input_tensor(input_tensor)

t_model = time.perf_counter()
infer_request.start_async()
infer_request.wait()
print(f'do inference cost:{time.perf_counter() - t_model:.8f}s')

# Get the output tensor (the model has a single output)
output = infer_request.get_output_tensor()
output_buffer = output.data
print(output_buffer.shape)
print('Predicted:', decode_predictions(output_buffer, top=5)[0])
print("ok")
```

The results are as follows:

```text
/home/sxhlvye/anaconda3/envs/testOpenVINO/bin/python3.6 /home/sxhlvye/trial4/test_inference.py
2022-04-20 12:54:36.780974: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcudart.so.11.0
do inference cost:0.02717873s
(1, 1000)
Predicted: [('n02123597', 'Siamese_cat', 0.16550684), ('n02108915', 'French_bulldog', 0.14137998), ('n04409515', 'tennis_ball', 0.08570903), ('n02095314', 'wire-haired_fox_terrier', 0.052046664), ('n02123045', 'tabby', 0.050695512)]
ok

Process finished with exit code 0
```

Compared with inference in the TensorFlow framework before conversion, the prediction time dropped from 0.18 s to 0.027 s.
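The 0.18 s vs 0.027 s comparison comes from a single timed call on each side. A single call can include one-off costs (memory allocation, caches, lazy initialization), so a fairer comparison usually discards a few warm-up runs and reports the median of several. The helper below is a minimal sketch of that idea; `benchmark` is a hypothetical name, not part of OpenVINO or TensorFlow, and it accepts any zero-argument callable.

```python
import statistics
import time


def benchmark(run_once, warmup=3, repeats=20):
    """Call run_once() `warmup` times untimed, then `repeats` times timed; return stats in seconds."""
    for _ in range(warmup):
        run_once()  # early calls may include one-off costs (allocation, caches, lazy init)
    samples = []
    for _ in range(repeats):
        t0 = time.perf_counter()
        run_once()
        samples.append(time.perf_counter() - t0)
    return {
        "median": statistics.median(samples),
        "min": min(samples),
        "max": max(samples),
    }


if __name__ == "__main__":
    # With the inference script above, the callable could be:
    #   benchmark(lambda: (infer_request.start_async(), infer_request.wait()))
    stats = benchmark(lambda: sum(range(10000)))
    print(f"median {stats['median'] * 1e3:.3f} ms (min {stats['min'] * 1e3:.3f}, max {stats['max'] * 1e3:.3f})")
```

The same helper can wrap the TensorFlow call (for example `lambda: model.predict(img)`), so both sides are measured the same way.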

IV. Using OpenVINO in C++

To use the OpenVINO library from C++, some additional setup is needed. Download the OpenVINO Toolkit from this page:

Download Intel Distribution of OpenVINO Toolkit: https://www.intel.com/content/www/us/en/developer/tools/openvino-toolkit/download.html

Select the options that match your system.

My downloaded files are in this location (adjust to your own path):

Then install it by following the official step-by-step guide:

Install and Configure Intel Distribution of OpenVINO Toolkit for Linux — OpenVINO documentation

The installation directory is shown in the installer screen below.

A prompt appears when the installation completes.

The OpenVINO installation directory can then be seen.

cd into that directory, then run the following command:

Next, update the environment variables by editing the ~/.bashrc file:

gedit ~/.bashrc

Add the following line (adjust to your own path):

source /home/sxhlvye/intel/openvino_2022/setupvars.sh

When you're done, don't forget to run source ~/.bashrc

After this, every new terminal automatically gets all OpenVINO-related environment variables. If several OpenVINO versions are installed on the computer, you can switch between them simply by changing the setupvars.sh path in ~/.bashrc.
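To confirm in a new terminal that the line added to ~/.bashrc took effect, one option is to look for an environment variable set by setupvars.sh. The sketch below assumes that setupvars.sh exports INTEL_OPENVINO_DIR pointing at the install directory (check this against your copy of the script); `openvino_env_dir` is a hypothetical helper name.

```python
import os


def openvino_env_dir(env=None):
    """Return INTEL_OPENVINO_DIR from the given mapping (default: os.environ), or None if unset.

    Assumption: setupvars.sh exports INTEL_OPENVINO_DIR.
    """
    env = os.environ if env is None else env
    return env.get("INTEL_OPENVINO_DIR")


if __name__ == "__main__":
    install_dir = openvino_env_dir()
    if install_dir:
        print("OpenVINO environment loaded from:", install_dir)
    else:
        print("INTEL_OPENVINO_DIR is not set; was setupvars.sh sourced in this shell?")
```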

I won't install OpenCV here for now; the computer still has the OpenCV environment from my previous two blog posts.

With this environment in place, I load the OpenVINO Intermediate Representation (IR) model generated in Part II from C++. Qt Creator is again used as the IDE; for configuring Qt Creator for plain C++ code, see my previous blog posts.
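The IR converted from the TensorFlow SavedModel keeps TensorFlow's NHWC input layout, (1, 224, 224, 3), which is the input shape used by both the Python and the C++ code in this post. The layout decides how the flat float buffer handed to ov::Tensor must be filled: main.cpp writes it in HWC order (offset i*W*3 + j*3 + c), whereas the CHW offset c*H*W + i*W + j would only suit a model expecting NCHW input. The NumPy sketch below checks both offset formulas against a 224x224x3 array; `hwc_index` and `chw_index` are illustrative names.

```python
import numpy as np

H, W, C = 224, 224, 3


def hwc_index(i, j, c, width=W, channels=C):
    """Flat offset of (row i, col j, channel c) in an NHWC buffer with batch size 1."""
    return i * width * channels + j * channels + c


def chw_index(i, j, c, height=H, width=W):
    """Flat offset of the same element in an NCHW buffer with batch size 1."""
    return c * height * width + i * width + j


# Stand-in image in HWC order (the order of an OpenCV Mat or a Keras image array)
img = np.arange(H * W * C, dtype=np.float32).reshape(H, W, C)
nhwc_flat = img.ravel()                     # row-major flattening keeps HWC order
nchw_flat = img.transpose(2, 0, 1).ravel()  # channels-first copy for NCHW models

i, j, c = 10, 20, 2
print(nhwc_flat[hwc_index(i, j, c)] == img[i, j, c])  # True
print(nchw_flat[chw_index(i, j, c)] == img[i, j, c])  # True
```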

The project directory structure is as follows:

The code in main.cpp is as follows (my own code, not from the official site; for reference only):

```cpp
#include <openvino/openvino.hpp>
#include <opencv2/opencv.hpp>

#include <algorithm>
#include <chrono>
#include <fstream>
#include <iostream>
#include <numeric>
#include <string>
#include <vector>

using namespace std;

int main()
{
    ov::Core core;
    ov::CompiledModel compiled_model = core.compile_model("/home/sxhlvye/trial4/resnet/saved_model.xml", "AUTO");
    ov::InferRequest infer_request = compiled_model.create_infer_request();

    auto input_port = compiled_model.input();
    cout << input_port.get_element_type() << endl;  // expected f32; the buffer below is float

    const int channel = 3;
    const int inputH = 224;  // ResNet50 input size
    const int inputW = 224;
    // The IR keeps TensorFlow's NHWC layout: {1, 224, 224, 3}
    ov::Shape shape{1, static_cast<size_t>(inputH), static_cast<size_t>(inputW), static_cast<size_t>(channel)};

    cv::Mat image = cv::imread("/home/sxhlvye/Trial1/Tensorrt/2008_002682.jpg", cv::IMREAD_COLOR);
    if (image.empty()) { cerr << "failed to read image" << endl; return -1; }
    //cv::cvtColor(image, image, cv::COLOR_BGR2RGB);
    cout << image.channels() << "," << image.cols << "," << image.rows << endl;

    // cv::resize keeps the 8-bit type of the source, so dst is CV_8UC3
    cv::Mat dst;
    cv::resize(image, dst, cv::Size(inputW, inputH));
    cout << dst.channels() << "," << dst.cols << "," << dst.rows << endl;

    // Copy pixels in HWC order (BGR, as loaded by OpenCV), scaled to [0, 1]
    std::vector<float> fileData(inputH * inputW * channel);
    for (int i = 0; i < dst.rows; ++i) {
        const cv::Vec3b *p1 = dst.ptr<cv::Vec3b>(i);
        for (int j = 0; j < dst.cols; ++j)
            for (int c = 0; c < channel; ++c)
                fileData[i * dst.cols * channel + j * channel + c] = p1[j][c] / 255.0f;
    }

    // Wrap fileData without copying; it must stay alive during inference
    ov::Tensor input_tensor(input_port.get_element_type(), shape, fileData.data());
    infer_request.set_input_tensor(input_tensor);

    // Wall-clock timing (clock() measures CPU time, which overstates multithreaded inference)
    auto startTime = chrono::steady_clock::now();
    infer_request.start_async();
    infer_request.wait();
    auto endTime = chrono::steady_clock::now();
    cout << "cost: " << chrono::duration<double>(endTime - startTime).count() << "s" << endl;

    // Get the single output tensor (ResNet50 already ends in softmax)
    auto output_tensor = infer_request.get_output_tensor();
    const size_t outputSize = output_tensor.get_size();
    cout << outputSize << endl;
    const float *output = output_tensor.data<float>();

    // Sort class indices by score so each score stays paired with its class
    vector<size_t> indexs(outputSize);
    iota(indexs.begin(), indexs.end(), 0);
    const size_t topK = min<size_t>(5, outputSize);
    partial_sort(indexs.begin(), indexs.begin() + topK, indexs.end(),
                 [output](size_t a, size_t b) { return output[a] > output[b]; });

    // One ImageNet class name per line
    vector<string> labels;
    string line;
    ifstream readFile("/home/sxhlvye/Trial/yolov3-9.5.0/imagenet_classes.txt");
    while (getline(readFile, line))
        labels.push_back(line);

    cout << "top 5: " << endl;
    for (size_t k = 0; k < topK; ++k) {
        size_t idx = indexs[k];
        string name = idx < labels.size() ? labels[idx] : to_string(idx);
        cout << name << "--->" << output[idx] << endl;
    }
    cout << "ok" << endl;
    return 0;
}
```
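Choosing the top 5 classes from the 1000-element output only requires the indices of the largest scores; those indices are then used to look up imagenet_classes.txt (in C++) or the class names inside decode_predictions (in Python). A NumPy sketch of the same selection, with `top_k` as an illustrative helper name:

```python
from typing import List, Tuple

import numpy as np


def top_k(scores, k=5) -> List[Tuple[int, float]]:
    """Return (class_index, score) pairs for the k highest scores, best first."""
    flat = np.asarray(scores).reshape(-1)  # accept (1, 1000) or (1000,)
    k = min(k, flat.size)
    # argpartition finds the k largest in O(n); only those k are then sorted
    idx = np.argpartition(-flat, k - 1)[:k]
    idx = idx[np.argsort(-flat[idx], kind="stable")]
    return [(int(i), float(flat[i])) for i in idx]


if __name__ == "__main__":
    scores = np.array([[0.1, 0.5, 0.05, 0.3, 0.02, 0.03]])
    print(top_k(scores, k=3))  # [(1, 0.5), (3, 0.3), (0, 0.1)]
```

Sorting indices rather than the scores themselves keeps every score paired with its class, so no search for matching values is needed afterwards.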

The contents of the project file test8.pro are as follows:

```
TEMPLATE = app
CONFIG += console c++11
CONFIG -= app_bundle
CONFIG -= qt

SOURCES += \
    main.cpp

INCLUDEPATH += /usr/local/include \
    /usr/local/include/opencv \
    /usr/local/include/opencv2 \
    /home/sxhlvye/intel/openvino_2022/runtime/include \
    /home/sxhlvye/intel/openvino_2022/runtime/include/ie

LIBS += /usr/local/lib/libopencv_highgui.so \
    /usr/local/lib/libopencv_core.so \
    /usr/local/lib/libopencv_imgproc.so \
    /usr/local/lib/libopencv_imgcodecs.so \
    /home/sxhlvye/intel/openvino_2022/runtime/lib/intel64/libopenvino.so \
    /home/sxhlvye/intel/openvino_2022/runtime/3rdparty/tbb/lib/libtbb.so.2
```

After building, run the generated executable in a terminal. The prediction output is as follows:

These results differ greatly from the Python predictions in Part III. The main reason is that the image preprocessing is not the same.
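The Python code in Part III uses tensorflow.keras.applications.resnet50.preprocess_input, which for ResNet50 follows the "caffe" convention: convert RGB to BGR and subtract the ImageNet per-channel means (103.939, 116.779, 123.68 in BGR order), with no scaling. main.cpp instead feeds OpenCV's BGR pixels divided by 255, so the network sees inputs in a very different value range. The sketch below reproduces both pipelines in NumPy so the difference is visible; the function names are illustrative and the image is random stand-in data.

```python
import numpy as np

# ImageNet channel means in BGR order, as used by Keras "caffe"-mode preprocessing
IMAGENET_BGR_MEAN = np.array([103.939, 116.779, 123.68], dtype=np.float32)


def keras_resnet50_style(rgb):
    """Mimic keras resnet50.preprocess_input: RGB -> BGR, subtract per-channel mean, no scaling."""
    bgr = rgb[..., ::-1].astype(np.float32)
    return bgr - IMAGENET_BGR_MEAN


def cpp_main_style(bgr):
    """Mimic main.cpp: keep OpenCV's BGR order and scale to [0, 1]."""
    return bgr.astype(np.float32) / 255.0


if __name__ == "__main__":
    rgb = np.random.RandomState(0).randint(0, 256, size=(224, 224, 3), dtype=np.uint8)  # stand-in image
    bgr = rgb[..., ::-1]  # the same pixels as cv2.imread would return them
    a = keras_resnet50_style(rgb)
    b = cpp_main_style(bgr)
    print("keras-style range:", a.min(), a.max())
    print("main.cpp-style range:", b.min(), b.max())
```

Because the pretrained weights expect caffe-style input, applying this convention in main.cpp (rather than switching Python to /255) should presumably bring the C++ predictions back in line with the Siamese_cat result of Part III.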

To verify this, I changed the image preprocessing of the Python code from Part III to match the C++ version, also using the OpenCV library. The modified code is as follows:

```python
import time

import cv2
import numpy as np
import openvino.runtime as ov
from tensorflow.keras.applications.resnet50 import decode_predictions

core = ov.Core()
compiled_model = core.compile_model("./resnet/saved_model.xml", "AUTO")
infer_request = compiled_model.create_infer_request()

# Preprocess the same way as main.cpp instead of image.load_img / img_to_array / preprocess_input:
# OpenCV loads BGR, resize to 224x224, scale to [0, 1], add the batch dimension (NHWC)
img = cv2.imread('2008_002682.jpg')
img = cv2.resize(img, (224, 224))
img_np = np.array(img, dtype=np.float32) / 255.
img_np = np.expand_dims(img_np, axis=0)
print(img_np.shape)

# Create a tensor from the NumPy array (shared_memory=False copies the data)
input_tensor = ov.Tensor(array=img_np, shared_memory=False)
infer_request.set_input_tensor(input_tensor)

t_model = time.perf_counter()
infer_request.start_async()
infer_request.wait()
print(f'do inference cost:{time.perf_counter() - t_model:.8f}s')

# Get the output tensor (the model has a single output)
output = infer_request.get_output_tensor()
output_buffer = output.data
print(output_buffer.shape)
print('Predicted:', decode_predictions(output_buffer, top=5)[0])
print("ok")
```

The prediction results are as follows:

```text
/home/sxhlvye/anaconda3/envs/testOpenVINO/bin/python3.6 /home/sxhlvye/trial4/test_inference.py
2022-04-21 07:59:02.427084: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcudart.so.11.0
[E:] [BSL] found 0 ioexpander device
[E:] [BSL] found 0 ioexpander device
(1, 224, 224, 3)
do inference cost:0.02702089s
(1, 1000)
Predicted: [('n01930112', 'nematode', 0.13559894), ('n03041632', 'cleaver', 0.041396398), ('n03838899', 'oboe', 0.034457874), ('n02783161', 'ballpoint', 0.02541826), ('n04270147', 'spatula', 0.023189805)]
ok

Process finished with exit code 0
```

As you can see, once the preprocessing is the same, the C++ and Python results agree.
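Checking agreement by eye works for the top 5, but a numerical check is stricter. The sketch below compares two score vectors, for example the Python output_buffer and the C++ scores, assuming the C++ program were changed to write all 1000 scores to a text file readable by np.loadtxt (main.cpp does not do this; the file and `compare_outputs` are hypothetical).

```python
import numpy as np


def compare_outputs(a, b, k=5, atol=1e-4):
    """Compare two score vectors from runs of the same model on the same input."""
    a = np.asarray(a, dtype=np.float64).reshape(-1)  # accept (1, 1000) or (1000,)
    b = np.asarray(b, dtype=np.float64).reshape(-1)
    top_a = np.argsort(-a, kind="stable")[:k]  # indices of the k best classes, best first
    top_b = np.argsort(-b, kind="stable")[:k]
    return {
        "max_abs_diff": float(np.max(np.abs(a - b))),
        "same_topk_order": bool(np.array_equal(top_a, top_b)),
        "allclose": bool(np.allclose(a, b, atol=atol)),
    }


if __name__ == "__main__":
    # Hypothetical usage: py = output_buffer; cpp = np.loadtxt("cpp_scores.txt")
    py = np.array([0.1, 0.5, 0.2, 0.05, 0.15])
    cpp = py + 1e-6
    print(compare_outputs(py, cpp, k=3))
```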

The official website has a lot of detailed information that I will read carefully when I have time; this is just a first step!

Copyright notice

This article was written by [Bamboo leaf green LVYE]. Please include a link to the original when reposting. Thanks.

https://yzsam.com/2022/04/202204221440138787.html
