
Deep Learning [Chapter 2]

2022-08-11 01:41:00 【sweetheart7-7】


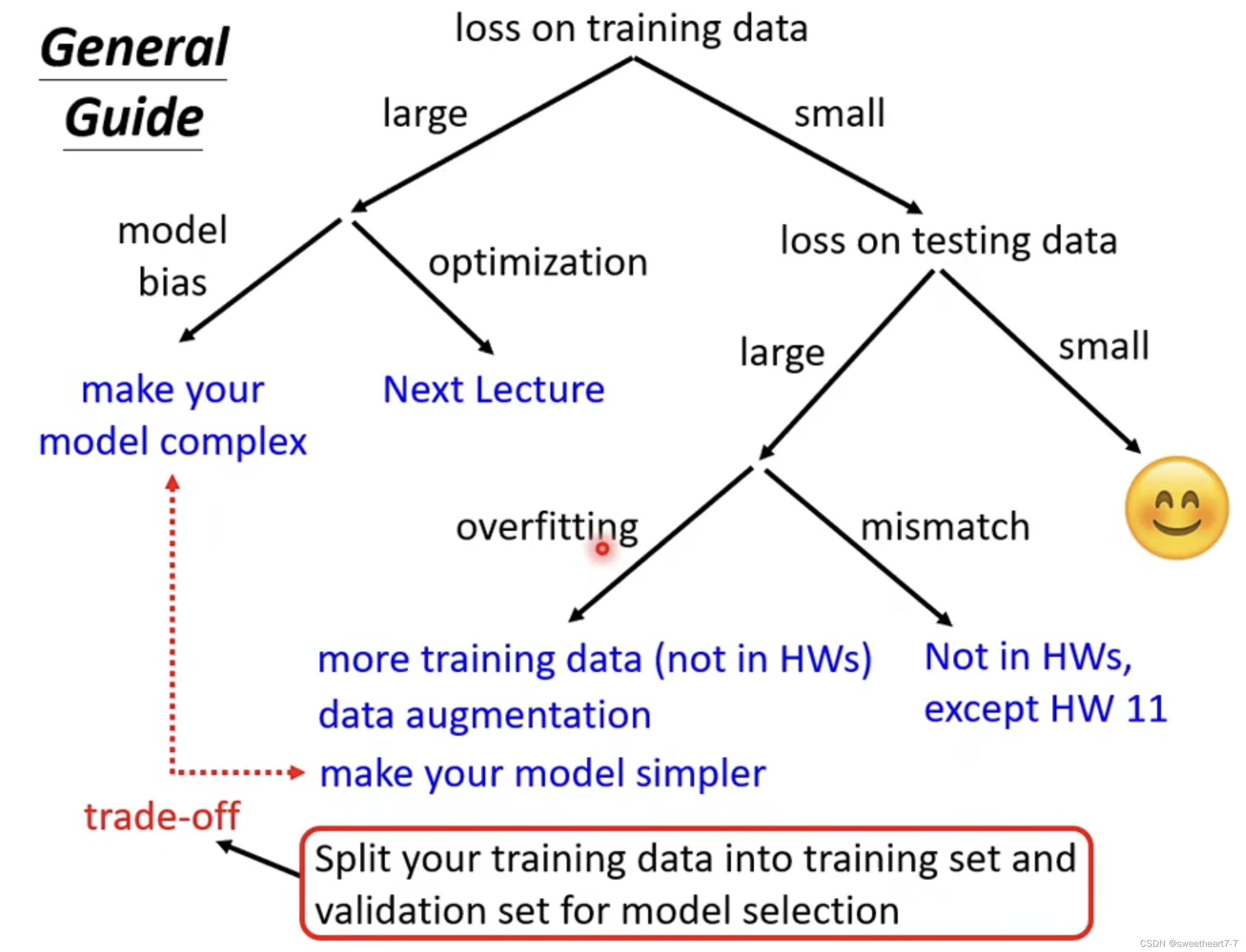

A Guide to Machine Learning Tasks

Note: when the loss on the training data is large and increasing the model complexity does not reduce it, the problem is most likely with optimization.

Several common ways to address overfitting:

- Reduce model complexity: choose a simpler, smoother model

- Add more training data

- Use fewer parameters, or share parameters

- Use fewer features

- Early stopping

- Regularization

- Dropout
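As one illustration of the list above, early stopping can be sketched as follows. This is a minimal sketch, not tied to any framework: `val_losses` is a hypothetical list of per-epoch validation losses, and `patience` is an assumed hyperparameter that does not appear in the original notes.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return the index of the epoch whose weights should be kept.

    Training is treated as stopped once the validation loss has failed to
    improve for `patience` consecutive epochs.
    """
    best_epoch = 0
    best_loss = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # validation loss stopped improving: stop training
    return best_epoch


# Hypothetical validation curve: it improves, then the model starts to overfit.
history = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.40]
print(early_stopping_epoch(history, patience=3))
```

Note that the last value in `history` is never reached: the loop stops as soon as the patience budget is used up.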

How to pick a model that performs as well as possible on unseen testing data

Hold out a validation set and use it to choose the model. A common approach is N-fold cross-validation: split the training data into N folds and validate on each fold in turn.
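The splitting step of N-fold cross-validation can be sketched in plain Python. The function names and the per-fold losses below are hypothetical, and the sketch assumes the data has already been shuffled.

```python
def n_fold_splits(num_samples, n_folds):
    """Yield (train_indices, val_indices) for each of the n folds."""
    indices = list(range(num_samples))
    # Spread the remainder over the first folds so fold sizes differ by at most 1.
    base, extra = divmod(num_samples, n_folds)
    start = 0
    for fold in range(n_folds):
        size = base + (1 if fold < extra else 0)
        val = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, val
        start += size


def pick_model(val_losses_per_model):
    """Pick the model whose average validation loss over the folds is lowest."""
    averages = {
        name: sum(losses) / len(losses)
        for name, losses in val_losses_per_model.items()
    }
    return min(averages, key=averages.get)


for train_idx, val_idx in n_fold_splits(num_samples=7, n_folds=3):
    print(train_idx, val_idx)

# Hypothetical per-fold validation losses for three candidate models.
candidates = {
    "model_1": [0.40, 0.50, 0.30],
    "model_2": [0.20, 0.30, 0.25],
    "model_3": [0.60, 0.20, 0.50],
}
print(pick_model(candidates))
```

Every sample is used for validation exactly once, so the choice does not depend on one lucky or unlucky split.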

What to Do When a Neural Network Fails to Train

Optimization fails because…

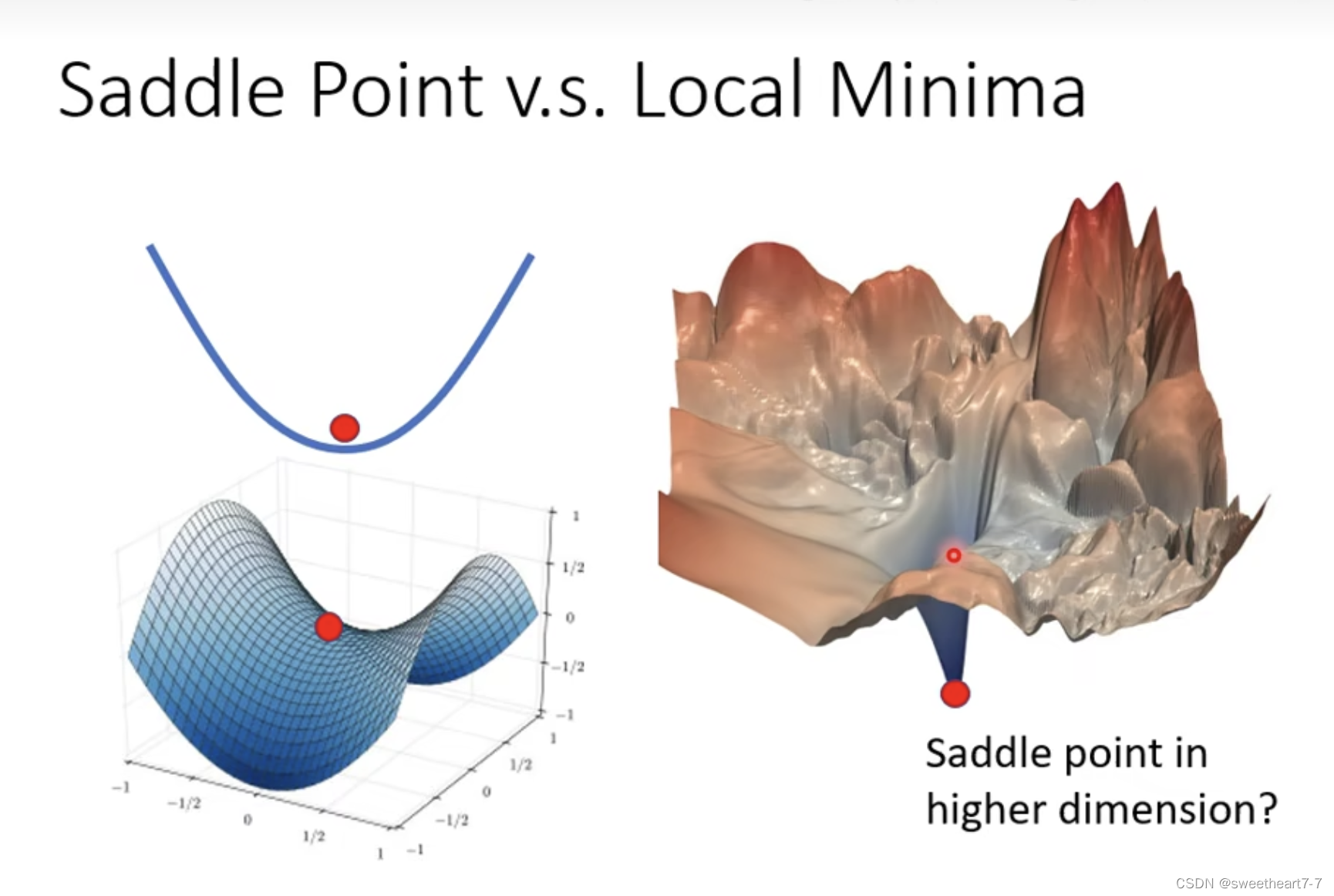

Local minima and saddle points

At both kinds of point the gradient is 0.

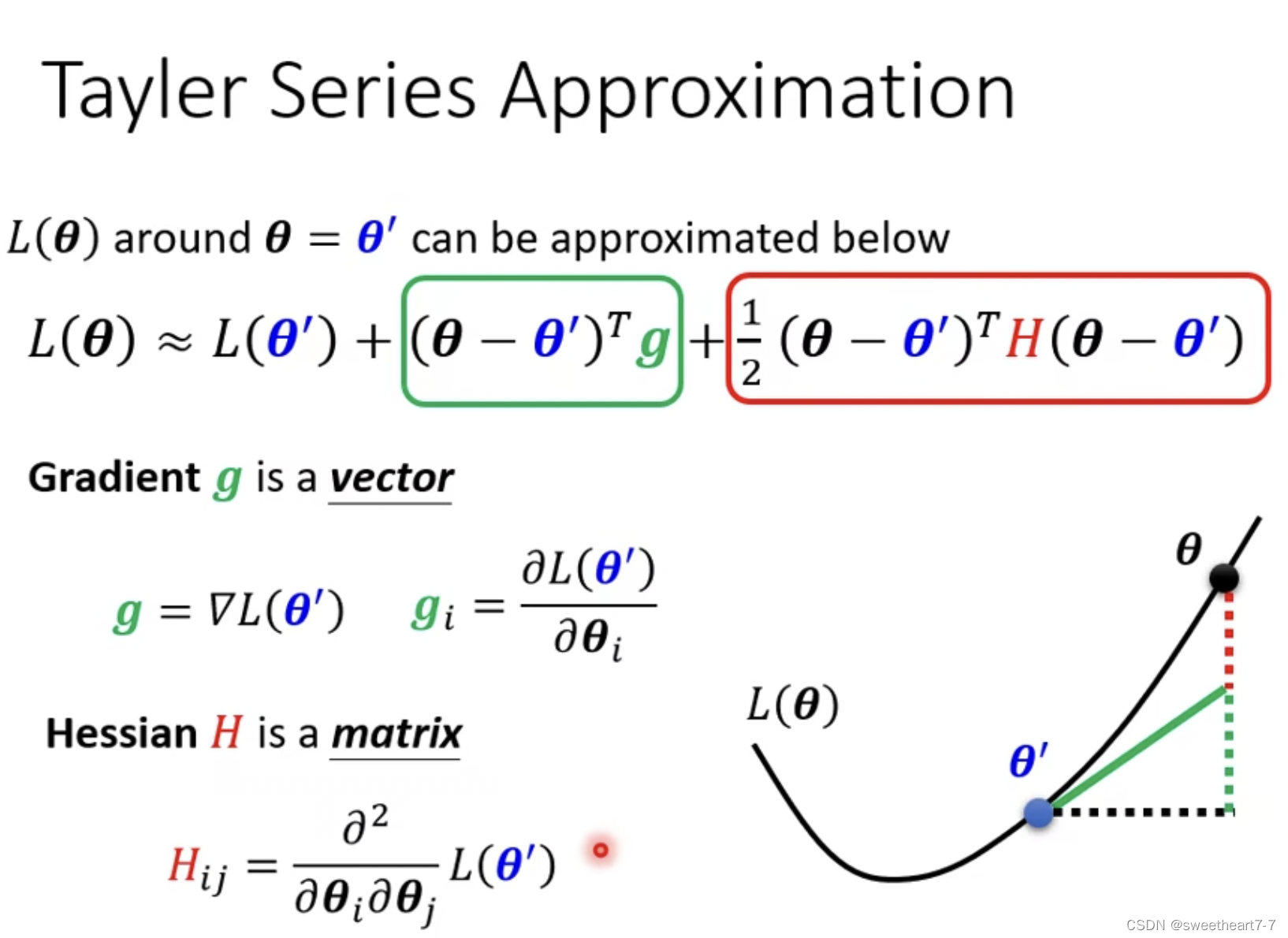

How to determine the shape of the loss function near $\theta = \theta'$: approximate it with a Taylor series expansion.

At a critical point the gradient is 0, so the first-order term vanishes.

If $L(\theta) > L(\theta')$ for every other $\theta$ near $\theta'$, then $\theta'$ is a local minimum…

But we cannot try every possible value of $v$, so the test is restated as follows:

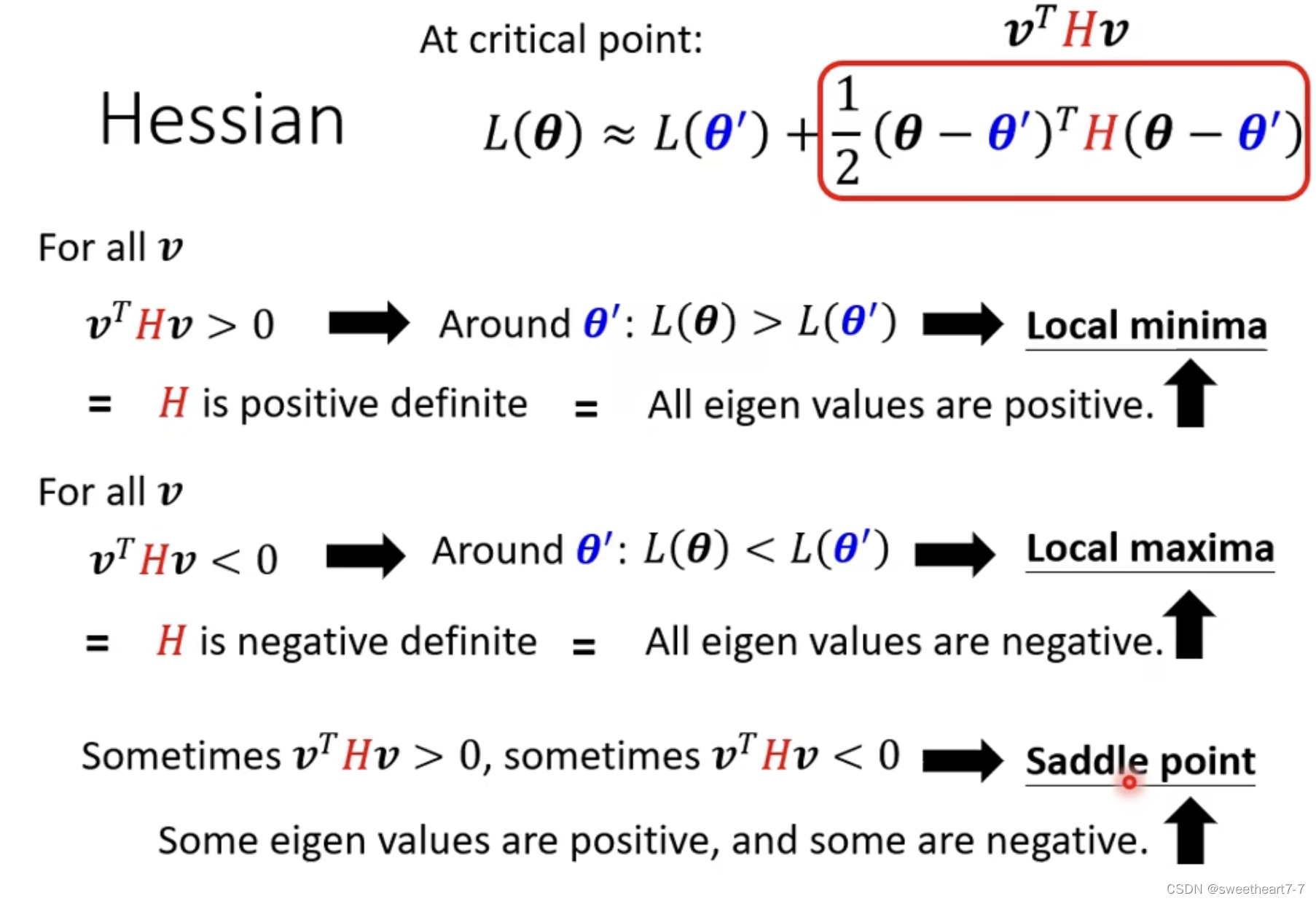

A Hessian matrix $H$ that satisfies $v^T H v > 0$ for every $v \neq 0$ is called positive definite.

Property of a positive definite matrix: all of its eigenvalues are positive.
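Written out, the argument above is a second-order Taylor expansion. The symbols $g$ (the gradient at $\theta'$) and $v = \theta - \theta'$ follow the usual convention and are assumed here, since the original formulas appear only in the figures.

$$
L(\theta) \approx L(\theta') + (\theta - \theta')^T g + \frac{1}{2} (\theta - \theta')^T H (\theta - \theta')
$$

At a critical point $g = 0$, so with $v = \theta - \theta'$:

$$
L(\theta) \approx L(\theta') + \frac{1}{2} v^T H v
$$

- If $v^T H v > 0$ for all $v \neq 0$ (all eigenvalues of $H$ positive), then $L(\theta) > L(\theta')$ nearby: local minimum.
- If $v^T H v < 0$ for all $v \neq 0$ (all eigenvalues negative), then $L(\theta) < L(\theta')$ nearby: local maximum.
- If $v^T H v$ is positive for some $v$ and negative for others (eigenvalues of both signs): saddle point.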

Example:

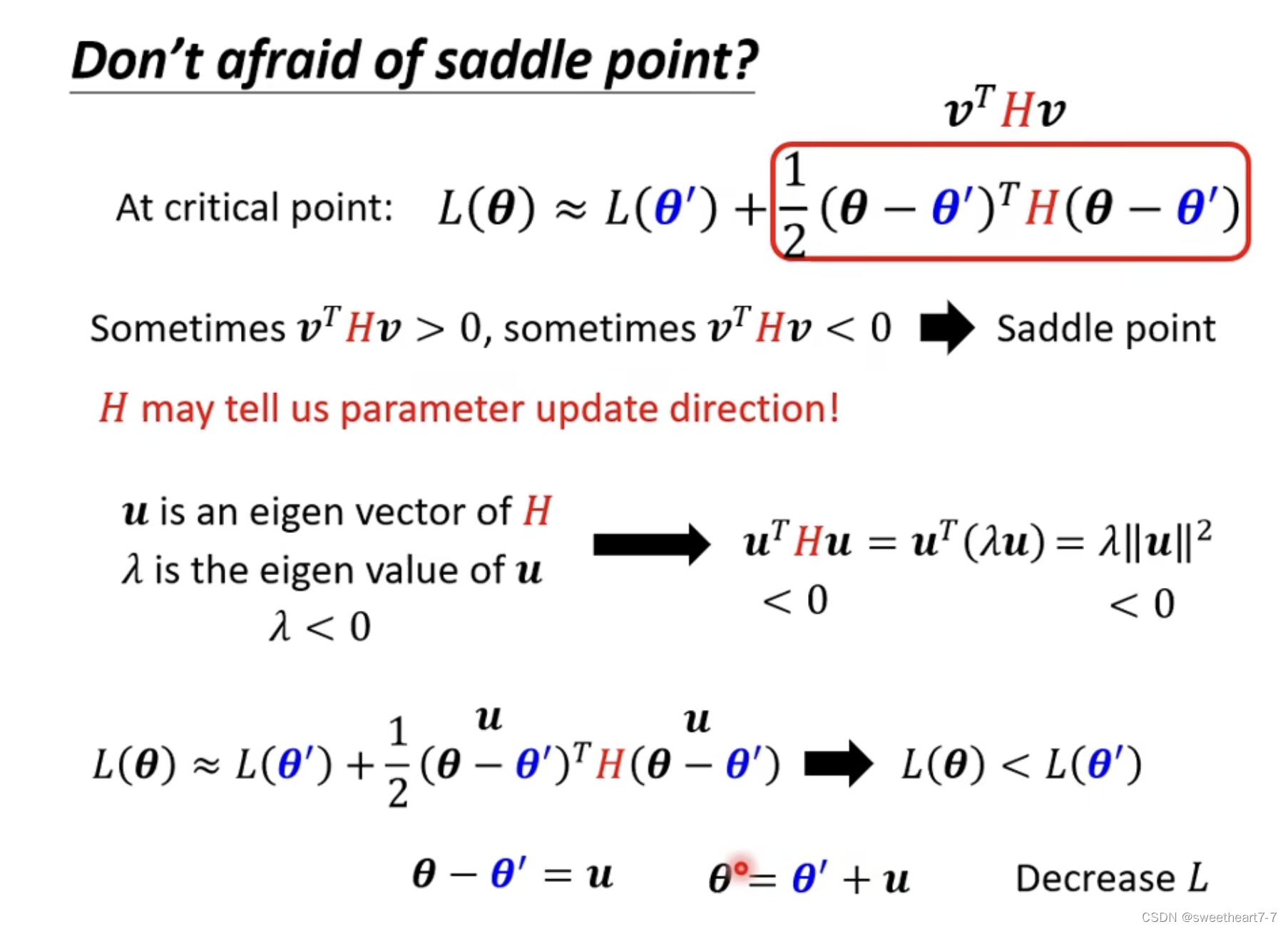

When the critical point is a saddle point, the Hessian can tell us which direction to update in.

Find an eigenvector whose eigenvalue is negative. Moving along that direction reduces the loss.
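The eigenvalue test and the escape direction can be checked on a small example. The sketch below assumes a toy loss $L(w_1, w_2) = (1 - w_1 w_2)^2$, chosen for illustration, whose Hessian at the origin was derived by hand; all function names are hypothetical.

```python
import math


def loss(w1, w2):
    """Toy loss (1 - w1*w2)^2, which has a critical point at the origin."""
    return (1.0 - w1 * w2) ** 2


def hessian_at_origin():
    """Entries (a, b, c) of the Hessian [[a, b], [b, c]] at (0, 0), by hand."""
    return 0.0, -2.0, 0.0


def eig_sym_2x2(a, b, c):
    """Eigenpairs of the symmetric matrix [[a, b], [b, c]], smallest first."""
    if b == 0.0:
        pairs = [(a, (1.0, 0.0)), (c, (0.0, 1.0))]
        return sorted(pairs, key=lambda pair: pair[0])
    mean = (a + c) / 2.0
    radius = math.hypot((a - c) / 2.0, b)
    pairs = []
    for lam in (mean - radius, mean + radius):
        vec = (b, lam - a)  # solves (H - lam*I) vec = 0 when b != 0
        norm = math.hypot(vec[0], vec[1])
        pairs.append((lam, (vec[0] / norm, vec[1] / norm)))
    return pairs


def classify_critical_point(eigenvalues):
    """Classify a critical point from the signs of the Hessian eigenvalues."""
    if all(lam > 0 for lam in eigenvalues):
        return "local minimum"
    if all(lam < 0 for lam in eigenvalues):
        return "local maximum"
    if any(lam > 0 for lam in eigenvalues) and any(lam < 0 for lam in eigenvalues):
        return "saddle point"
    return "inconclusive"  # some eigenvalue is 0: the second-order test cannot decide


pairs = eig_sym_2x2(*hessian_at_origin())
kind = classify_critical_point([lam for lam, _ in pairs])
lam_min, u = pairs[0]  # most negative eigenvalue and its eigenvector
step = 0.1
print(kind, lam_min, u)
print(loss(0.0, 0.0), loss(step * u[0], step * u[1]))
```

In practice this is rarely done for real networks, because computing the Hessian and its eigenvectors is expensive.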

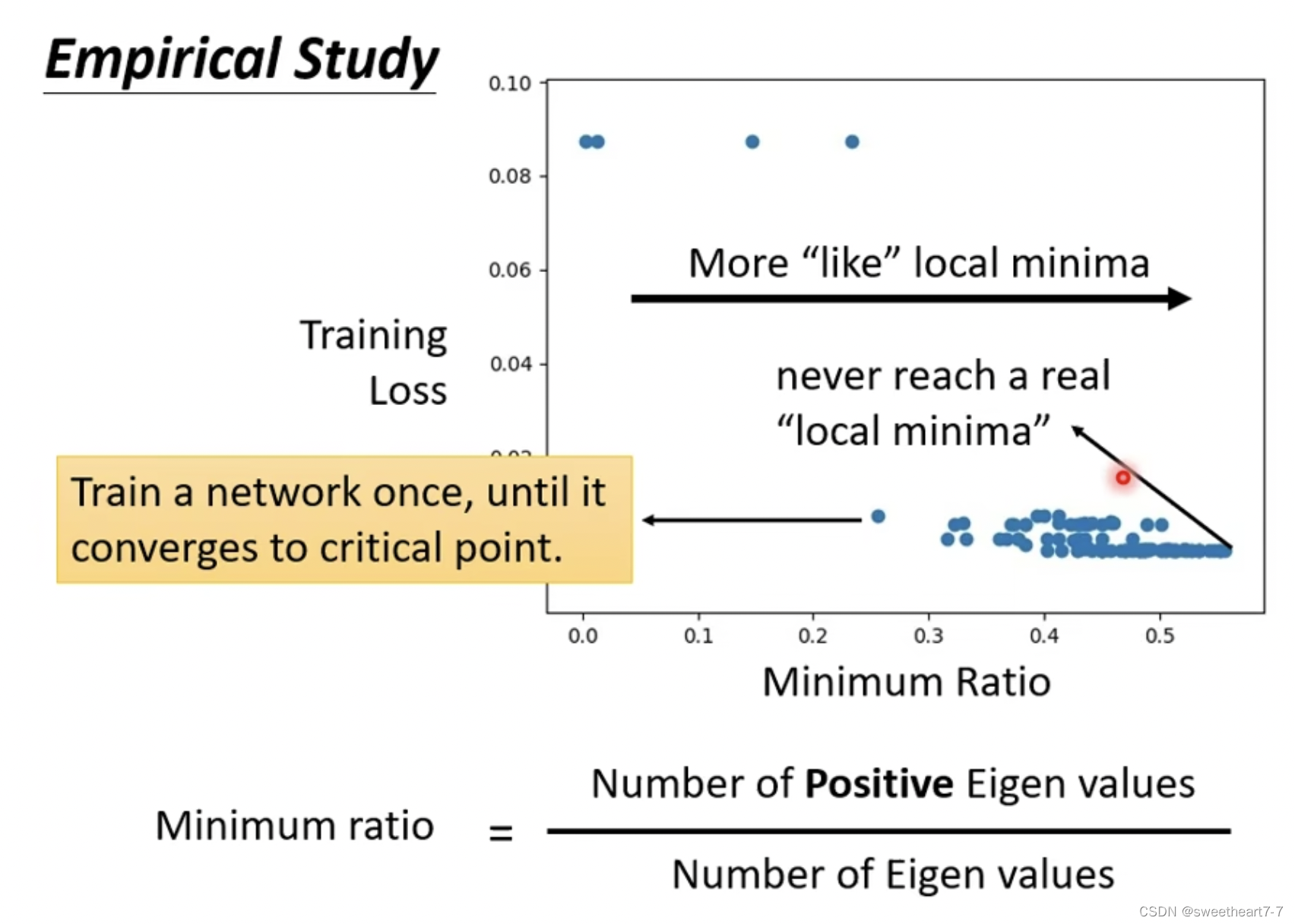

Each point represents one network.

The vertical axis is the loss at the moment training stopped.

The horizontal axis is the fraction of Hessian eigenvalues that are positive at the moment training stopped.

So in high-dimensional spaces, most critical points are saddle points rather than local minima.

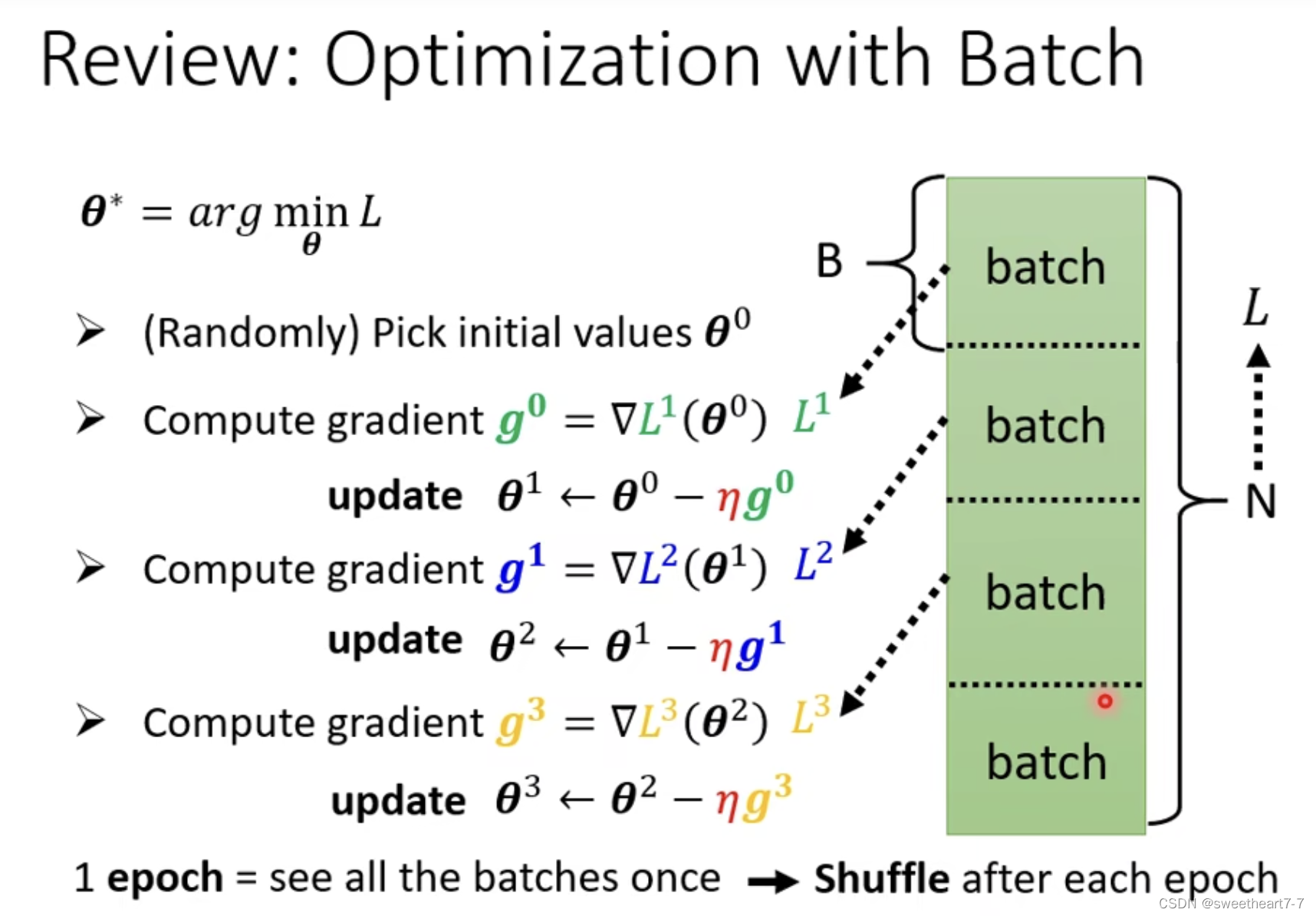

Batch and Momentum

Batch

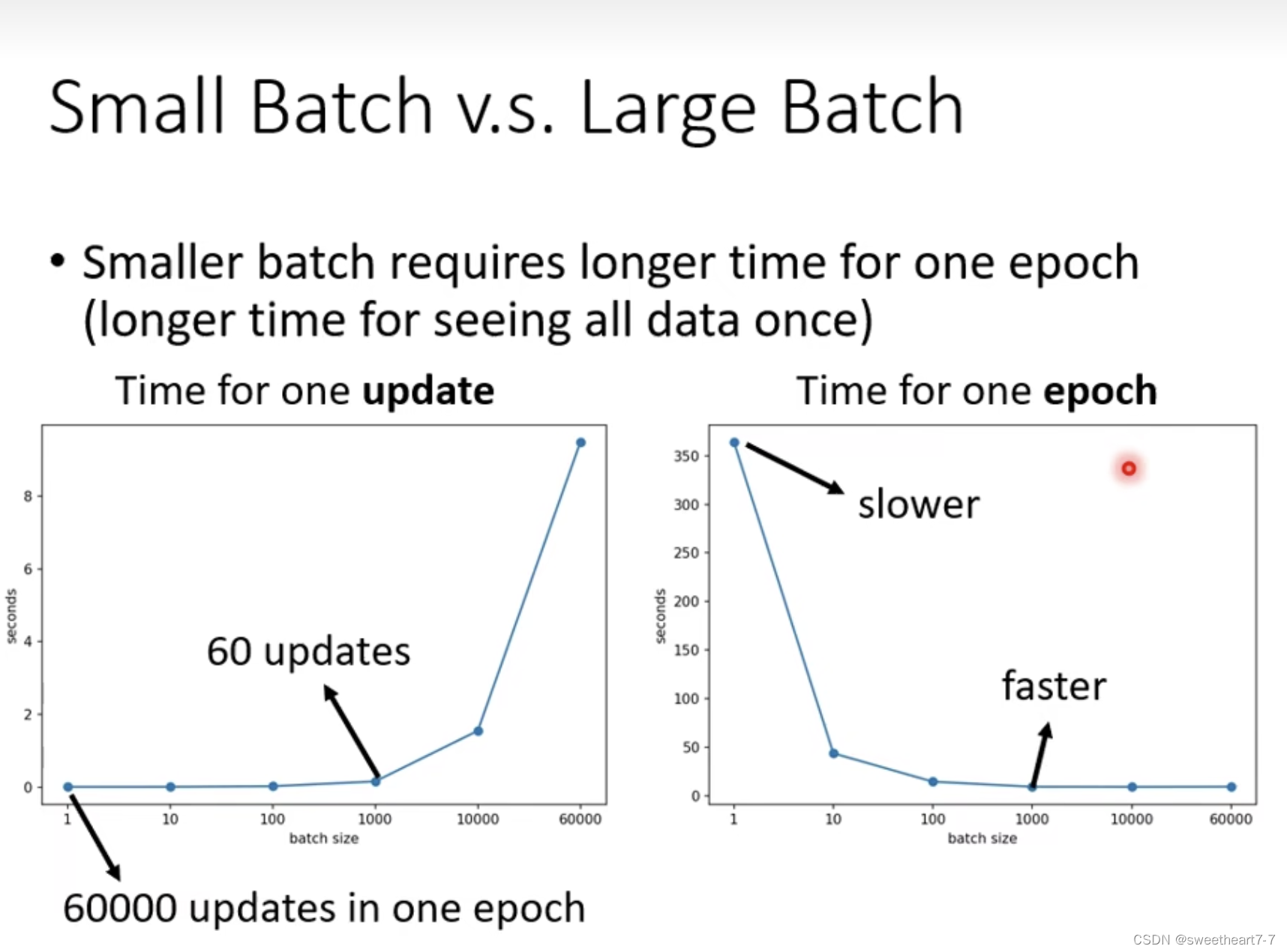

Why use batches: the parameters are updated once per batch.
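The "one update per batch" behaviour can be sketched with a one-parameter model. The model `y = w * x`, the data, and the learning rate below are all assumptions made for illustration.

```python
import random


def run_epoch(data, w, batch_size, lr=0.01):
    """One epoch of mini-batch gradient descent for the model y = w * x.

    Returns the updated weight and the number of parameter updates made.
    """
    data = data[:]        # copy so the caller's list is not reordered
    random.shuffle(data)  # form new batches every epoch
    updates = 0
    for start in range(0, len(data), batch_size):
        batch = data[start:start + batch_size]
        # Gradient of the mean squared error over this batch only.
        grad = sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)
        w -= lr * grad
        updates += 1
    return w, updates


random.seed(0)
# Hypothetical data generated from y = 3x.
data = [(x / 10.0, 3.0 * x / 10.0) for x in range(1, 21)]

for batch_size in (20, 5, 1):
    w, updates = run_epoch(data, w=0.0, batch_size=batch_size)
    print(batch_size, updates, round(w, 3))
```

With the same data and one epoch, a smaller batch size gives more updates, and here the weight ends up closer to the true value 3.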

With parallel computation, a larger batch size may finish one epoch faster.

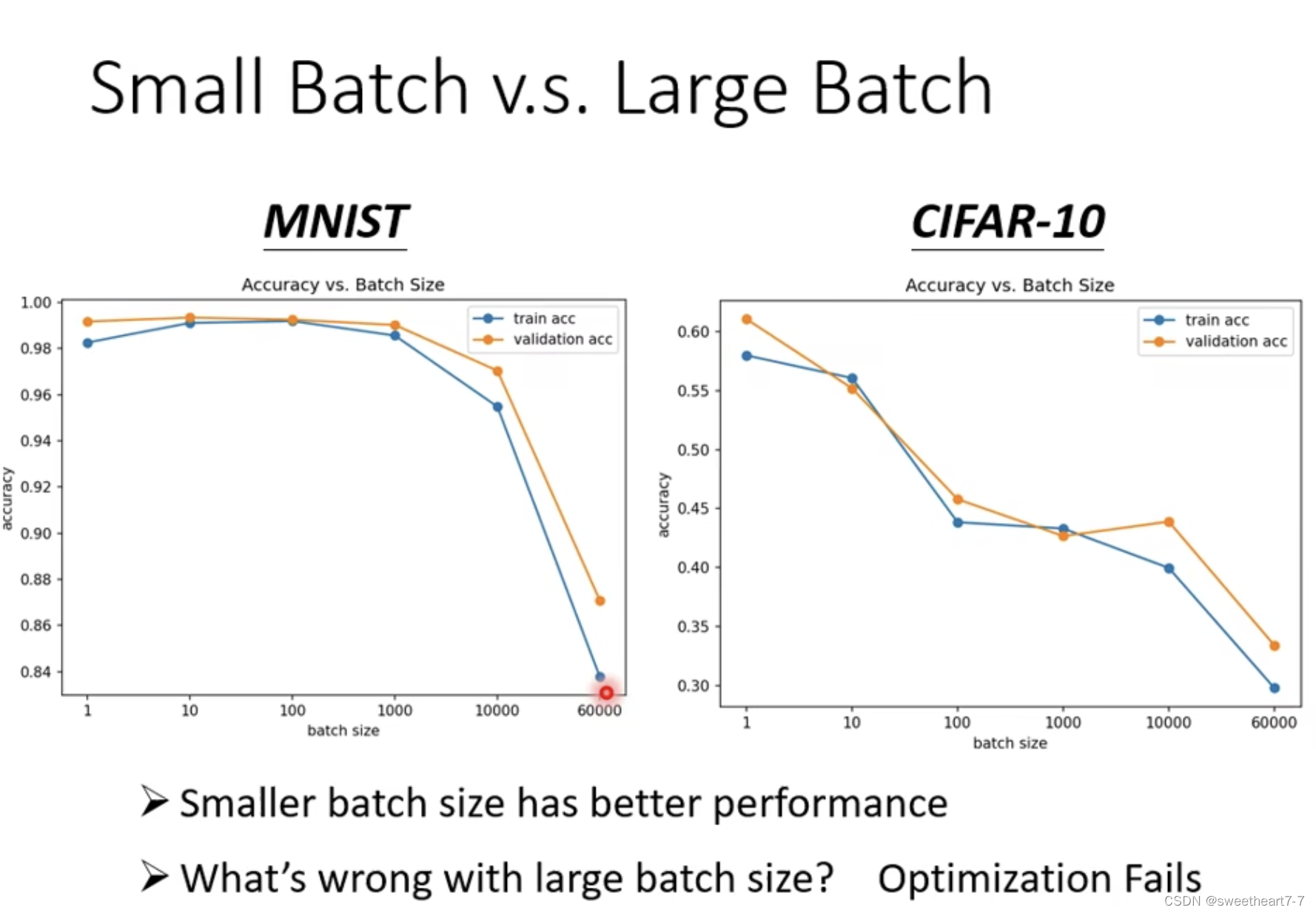

However, the noise that comes with a small batch size may actually give better optimization results.

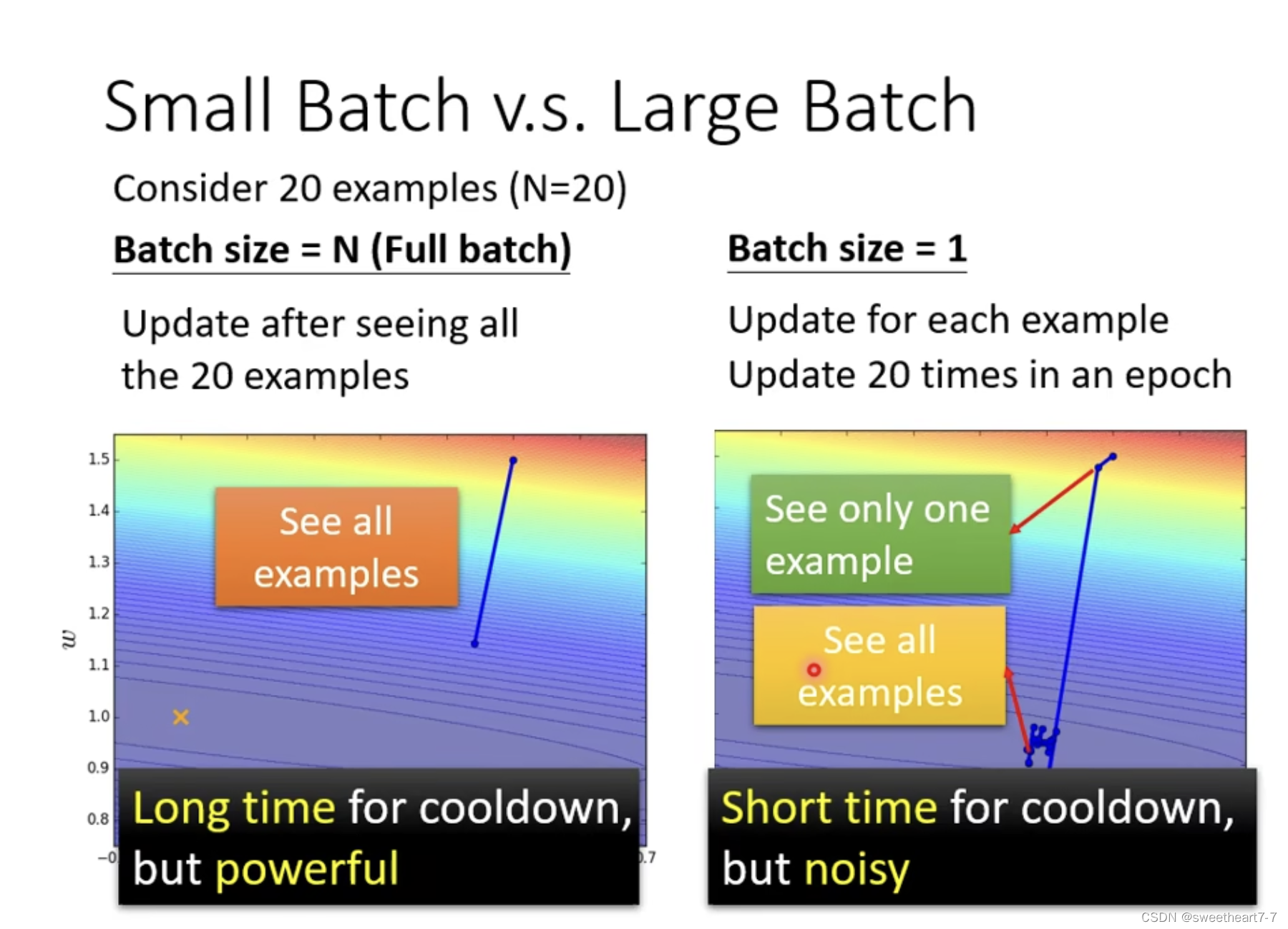

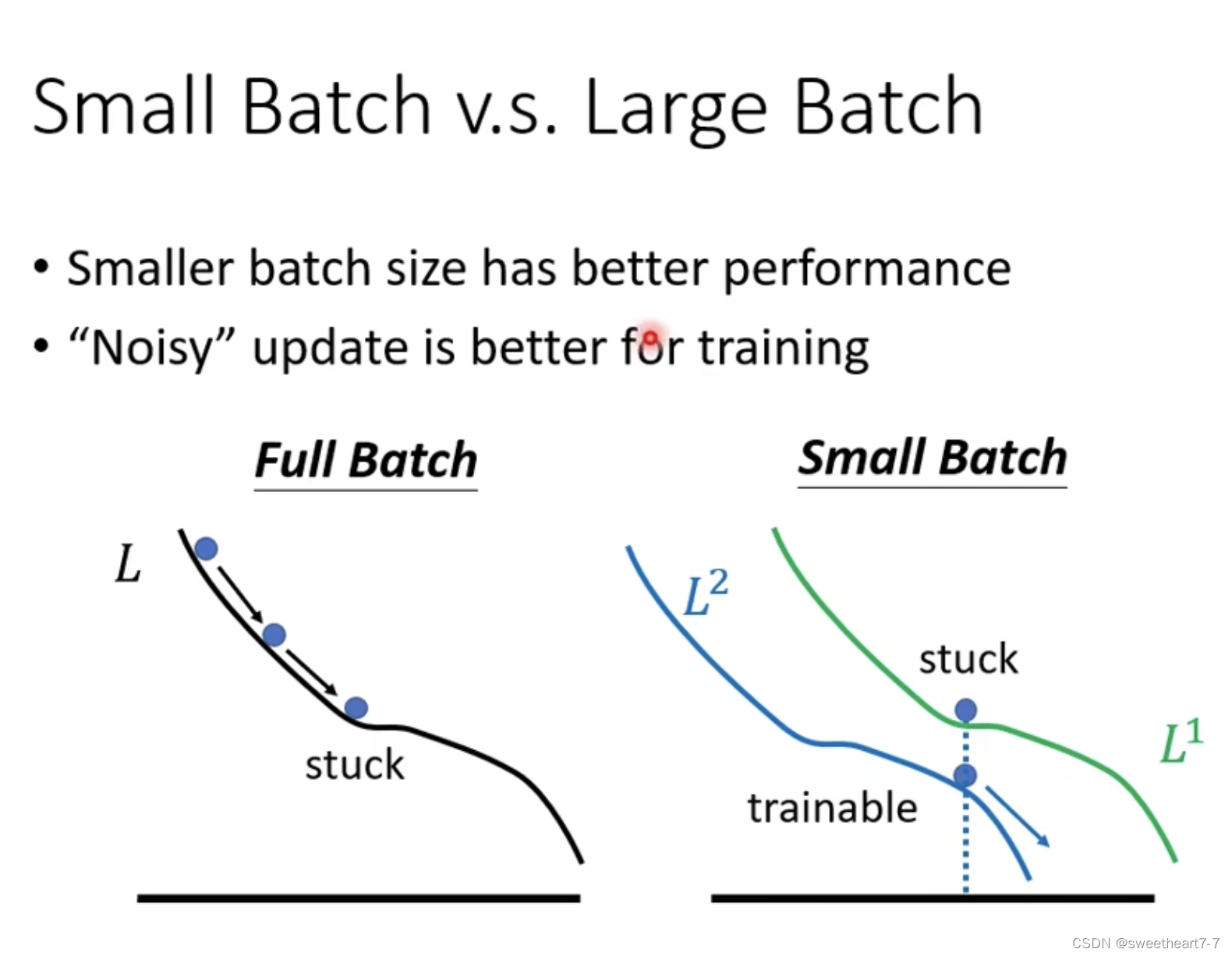

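A possible explanation for why a small batch is better for training:

Each batch has a slightly different loss function, so the corresponding gradients differ too. A point where one batch's gradient is 0 need not trap the next batch.

Why a small batch size is better on testing data:

Local minima also differ in quality. A local minimum on a plain (a flat minimum) is better, and a local minimum in a canyon (a sharp minimum) is worse. A large batch size tends to end up in canyon minima.

Because the update direction with a small batch size is noisy, it is easier to jump out of sharp minima.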

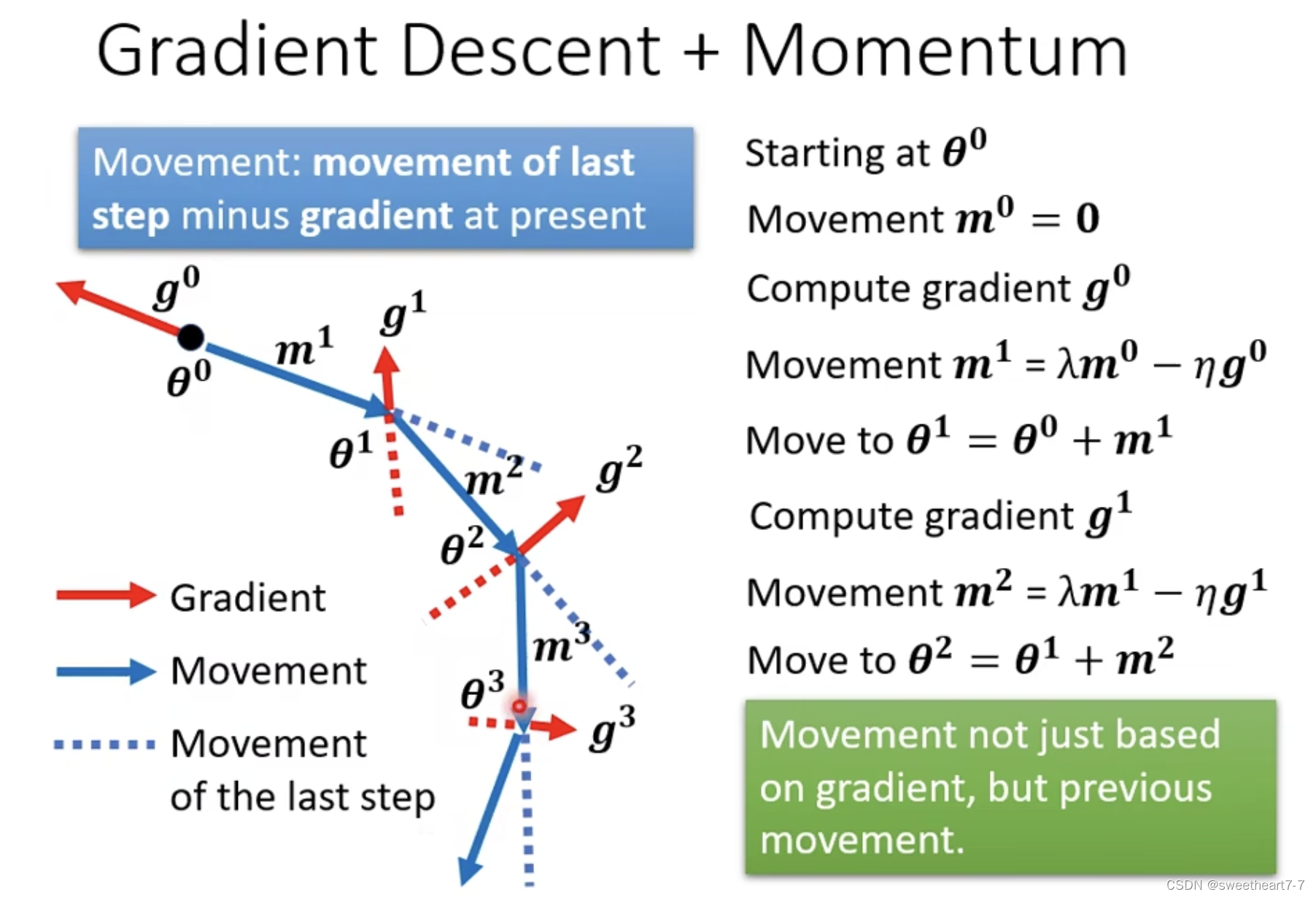

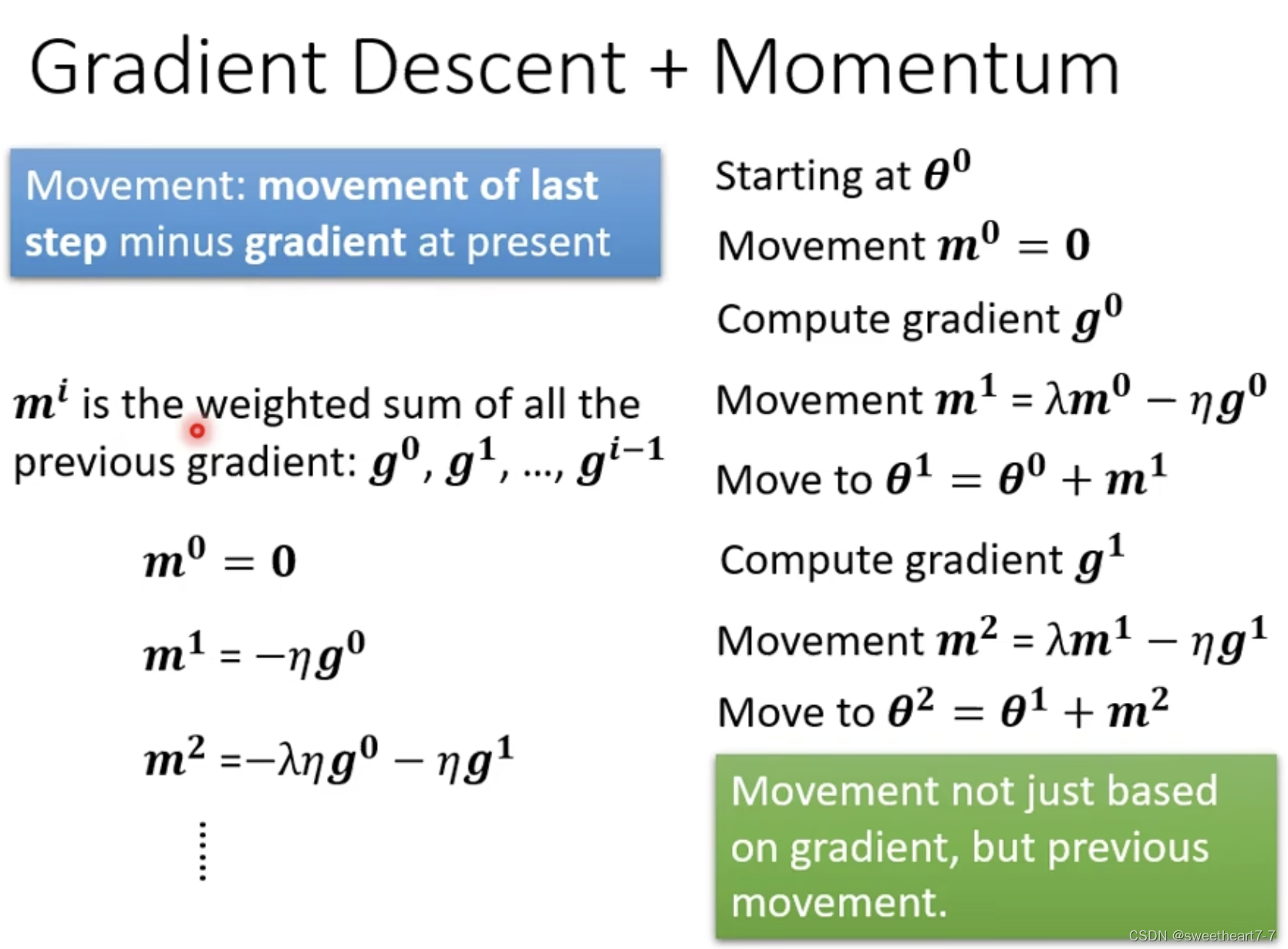

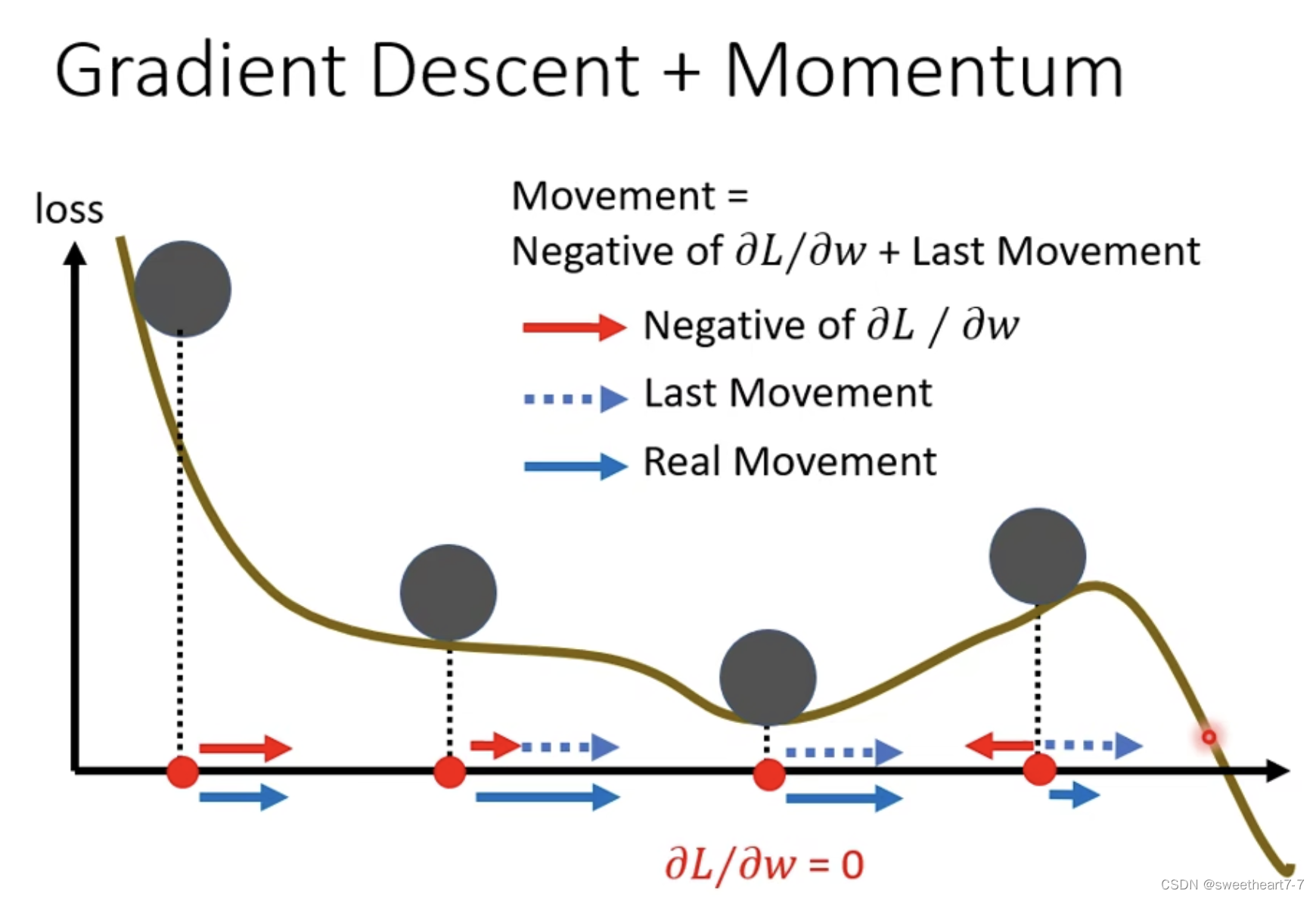

Momentum

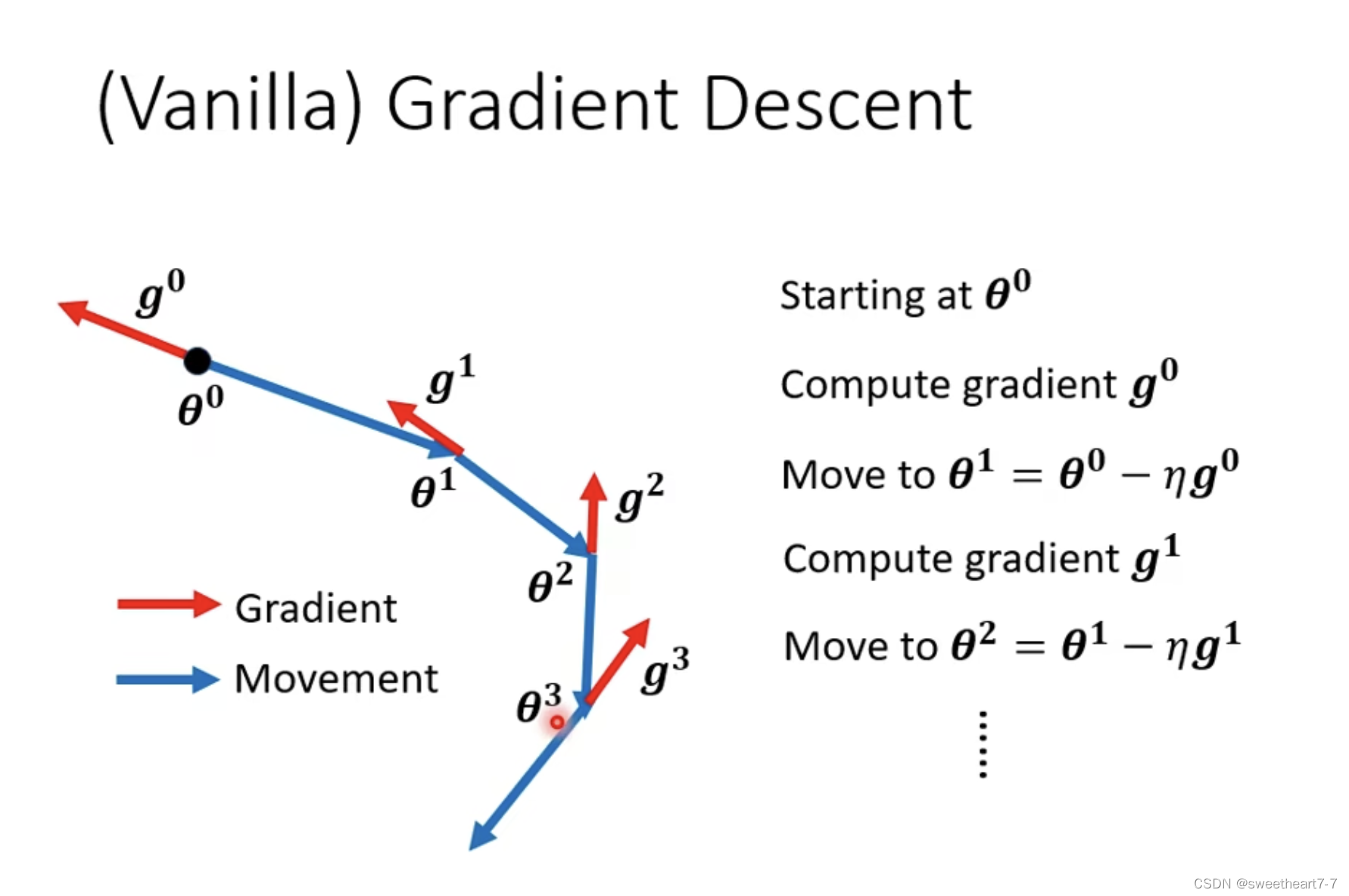

Plain gradient descent updates only in the direction opposite to the gradient.

With momentum, each update moves in the direction given by the sum of the negative of the current gradient and the momentum (the movement of the previous step).

The momentum itself accumulates all previous movements, so it is a weighted sum of all past gradients.
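The momentum update can be sketched for a one-dimensional problem. The names `lr` (learning rate) and `lam` (weight on the previous movement) and the test function are assumptions; the update rule is the standard gradient descent with momentum.

```python
def gradient_descent_with_momentum(grad_fn, theta, lr=0.1, lam=0.9, steps=100):
    """Minimise a 1-D function given its gradient, using momentum.

    movement_t = lam * movement_{t-1} - lr * gradient
    theta_t    = theta_{t-1} + movement_t
    """
    movement = 0.0  # no previous movement before the first step
    for _ in range(steps):
        grad = grad_fn(theta)
        movement = lam * movement - lr * grad
        theta = theta + movement
    return theta


def grad_quadratic(theta):
    """Gradient of the hypothetical loss (theta - 2)^2."""
    return 2.0 * (theta - 2.0)


theta_final = gradient_descent_with_momentum(grad_quadratic, theta=0.0, steps=300)
print(theta_final)
```

Unrolling the recursion shows that `movement` is a weighted sum of every past gradient, with older gradients scaled down by powers of `lam`.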

Adaptive Learning Rate

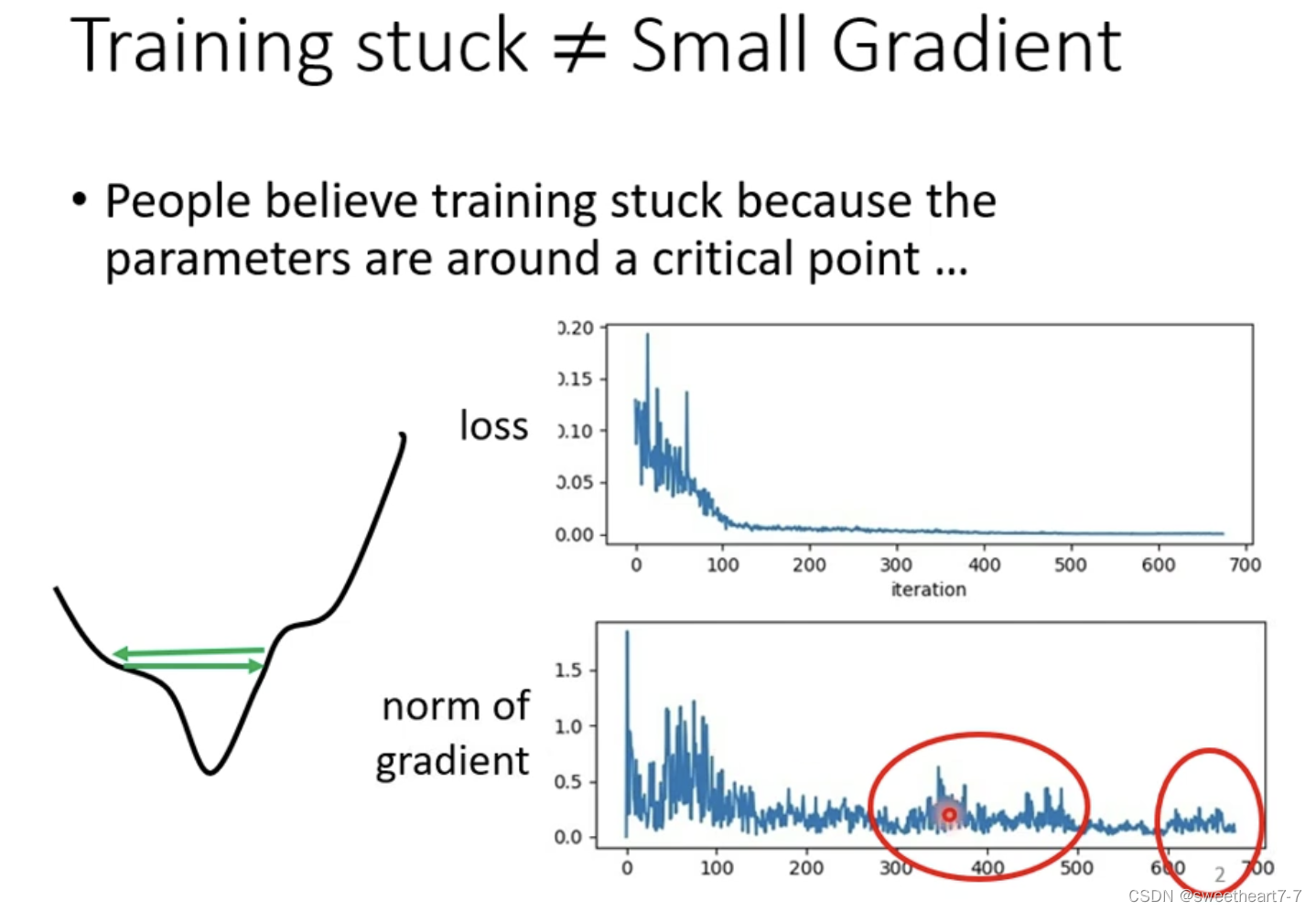

When the loss stops decreasing, training is not necessarily stuck at a critical point (actually reaching a critical point is hard).

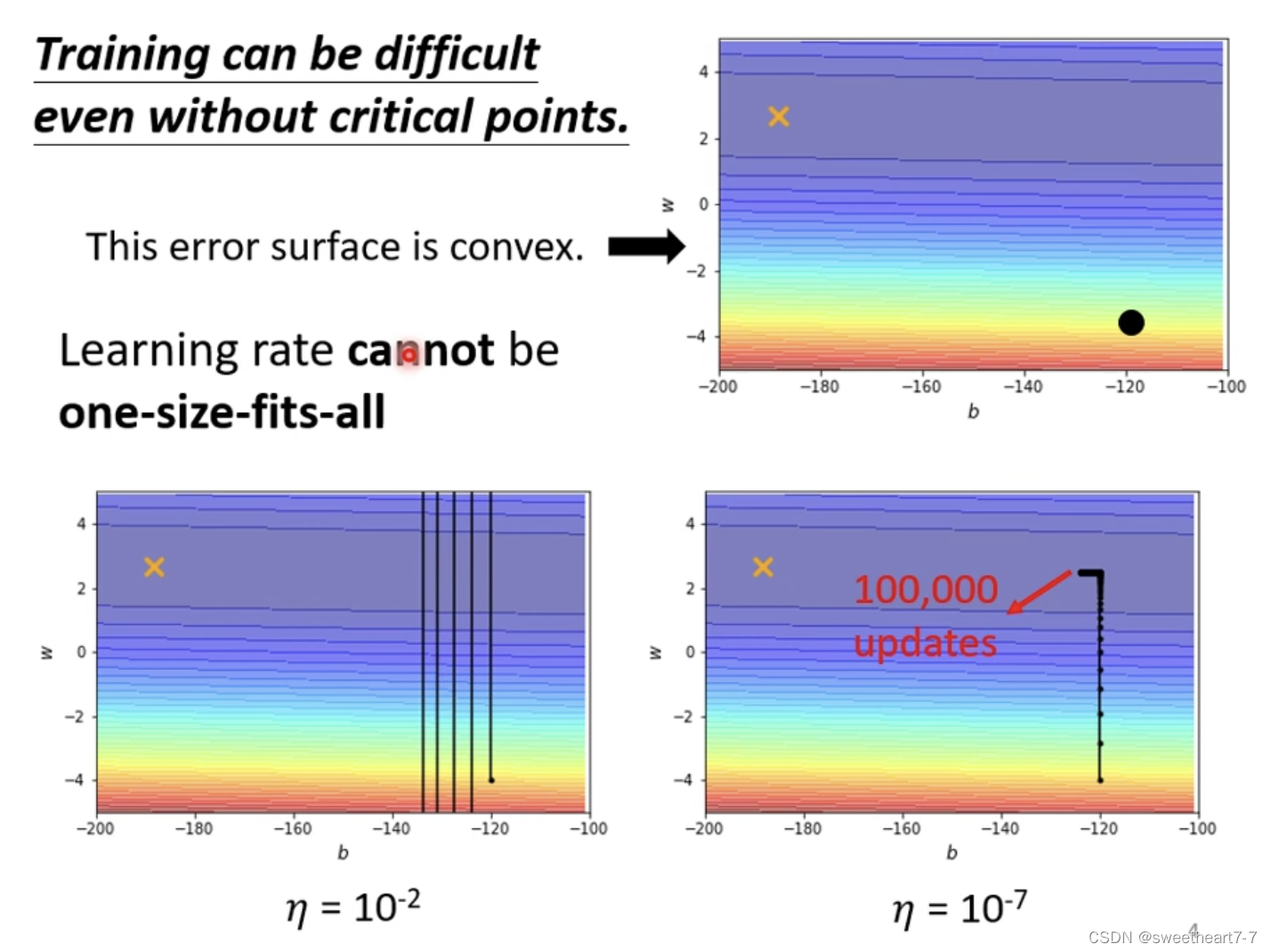

When the learning rate is a fixed value, the two problems in the picture above can occur: oscillation, and progress that starts normally and then becomes very slow.

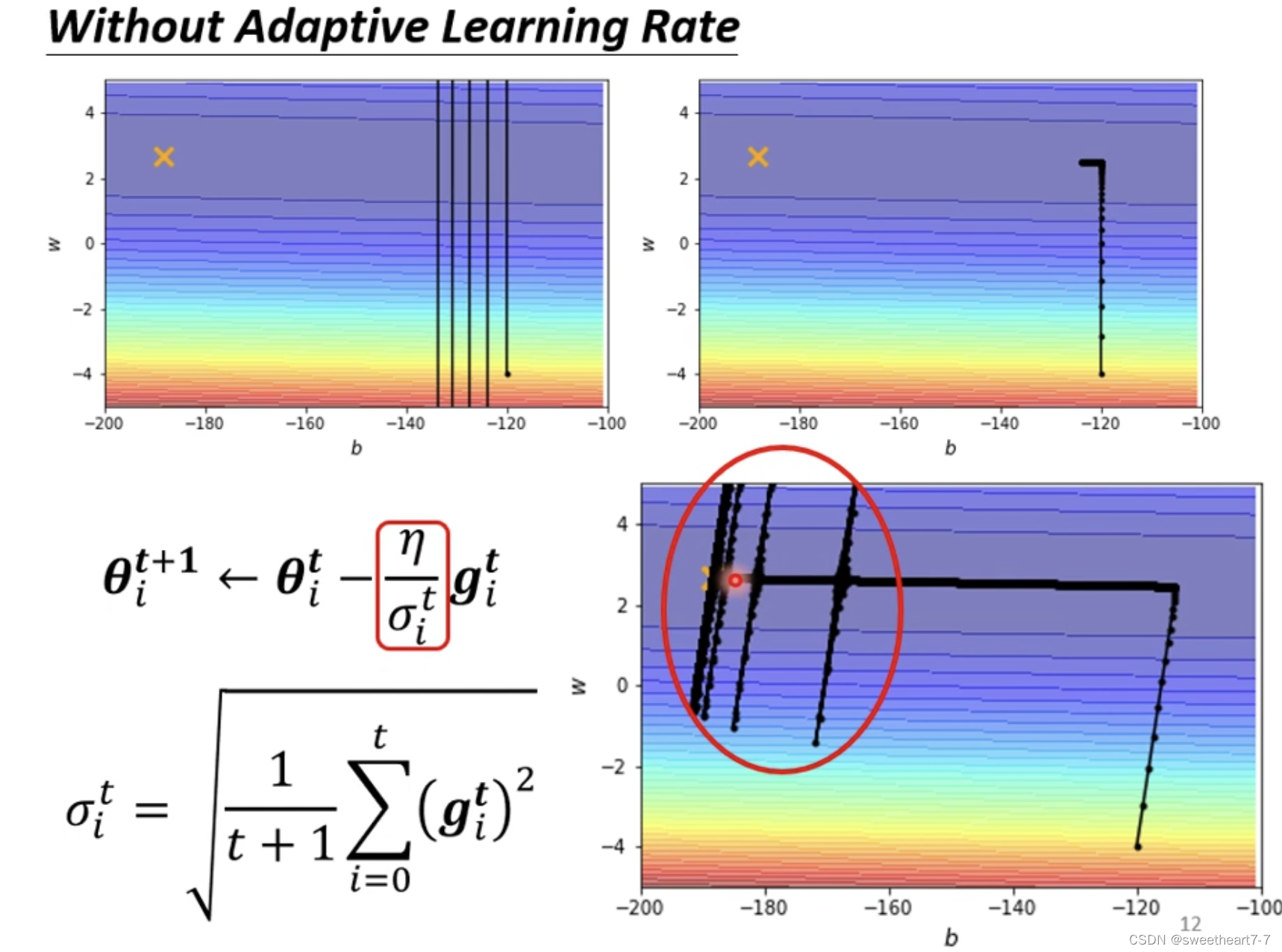

We need to modify the gradient descent formula so that the learning rate is small where the surface is steep and large where it is gentle.

Adagrad

In effect: if the gradients are large, σ is large, and when σ is large the learning rate becomes small.
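A minimal sketch of this idea, assuming the root-mean-square form of Adagrad, where σ for each parameter is the root mean square of all its gradients so far. The parameter names, `eps`, and the test function are assumptions.

```python
import math


def adagrad(grad_fn, theta, lr=0.1, steps=100, eps=1e-8):
    """Adagrad (root-mean-square form) for a list of parameters."""
    theta = list(theta)  # work on a copy
    sum_sq = [0.0] * len(theta)
    for t in range(1, steps + 1):
        grads = grad_fn(theta)
        for i, g in enumerate(grads):
            sum_sq[i] += g * g
            sigma = math.sqrt(sum_sq[i] / t)  # RMS of all gradients so far
            # Large sigma (steep direction) shrinks the effective learning rate.
            theta[i] -= lr / (sigma + eps) * g
    return theta


def grad_steep_and_gentle(theta):
    """Gradient of the hypothetical loss theta0^2 + 100 * theta1^2."""
    return [2.0 * theta[0], 200.0 * theta[1]]


print(adagrad(grad_steep_and_gentle, [1.0, 1.0], steps=1))
```

The two gradients differ by a factor of 100, yet after the first step both parameters have moved by almost the same amount, because each gradient is divided by its own σ.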

RMSProp

Introduces α to set how much weight the newly computed gradient gets relative to the past gradients.
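The role of α can be seen by tracking σ alone. The sketch assumes the common form $\sigma_t^2 = \alpha \sigma_{t-1}^2 + (1 - \alpha) g_t^2$, in which a smaller α gives the new gradient more weight; the gradient history is hypothetical.

```python
import math


def rmsprop_sigmas(grads, alpha):
    """Return sigma_t for each gradient in the history `grads`."""
    sigmas = []
    sigma_sq = None
    for g in grads:
        if sigma_sq is None:
            sigma_sq = g * g  # first step: sigma is just |g|
        else:
            sigma_sq = alpha * sigma_sq + (1.0 - alpha) * g * g
        sigmas.append(math.sqrt(sigma_sq))
    return sigmas


# Hypothetical history: gentle slope, then a sudden steep gradient.
grad_history = [1.0, 1.0, 1.0, 10.0]
slow = rmsprop_sigmas(grad_history, alpha=0.9)
fast = rmsprop_sigmas(grad_history, alpha=0.1)
print(slow[-1], fast[-1])
```

With the smaller α, σ reacts to the steep gradient more quickly, so the step size is cut back sooner.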

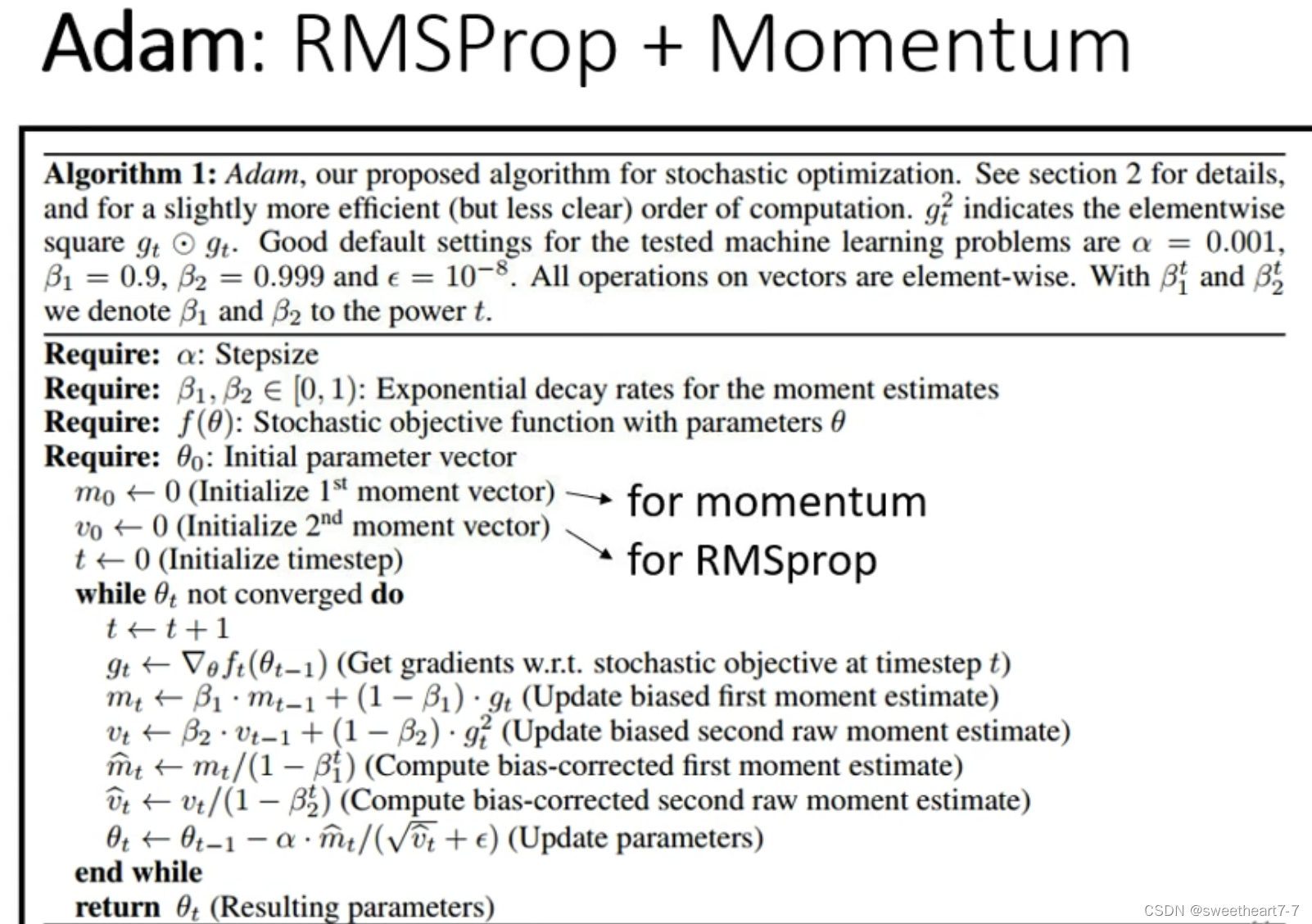

Adam: RMSProp + Momentum

Applying Adagrad:

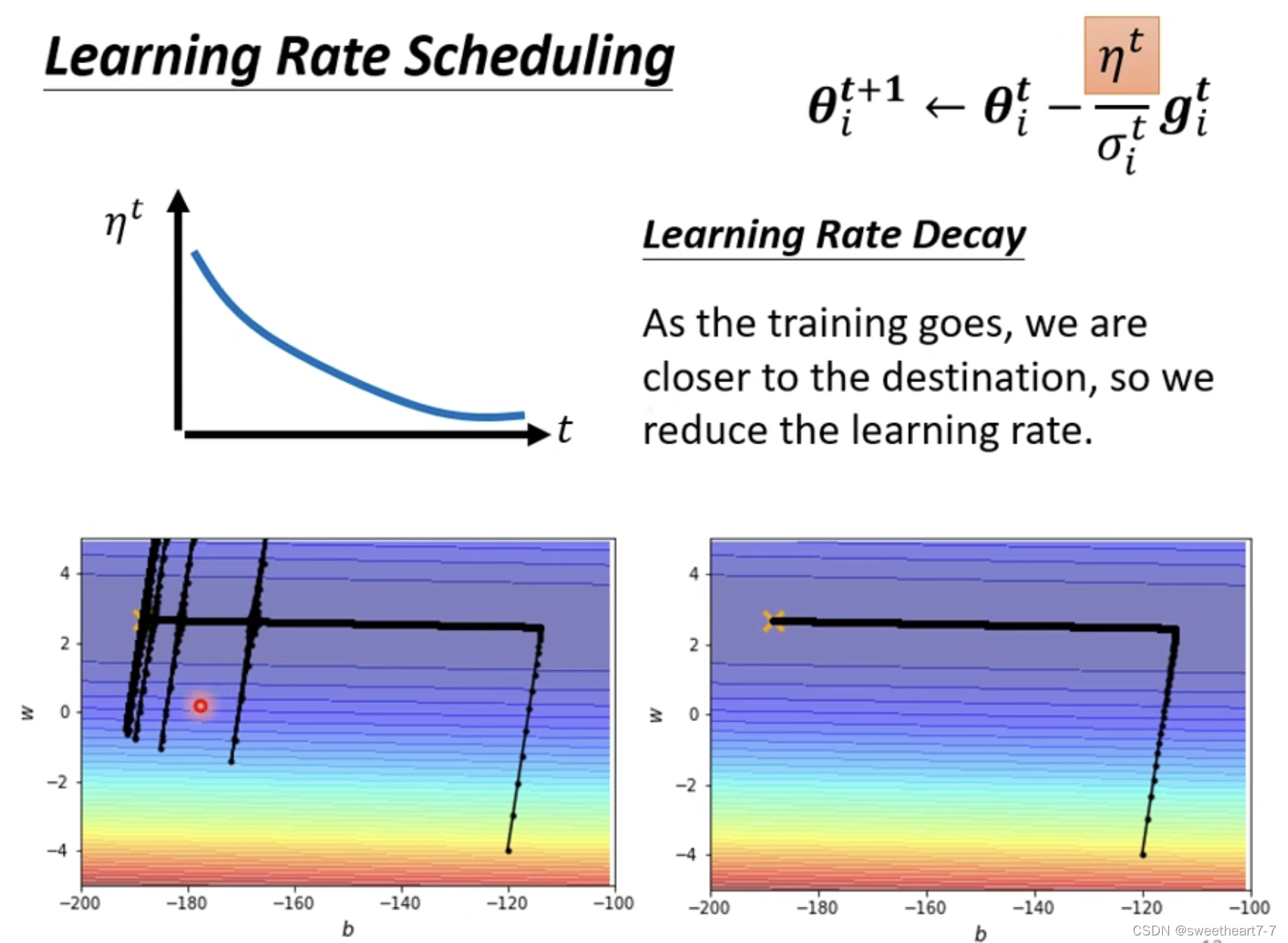

Make η a function of time: as training goes on, η (the learning rate) becomes smaller and smaller.

Warm up (black magic)
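Both ideas can be combined in one schedule. The sketch below assumes a linear warm up followed by a linear decay; the shape and every parameter value are hypothetical, since the notes do not specify them.

```python
def learning_rate(step, base_lr=0.1, warmup_steps=100, total_steps=1000):
    """Linear warm up to base_lr, then linear decay to 0 (assumed shape)."""
    if step < warmup_steps:
        # Warm up: start small and grow toward base_lr.
        return base_lr * (step + 1) / warmup_steps
    # Decay: shrink from base_lr toward 0 as training proceeds.
    remaining = total_steps - step
    return base_lr * max(remaining, 0) / (total_steps - warmup_steps)


for step in (0, 50, 99, 100, 500, 999):
    print(step, learning_rate(step))
```

One common motivation for warm up is that statistics such as σ are unreliable in the first few steps, so small early steps are safer.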

Momentum adds inertia from past movement. The RMS term moderates the step size, which makes the updates smoother.

Loss Function

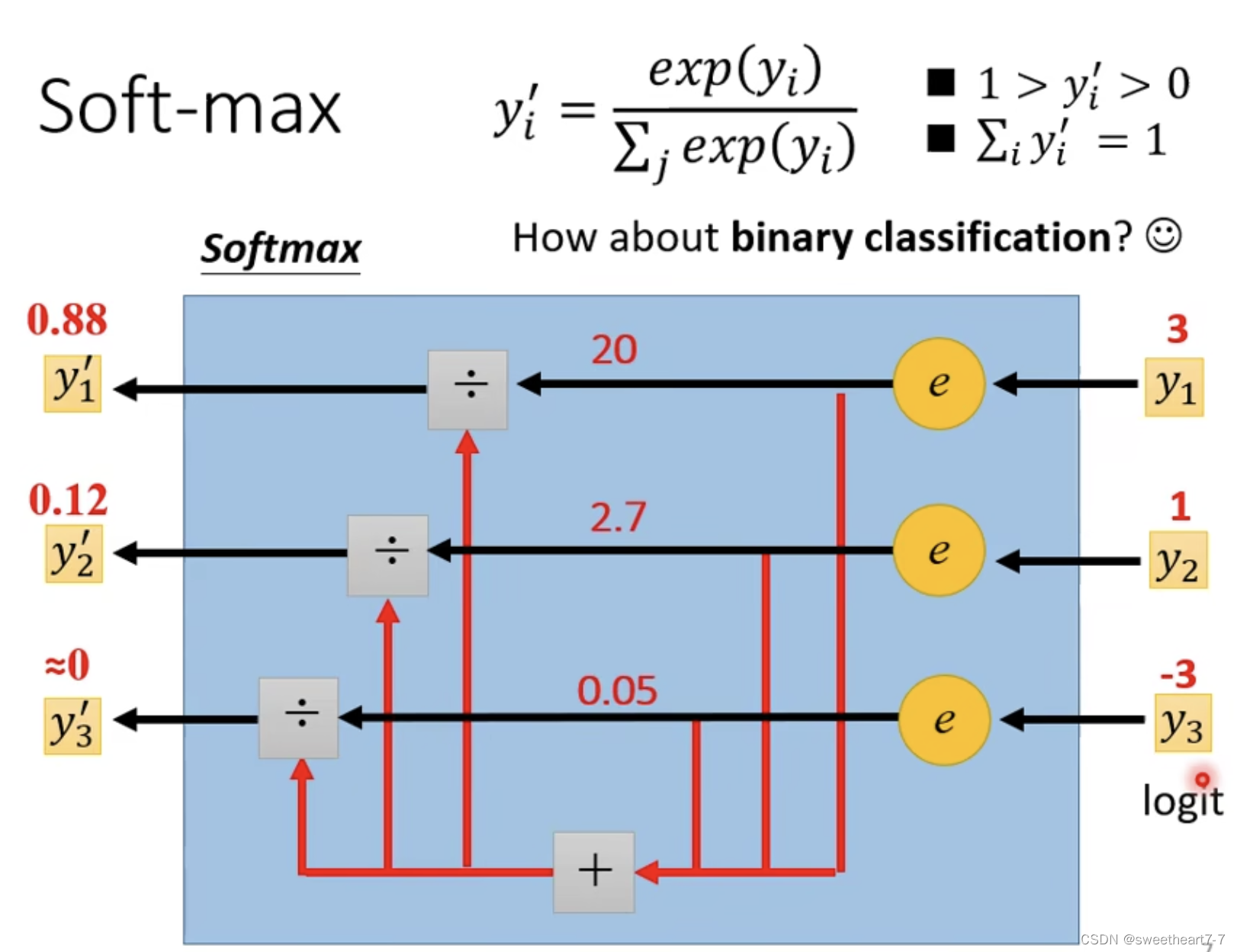

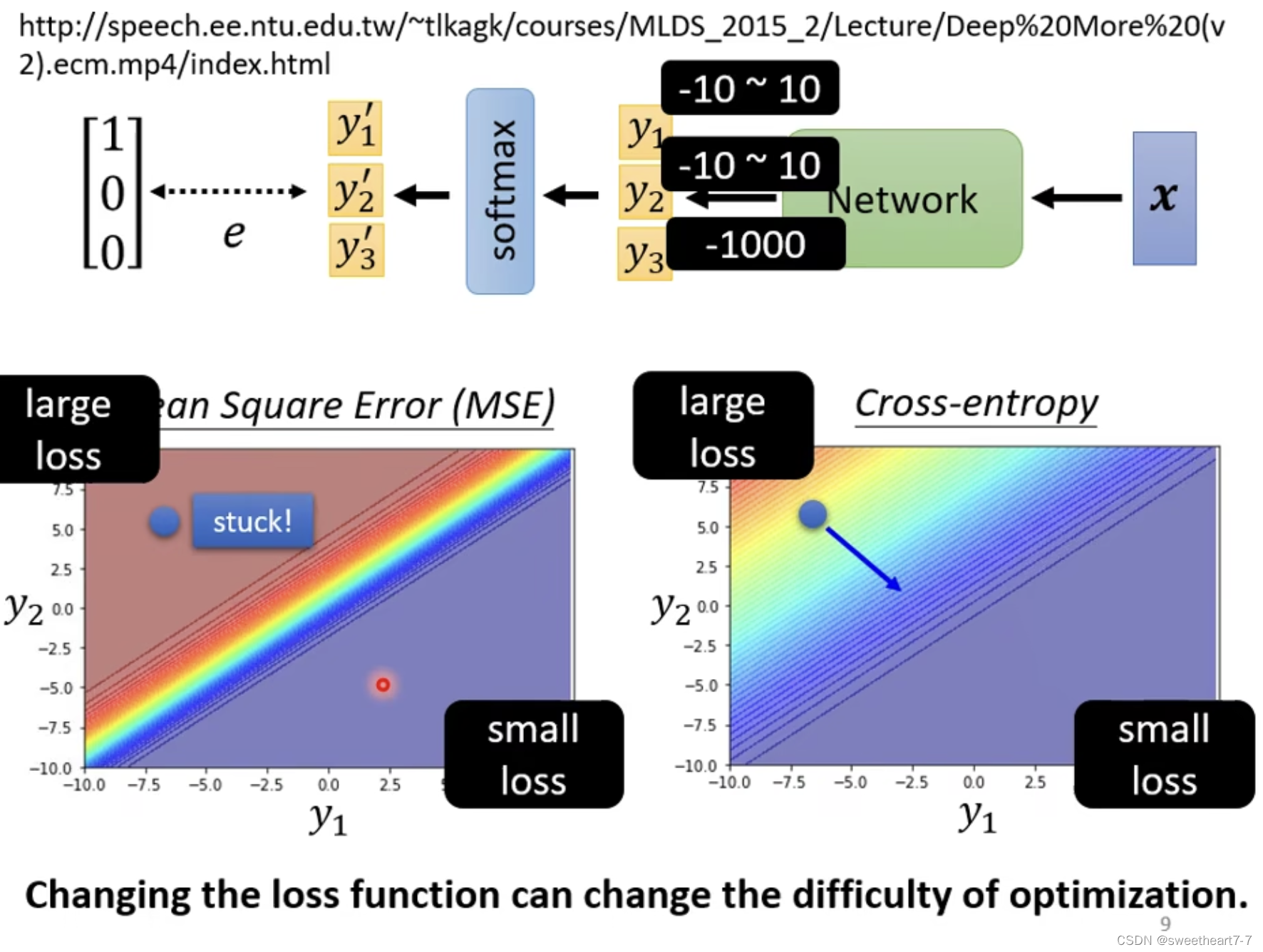

With only two classes, sigmoid is usually used (in this case sigmoid and softmax are equivalent). With more than two classes, softmax is used.
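The two-class equivalence can be checked numerically: softmax over two logits gives the same probability as sigmoid applied to their difference. The logit values below are arbitrary.

```python
import math


def softmax(logits):
    """Softmax over a list of logits."""
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(z):
    """Logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-z))


for z1, z2 in [(2.0, -1.0), (0.3, 0.3), (-4.0, 1.5)]:
    p_softmax = softmax([z1, z2])[0]
    p_sigmoid = sigmoid(z1 - z2)
    print(p_softmax, p_sigmoid)
```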

Minimizing cross-entropy is equivalent to maximizing likelihood.

Using mean square error on a classification problem may leave training stuck at a critical point.
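The difference shows up in the gradients when the network is confidently wrong. The sketch below assumes both losses are applied to softmax outputs and compares their gradients with respect to the logits; the logit values are hypothetical.

```python
import math


def softmax(logits):
    """Softmax over a list of logits."""
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy_grad(logits, target):
    """Gradient of -log p[target] with respect to the logits: p - one_hot."""
    probs = softmax(logits)
    return [p - (1.0 if k == target else 0.0) for k, p in enumerate(probs)]


def mse_grad(logits, target):
    """Gradient of sum_i (p_i - y_i)^2 with respect to the logits."""
    probs = softmax(logits)
    errors = [p - (1.0 if i == target else 0.0) for i, p in enumerate(probs)]
    grads = []
    for k in range(len(logits)):
        # Chain rule with dp_i/dz_k = p_i * (delta_ik - p_k).
        grads.append(sum(
            2.0 * errors[i] * probs[i] * ((1.0 if i == k else 0.0) - probs[k])
            for i in range(len(logits))
        ))
    return grads


bad_logits = [-10.0, 10.0]  # confidently wrong: the target is class 0
print(cross_entropy_grad(bad_logits, target=0))
print(mse_grad(bad_logits, target=0))
```

Cross-entropy still produces a large gradient at this point, while the mean square error gradient is close to 0 even though the loss is large, so gradient descent barely moves.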
