A common error in a web crawler

2022-04-21 11:49:00 【Python advanced】

Hello everyone, I'm Pippi.

1. Preface

A few days ago, a fan called 【Rain is rain】 in the Python Silver exchange group asked a question about a Python web crawler. I'm sharing it here so we can all learn from it.

The question was as follows:

[Screenshots of the question]

2. Solution process

The first suspicion is that the structure of the target page has changed. If data is extracted with an xpath selector that no longer matches, the query returns an empty list, and indexing that list raises "list index out of range".
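To see why a non-matching selector produces this error, here is a minimal sketch that uses only the standard library. `xml.etree.ElementTree` stands in for lxml here (its `findall` supports a small XPath subset), and both the HTML snippet and the helper name `first_text` are made up for illustration.

```python
import xml.etree.ElementTree as ET

# A tiny, well-formed stand-in for a recipe page (hypothetical markup).
PAGE = '<html><body><h2 id="steps">Steps for braised pork</h2></body></html>'


def first_text(root, path, default=""):
    """Return the stripped text of the first match, or `default` if nothing matches."""
    matches = root.findall(path)
    if not matches:
        return default
    return (matches[0].text or "").strip()


root = ET.fromstring(PAGE)

# The selector matches, so indexing [0] is safe here.
print(root.findall('.//h2[@id="steps"]')[0].text)

# A selector that matches nothing returns an empty list,
# and indexing it raises IndexError: list index out of range.
try:
    root.findall('.//h2[@id="missing"]')[0]
except IndexError as exc:
    print("IndexError:", exc)

# The helper returns a default value instead of raising.
print(repr(first_text(root, './/h2[@id="missing"]')))
```

Checking for an empty result before indexing avoids the crash, but an empty result is still a signal that either the selector or the fetched page is not what was expected.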

【Python Initiate】 suggested wrapping the extraction in try/except so the crawler would not crash. That avoids the error, but the data still does not come back, which is a real headache.
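A try/except that only suppresses the error keeps the crawler running but throws away the information needed to find the cause. One possible refinement, sketched below, is to record the URL and the exception type for every failure. The names `extract_first` and `crawl`, the recipe URLs, and the fake "xpath results" are all hypothetical; they stand in for real responses so the sketch runs without network access.

```python
def extract_first(results):
    """Mimic `xpath(...)[0]`: raises IndexError when nothing matched."""
    return results[0]


def crawl(pages):
    """Process (url, xpath_results) pairs and collect failures with context."""
    titles, failures = [], []
    for url, results in pages:
        try:
            titles.append(extract_first(results))
        except IndexError as exc:
            # Keep the URL next to the error so a bad link is easy to spot.
            failures.append((url, type(exc).__name__))
    return titles, failures


titles, failures = crawl([
    ("https://www.xiachufang.com/recipe/1/", ["Dish A"]),
    ("https://www.xiachufang.com//recipe/2/", []),  # a malformed link
])
print(titles)
print(failures)
```

Printing the failing URL is often enough to notice that the link itself is wrong rather than the selector.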

Later that afternoon, 【Python Initiate】 found the cause while running the code himself.

The URL was built incorrectly: it contained one extra /, which made the page request fail.
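The effect of the extra slash is easy to reproduce with plain string concatenation. In this sketch the base URL ends with / and the extracted href starts with /, so the joined URL contains //. The recipe path is a made-up example, and `join_url` is a hypothetical helper, not part of the original code.

```python
def join_url(base, path):
    """Join a base URL and a path with exactly one slash between them."""
    return base.rstrip("/") + "/" + path.lstrip("/")


base = "https://www.xiachufang.com/"
href = "/recipe/100000000/"  # hypothetical href as returned by the XPath query

broken = base + href
fixed = join_url(base, href)

print(broken)  # https://www.xiachufang.com//recipe/100000000/
print(fixed)   # https://www.xiachufang.com/recipe/100000000/
```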

After fixing the URL, the code runs. In addition, the detail pages trigger many requests, so remember to add a short sleep between them. The full code is below; interested readers can run it themselves.

    import time

    import requests
    from fake_useragent import UserAgent
    from lxml import etree


    class kitchen(object):
        u = 0  # running count of recipes saved

        def __init__(self):
            # Assumption: listing pages are selected with a ?page=N query string.
            # The original URL had no placeholder, so .format(page) had no effect.
            self.url = "https://www.xiachufang.com/category/40076/?page={}"
            ua = UserAgent(verify_ssl=False)
            self.headers = {'User-Agent': ua.random}

        def get_page(self, url):
            '''Send a request and return the decoded response body.'''
            res = requests.get(url=url, headers=self.headers)
            html = res.content.decode("utf-8")
            time.sleep(2)  # pause after every request
            return html

        def parse_page(self, html):
            parse_html = etree.HTML(html)
            # Relative links to the recipe detail pages
            image_src_list = parse_html.xpath('//li/div/a/@href')
            for i in image_src_list:
                try:
                    # i already starts with "/", so the base must not end with one
                    url = "https://www.xiachufang.com" + i
                    html1 = self.get_page(url)  # second request: the detail page
                    parse_html1 = etree.HTML(html1)
                    # [0] raises IndexError if the heading is missing
                    num = parse_html1.xpath('.//h2[@id="steps"]/text()')[0].strip()
                    ingredients = parse_html1.xpath('.//td//a/text()')
                    self.u += 1
                    food_info = '''
    No. %s
    Dish name: %s
    Ingredients: %s
    Link: %s
    =================================================================
    ''' % (str(self.u), num, ingredients, url)
                    # Append each recipe to the output file
                    with open('The kitchen menu.txt', 'a', encoding='utf-8') as f:
                        f.write(food_info)
                    print(food_info)
                except Exception as e:
                    print('xpath did not get the content:', e)

        def main(self):
            startPage = int(input("Start page: "))
            endPage = int(input("End page: "))
            for page in range(startPage, endPage + 1):
                url = self.url.format(page)
                html = self.get_page(url)
                self.parse_page(html)
                time.sleep(2.4)
                print("==== Page %s crawled successfully ====" % page)


    if __name__ == '__main__':
        imageSpider = kitchen()
        imageSpider.main()
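The pause between detail-page requests can also be factored into a small helper. The sketch below adds a random jitter to a base delay. The function name `polite_delay`, the delay values, and the injectable `sleep` and `rng` parameters (used so the example can be exercised without actually waiting) are assumptions for illustration and are not part of the original code.

```python
import random
import time


def polite_delay(base=2.0, jitter=1.0, sleep=time.sleep, rng=random.random):
    """Sleep for `base` plus up to `jitter` extra seconds and return the delay used."""
    delay = base + jitter * rng()
    sleep(delay)
    return delay


# Demonstration with a fake sleep function so nothing actually blocks:
# list.append simply records the delay that would have been slept.
recorded = []
used = polite_delay(base=2.0, jitter=1.0, sleep=recorded.append)
print(used, recorded)
```

In the crawler, a call such as `polite_delay()` could replace the fixed `time.sleep(2)` inside `get_page`; whether jitter is worth adding depends on how strict the target site is.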

The results are saved to a txt file:

[Screenshot of the output file]

For this kind of URL-joining problem, urljoin is the recommended approach. Sample code is as follows:

    from urllib.parse import urljoin

    source_url = 'https://www.baidu.com/'
    child_url1 = '/robots.txt'
    child_url2 = 'robots.txt'

    final_url1 = urljoin(source_url, child_url1)
    final_url2 = urljoin(source_url, child_url2)

    print(final_url1)
    print(final_url2)

Both print calls output the same result, https://www.baidu.com/robots.txt.

urljoin combines the two URLs passed to it: any part missing from the second argument is filled in from the first. If the second argument is already a complete URL, the second argument is used as the result.
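A few more cases make the rule concrete. The sketch below uses `urllib.parse.urljoin` from the standard library; the URLs and paths are illustrative only and are not taken from the original crawl.

```python
from urllib.parse import urljoin

base = "https://www.xiachufang.com/category/40076/"

# A path starting with / replaces the whole path of the base URL.
from_root = urljoin(base, "/recipe/1/")

# A relative path is resolved against the base URL's directory.
relative = urljoin(base, "page2.html")

# A complete URL in the second argument is returned unchanged.
absolute = urljoin(base, "https://www.baidu.com/robots.txt")

# A base ending with / joined to a path starting with /
# does not produce a doubled slash.
no_double = urljoin("https://www.xiachufang.com/", "/recipe/1/")

for result in (from_root, relative, absolute, no_double):
    print(result)
```

This is why urljoin is a safer choice than string concatenation when hrefs extracted from a page may or may not start with a slash.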

3. Summary

Hello everyone, I'm Pippi. This article reviewed a common error in a web crawler, walked through the analysis and the code that fixed it, and helped the fan solve the problem. It also introduced a way of joining URLs that is very commonly used in web crawlers.

Finally, thanks to 【Rain is rain】 for asking the question, to 【Python Initiate】 for the analysis and code demonstration, and to 【꯭】, 【Ash · Gioro】, 【Luna】, 【dcpeng】, 【Mr. Yu Liang】 and others for taking part in the discussion.

Friends, go and try it out! If you run into any Python problem while learning, you are welcome to add me as a friend, and I will bring you into the Python learning exchange group to discuss it together.

Copyright notice

This article was written by [Python advanced]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204211109376681.html

边栏推荐

- 会声会影2022发布会声会影2022的8项全新功能介绍(官方)

- 父子类加载顺序 静态 非静态 构造方法

- pycharm中归一化记录

- redis面试问题

- The basic software products of xinghuan science and technology have been fully implemented and blossomed, bringing "Star" momentum to the digital transformation of enterprises

- 2.精准营销实践阿里云odpscmd数据特征工程.

- Internet News: tuojing technology successfully landed on the science and innovation board; Jimi h3s and z6x Pro continue to sell well; HEMA launches "mobile supermarket" in Shanghai

- Path theme -- difference between server and browser

- EL表达式

- 中商惠⺠交易中台架构演进:对 Apache ShardingSphere 的应⽤

猜你喜欢

星环科技基础软件产品全面落地开花,为企业数字化转型带来“星”动能

Solution to the display of the name of xftp file

【招聘测评题】中的(行测)图形推理题基本逻辑总结(附例题)

教你轻松解决CSRF跨站请求伪造攻击

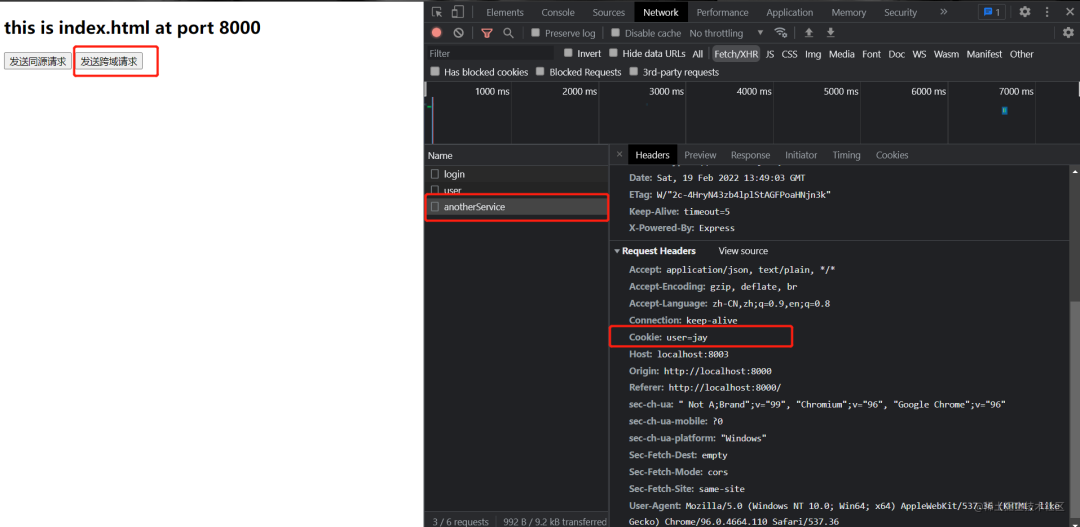

How to carry cookies in cross domain requests?

精彩联动!OpenMLDB Pulsar Connector原理和实操

GET 与 POST请求

离线强化学习(Offline RL)系列4:(数据集) 经验样本复杂度(Sample Complexity)对模型收敛的影响分析

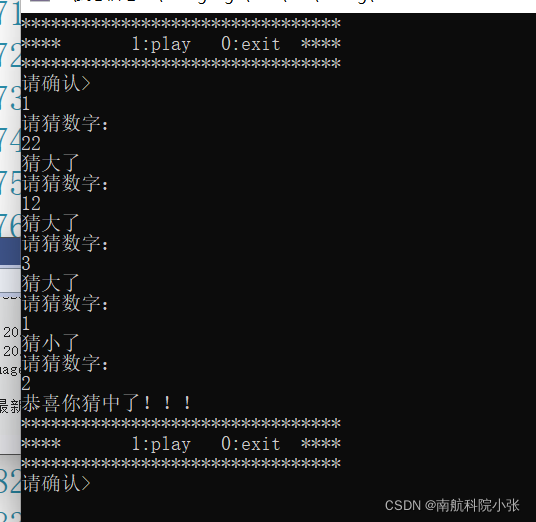

A small game of guessing numbers

Sentinelsat package introduction

随机推荐

基于SSM开发的医院住院管理信息系统(HIMS)-毕业设计-附源码

Hospital inpatient management information system (HIMS) developed based on SSM - graduation design - with source code

L2-004 这是二叉搜索树吗? (25 分)

Cloud native Daas Service - a brief introduction to distributed object storage

redis面试问题

L2-001 emergency rescue (25 points) (Dijkstra comprehensive application)

MQ related processes and contents

【MySQL】对JSON类型字段数据进行提取和查询

How PHP removes array keys

打开应用出现 “需要使用新应用一打开此ms-gamingoverlay链接”

计算整数n位和(C语言)

DR-AP6018-A-wifi6-Access-Point-Qualcomm-IPQ6010-2T2R-2.5G-ETH-port-supporting-5G-celluar-Modem-aluminum-body.

华为云MySQL云数据库,轻松助力数据上云

Leetcode daily question: 824 Goat Latin

PHP 零基础入门笔记(11):字符串 String

无线运维的起源与项目建设思考

分库和分表

AES encryption and decryption with cryptojs

把數組字典寫入csv格式

【招聘测评题】中的(行测)图形推理题基本逻辑总结(附例题)