
Forward Propagation and Back Propagation

2022-08-08 09:33:00 【ZhangJiQun & MXP】

Why use gradient descent to optimize neural network parameters?

Backpropagation (used to optimize neural network parameters): The error computed by the loss function is propagated backward through the network to obtain the gradient of the loss with respect to each parameter, and these gradients guide the update of the deep network's parameters.

The reason for using backpropagation: A deep network is a stack of many linear and nonlinear layers, and each nonlinear layer can be regarded as a nonlinear function (the nonlinearity comes from the activation function). The entire deep network can therefore be regarded as one composite nonlinear multivariate function, and backpropagation is the chain rule applied to that composite function, layer by layer.

Our ultimate goal is for this nonlinear function to map inputs to outputs well, that is, to find parameters that minimize the loss function. The problem therefore becomes one of finding the minimum of a function, and gradient descent is a natural way to solve it.
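As a minimal sketch of the two ideas together, the example below trains a tiny network y = w2 * tanh(w1 * x) on a single sample. The network shape, the squared-error loss, the learning rate, and all names (`loss_and_grads`, `train`) are assumptions chosen for illustration and do not come from the original article. The backward pass computes the gradients with the chain rule, and gradient descent uses them to reduce the loss.

```python
import math


def loss_and_grads(x, target, w1, w2):
    """Forward and backward pass for y = w2 * tanh(w1 * x) with squared error."""
    # Forward pass: linear layer -> tanh -> linear layer -> loss
    h = math.tanh(w1 * x)
    y = w2 * h
    loss = 0.5 * (y - target) ** 2

    # Backward pass: chain rule from the loss back to each weight
    dloss_dy = y - target
    grad_w2 = dloss_dy * h
    grad_w1 = dloss_dy * w2 * (1.0 - h ** 2) * x  # tanh'(z) = 1 - tanh(z)^2
    return loss, grad_w1, grad_w2


def train(x, target, w1, w2, lr=0.1, steps=500):
    """Plain gradient descent: move each weight against its gradient."""
    for _ in range(steps):
        _, grad_w1, grad_w2 = loss_and_grads(x, target, w1, w2)
        w1 -= lr * grad_w1
        w2 -= lr * grad_w2
    return w1, w2


# Hypothetical sample and starting weights
initial_loss, _, _ = loss_and_grads(1.0, 0.5, w1=0.3, w2=0.2)
w1, w2 = train(1.0, 0.5, w1=0.3, w2=0.2)
final_loss, _, _ = loss_and_grads(1.0, 0.5, w1, w2)
print(initial_loss, final_loss)
```

The printed final loss should be much smaller than the initial one. Real networks apply the same two steps to millions of parameters, usually through an automatic differentiation library rather than hand-written derivatives.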

What are the effects of vanishing and exploding gradients?

For example, in a simple neural network with three hidden layers, when the gradient vanishes, the hidden layer closest to the output layer still receives a relatively normal gradient, so its weights update normally. The closer a layer is to the input layer, however, the smaller its gradient becomes, so its weights update slowly or stop updating altogether. As a result, training effectively learns only the later layers, as if the network were a shallow one. When the gradient explodes, the opposite happens: the layers near the input receive very large gradients, so their weight updates become unstable and the loss can diverge.

Reason

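Vanishing and exploding gradients have essentially the same cause: the multiplicative effect in backpropagation when the network is very deep. The gradient reaching an early layer is a product of one factor per later layer, so if those factors are mostly smaller than 1 the product shrinks exponentially with depth, and if they are mostly larger than 1 it grows exponentially.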
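The multiplicative effect can be illustrated with a rough sketch that treats every layer as contributing the same factor to the gradient. The helper name `gradient_scale`, the depth of 20, and the factors 0.25 and 1.5 are assumptions for illustration only; in a real network the factor differs per layer and depends on both the weights and the activation derivative.

```python
def gradient_scale(per_layer_factor, num_layers):
    """Product of identical per-layer factors along one backward path."""
    scale = 1.0
    for _ in range(num_layers):
        scale *= per_layer_factor
    return scale


# Assumed factors: 0.25 is the largest slope a sigmoid can have,
# 1.5 stands in for layers whose weights amplify the gradient.
vanishing = gradient_scale(0.25, 20)
exploding = gradient_scale(1.5, 20)
print(vanishing, exploding)
```

With these assumed values the first product is far below 1e-10 and the second is in the thousands, even though each individual factor looks harmless.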

Solution

The main solutions to vanishing and exploding gradients are the following:

Switch to activation functions such as ReLU, LeakyReLU, or ELU.

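ReLU: The derivative of the activation function is exactly 1 for positive inputs (and 0 for negative inputs), so the activation itself does not shrink or amplify the gradient as it passes backward through active units.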
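To make the difference concrete, here is a small sketch comparing the product of activation derivatives along one backward path for sigmoid and ReLU. The function names, the depth of 20, and the pre-activation value 0.5 are hypothetical choices for illustration, and the weights are left out so that only the activation's contribution is visible.

```python
import math


def sigmoid_grad(z):
    """Derivative of the sigmoid; at most 0.25, reached at z = 0."""
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)


def relu_grad(z):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if z > 0 else 0.0


def activation_gradient_product(grad_fn, pre_activations):
    """Multiply the activation derivatives met along one backward path."""
    product = 1.0
    for z in pre_activations:
        product *= grad_fn(z)
    return product


# Hypothetical positive pre-activations, one per layer
pre_activations = [0.5] * 20
sigmoid_product = activation_gradient_product(sigmoid_grad, pre_activations)
relu_product = activation_gradient_product(relu_grad, pre_activations)
print(sigmoid_product, relu_product)
```

For positive pre-activations the ReLU product stays at 1, while the sigmoid product collapses toward zero. For negative inputs the ReLU derivative is 0, which is why variants such as LeakyReLU and ELU keep a non-zero slope on that side.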

边栏推荐

猜你喜欢

LabVIEW前面板和程序框图的最大尺寸

Feign application and source code analysis

COMSOL Multiphysics 6.0 software installation package and installation tutorial

What exactly happens after entering the URL in the browser?

LeetCode:第305场周赛【总结】

COMSOL Multiphysics 6.0软件安装包和安装教程

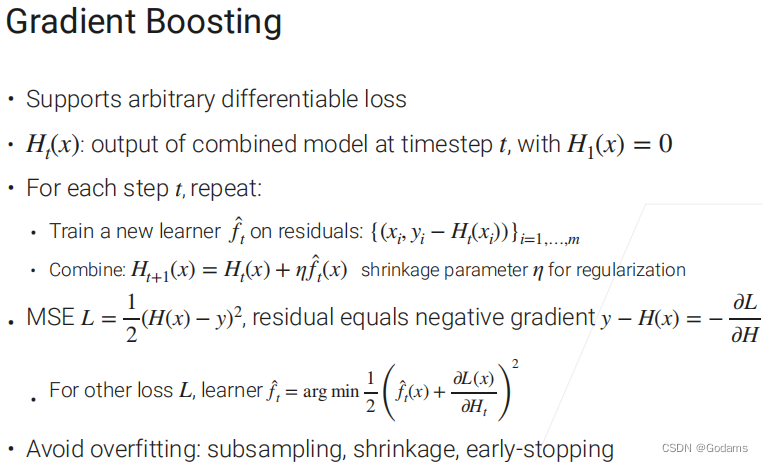

斯坦福21秋季:实用机器学习【第5章】

![Stanford Fall 21: Practical Machine Learning [Chapter 5]](/img/57/8dc286b21e0ae23b25feabeac91b8b.png)

Stanford Fall 21: Practical Machine Learning [Chapter 5]

jupyter lab安装、配置教程

推荐下载软件

随机推荐

C# - var 关键字

mysql-cdc 换2.2.x 版本 怎么读不到 数据 咋回事

交换两个整型变量的三种方法

面试官:工作中用过锁么?说说乐观锁和悲观锁的优劣势和使用场景

Recommended download software

Mobile/Embedded-CV Model-2018: MobileFaceNets

Offensive and defensive world - web2

nodeJs--egg框架介绍

Database Tuning: The Impact of Mysql Indexes on Group By Sorting

shell脚本知识记录

01-MQ介绍以及产品比较

实体List转为excel

Classification of software testing

深度解析网易严选和京东的会员体系,建议收藏

LVS负载均衡群集

移动端/嵌入式-CV模型-2017:MobelNets-v1

关于#sql#的问题:kingwow数据库

巧用Prometheus来扩展kubernetes调度器

AI引领一场新的科学革命

Literature Learning (part33)--Clustering by fast search and find of density peaks