
[MindSpore] [8-card distributed training] davinci_model : load task fail, return ret

2022-08-10 03:28:00 【小乐快乐】

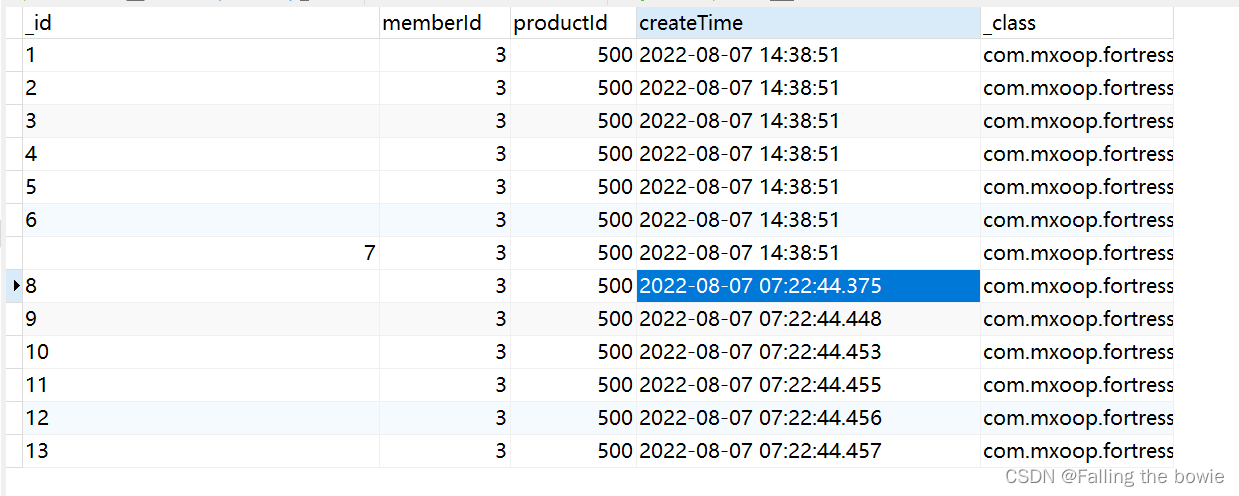

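8-card distributed training fails with an HCCL error.

[Steps and symptoms]

1. The model is a 3D convolution model, with the distributed-training settings configured.

2. An HCCL error is reported: Distribute Task Failed. Note: data processing takes 40 minutes.

1. Error message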

[ERROR] GE(1530736,ffff4809f1e0,python):2021-10-10-19:36:29.319.926 [mindspore/ccsrc/runtime/device/ascend/ge_runtime/task/hccl_task.cc:100] Distribute] davinci_model : load task fail, return ret: 1343225860

[ERROR] DEVICE(1530736,ffff4809f1e0,python):2021-10-10-19:36:29.320.484 [mindspore/ccsrc/runtime/device/ascend/ascend_kernel_runtime.cc:469] LoadTask] Distribute Task Failed, error: mindspore/ccsrc/runtime/device/ascend/ge_runtime/task/hccl_task.cc:100 Distribute] davinci_model : load task fail, return ret: 1343225860

# In file /root/archiconda3/envs/zjc/lib/python3.7/site-packages/mindspore/ops/_grad/grad_nn_ops.py(83)

dx = input_grad(w, dout, get_shape(x))

^

[ERROR] MD(1530736,ffff38fff1e0,python):2021-10-10-19:36:34.542.081 [mindspore/ccsrc/minddata/dataset/util/task_manager.cc:217] InterruptMaster] Task is terminated with err msg(more detail in info level log):Exception thrown from PyFunc. The actual amount of data read from generator 444 is different from generator.len 8400, you should adjust generator.len to make them match.

Line of code : 198

File : /home/jenkins/agent-working-dir/workspace/Compile_Ascend_ARM_CentOS/mindspore/mindspore/ccsrc/minddata/dataset/engine/datasetops/source/generator_op.cc

[WARNING] MD(1530736,ffffa0a34740,python):2021-10-10-19:36:37.914.259 [mindspore/ccsrc/minddata/dataset/engine/datasetops/device_queue_op.cc:73] ~DeviceQueueOp] preprocess_batch: 140; batch_queue: 0, 0, 0, 0, 0, 0, 0, 0, 0, 64; push_start_time: 2021-10-10-19:32:07.652.799, 2021-10-10-19:32:07.695.438, 2021-10-10-19:32:07.732.808, 2021-10-10-19:32:07.779.769, 2021-10-10-19:32:07.817.956, 2021-10-10-19:32:07.866.328, 2021-10-10-19:32:07.905.937, 2021-10-10-19:32:07.931.153, 2021-10-10-19:32:07.936.371, 2021-10-10-19:32:07.945.894; push_end_time: 2021-10-10-19:32:07.653.279, 2021-10-10-19:32:07.695.918, 2021-10-10-19:32:07.733.354, 2021-10-10-19:32:07.780.238, 2021-10-10-19:32:07.818.448, 2021-10-10-19:32:07.866.782, 2021-10-10-19:32:07.906.422, 2021-10-10-19:32:07.931.613, 2021-10-10-19:32:07.936.843, 2021-10-10-19:36:36.347.214.

Traceback (most recent call last):

File "train.py", line 139, in <module>

model.train(config.epoch_size, train_dataset, callbacks=callbacks_list) # , dataset_sink_mode=False

File "/root/archiconda3/envs/zjc/lib/python3.7/site-packages/mindspore/train/model.py", line 649, in train

sink_size=sink_size)

File "/root/archiconda3/envs/zjc/lib/python3.7/site-packages/mindspore/train/model.py", line 439, in _train

self._train_dataset_sink_process(epoch, train_dataset, list_callback, cb_params, sink_size)

File "/root/archiconda3/envs/zjc/lib/python3.7/site-packages/mindspore/train/model.py", line 499, in _train_dataset_sink_process

outputs = self._train_network(*inputs)

File "/root/archiconda3/envs/zjc/lib/python3.7/site-packages/mindspore/nn/cell.py", line 386, in __call__

out = self.compile_and_run(*inputs)

File "/root/archiconda3/envs/zjc/lib/python3.7/site-packages/mindspore/nn/cell.py", line 644, in compile_and_run

self.compile(*inputs)

File "/root/archiconda3/envs/zjc/lib/python3.7/site-packages/mindspore/nn/cell.py", line 631, in compile

_executor.compile(self, *inputs, phase=self.phase, auto_parallel_mode=self._auto_parallel_mode)

File "/root/archiconda3/envs/zjc/lib/python3.7/site-packages/mindspore/common/api.py", line 531, in compile

result = self._executor.compile(obj, args_list, phase, use_vm, self.queue_name)

RuntimeError: mindspore/ccsrc/runtime/device/ascend/ascend_kernel_runtime.cc:469 LoadTask] Distribute Task Failed, error: mindspore/ccsrc/runtime/device/ascend/ge_runtime/task/hccl_task.cc:100 Distribute] davinci_model : load task fail, return ret: 1343225860

# In file /root/archiconda3/envs/zjc/lib/python3.7/site-packages/mindspore/ops/_grad/grad_nn_ops.py(83)

dx = input_grad(w, dout, get_shape(x))

^

# In file /root/archiconda3/envs/zjc/lib/python3.7/site-packages/mindspore/ops/_grad/grad_nn_ops.py(83)

dx = input_grad(w, dout, get_shape(x))

^

2. Info-level log

[ERROR] HCCL(167557,python):2021-10-09-17:53:10.542.719 [p2p_mgmt.cc:218][167557][218555][Wait][P2PConnected]connected p2p timeout, timeout:120 s. local logicDevid:0, remote physic id:4.

[ERROR] HCCL(167557,python):2021-10-09-17:53:10.542.778 [p2p_mgmt.cc:185][167557][218555]call trace: ret -> 16

[ERROR] HCCL(167557,python):2021-10-09-17:53:10.542.788 [comm_factory.cc:1087][167557][218555][Get][ExchangerNetwork]Enable P2P Failed, ret[16]

[ERROR] HCCL(167557,python):2021-10-09-17:53:10.542.796 [comm_factory.cc:240][167557][218555][Create][CommOuter]exchangerNetwork create failed

[ERROR] HCCL(167557,python):2021-10-09-17:53:10.542.805 [hccl_impl.cc:1958][167557][218555][Create][OuterComm]errNo[0x0000000005000006] tag[HcomAllReduce_6629421139219749105_0], created commOuter fail. commOuter[0] is null

[ERROR] HCCL(167557,python):2021-10-09-17:53:10.542.885 [hccl_impl.cc:1734][167557][213677][Create][CommByAlg]CreateInnerComm [0] or CreateOuterComm[6] failed. commType[2]

[ERROR] HCCL(167557,python):2021-10-09-17:53:10.542.921 [hccl_impl.cc:1831][167557][213677]call trace: ret -> 4

[ERROR] HCCL(167557,python):2021-10-09-17:53:10.542.938 [hccl_impl.cc:893][167557][213677][HcclImpl][AllReduce]errNo[0x0000000005000004] tag[HcomAllReduce_6629421139219749105_0],all reduce create comm failed

[ERROR] HCCL(167557,python):2021-10-09-17:53:10.542.946 [hccl_comm.cc:232][167557][213677]call trace: ret -> 4

[ERROR] HCCL(167557,python):2021-10-09-17:53:10.542.957 [hcom.cc:246][167557][213677][AllReduce][Result]errNo[0x0000000005010004] hcclComm all reduce error, tag[HcomAllReduce_6629421139219749105_0],input_ptr[0x1088eaa69200], output_ptr[0x108800000200], count[1132288], data_type[4], op[0], stream[0xfffdb566b530]

[ERROR] HCCL(167557,python):2021-10-09-17:53:10.542.968 [hcom_ops_kernel_info_store.cc:309][167557][213677]call trace: ret -> 4

[ERROR] HCCL(167557,python):2021-10-09-17:53:10.542.983 [hcom_ops_kernel_info_store.cc:191][167557][213677]call trace: ret -> 4

[ERROR] HCCL(167557,python):2021-10-09-17:53:10.542.992 [hcom_ops_kernel_info_store.cc:806][167557][213677][Load][Task]errNo[0x0000000005010004] load task failed. (load op[HcomAllReduce] fail)

[ERROR] GE(167557,ffff2b2ef1e0,python):2021-10-09-17:53:10.543.045 [mindspore/ccsrc/runtime/device/ascend/ge_runtime/task/hccl_task.cc:100] Distribute] davinci_model : load task fail, return ret: 1343225860

[ERROR] DEVICE(167557,ffff2b2ef1e0,python):2021-10-09-17:53:10.543.320 [mindspore/ccsrc/runtime/device/ascend/ascend_kernel_runtime.cc:469] LoadTask] Distribute Task Failed, error: mindspore/ccsrc/runtime/device/ascend/ge_runtime/task/hccl_task.cc:100 Distribute] davinci_model : load task fail, return ret: 1343225860

# In file /root/archiconda3/envs/zjc/lib/python3.7/site-packages/mindspore/ops/_grad/grad_nn_ops.py(83)

dx = input_grad(w, dout, get_shape(x))

[ERROR] MD(167557,ffff6edbf1e0,python):2021-10-09-17:53:15.902.036 [mindspore/ccsrc/minddata/dataset/util/task_manager.cc:217] InterruptMaster] Task is terminated with err msg(more detail in info level log):Exception thrown from PyFunc. The actual amount of data read from generator 547 is different from generator.len 8400, you should adjust generator.len to make them match.

Line of code : 198

File : /home/jenkins/agent-working-dir/workspace/Compile_Ascend_ARM_CentOS/mindspore/mindspore/ccsrc/minddata/dataset/engine/datasetops/source/generator_op.cc

The log indicates that HCCL connection setup timed out.
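To see which peer the connection was waiting on, the timeout line in the info-level log can be pulled out with a regular expression. The following is only a minimal sketch: the pattern is written against the single `connected p2p timeout` line shown in this post, and the names `P2P_TIMEOUT` and `find_p2p_timeouts` are hypothetical helpers, not part of MindSpore or HCCL.

```python
import re

# Pattern modeled on the one HCCL line in this post; other HCCL versions
# may format the message differently (assumption).
P2P_TIMEOUT = re.compile(
    r"connected p2p timeout, timeout:(?P<timeout>\d+) s\. "
    r"local logicDevid:(?P<local>\d+), remote physic id:(?P<remote>\d+)"
)


def find_p2p_timeouts(log_text):
    """Return (timeout_seconds, local_logic_dev_id, remote_physical_id) per match."""
    return [
        (int(m["timeout"]), int(m["local"]), int(m["remote"]))
        for m in P2P_TIMEOUT.finditer(log_text)
    ]


# The line below is copied from the info-level log in this post.
sample = (
    "[ERROR] HCCL(167557,python):2021-10-09-17:53:10.542.719 "
    "[p2p_mgmt.cc:218][167557][218555][Wait][P2PConnected]connected p2p timeout, "
    "timeout:120 s. local logicDevid:0, remote physic id:4."
)
print(find_p2p_timeouts(sample))  # [(120, 0, 4)]
```

Here the process on logical device 0 waited 120 s for the device with physical id 4, so that device's own log is a reasonable place to look next.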

Another card may already have crashed, or the cards may be processing at different speeds. You can set a longer timeout.

Export the environment variable HCCL_CONNECT_TIMEOUT=6000.
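If editing the launch script is inconvenient, the same variable can be set from Python at the very top of the training script. This is a sketch under two assumptions: that the value must be in each training process's environment before HCCL is initialized, and that it is given in seconds (the log above reports the current limit as `timeout:120 s`). The helper name `set_hccl_connect_timeout` is hypothetical.

```python
import os


def set_hccl_connect_timeout(seconds=6000):
    """Set HCCL_CONNECT_TIMEOUT for this process and return the stored value."""
    # Environment variables are strings, so the number is converted explicitly.
    os.environ["HCCL_CONNECT_TIMEOUT"] = str(seconds)
    return os.environ["HCCL_CONNECT_TIMEOUT"]


# Call this before any distributed initialization in train.py
# (that initialization code is not shown in the post).
applied = set_hccl_connect_timeout(6000)
print("HCCL_CONNECT_TIMEOUT =", applied)
```

Each of the 8 processes needs the variable, so exporting it once in the shell that launches them has the same effect.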
