当前位置:网站首页>CS231n: 12 Reinforcement Learning

CS231n: 12 Reinforcement Learning

2022-08-08 17:49:00 【Here_SDUT】

CS231n第十二节:强化学习 本系列文章基于CS231n课程,记录自己的学习过程,所用视频资料为 2017年版CS231n,阅读材料为CS231n官网2022年春季课程相关材料 This section will introduce some reinforcement learning relevant content.

1. 强化学习

1.1 定义

如下图所示,Is the working process of the reinforcement learning.首先,There is a environment,和一个代理,Environment agency first to a state s_t ,Then acting according to the state and output a a_t 给环境.To evaluate environment after accepting this action,Feedback to the agent a bonus r_t ,And the next state s_{t+1} ,So on until the environment is given an end state.Such a model's goal is to get more reward value as much as possible.

1.2 应用

The application of reinforcement learning has a lot of,举几个比较常见的例子:

The car balance issue

目标:Balance is the mobile car pole to make it in above.

状态:The Angle of the pole and horizontal,Rod movement of the angular velocity,小车位置,The car level speed.

动作:For the car to lateral force.

奖励:每一时刻,If directly above the car pole,则得1分.

机器人移动问题

目标:Let the robot learn oneself to move forward

状态:The Angle of the joints and position

动作:Put on the joint torque

奖励:Every moment if a robot to the left forward,则得1分

街机游戏

目标:Get higher score in the game

状态:The current game elements of pixel values

动作:The game's control,Such as before and after around

奖励:Is decided by the rise and fall of every moment game scores

围棋

目标:Win the game

状态:The location of the each piece

动作:The next step should be put in what position

奖励:If the final game victory would have to1分,否则0分

2. 马尔科夫决策过程

2.1 定义

Markov decision process is the mathematical formulation of reinforcement learning,Its accord with markov properties——The current state of the can fully describe the state of the world.

Markov decision process by a tuple containing five elements constitute a:(\mathcal{S}, \mathcal{A}, \mathcal{R}, \mathbb{P}, \gamma),每个元素的含义如下:

- \mathcal{S}:Said a collection of all possible states.

- \mathcal{A}:Said a collection of all possible actions.

- \mathcal{R}:Said a given a pair of(状态,动作)Bonus distribution,即一个从(状态,动作)To reward value mapping.

- \mathbb{P}:状态转移概率,Is given a pair of(状态,动作)Nowadays the distribution of a state.

- \gamma:折扣因子,The reward for the recent and long-term reward a weight between.

2.2 工作方式

- First in the initial stage t=0 时,Environment from the initial state distribution p(s_0) 中进行采样,得到初始状态,即 s_0\sim p(s_0)

- 然后,从 t=0 The whole process has been to the end,重复下述过程:

- Agent according to the current state s_t 选择一个动作 a_t

- Environmental sampling to get a bonus r_t \sim R(.|s_t,a_t)

- Environmental sampling to get the next state s_{t+1}\sim P(.|s_t,a_t)

- Agent receives bonus r_t 和下一个状态 s_{t+1}

其中,定义一个策略 \pi 表示一个从 S 到 A 的函数,Used to indicate in which each state should take action.所以,Goal is to find a strategy \pi^* Make the accumulative discount reward value(即使用 \gamma After the weighted bonus) \sum_{t>0} \gamma^{t} r_{t} 最大.下面举一个简单的例子:

The diagram below the grid,Our state is a grid and the current location,The set of actions to move up and down or so,Each on a mobile will get a-1的奖励值.Our goal is to take the smallest number of the,At any one end(Star grid).As shown in the figure below is two different strategies,即 \pi 函数,One on the left side is completely random strategy,On the right is an optimal strategy.

那么,How can we find such an optimal strategy \pi ^* ,Made to maximize the sum of bonus?首先,我们可以给出 \pi A formal expression:

3. Q-learning

3.1 一些定义

价值函数

对于一个给定的策略 \pi ,We as long as a given initial state s_0 ,Then in turn to produce a sequence s_0,a_0,r_0,s_1,a_1,r_1...

也就是说,给定一个策略 \pi We can calculate the state after s The accumulation of can produce under the expectation of reward value,这就是价值函数:

注意,The value function to evaluate a given strategy \pi 下状态 s 的价值,由策略 \pi 和当前状态 s 所唯一确定.

Q-价值函数 Q-value

Q-Cost function is evaluated for a given strategy \pi 下,在状态 s 下采取动作 a 后,Can bring cumulative reward expectation:

Q-Value function by the strategy \pi 和当前状态 s 和当前动作 a 所唯一确定.

贝尔曼方程 Bellman equation

Now we fixed the current state s 和当前动作 a,The objective is to select the optimal strategy \pi,使得Q-value函数最大,这个最优的Q-valueFunctions are recorded asQ^*:

理解一下 Q^*,In the current state s 下采取动作 a 后,Can achieve the largest cumulative reward expectation.显然 Q^* 仅与 s 和 a 有关.The reinforcement learning method Q-learning 的核心公式——贝尔曼方程,则给出了Q^*Another expression of the:

理解:When we are in the state s 下采取动作 a 后,The environment will feedback to give us a reward r ,And the next moment can transfer state s'(一个集合),And the corresponding action a',We choose our status in the collectionQ-valueBiggest as transfer state,即 Q^{*}(s^{\prime}, a^{\prime}) 最大,That is to say, every time we transfer all select rewards as the next largest state,So we can recursively call Q^* 了.

3.2 Value iteration 算法

Bellman方程中使用 Q-value Give each a case(Is a particular status and action)Indicates the status and action of the next transfer,So as long as obtained 所有的 Q-value ,In order to find the optimal strategy \pi^*.

One of the most simple way of thinking is to behrman equation as an iterative update formula:

即新的Q-value由旧的Q-value得到,All initialQ-value为0.

具体例子可以参考:https://blog.csdn.net/itplus/article/details/9361915

So as long as the number of iteration is enough,Can get all theQ-value值,但是这有个问题,This is the method of expanding is very poor.As a result of the request out allQ-value,So for some task,Such as automatic play games,The status for all pixels,Such a huge calculation is almost impossible.

3.3 神经网络求Q-value

因此,We need to use a function estimator to approximate realQ-value,Usually neural network estimator is a very good function,即:

其中的 \theta 表示神经网络的参数,Using such a formula,Neural network can learn the adjust \theta Make it in a given s 和 a The output value is about Q^* ,To use neural network training requires regulation loss function,由于这是一个回归问题,So naturally we can think of usingL2损失函数:

Below we to the aforementioned arcade games as an example,The goal of first review the arcade games:

- 目标:Get higher score in the game

- 状态:The current game elements of pixel values

- 动作:The game's control,Such as before and after around

- 奖励:Is decided by the rise and fall of every moment game scores

We have the following a network structure,将最近的4Frame game images grayscale change after pretreatment and sampling by some get 84*84*4 的一个输入,Then through two convolution layer extracted features,The use of two output connection layer a 4 维向量,Said input condition,Respectively, the up and down or so four actions Q-value,So we can train the network.

3.4 Experience Replay

But direct training have a problem:数据相关性.In the use of neural network approximating function method in,We modify parameters θ,Because the function is usually continuous,So in order to adjust the parameters of making Q(s,a) 更加接近真实值,也会修改 s Other state within the vicinity of the.For example, we adjust parameters Q(s,a) 变大了,那么如果 s’ 很接近 s,那么 Q(s’,a) 也会变大.Such as in the above game,状态 s Can be represented as a continuous 4 帧的图像,Two consecutive state must be very like(Could mean the difference between a few pixels),And we updatetarget又依赖于 \max _{a^{\prime}} Q\left(s^{\prime}, a^{\prime} ; \theta_{i-1}\right),So it will have a magnified effect,Make all estimate large,It's easy to destabilize the training.于是,提出了 Experience replay 来解决这个问题.

In order to avoid data correlation,它会有一个replay buffer,Used to store some recent (s,a,r,s’) .Training at random fromreplay bufferUniform sampling in a minibatch 的 (s,a,r,s’) 来调整参数 θ.Because is randomly selected,So the data before and after correlation there is unlikely to be.当然,这种方法也有弊端,就是训练的时候是 offline 的形式,无法做到 online 的形式.

3.5 算法流程

The algorithm flow chart of the process is actually in the depth of training a neural network,Because neural network is proved to have the universal approximation ability of,Is able to fit any function;一个 episode 相当于 一个 epoch.

4. Policy Gradients

4.1 定义

上面介绍的Q-learning有个问题就是 Q Function can be very complicated,Such as control the robot to grab objects is a state of high dimension,So for the extraction of each pair of (状态,动作)The corresponding value would be really difficult.但是,Such a goal of strategy is actually very simple——You can just control the robot hand clenched.所以,Whether we can go directly to learning strategies,That is, from a pile of policy set by selecting a best policy,Instead of using the indirect way.首先,We will be parameterized strategy:

4.2 REINFORCE algorithm

在数学上,We can put the value of the above function in another writing:

We can get the following to the conclusion that:

For such a strategy value gradient,有这样的解释:

- 如果 r(\tau) 很高,Then we will improve the probability of our saw action

- 如果 r(\tau) 很高,Then we will reduce the probability that we have seen the action

4.3 Variance reduction

一些方法

使用Baseline

A simple benchmark is the use of so far,All track of constant moving average reward,即:

A better benchmark is pushed up from a state of the probability of an action,If the action should be from the state than we expected values is better.

4.4 Actor-Critic Algorithm

4.5 Recurrent Attention Model

4.6 AlphaGo

边栏推荐

猜你喜欢

随机推荐

记录贴:pytorch学习Part5

记录贴:pytorch学习Part3

How big is 1dp!

记录贴:pytorch学习Part2

XDOJ-统计正整数个数

Vscode LeetCode 教程

为什么MySQL的主键查询这么快

JVM内存模型和结构详解(五大模型图解)

记录贴:pytorch学习Part1

1dp到底多大!

C#异步和多线程

一甲子,正青春,CCF创建六十周年庆典在苏州举行

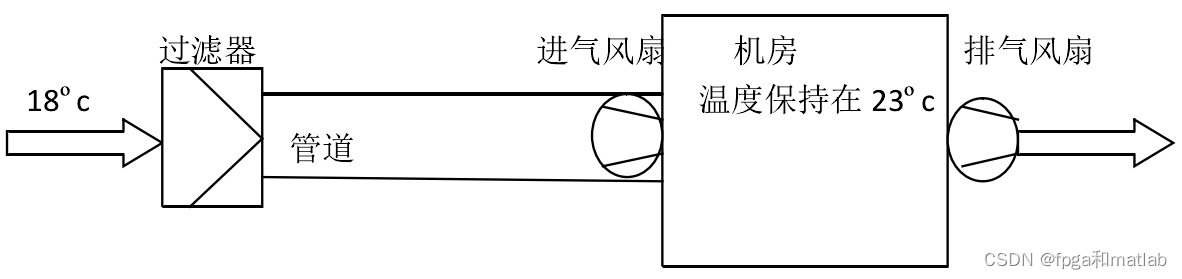

差分信号简述

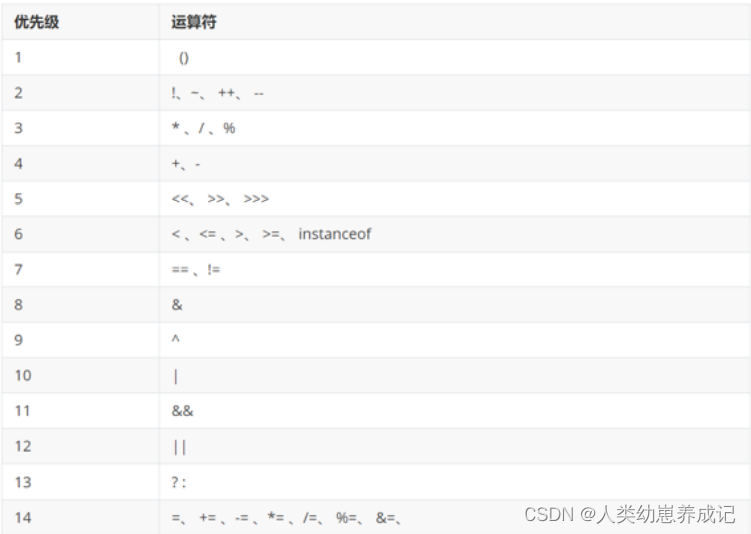

彻底理解 volatile 关键字及应用场景,面试必问,小白都能看懂!

【20210923】Choose the research direction you are interested in?

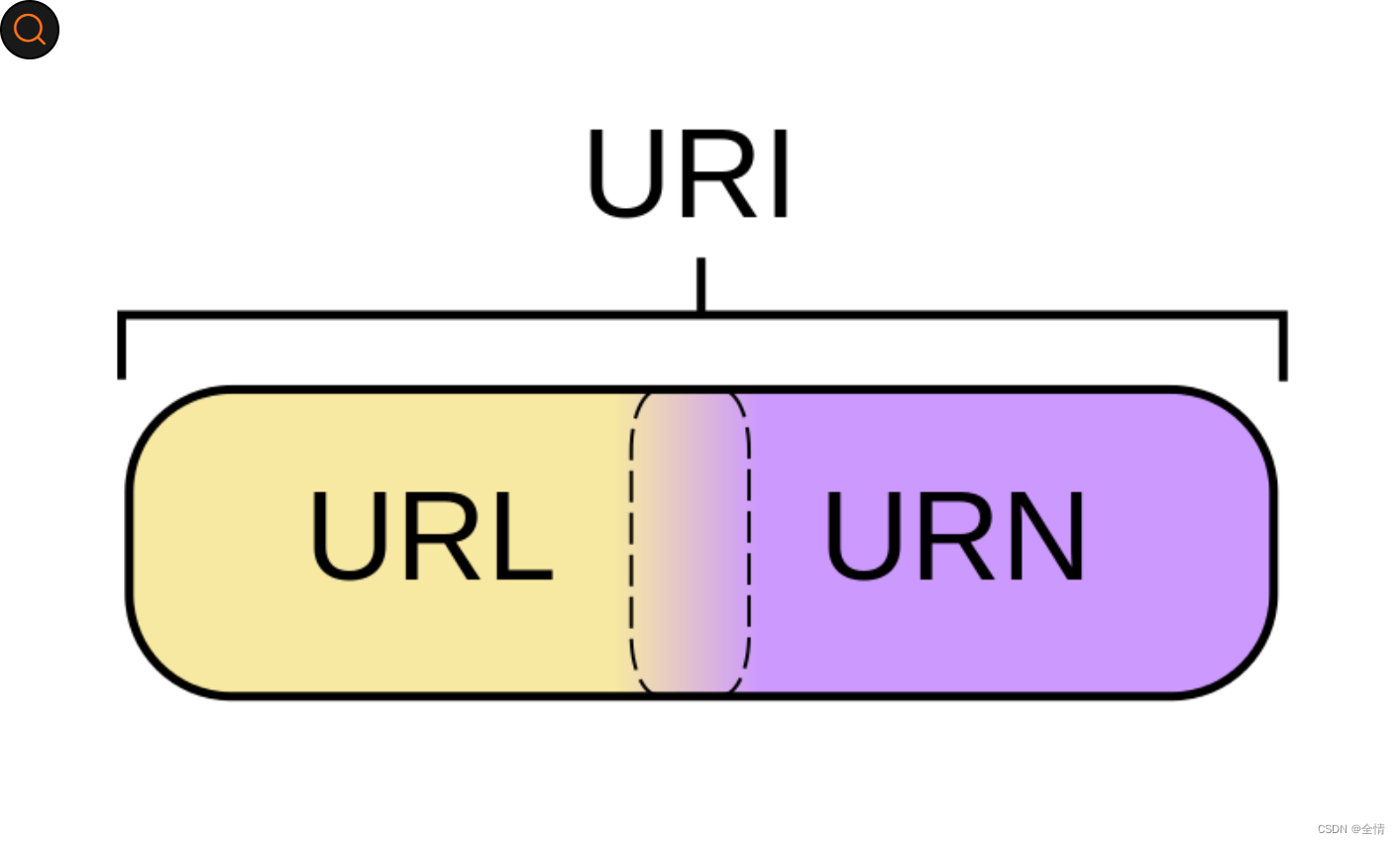

The difference between a uri (url urn)

The difference between rv and sv

c语言指针运算

spark学习笔记(八)——sparkSQL概述-定义/特点/DataFrame/DataSet

串行通信:常见的串行通信接口协议UART、SPI、I2C简介