Cut the very large images and labels of a remote sensing dataset into patches of a specified size and save them as a COCO dataset - object detection

2022-04-23 03:38:00 【Blue area soldier】

To be improved:

- The original large-image dataset is not annotated in COCO format. The script should be changed to take COCO annotations of the original large images as input.

- Category names and ids are currently assigned directly in the code. Once the input is in COCO format, they should be read from the dataset instead.
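One possible way to address the second item, even before the input is converted to COCO, is to collect the category names from the annotation files themselves. The sketch below is not part of the original script: `collect_categories` is a hypothetical helper, and it assumes that each annotation file is a JSON document with a top-level `"shapes"` list whose items carry a `"label"` string, which is the layout the script reads.

```python
import json
from pathlib import Path


def collect_categories(label_dir):
    """Build a COCO-style `categories` list from the labels in the annotation files.

    Hypothetical helper: assumes every *.json file in `label_dir` has a
    top-level "shapes" list whose items contain a "label" string.
    """
    names = set()
    for path in Path(label_dir).glob("*.json"):
        with open(path, "r", encoding="utf-8") as f:
            data = json.load(f)
        for shape in data.get("shapes", []):
            names.add(shape["label"])
    # Sort the names so that ids are stable between runs; ids start at 1.
    return [{"id": i, "name": n} for i, n in enumerate(sorted(names), start=1)]
```

With ids derived this way, the category id of a shape would be looked up by name instead of being computed from the character code of its label.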

Idea: split the large image with a sliding window, overlapping adjacent windows by a fixed number of pixels. For each window patch, decide whether to keep an annotation according to the fraction of the target's original region that falls inside the patch.
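The window positions along one axis can be sketched as follows. `window_starts` is a hypothetical helper, not a function from the script; like the script, it shifts the last window back so that it ends exactly at the image border, but it also avoids emitting the same window twice.

```python
def window_starts(length, window, overlap):
    """Start offsets of sliding windows that cover [0, length) along one axis."""
    if overlap >= window:
        raise ValueError("overlap must be smaller than the window size")
    if length <= window:
        return [0]  # the image is not larger than the window: a single patch
    step = window - overlap
    starts = list(range(0, length - window, step))
    starts.append(length - window)  # last window is shifted back to end at the border
    return starts


# A 1000-pixel axis with 512-pixel windows and 128 pixels of overlap
print(window_starts(1000, 512, 128))
```

The full set of patches is then the Cartesian product of the offsets along the x axis and the offsets along the y axis.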

Method: for each large image, generate an instance label image and a semantic label image, and use them to decide whether a target inside a sliding-window patch keeps its label. If the fraction is too small, for example when the patch contains only a few dozen pixels of an instance's bbox (a fraction of about 0.01), the label is not kept.
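The keep-or-drop decision can be illustrated on a tiny instance label image. This is a minimal sketch under simplifying assumptions: the mask is 2-D (the script works on a 3-channel array), and `visible_fractions` is a hypothetical name. Note that the quantity compared against the threshold is a coverage ratio (pixels of the instance inside the patch divided by all pixels of the instance), not an IoU in the strict sense.

```python
import numpy as np


def visible_fractions(instance_mask, y0, y1, x0, x1):
    """Fraction of each instance's pixels that fall inside the patch [y0:y1, x0:x1].

    `instance_mask` is a 2-D integer array: 0 is background, k > 0 marks instance k.
    """
    ids, totals = np.unique(instance_mask, return_counts=True)
    total = dict(zip(ids.tolist(), totals.tolist()))
    pids, counts = np.unique(instance_mask[y0:y1, x0:x1], return_counts=True)
    return {k: c / total[k] for k, c in zip(pids.tolist(), counts.tolist()) if k != 0}


mask = np.zeros((8, 8), dtype=np.int32)
mask[1:5, 1:5] = 1  # instance 1 covers 16 pixels
mask[5:7, 6:8] = 2  # instance 2 covers 4 pixels

fractions = visible_fractions(mask, 0, 4, 0, 4)  # top-left 4x4 patch
kept = [k for k, f in fractions.items() if f >= 0.25]  # same threshold as iou_thres
print(fractions, kept)
```

Here 9 of the 16 pixels of instance 1 lie inside the patch, so it is kept, while instance 2 does not appear in the patch at all.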

# Author: scu cj
# Input: large images plus one JSON annotation file per image (a "shapes" list of polygons, each with "points" and "label"); not yet COCO, see "To be improved"
# Output: target detection dataset in COCO format
# Parameters
# image_width: width of each cut patch, in pixels
# image_height: height of each cut patch, in pixels
# img_overlap: number of overlapping pixels between adjacent patches
# iou_thres: a target is annotated in a patch only when the part of it inside the patch is at least this fraction of the whole target (generally this ratio should be at least 0.25)

import os
import json

import numpy as np
import cv2
from natsort import natsorted
from tqdm import tqdm
from skimage.measure import label, regionprops
import matplotlib.pyplot as plt  # optional, only useful for debugging plots

datasrc_dir = "/mnt/datas4t/PlaneRecogData/data/val"
#label_file = "/mnt/datas4t/PlaneRecogData/data"
datadst_dir = "/mnt/datas4t/PlaneRecogData/data_patch/val"
dst_labeljson_file = "/mnt/datas4t/PlaneRecogData/data_patch/instances_val.json"
os.makedirs(datadst_dir, exist_ok=True)  # cv2.imwrite does not create missing directories

coco_label = dict()
images = []
annotations = []

image_width = 512
image_height = 512
img_overlap = 128
iou_thres = 0.25

coco_label["info"] = {
    "image_width": image_width,
    "image_height": image_height,
    "patch_overlap": img_overlap,
    "iou_thres": iou_thres
}

# Category names and ids are assigned directly (see "To be improved")
cat_name = "ABCDEFGHIJK"
cat_id = range(1, len(cat_name)+1)
categories = [{"id": i, "name": j} for i, j in zip(cat_id, cat_name)]

img_id = 1
ann_id = 1
img = {}
ann = {}

files = [i for i in os.listdir(datasrc_dir) if os.path.isfile(datasrc_dir+"/"+i)]
files = natsorted(files)  # all image and annotation files, in natural sort order
root = datasrc_dir

with tqdm(range(0, len(files), 2)) as pbar:
    for prno in range(0, len(files), 2):
        # After sorting, each annotation file is assumed to come right before its image
        imagepath = root+"/"+files[prno+1]
        labelpath = root+"/"+files[prno]
        ori_image = cv2.imread(imagepath)
        h, w, _ = ori_image.shape  # OpenCV arrays are (height, width, channels)
        with open(labelpath, "r") as jsonfile:
            labeljson = json.load(jsonfile)  # annotation data of the large image
        # Build the label images
        labelimage = np.zeros(ori_image.shape)  # instance label image of the large image
        semantic_label = np.zeros(ori_image.shape)  # semantic label image of the large image
        for i1 in range(len(labeljson["shapes"])):  # traverse every instance in the large image
            pt = labeljson["shapes"][i1]  # polygon annotation of the instance
            polygon = np.array([pt["points"]]).astype(np.int32)
            # Each instance is filled with its own id (i1+1)
            labelimage = cv2.fillPoly(labelimage, polygon, (i1+1, i1+1, i1+1))
            cat_id = ord(pt["label"])-ord('A')+1  # category id of the instance
            # Each instance is filled with its category id
            semantic_label = cv2.fillPoly(semantic_label, polygon, (cat_id, cat_id, cat_id))
        # Count the number of pixels occupied by each instance
        objid, idcount = np.unique(labelimage, return_counts=True)
        labelimage_statics = dict(zip(objid, idcount))
        labelimage_statics.pop(0, None)  # remove the background count
        # Cut the image
        for sx in range(0, w, image_width-img_overlap):
            for sy in range(0, h, image_height-img_overlap):
                if sx+image_width < w:
                    ex = sx+image_width
                else:
                    # Last column: shift the window back so that it ends at the image border
                    ex = w
                    sx = max(w-image_width, 0)
                if sy+image_height < h:
                    ey = sy+image_height
                else:
                    # Last row: shift the window back so that it ends at the image border
                    sy = max(h-image_height, 0)
                    ey = h
                patch_file_name = datadst_dir+"/"+str(img_id)+".jpg"
                img["file_name"] = patch_file_name
                img["height"] = ey-sy
                img["width"] = ex-sx
                img["id"] = img_id
                img_id = img_id + 1
                labelpatch = labelimage[sy:ey, sx:ex, :]
                patchimg = ori_image[sy:ey, sx:ex, :]
                # If the maximum label is 0 the patch has no target and is saved without annotations.
                # Otherwise, for each instance in the patch, compute the fraction of the whole
                # instance that lies inside the patch and annotate it if the fraction reaches iou_thres.
                if labelpatch.max() > 0:
                    # objlabel: instance ids in the patch; labelarea: pixel count of each instance in the patch
                    objlabel, labelarea = np.unique(labelpatch, return_counts=True)
                    patch_statics = dict(zip(objlabel, labelarea))
                    patch_statics.pop(0, None)  # drop the background
                    semantic_patch = semantic_label[sy:ey, sx:ex, :]
                    for k, v in patch_statics.items():
                        if (v/labelimage_statics[k]) < iou_thres:
                            continue  # below the threshold: ignore this instance
                        # Get the category of the instance first
                        cat_id = int(semantic_patch[labelpatch == k][0])
                        ann["id"] = ann_id
                        ann_id += 1
                        ann["image_id"] = img["id"]
                        ann["category_id"] = cat_id
                        tempmat = np.where(labelpatch != k, 0, 255)
                        labeled_img = label(tempmat, connectivity=1, background=0, return_num=False)
                        annprops = regionprops(labeled_img)[0]  # only the first connected component is used
                        annsy, annsx, _, anney, annex, _ = annprops.bbox  # 3-D bbox because the channel dimension is not removed
                        # COCO bbox format: [x, y, width, height]
                        ann["bbox"] = [int(annsx), int(annsy), int(annex-annsx), int(anney-annsy)]
                        # Bounding-box area in pixels; area_bbox on the 3-D array would also count the channel axis
                        ann["area"] = int((annex-annsx)*(anney-annsy))
                        ann["iscrowd"] = 0
                        annotations.append(ann)
                        ann = {}
                # Save the patch and register it, with or without annotations
                cv2.imwrite(patch_file_name, patchimg)
                images.append(img)
                img = {}
        pbar.update(1)

coco_label["images"] = images
coco_label["annotations"] = annotations
coco_label["categories"] = categories
with open(dst_labeljson_file, "w") as fobj:
    json.dump(coco_label, fobj)
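After the file has been written, a quick consistency check of the result can catch indexing mistakes early. The following is a minimal sketch, not part of the original script: `check_coco` is a hypothetical helper that only looks at the fields the script writes (`images`, `annotations`, `categories`).

```python
def check_coco(coco):
    """Return a list of human-readable problems found in a COCO-style dict."""
    problems = []
    images = {im["id"]: im for im in coco["images"]}
    if len(images) != len(coco["images"]):
        problems.append("duplicate image ids")
    cat_ids = {c["id"] for c in coco["categories"]}
    for ann in coco["annotations"]:
        im = images.get(ann["image_id"])
        if im is None:
            problems.append(f"annotation {ann['id']}: unknown image_id")
            continue
        if ann["category_id"] not in cat_ids:
            problems.append(f"annotation {ann['id']}: unknown category_id")
        x, y, w, h = ann["bbox"]  # COCO boxes are [x, y, width, height]
        if x < 0 or y < 0 or x + w > im["width"] or y + h > im["height"]:
            problems.append(f"annotation {ann['id']}: bbox outside image")
    return problems
```

To use it, load the written file with `json.load` and pass the resulting dict to `check_coco`; an empty list means none of these checks failed.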

Copyright notice

This article was written by [Blue area soldier]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204220602025076.html

边栏推荐

- C-10 program error correction (recursive function): number to character

- Definition format of array

- Initial experience of talent plan learning camp: communication + adhering to the only way to learn open source collaborative courses

- The art of concurrent programming (5): the use of reentrantlock

- Three types of jump statements

- VS Studio 修改C语言scanf等报错

- JS takes out the same elements in two arrays

- The content of the website is prohibited from copying, pasting and saving as JS code

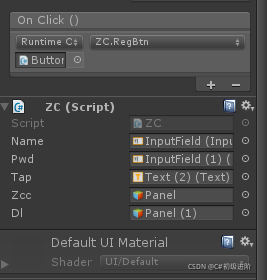

- Unity Basics

- Basic knowledge of convolutional neural network

猜你喜欢

L3-011 直捣黄龙 (30 分)

2022 group programming ladder simulation match 1-8 are prime numbers (20 points)

Test questions and some space wars

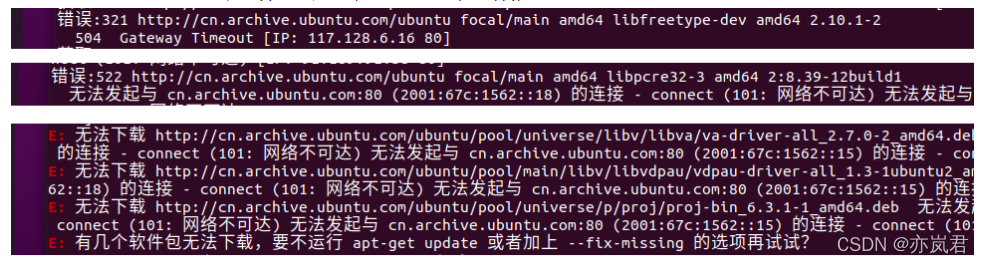

Paddlepaddle does not support arm64 architecture.

2022 group programming ladder simulation l2-1 blind box packaging line (25 points)

ROS series (IV): ROS communication mechanism series (3): parameter server

Unity knowledge points (ugui 2)

2022 团体程序设计天梯赛 模拟赛 L2-1 盲盒包装流水线 (25 分)

Codeforces Round #784 (Div. 4)題解 (第一次AK cf (XD

ROS series (I): rapid installation of ROS

随机推荐

QT dynamic translation of Chinese and English languages

2022 group programming ladder simulation l2-1 blind box packaging line (25 points)

2022 group programming ladder simulation match 1-8 are prime numbers (20 points)

C-10 program error correction (recursive function): number to character

Openvino only supports Intel CPUs of generation 6 and above

2022 group programming ladder game simulation L2-4 Zhezhi game (25 points)

Unity knowledge points (ugui 2)

Design and implementation of redis (3): persistence strategy RDB, AOF

ROS series (IV): ROS communication mechanism series (3): parameter server

C-11 problem I: find balloon

Un aperçu des flux d'E / s et des opérations de fichiers de classe de fichiers

Variable definition and use

Chapter VI, Section III pointer

Use the thread factory to set the thread name in the thread pool

2021-08-31

JS calculates the display date according to the time

According to the category information and coordinate information of the XML file, the category area corresponding to the image is pulled out and stored in the folder.

Design and implementation of redis (2): how to handle expired keys

Identifier, keyword, data type

Websites frequented by old programmers (continuously updated)