当前位置:网站首页>Paper Notes: Bag of Tricks for Long-Tailed Visual Recognition with Deep Convolutional Neural Networks

Paper Notes: Bag of Tricks for Long-Tailed Visual Recognition with Deep Convolutional Neural Networks

2022-08-11 04:53:00 【shier_smile】

论文地址:http://www.lamda.nju.edu.cn/zhangys/papers/AAAI_tricks.pdf

代码地址:https://github.com/zhangyongshun/BagofTricks-LT

文章目录

The author for the existing forlong-tailed visual recognition中的tricksSystematic experiments were carried out.

- Which is verified by a lot of experimentstricksCombining them together improves accuracy,Which can't(Papers tend to be more experimental reports,For some experimental results, the author did not explain the reasons in detail)

- 作者发现了Mixup对于long-tailed It works well for classification tasks,Especially firstinput的时候做MixupAnd then after the training is completefine-tuning.

- 作者基于CAMA new data augmentation method is proposed:CAM-based,and confirmed its sumclass-balance samplingIt works best when combined.

1、动机

The author stated firstmetric learning、meta learning和knowledge transfer在long-tailedThe mission has been successful,But there are still several major problems.

Some existing methods are sensitive to hyperparameters during training

The training process is complicated

Use these in real scenariostricks实际上很难.

不同的tricksWorks well alone,But mixing them together isn't necessarily better,Some may even be worse.The reason is that there are actually manytrickshave a similar effect,比如re-sampling和re-weightingIt's all about letting the model betailed classesbe more careful,It may be caused by overlapping use togethertailed classes的过拟合.

2、 Dataset

2.1、 Long-tailed CIFAR(CIFAR10、CIFAR100)

1、The sampling method uses functions n = n t ∗ μ t n=n_t*\mu^t n=nt∗μt 其中 t t tis the subscript of the category(从0开始), μ ∈ ( 0 , 1 ) \mu\in(0,1) μ∈(0,1), n t n_t ntis the original number of training images, i m b a l a n c e f a c t o r = n l a r g e s t n s m a l l e s t imbalance factor=\frac{n_{largest}}{n_{smallest}} imbalancefactor=nsmallestnlargestIts value can be obtained 10 ∼ 200 10\sim200 10∼200Experiments are generally selected50和100.

2、Preprocessing :

train:

- Padding on each edge4 pixels

- 随机裁减32*32区域

- 0.5The probability flips randomly

- normalization

val:

- The side that will be shorter if the aspect ratio of the image is guaranteedresize到36 pixels

- Center cut32*32区间大小

- normalization

3、 backbone: ResNet-32

4、training details:

batchsize:128

epoch:200

optimizer:momentum: 0.9

weight decay: 2 ∗ 1 0 − 4 2*10^{-4} 2∗10−4

learning rate scheduler: warmup (前5个epoch)+stepdecay(epoch到160和180时lr下降100倍)

2.2 iNaturalist 2018

1、这篇文章在iNaturalist2018的train和val上做的实验

2、Preprocessing:

train:

use scale and aspect ratio data augmentation(Szegedy et al 2015)

224*224random cuts

随机翻转

normalization

val:

- The side that will be shorter if the aspect ratio of the image is guaranteedresize到256 pixels

- Center cut224*224

- normalization

3、backbone:ResNet-50

4、training details:

- batchsize:512

- epoch:90

- optimizer:momentum:0.9

- weight decay: 1 ∗ 1 0 − 4 1*10^{-4} 1∗10−4

- learning rate scheduler:stepdecay(epoch到30、60、80下降10倍)

2.3 ImageNet-LT

1、(Liu19cvpr)用Pareto distribution在原本的ImageNetsampled above,1000类别,每类别 5 ∼ 1280 5\sim1280 5∼1280,共19K训练集

2、Preprocess:同iNaturelist2018

3、backbone:ResNet-10

3、training details:同iNaturalist2018

3、Tricks gallery

3.1 Re-weighting

Give more weight to tail classes,Make the model pay more attention to the expression of the tail class.

参数说明:

c ∈ 1 , 2 , ⋯ , C c\in{1,2,\cdots, C} c∈1,2,⋯,C:图片类别

z = [ z 1 , z 2 , ⋯ , z C ] z=[z_1, z_2, \cdots, z_C] z=[z1,z2,⋯,zC]:预测输出

C:类别总数

n m i n n_{min} nmin:The class with the smallest number of samples

n c n_c nc:cThe number of class training images

1、CE(cross entropy loss):

2、CS_CE(Cost-sensitive softmax cross-entropy loss)

3、Focal loss

其中 p i = s i g m o i d ( z i ) = 1 1 + e z i p_i=sigmoid(z_i)=\frac{1}{1+e^{z_i}} pi=sigmoid(zi)=1+ezi1

4、CB_Focal(Classi-balanced loss)

实验数据:

refer to the originallossThe original paper hyperparameter settings,在CIFAR-10-LT上奏效,但是在CIFAR-100-LT上表现不好,实验结论是:Direct use on different datasetsre-weighting不work

3.2 Re-sampling

By resampling the experimental data,to get a uniformly distributed dataset,The methods used in the paper are:

1、Random over-sampling:Increase sampling for tail classes,But it may cause overfitting of the tail class

2、Random under-sampling:Reduced adoption of header classes,Construct a balanced dataset.

3、Class-balanced sampling:通过公式:

Calculate the sampling probability.(选择一个类别,Then randomly select the samples inside).

4、Square-root sampling:将公式(7)中的q取 1 2 \frac{1}{2} 21,to sample a more balanced dataset

5、Progressively-balanced sampling:The sampling probability is continuously adjusted during the training process.

t:为当前的epoch数, T全部epoch数.

(4,5,6All from the thesis:DECOUPLING REPRESENTATION AND CLASSIFIERFOR LONG-TAILED RECOGNITION有兴趣的可以去看看)

实验数据:

实验结论:直接使用re-samplingProvides a slight boost.

ps:From the results of this experiment,在CIFAR-10-LT上,只有Class-balance samplingBrings a slight boost,而在CIFAR-100-LT上却只有Progressive-balance sampling有提升.It feels rather mysterious,The author does not give a reason,I don't know if it's for comparison experiments,Caused by the setting of hyperparameters,Judgments can only be made after specific experiments are done.(后补)

3.3 Mixup Training

The author has twoMixup在long-tailed 数据集上进行实验,并和fine-tuningExperiments were carried out in combination.

1、Input Mixup

The authors only used it during the training phaseMixup,Slow down by linearly interpolating between the two imagesCNNadversarial interference.

2、Manifold mixup

(By doing it on the output of the middle layer of the networkmixup)

3、fine-tuning

论文:Rethinking the distribution gap between clean and augmented data中表明:先使用mixup训练好模型后,再去掉mixupContinue to train a few moreepoch,can increase the accuracy.

实验结果:

实验结论:

1、Input mixup和maniflod mixupcan bring improvement,Specifically used in different partsMixup产生不同的结果,当 α \alpha α取1, 在pooling层做mixup时效果最好,需要和其他的trick做更多的实验.

2、在Input Mixup后使用fine-tuning能带来提升,但是对于Manifold mixupbut makes the results worse

ps:For both of these two experimental results,The author does not give a reason.

4、Two-stage training

The two-stage training is carried out firstimbalance training 然后再使用balance training 进行fine-tuning.作者做了(deferred re-balancing by re-sampling)DRS和(deferred re-balancing by re-weighting)DRWTwo-part experiment.And the author proposes a basisCAMimage augmentation method.先通过imbalance trainingThe latter model predicts the original imageclass acivate map,Then extract the area that the model pays attention to,更换不同的背景(The background image here will be enhanced first)Then perform other data augmentation to get a new picture.

ps: Change the background here,It means to enhance the background of the image itself and then paste the foreground image.(There are ways of background augmentation:1、rotate and scale, 2、translate, 3、horizontal filp)

作者对CAM-based+re-sampling和DRW进行了实验.

CAM-based+Class-balanced sampling效果最好,而DRW中CS_CE效果最好.

5、Trick combinations

1、The author will table7和表8The two best performersTrick(CS_CE和CAM-BS)组合到一起,However, the effect was found to be somewhat worse,The author says the reason isCS_CE和Class-balance samplingAll make the model pay more attention to the expression of the tail class, which leads to overfitting of the tail class.

2、将Input mixup和manifold mixup组合后Input mixup效果比manifolde mixup好

ps: 1、从表9和表10中可以发现,使用了Manifold mixup之后对于imbalance factor=100situation has improved.但是对于imbalance factor=50situation has worsened.Curious what the reason is here

最后一个表:

作者将Input Mixup和DRS+CAM-BS+fine-tuningCombining to achieve the best results.

总体效果:

The author did not giveCAM-baseDo the experiment alone,I feel like I can try it aloneCAM-baseHow does the experiment work.

以上psplace for personal opinion,如有错误请指出,非常感谢.

ps:本贴写于2022-8-04, 未经本人允许,禁止转载.

边栏推荐

- [Note] Is the value of BatchSize the bigger the better?

- [E-commerce operation] How to formulate a social media marketing strategy?

- CAN/以太网转换器 CAN与以太网互联互通

- The sword refers to offer_abstract modeling capabilities

- The priority queue

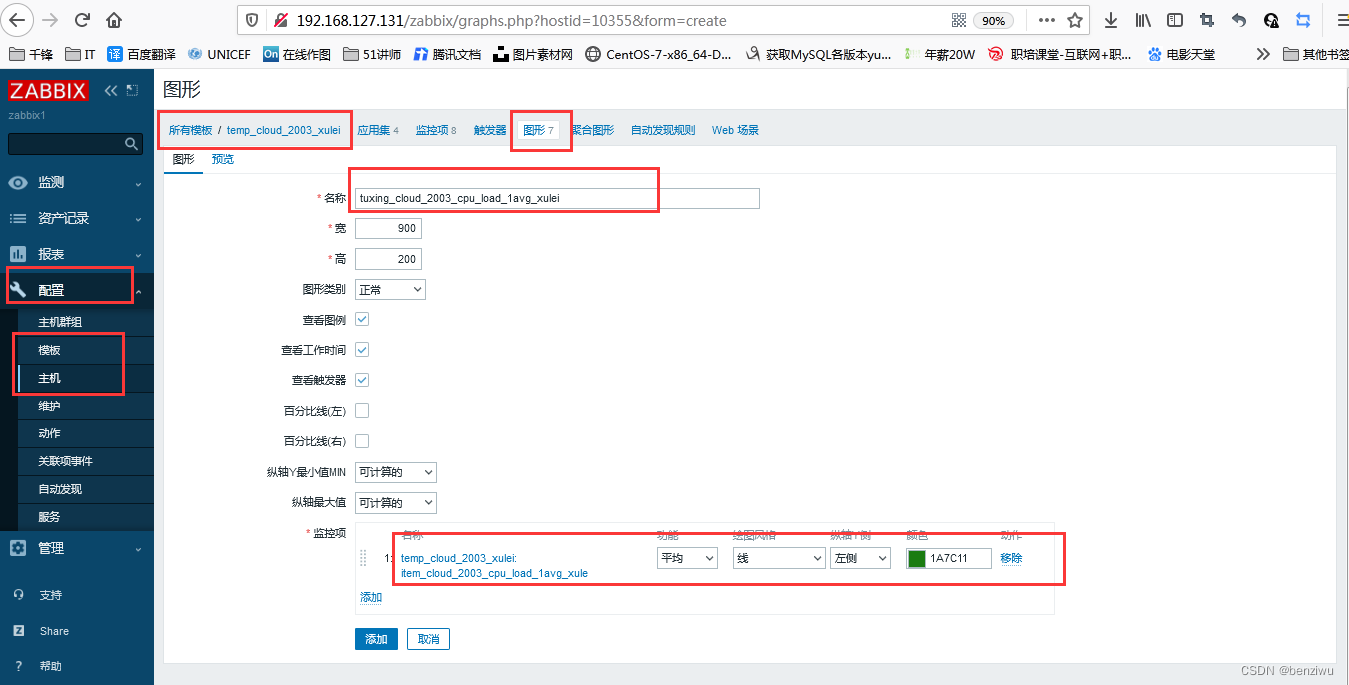

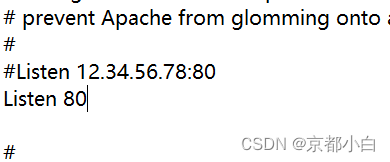

- Zabbix builds enterprise-level monitoring and alarm platform

- Jetson Orin platform 4-16 channel GMSL2/GSML1 camera acquisition kit recommended

- 【实战场景】商城-折扣活动设计方案

- 力扣——旋转数组的最小数字

- MQ框架应用比较

猜你喜欢

随机推荐

【Web3 系列开发教程——创建你的第一个 NFT(9)】如何在手机钱包里查看你的 NFT

破解事务性工作瓶颈,君子签电子合同释放HR“源动力”!

[Server installation Redis] Centos7 offline installation of redis

02.折叠隐藏文字

redis按照正则批量删除key

【小记】BatchSize的数值是设置的越大越好吗

Switch and Router Technology - 22/23 - OSPF Dynamic Routing Protocol/Link State Synchronization Process

IP-Guard如何禁止运行U盘程序

Jetson Orin平台4-16路 GMSL2/GSML1相机采集套件推荐

Switches and routers technology - 21 - RIP routing protocol

开发工具篇第七讲:阿里云日志查询与分析

findViewById返回null的问题

标识密码技术在 IMS 网络中的应用

优化是一种习惯●出发点是“站在靠近临界“的地方

干货:服务器网卡组技术原理与实践

Summary of c language fprintf, fscanf, sscanf and sprintf function knowledge points

Embedded Sharing Collection 33

-Fill in color-

2.2 user manual] [QNX Hypervisor 10.15 vdev timer8254

Switches and routers technology - 24 - configure OSPF single area