Creating neural networks in TensorFlow with Keras

2022-04-23 11:28:00 【A gentleman reads the river】

Create a simple neural network

Using tf.keras.Model directly (functional API)

```python
import tensorflow as tf
from tensorflow.keras import layers


def line_fit_model():
    """Build a network with the functional API.

    Returns:
        A tf.keras.Model mapping a single input feature to 5 softmax outputs.
    """
    # Input layer: each sample has one feature
    inputs = tf.keras.Input(shape=(1,), name="inputs")
    # Hidden layer 1
    layer1 = layers.Dense(10, activation="relu", name="layer1")(inputs)
    # Hidden layer 2
    layer2 = layers.Dense(15, activation="relu", name="layer2")(layer1)
    # Output layer
    outputs = layers.Dense(5, activation="softmax", name="outputs")(layer2)
    # Instantiate the model from its input and output tensors
    model = tf.keras.Model(inputs=inputs, outputs=outputs)
    # Print the network structure
    model.summary()
    return model
```
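The `model.summary()` call prints a parameter count for each layer. For a `Dense` layer that count is `in_features × units` kernel weights plus `units` biases. The sketch below, in plain Python with no TensorFlow dependency, reproduces the counts for this 1 → 10 → 15 → 5 network, assuming every layer keeps the default `use_bias=True`; `dense_params` is a hypothetical helper, not a Keras function.

```python
def dense_params(in_features, units):
    # Kernel of shape (in_features, units) plus one bias per unit
    # (assumes the Keras default use_bias=True)
    return in_features * units + units


# Layer widths of the 1 -> 10 -> 15 -> 5 network built above
layer_sizes = [1, 10, 15, 5]
per_layer = [dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]
total = sum(per_layer)

print(per_layer)  # [20, 165, 80]
print(total)      # 265
```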

Subclassing tf.keras.Model

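```python
import tensorflow as tf
from tensorflow.keras import layers


class LiftModel(tf.keras.Model):
    """Subclass tf.keras.Model and override call() to define the forward pass."""

    def __init__(self):
        super().__init__()
        self.layer1 = layers.Dense(10, activation=tf.nn.relu, name="layer1")
        self.layer2 = layers.Dense(15, activation=tf.nn.relu, name="layer2")
        # Stored as output_layer rather than outputs, so it does not shadow
        # the `outputs` attribute that Keras models already use
        self.output_layer = layers.Dense(5, activation=tf.nn.softmax, name="outputs")

    def call(self, inputs):
        layer1 = self.layer1(inputs)
        layer2 = self.layer2(layer1)
        return self.output_layer(layer2)


if __name__ == "__main__":
    # A batch holding one sample with one float feature
    inputs = tf.constant([[1.0]])
    lift = LiftModel()
    # A subclassed model creates its weights on the first call,
    # so call it once before summary()
    lift(inputs)
    lift.summary()
```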
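What `call()` computes can be spelled out without TensorFlow. This is a minimal sketch that assumes random stand-in weights (Keras initialises the kernels itself, by default with Glorot uniform, and the biases with zeros); `dense`, `relu`, `softmax`, `random_layer` and `forward` are hypothetical helpers that mirror the three `Dense` layers of `LiftModel`.

```python
import math
import random


def dense(x, weights, bias):
    # y[j] = sum_i x[i] * W[i][j] + b[j], one output per unit
    return [sum(x[i] * weights[i][j] for i in range(len(x))) + bias[j]
            for j in range(len(bias))]


def relu(v):
    return [max(0.0, a) for a in v]


def softmax(v):
    m = max(v)  # subtract the max for numerical stability
    exps = [math.exp(a - m) for a in v]
    total = sum(exps)
    return [e / total for e in exps]


def random_layer(n_in, n_out, rng):
    # Hypothetical stand-in weights: uniform kernel, zero bias
    kernel = [[rng.uniform(-1.0, 1.0) for _ in range(n_out)] for _ in range(n_in)]
    return kernel, [0.0] * n_out


rng = random.Random(0)
w1, b1 = random_layer(1, 10, rng)
w2, b2 = random_layer(10, 15, rng)
w3, b3 = random_layer(15, 5, rng)


def forward(x):
    # Same order as LiftModel.call: layer1 -> layer2 -> output layer
    h1 = relu(dense(x, w1, b1))
    h2 = relu(dense(h1, w2, b2))
    return softmax(dense(h2, w3, b3))


probs = forward([1.0])
print(len(probs), round(sum(probs), 6))  # 5 1.0
```

Whatever the weights, the softmax output is a vector of 5 positive values that sum to 1, which is why the final layer can be read as class probabilities.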

Using tf.keras.Sequential with a list of layers

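```python
import tensorflow as tf
from tensorflow.keras import layers


def line_fit_sequential():
    # Pass all layers to the constructor as a list;
    # only the first layer needs input_shape
    model = tf.keras.Sequential([
        layers.Dense(10, activation="relu", input_shape=(1,), name="layer1"),
        layers.Dense(15, activation="relu", name="layer2"),
        layers.Dense(5, activation="softmax", name="outputs"),
    ])
    model.summary()
    return model
```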
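Conceptually, a `Sequential` model feeds the output of each layer into the next, in list order. The toy `sequential` function below is a hypothetical plain-Python illustration of only that ordering, with ordinary functions standing in for layers; it is not how Keras implements the class.

```python
from functools import reduce


def sequential(layer_fns):
    """Toy stand-in for tf.keras.Sequential: apply each callable in list order."""
    def model(x):
        return reduce(lambda acc, fn: fn(acc), layer_fns, x)
    return model


# Hypothetical "layers": plain functions, used only to show that order matters
def double(x):
    return 2 * x


def add_three(x):
    return x + 3


print(sequential([double, add_three])(5))  # 13
print(sequential([add_three, double])(5))  # 16
```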

Using Sequential() and adding layers one at a time with add()

```python
import tensorflow as tf
from tensorflow.keras import layers


def outline_fit_sequential():
    # Start from an empty Sequential model and append layers with add()
    model = tf.keras.Sequential()
    model.add(layers.Dense(10, activation="relu", input_shape=(1,), name="layer1"))
    model.add(layers.Dense(15, activation="relu", name="layer2"))
    model.add(layers.Dense(5, activation="softmax", name="output"))
    model.summary()
    return model
```

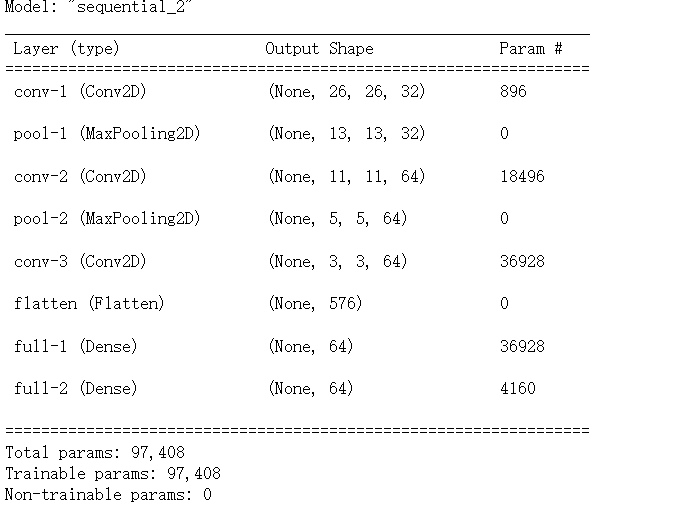
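With `add()`, only the first layer is given `input_shape`; each later `Dense` layer takes its input size from the previous layer's `units`. The hypothetical `ShapeTracker` class below sketches that bookkeeping for the 1 → 10 → 15 → 5 stack, recording the kernel shape each layer would receive. It is an illustration under that assumption, not a Keras API.

```python
class ShapeTracker:
    """Hypothetical toy class: records the kernel shape each Dense layer would
    get when layers are appended one at a time, as with Sequential.add()."""

    def __init__(self, input_dim):
        # Only the first layer needs the input size (input_shape=(1,) above)
        self.current_dim = input_dim
        self.kernel_shapes = []

    def add(self, units):
        # Each later layer infers its input size from the previous layer's units
        self.kernel_shapes.append((self.current_dim, units))
        self.current_dim = units
        return self


tracker = ShapeTracker(input_dim=1)
for units in (10, 15, 5):
    tracker.add(units)
print(tracker.kernel_shapes)  # [(1, 10), (10, 15), (15, 5)]
```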

Create a convolutional neural network

Using Sequential with a list of layers

```python
import tensorflow as tf
from tensorflow.keras import layers


def cnn_sequential():
    model = tf.keras.Sequential([
        # Convolution layer 1 (expects 28x28 RGB images)
        layers.Conv2D(32, (3, 3), activation="relu", input_shape=(28, 28, 3), name="conv-1"),
        # Pooling layer 1
        layers.MaxPooling2D((2, 2), name="pool-1"),
        # Convolution layer 2
        layers.Conv2D(64, (3, 3), activation="relu", name="conv-2"),
        # Pooling layer 2
        layers.MaxPooling2D((2, 2), name="pool-2"),
        # Convolution layer 3
        layers.Conv2D(64, (3, 3), activation="relu", name="conv-3"),
        # Flatten the feature maps into a single vector
        layers.Flatten(),
        # Fully connected layer 1
        layers.Dense(64, activation="relu", name="full-1"),
        # Softmax output layer (64 classes here; set this to your number of classes)
        layers.Dense(64, activation="softmax", name="softmax-1"),
    ])
    model.summary()
    return model
```
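The shapes and parameter counts that `summary()` prints for this CNN follow from a few formulas, assuming the Keras defaults used above: `padding="valid"` and stride 1 for `Conv2D`, and strides equal to `pool_size` for `MaxPooling2D`. A stdlib-only sketch that walks through `cnn_sequential` layer by layer (the helpers are hypothetical, not Keras APIs):

```python
def conv2d(shape, filters, k=3):
    # 'valid' padding, stride 1: each spatial side shrinks by k - 1
    h, w, c = shape
    params = k * k * c * filters + filters  # kernel + one bias per filter
    return (h - k + 1, w - k + 1, filters), params


def max_pool(shape, p=2):
    # Pool size p with stride p: sides are floor-divided, no parameters
    h, w, c = shape
    return (h // p, w // p, c), 0


def flatten(shape):
    h, w, c = shape
    return (h * w * c,), 0


def dense(shape, units):
    (n,) = shape
    return (units,), n * units + units


shape = (28, 28, 3)
total = 0
for name, op in [
    ("conv-1", lambda s: conv2d(s, 32)),
    ("pool-1", max_pool),
    ("conv-2", lambda s: conv2d(s, 64)),
    ("pool-2", max_pool),
    ("conv-3", lambda s: conv2d(s, 64)),
    ("flatten", flatten),
    ("full-1", lambda s: dense(s, 64)),
    ("softmax-1", lambda s: dense(s, 64)),
]:
    shape, params = op(shape)
    total += params
    print(f"{name:10s} {str(shape):15s} {params}")
print("total", total)
```

Under these assumptions the spatial size goes 26 → 13 → 11 → 5 → 3, the flattened vector has 576 entries, and the model has 97,408 parameters in total.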

Using Sequential and adding layers one at a time with add()

```python
import tensorflow as tf
from tensorflow.keras import layers


def outline_cnn_sequential():
    model = tf.keras.Sequential()
    # Expects 84x84 RGB images
    model.add(layers.Conv2D(32, (3, 3), activation="relu", input_shape=(84, 84, 3), name="conv-1"))
    model.add(layers.MaxPooling2D((2, 2), name="pool-1"))
    model.add(layers.Conv2D(64, (3, 3), activation="relu", name="conv-2"))
    model.add(layers.MaxPooling2D((2, 2), name="pool-2"))
    model.add(layers.Conv2D(64, (3, 3), activation="relu", name="conv-3"))
    model.add(layers.MaxPooling2D((2, 2), name="pool-3"))
    # Flatten the feature maps into a single vector
    model.add(layers.Flatten())
    model.add(layers.Dense(64, activation="relu", name="full-1"))
    # Softmax output layer (64 classes here; set this to your number of classes)
    model.add(layers.Dense(64, activation="softmax", name="softmax-1"))
    model.summary()
    return model
```
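The larger 84×84 input and the extra `pool-3` layer change the length of the vector that reaches `full-1`. A quick check in plain Python, under the same default assumptions (3×3 'valid' convolutions with stride 1, 2×2 pooling with stride 2); `conv_out` and `pool_out` are hypothetical helpers:

```python
def conv_out(side, k=3):
    return side - k + 1  # 3x3 'valid' convolution, stride 1


def pool_out(side, p=2):
    return side // p  # 2x2 max pooling, stride 2 (odd sizes are floored)


side = 84
# conv-1, pool-1, conv-2, pool-2, conv-3, pool-3
for step in (conv_out, pool_out, conv_out, pool_out, conv_out, pool_out):
    side = step(side)

flat = side * side * 64  # conv-3 has 64 filters
full1_params = flat * 64 + 64  # Dense(64): kernel + bias
conv_params = (3 * 3 * 3 * 32 + 32) + (3 * 3 * 32 * 64 + 64) + (3 * 3 * 64 * 64 + 64)
total = conv_params + full1_params + (64 * 64 + 64)  # plus softmax-1
print(side, flat, full1_params, total)  # 8 4096 262208 322688
```

Under these assumptions `full-1` alone holds 262,208 of the model's 322,688 parameters, so the flattened size, not the convolutions, dominates the model's size.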

Copyright notice

This article was written by [A gentleman reads the river]. Please include a link to the original when reposting. Thanks.

https://yzsam.com/2022/04/202204231122283595.html

边栏推荐

- ES6学习笔记二

- MySQL Router重装后重新连接集群进行引导出现的——此主机中之前已配置过的问题

- Share two practical shell scripts

- Learning go language 0x02: understanding slice

- 分享两个实用的shell脚本

- Laravel always returns JSON response

- Structure of C language (Advanced)

- 博客文章导航(实时更新)

- Compress the curl library into a sending string of utf8 and send it with curl library

- Laravel增加自定义助手函数

猜你喜欢

随机推荐

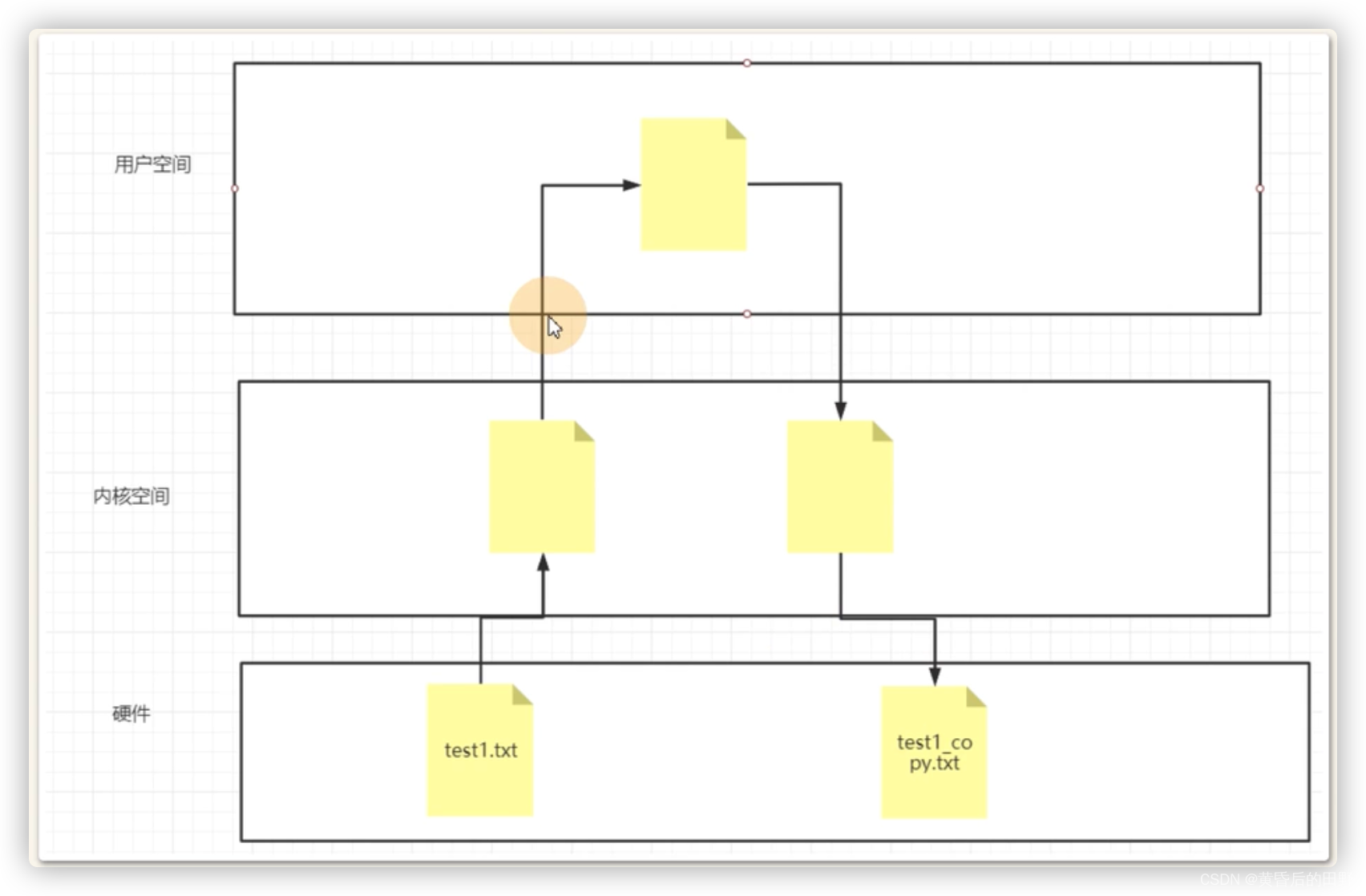

系统编程之高级文件IO(十三)——IO多路复用-select

项目实训-火爆辣椒

2022爱分析· 工业互联网厂商全景报告

MySQL Router重装后重新连接集群进行引导出现的——此主机中之前已配置过的问题

Learn go language 0x04: Code of exercises sliced in go language journey

How does QT turn qwigdet into qdialog

tensorflow常用的函数

ES6 learning notes II

Maker education for primary and middle school students to learn in happiness

mysql插入datetime类型字段不加单引号插入不成功

让中小学生在快乐中学习的创客教育

MQ is easy to use in laravel

Share two practical shell scripts

Compress the curl library into a sending string of utf8 and send it with curl library

Detailed explanation of how to smoothly go online after MySQL table splitting

Interpretation of 2022 robot education industry analysis report

微型机器人的认知和研发技术

小程序 支付

Redis optimization series (II) redis master-slave principle and master-slave common configuration

Learning go language 0x02: understanding slice