
Convolutional Neural Network (CNN) for mnist handwritten digit recognition

2022-08-10 05:55:00 【Ape Tongxue】

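Event: CSDN 21-Day Learning Challenge

First, some background.

What is TensorFlow?

TensorFlow is an open-source software library for numerical computation using data flow graphs.

The name comes from how it works: Tensor means an N-dimensional array, and Flow means computation based on a data flow graph. Running a TensorFlow program is the process of tensors flowing from one end of the graph to the other, and this intuitive picture of tensors flowing through a graph is where the name "TensorFlow" comes from.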

Tensor: mathematically, a tensor generalizes scalars, vectors and matrices to N dimensions, which means tensors can be used to represent N-dimensional datasets.
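As a small illustration of tensors as N-dimensional arrays, the sketch below uses NumPy arrays, whose rank and shape behave the same way as those of TensorFlow tensors. The variable names are chosen only for this example.

```python
import numpy as np

scalar = np.array(7)                   # rank 0: a single number
vector = np.array([1, 2, 3])           # rank 1
matrix = np.array([[1, 2], [3, 4]])    # rank 2
images = np.zeros((5, 28, 28))         # rank 3: e.g. five 28x28 grayscale images

for name, t in [("scalar", scalar), ("vector", vector),
                ("matrix", matrix), ("images", images)]:
    print(name, "rank:", t.ndim, "shape:", t.shape)
```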

Computational graph (flow): the "flow" refers to a computational graph, or simply a graph. The graph must not contain cycles. Each node in the graph represents an operation, such as addition or subtraction, and each operation produces a new tensor.
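The idea of an acyclic graph whose nodes are operations can be sketched in a few lines of plain Python. This is a toy illustration only, not how TensorFlow is implemented; the `graph` dictionary and the `evaluate` helper are hypothetical names made up for this example.

```python
import operator

# Each node is either a constant or an operation applied to other nodes.
graph = {
    "a": ("const", 2.0),
    "b": ("const", 3.0),
    "sum": (operator.add, "a", "b"),       # a + b
    "diff": (operator.sub, "a", "b"),      # a - b
    "out": (operator.mul, "sum", "diff"),  # (a + b) * (a - b)
}

def evaluate(graph, name, cache=None):
    """Evaluate node `name`, computing each upstream node at most once."""
    cache = {} if cache is None else cache
    if name not in cache:
        op, *args = graph[name]
        if op == "const":
            cache[name] = args[0]
        else:
            cache[name] = op(*(evaluate(graph, a, cache) for a in args))
    return cache[name]

result = evaluate(graph, "out")
print(result)
```

Every operation node produces a new value from the values flowing into it, which mirrors the description above of each operation forming a new tensor.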

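Any computation that can be expressed as a data flow graph can be run with TensorFlow, so the graph does not have to be a neural network.

What is Keras?

Keras is a deep learning library built on top of TensorFlow or Theano. It is a high-level neural network API written in pure Python and supports development in Python only. It wraps TensorFlow or Theano to enable fast experimentation, so we do not need to worry about low-level details and can turn ideas into results quickly. Keras has since been incorporated into TensorFlow as tf.keras and is TensorFlow's official high-level API.

The relationship between tf.keras and keras

Both are based on the same API, but tf.keras has a few extra features that keras lacks. As a result, a keras program can easily be migrated to tf.keras, but tf.keras code will not necessarily run under keras.

The specifications are the same, and the exported model format is the same as well.

The keras.layers module

TensorFlow's layers module provides a higher-level API for deep learning that makes it easy to build models. The methods provided by the tf.layers module are listed below; tf.keras.layers offers class-based counterparts such as Conv2D, MaxPooling2D, Flatten and Dense, which the code later in this post uses.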

| Method | Description |
|---|---|
| Input | Instantiates an input Tensor to serve as the network's input |
| average_pooling1d | 1D average pooling layer |
| average_pooling2d | 2D average pooling layer |
| average_pooling3d | 3D average pooling layer |
| batch_normalization | Batch normalization layer |
| conv1d | 1D convolution layer |
| conv2d | 2D convolution layer |
| conv2d_transpose | 2D transposed convolution (deconvolution) layer |
| conv3d | 3D convolution layer |
| conv3d_transpose | 3D transposed convolution (deconvolution) layer |
| dense | Fully connected layer |
| dropout | Dropout layer |
| flatten | Flatten layer, which flattens a Tensor |
| max_pooling1d | 1D max pooling layer |
| max_pooling2d | 2D max pooling layer |
| max_pooling3d | 3D max pooling layer |
| separable_conv2d | 2D depthwise separable convolution layer |

Parameter details: TensorFlow之神经网络layers模块详解_Never-Giveup的博客-CSDN博客_神经网络layer

The keras.models.Sequential model

Sequential model structure: a linear stack of layers. Put simply, it is a plain linear structure with no extra branches, just multiple network layers stacked one after another.
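To make one of the layers in the table concrete, here is a minimal NumPy sketch of what 2x2 max pooling computes on a single-channel input. The helper name `max_pool_2x2` is hypothetical, and the sketch assumes an even height and width; the real layer also handles batches and channels.

```python
import numpy as np

def max_pool_2x2(x):
    """2x2 max pooling with stride 2 on a 2-D array with even height and width."""
    h, w = x.shape
    # Split the array into non-overlapping 2x2 blocks, then take each block's maximum.
    blocks = x.reshape(h // 2, 2, w // 2, 2)
    return blocks.max(axis=(1, 3))

x = np.array([[1, 2, 5, 6],
              [3, 4, 7, 8],
              [9, 8, 1, 0],
              [7, 6, 3, 2]])
pooled = max_pool_2x2(x)
print(pooled)
```

Each spatial dimension is halved, which is why the pooling layers in the model later in this post shrink the feature maps.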

- Dense is a fully connected layer; its activation function defaults to linear (that is, no activation is applied).

- An activation function can be applied through a separate activation layer, or by passing the activation argument when constructing the layer.
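The two ways of applying an activation give the same result. The NumPy sketch below mimics what a fully connected layer computes; `dense` and `relu` are hypothetical helpers written for this example, not Keras functions. In Keras the corresponding choices would be `layers.Dense(64, activation='relu')` versus `layers.Dense(64)` followed by `layers.Activation('relu')`.

```python
import numpy as np

def dense(x, w, b, activation=None):
    """A fully connected layer: x @ w + b, optionally followed by an activation."""
    y = x @ w + b
    return y if activation is None else activation(y)

def relu(x):
    return np.maximum(x, 0.0)

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))                   # batch of 4 inputs with 8 features
w, b = rng.normal(size=(8, 3)), np.zeros(3)   # weights and bias for 3 output units

# Option 1: activation passed to the layer itself.
fused = dense(x, w, b, activation=relu)
# Option 2: a linear layer followed by a separate activation step.
separate = relu(dense(x, w, b))

print(np.allclose(fused, separate))
```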

I. Load the data

Keras provides a function for loading the dataset:

import tensorflow as tf

from tensorflow.keras import datasets, layers, models

import matplotlib.pyplot as plt

import numpy as np

(train_images, train_labels), (test_images, test_labels) = datasets.mnist.load_data()

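Check the data dimensions:

train_images.shape, test_images.shape, train_labels.shape, test_labels.shape

'''

Output: ((60000, 28, 28), (10000, 28, 28), (60000,), (10000,))

'''

The training set has 60,000 images of 28x28 pixels and 60,000 labels; the test set has 10,000 images of 28x28 pixels and 10,000 labels.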

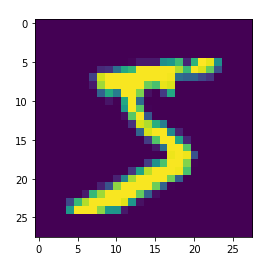

plt.imshow(train_images[0])  # Display the first training image

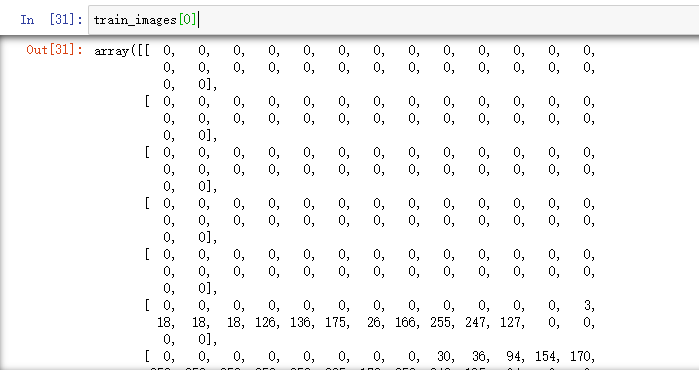

train_images[0]  # Inspect the raw pixel values

Check the maximum and minimum values:

print(np.max(train_images))

print(np.min(train_images))

# 255

# 0

So the pixel values lie in the range [0, 255].

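II. Data preprocessing

1. First, use reshape to add a channel dimension to each image, so that every sample has the (height, width, channels) layout the convolution layers expect:

# Reshape the data into the format we need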

train_images = train_images.reshape((60000, 28, 28, 1))

test_images = test_images.reshape((10000, 28, 28, 1))

train_images.shape,test_images.shape,train_labels.shape,test_labels.shape

"""

Output: ((60000, 28, 28, 1), (10000, 28, 28, 1), (60000,), (10000,))

"""

2. Normalize the data by scaling the input values from [0, 255] to [0, 1]:

# Scale the pixel values to the range 0 to 1.

train_images, test_images = train_images / 255.0, test_images / 255.0

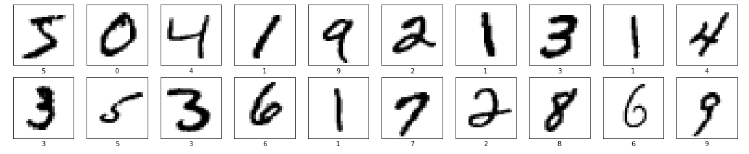
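The two preprocessing steps above can be checked on a small stand-in array without downloading MNIST. This is a sketch under that assumption: the random `images` array is made up, and `images[..., np.newaxis]` is shown only as an equivalent alternative to the hard-coded reshape.

```python
import numpy as np

rng = np.random.default_rng(0)
# A stand-in for MNIST: 3 fake grayscale images of 28x28 pixels, values 0..255.
images = rng.integers(0, 256, size=(3, 28, 28), dtype=np.uint8)

with_channel = images.reshape((3, 28, 28, 1))   # same pattern as in the post
same_thing = images[..., np.newaxis]            # equivalent, without hard-coded sizes

scaled = with_channel / 255.0                   # uint8 -> float in [0, 1]

print(with_channel.shape, scaled.dtype)
print(np.array_equal(with_channel, same_thing))
print(scaled.min() >= 0.0, scaled.max() <= 1.0)
```

The reshape only adds an axis of length 1; no pixel value changes until the division.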

3. Visualize the data

plt.figure(figsize=(20,10))

for i in range(20):

    plt.subplot(5,10,i+1)

    plt.xticks([])

    plt.yticks([])

    plt.grid(False)

    plt.imshow(train_images[i], cmap=plt.cm.binary)

    plt.xlabel(train_labels[i])

plt.show()

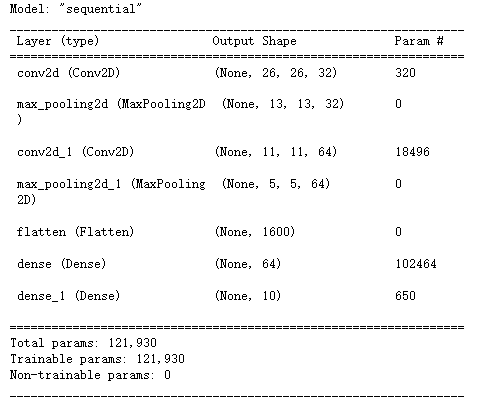

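III. Build the CNN model

model = models.Sequential([

    layers.Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)),  # Convolution layer 1, 3x3 kernel

    layers.MaxPooling2D((2, 2)),                   # Pooling layer 1, 2x2 downsampling

    layers.Conv2D(64, (3, 3), activation='relu'),  # Convolution layer 2, 3x3 kernel

    layers.MaxPooling2D((2, 2)),                   # Pooling layer 2, 2x2 downsampling

    layers.Flatten(),                              # Flatten layer, connects the convolution layers to the dense layers

    layers.Dense(64, activation='relu'),           # Fully connected layer, extracts further features

    layers.Dense(10)                               # Output layer, one raw score (logit) per digit class

])

# Print the network architecture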

model.summary()
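The shapes and parameter counts that model.summary() reports can be worked out by hand. The sketch below assumes the Keras defaults used by the model above (stride-1 convolutions without padding, pooling stride equal to the pool size); the helper functions are hypothetical names for this example.

```python
def conv2d_valid(size, kernel):
    """Output side length of a stride-1 convolution without padding."""
    return size - kernel + 1

def conv2d_params(kernel, in_ch, out_ch):
    """Kernel weights plus one bias per output channel."""
    return kernel * kernel * in_ch * out_ch + out_ch

def dense_params(n_in, n_out):
    """Weight matrix plus one bias per output unit."""
    return n_in * n_out + n_out

side = conv2d_valid(28, 3)      # Conv2D(32, 3x3): 28 -> 26
side //= 2                      # MaxPooling2D(2x2): 26 -> 13
side = conv2d_valid(side, 3)    # Conv2D(64, 3x3): 13 -> 11
side //= 2                      # MaxPooling2D(2x2): 11 -> 5
flat = side * side * 64         # Flatten: 5 * 5 * 64 features

total = (conv2d_params(3, 1, 32) + conv2d_params(3, 32, 64)
         + dense_params(flat, 64) + dense_params(64, 10))
print(flat, total)
```

Most of the parameters sit in the first dense layer, because it connects every flattened feature to each of its 64 units.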

IV. Define the learning objective

model.compile(optimizer='adam',

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

metrics=['accuracy'])

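The compile function sets the learning objective, where:

loss: defines the loss function,

optimizer: specifies the optimization algorithm,

metrics: lists the evaluation metrics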
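To show what the loss configured above measures, here is a NumPy sketch of sparse categorical cross-entropy computed from logits. It is a simplified re-implementation for illustration, not the Keras code; the function name is hypothetical. "Sparse" means the labels are plain integers (0 to 9) rather than one-hot vectors, and from_logits=True means the model outputs raw scores that have not been passed through softmax.

```python
import numpy as np

def sparse_categorical_crossentropy_from_logits(logits, labels):
    """Mean cross-entropy between integer labels and softmax(logits)."""
    # Subtract the row maximum for numerical stability before exponentiating.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Pick the log-probability of the true class for every sample.
    picked = log_probs[np.arange(len(labels)), labels]
    return -picked.mean()

logits = np.zeros((2, 10))    # a model that is equally unsure about all 10 digits
labels = np.array([3, 7])
loss = sparse_categorical_crossentropy_from_logits(logits, labels)
print(loss, np.log(10))
```

With uniform scores the loss equals ln(10), which is roughly the value to expect from an untrained 10-class model.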

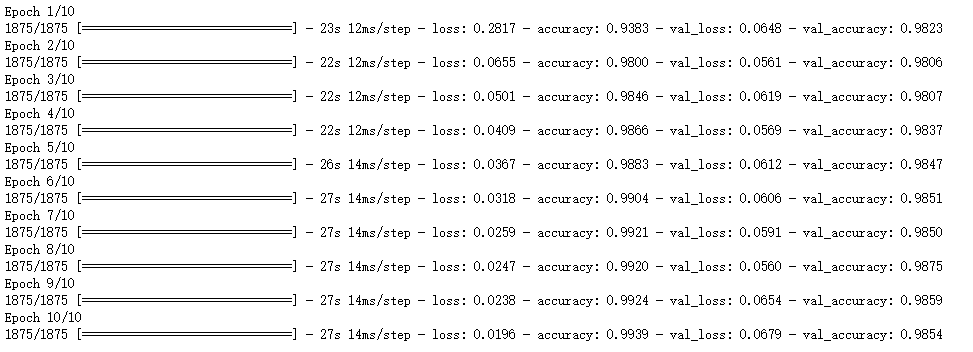

V. Train the model

Here we pass in the training dataset (images and labels), the validation dataset (images and labels), and the number of epochs.

history = model.fit(train_images, train_labels, epochs=10,

validation_data=(test_images, test_labels))
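The call above does not set batch_size, so Keras falls back to its default of 32. Under that assumption, the number of weight updates per epoch, which is the step count shown in the training progress bar, can be estimated as follows.

```python
import math

num_train = 60000    # training images, from the shapes printed earlier
batch_size = 32      # Keras default when fit() is called without batch_size
epochs = 10

steps_per_epoch = math.ceil(num_train / batch_size)
total_updates = steps_per_epoch * epochs
print(steps_per_epoch, total_updates)
```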

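VI. Evaluate the model

Call the evaluate function on the test set. It returns a list, score, whose first element is the model's loss and whose second element is its accuracy.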

score= model.evaluate(test_images,test_labels)

print("Test loss:",score[0])

print("Test accuracy:",score[1])

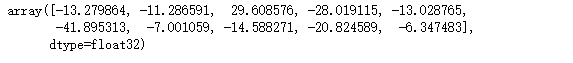
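The accuracy reported by evaluate is the fraction of samples whose highest-scoring class matches the label. A minimal NumPy sketch with made-up logits for four samples and three classes:

```python
import numpy as np

# Made-up model outputs: one row of scores per sample.
logits = np.array([[0.1, 2.0, -1.0],
                   [1.5, 0.2,  0.3],
                   [0.0, 0.1,  3.0],
                   [2.0, 2.5,  0.0]])
labels = np.array([1, 0, 2, 0])

predicted = logits.argmax(axis=1)          # most likely class per sample
accuracy = (predicted == labels).mean()    # fraction of correct predictions
print(predicted, accuracy)
```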

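VII. Make predictions

Output the prediction for the second image in the test set (index 1):

plt.imshow(test_images[1])

pre = model.predict(test_images) # Predict on all test images

pre[1] # Prediction for the second test image (index 1)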

The value at index 2 (the third position) is the largest, so the predicted digit is 2.

Get the predicted class:

np.argmax(pre[1])

# 2

Check the label:

test_labels[1]

# 2

The prediction is correct.
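Because the output layer is Dense(10) without an activation, the values in pre[1] are raw logits rather than probabilities. If probabilities are wanted, a softmax can be applied afterwards; the predicted class stays the same. The sketch below uses made-up logits rather than real model output, and in TensorFlow the same conversion is available as tf.nn.softmax.

```python
import numpy as np

def softmax(z):
    """Convert a vector of logits into probabilities that sum to 1."""
    e = np.exp(z - z.max())   # shift by the maximum for numerical stability
    return e / e.sum()

# Made-up logits for one image; real values would come from model.predict.
example_logits = np.array([-3.1, 0.4, 9.2, 1.0, -5.0, -2.2, -1.7, 0.3, 0.9, -4.0])
probs = softmax(example_logits)

print(probs.argmax(), example_logits.argmax())
print(probs.sum())
```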


>- This post is a study log from the [365天深度学习训练营](https://mp.weixin.qq.com/s/k-vYaC8l7uxX51WoypLkTw) (365-Day Deep Learning Training Camp)

>- Reference article: 深度学习100例-卷积神经网络(CNN)实现mnist手写数字识别 | 第1天_K同学啊的博客-CSDN博客
