
[Object Detection] Small scripts: extract training-set images and labels, and update the index

2022-08-10 13:21:00 【zstar-_】

Problem scenario

While working on an object detection task, I wanted to pull out only the training-set images so I could run data augmentation on them with an external tool. This means the training-set images and labels have to be extracted according to the split listed in train.txt.

Implementation

I tested this on the VOC dataset. The implementation is straightforward:

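```python
import os
import shutil

if __name__ == '__main__':
    img_src = r"D:\Dataset\VOC2007\images"
    xml_src = r"D:\Dataset\VOC2007\Annotations"
    img_out = "image_out/"
    xml_out = "xml_out/"
    txt_path = r"D:\Dataset\VOC2007\ImageSets\Segmentation\train.txt"

    # shutil.copy into "image_out/" fails if the folder does not exist yet
    os.makedirs(img_out, exist_ok=True)
    os.makedirs(xml_out, exist_ok=True)

    # Read the split file: one image ID per line
    with open(txt_path, 'r') as f:
        line_list = f.readlines()

    for line in line_list:
        line_new = line.strip()  # drop the trailing newline
        if not line_new:
            continue  # skip blank lines
        shutil.copy(os.path.join(img_src, line_new + ".jpg"), img_out)
        shutil.copy(os.path.join(xml_src, line_new + ".xml"), xml_out)
```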

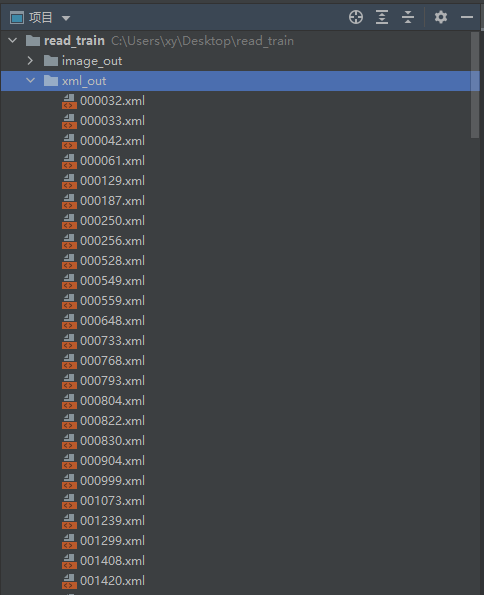

效果:
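The script above stops at the first ID whose `.jpg` or `.xml` is missing. As a minimal sketch of a more tolerant variant (the function name `extract_split` is hypothetical, and it assumes the same `<id>.jpg` / `<id>.xml` naming as the script), the same loop can collect the missing IDs and keep going:

```python
import shutil
from pathlib import Path


def extract_split(split_file, img_src, xml_src, img_out, xml_out):
    """Copy <id>.jpg and <id>.xml for every ID listed in split_file.

    IDs whose image or label is missing are collected and returned instead of
    raising, so one bad entry does not abort the whole copy.
    """
    img_src, xml_src = Path(img_src), Path(xml_src)
    img_out, xml_out = Path(img_out), Path(xml_out)
    img_out.mkdir(parents=True, exist_ok=True)
    xml_out.mkdir(parents=True, exist_ok=True)

    # One image ID per line; blank lines are ignored
    lines = Path(split_file).read_text().splitlines()
    ids = [line.strip() for line in lines if line.strip()]

    missing = []
    for image_id in ids:
        img = img_src / f"{image_id}.jpg"
        xml = xml_src / f"{image_id}.xml"
        if not (img.is_file() and xml.is_file()):
            missing.append(image_id)
            continue
        shutil.copy(img, img_out)
        shutil.copy(xml, xml_out)
    return missing
```

Called with the paths from the script, e.g. `extract_split(txt_path, img_src, xml_src, img_out, xml_out)`, it returns the skipped IDs so they can be checked before augmentation.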

Update the training set index

After running data augmentation, I dropped the generated images and labels into the VOC folders, mixed in with the originals.
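Merging by hand can silently overwrite an original file if the augmentation tool reuses a name. A minimal sketch of a safer merge, assuming generated images are `.jpg` and labels are `.xml` with matching stems; `copy_generated` and its folder arguments are hypothetical names. It copies rather than moves, so the generated label folder stays intact for the indexing step that follows.

```python
import shutil
from pathlib import Path


def copy_generated(gen_img_dir, gen_xml_dir, voc_img_dir, voc_xml_dir):
    """Copy generated <name>.jpg / <name>.xml pairs into the VOC folders.

    Existing VOC files are never overwritten: names that already exist are
    skipped and returned so they can be renamed before merging.
    """
    gen_img_dir, gen_xml_dir = Path(gen_img_dir), Path(gen_xml_dir)
    voc_img_dir, voc_xml_dir = Path(voc_img_dir), Path(voc_xml_dir)
    clashes = []
    for xml in sorted(gen_xml_dir.glob("*.xml")):
        img = gen_img_dir / f"{xml.stem}.jpg"
        if not img.is_file():
            continue  # label without a matching image: leave it for manual inspection
        if (voc_img_dir / img.name).exists() or (voc_xml_dir / xml.name).exists():
            clashes.append(xml.stem)
            continue
        # copy2 also keeps file timestamps; the generated folders are left untouched
        shutil.copy2(img, voc_img_dir / img.name)
        shutil.copy2(xml, voc_xml_dir / xml.name)
    return clashes
```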

Then I wrote a script that appends the names of the generated images to train.txt.

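```python
import os

if __name__ == '__main__':
    xml_src = r"C:\Users\xy\Desktop\read_train\xml_out_af"  # labels produced by augmentation
    txt_path = r"D:\Dataset\VOC2007\ImageSets\Segmentation\train.txt"

    # Open train.txt once in append mode and add each label's name without its extension
    with open(txt_path, 'a') as f:
        for name in os.listdir(xml_src):
            if name.endswith('.xml'):
                f.write(os.path.splitext(name)[0] + "\n")
```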

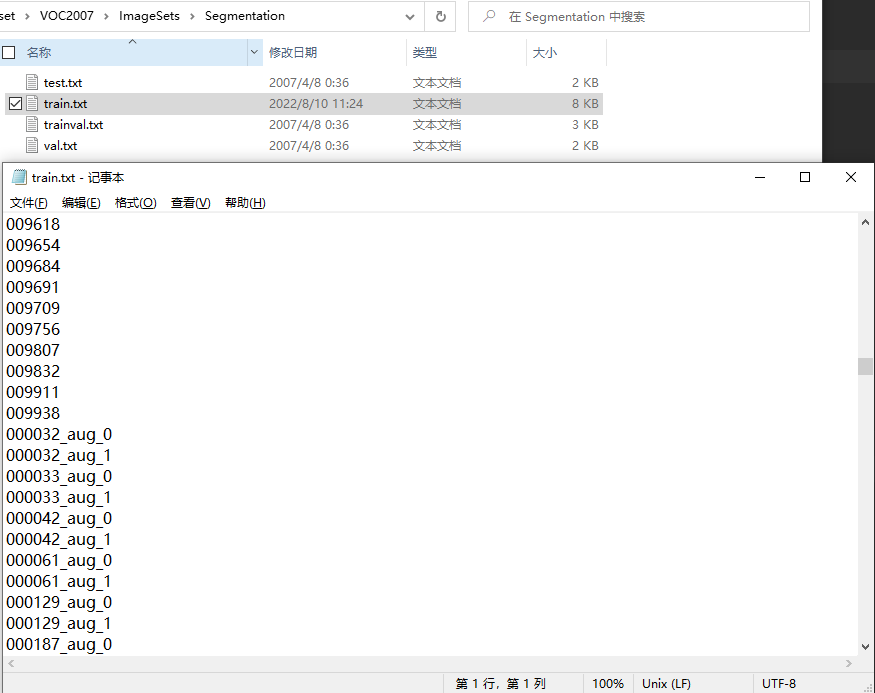

效果:
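Running the append script twice adds every name twice, and if train.txt does not end with a newline the first new name gets glued onto the last existing ID. A minimal sketch that guards against both (the helper name `append_ids` is hypothetical; it assumes one ID per line, as in the VOC split files):

```python
import os


def append_ids(txt_path, xml_dir):
    """Append the stem of each .xml file in xml_dir to txt_path, skipping IDs already listed.

    Returns the IDs that were appended, in sorted order.
    """
    content = ""
    if os.path.exists(txt_path):
        with open(txt_path) as f:
            content = f.read()
    seen = set(content.split())  # IDs already present in the split file

    new_ids = []
    for name in sorted(os.listdir(xml_dir)):
        stem, ext = os.path.splitext(name)
        if ext.lower() == ".xml" and stem not in seen:
            new_ids.append(stem)
            seen.add(stem)

    with open(txt_path, "a") as f:
        # If the last existing line has no '\n', start a new line first
        if new_ids and content and not content.endswith("\n"):
            f.write("\n")
        for stem in new_ids:
            f.write(stem + "\n")
    return new_ids
```

Re-running it on the same folder appends nothing, so it is safe to call after every augmentation round.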

Finally, run the xml2txt script from my earlier VOC blog post again:

```python
import os
import xml.etree.ElementTree as ET

sets = ['train', 'test', 'val']
Imgpath = r'D:\Dataset\VOC2007\images'  # image folder
xmlfilepath = r'D:\Dataset\VOC2007\Annotations'  # folder holding the XML annotations
ImageSets_path = r'D:\Dataset\VOC2007\ImageSets\Segmentation'
Label_path = r'D:\Dataset\VOC2007'

classes = ['aeroplane', 'bicycle', 'bird', 'boat', 'bottle', 'bus', 'car', 'cat', 'chair', 'cow', 'diningtable', 'dog',
           'horse', 'motorbike', 'person', 'pottedplant', 'sheep', 'sofa', 'train', 'tvmonitor']


def convert(size, box):
    """Convert a VOC box (xmin, xmax, ymin, ymax) in pixels to a YOLO box
    (x_center, y_center, width, height) normalized by the image size (w, h)."""
    dw = 1. / size[0]
    dh = 1. / size[1]
    x = (box[0] + box[1]) / 2.0
    y = (box[2] + box[3]) / 2.0
    w = box[1] - box[0]
    h = box[3] - box[2]
    return (x * dw, y * dh, w * dw, h * dh)


def convert_annotation(image_id):
    """Write labels/<image_id>.txt in YOLO format from Annotations/<image_id>.xml."""
    in_path = os.path.join(xmlfilepath, '%s.xml' % image_id)
    out_path = os.path.join(Label_path, 'labels', '%s.txt' % image_id)
    root = ET.parse(in_path).getroot()
    size = root.find('size')
    w = int(size.find('width').text)
    h = int(size.find('height').text)
    with open(out_path, 'w') as out_file:
        for obj in root.iter('object'):
            # Generated XML files may omit <difficult>; treat a missing tag as 0
            difficult = obj.find('difficult')
            difficult = int(difficult.text) if difficult is not None else 0
            cls = obj.find('name').text
            if cls not in classes or difficult == 1:
                continue
            cls_id = classes.index(cls)
            xmlbox = obj.find('bndbox')
            b = (float(xmlbox.find('xmin').text), float(xmlbox.find('xmax').text),
                 float(xmlbox.find('ymin').text), float(xmlbox.find('ymax').text))
            bb = convert((w, h), b)
            out_file.write(str(cls_id) + " " + " ".join([str(a) for a in bb]) + '\n')


if __name__ == '__main__':
    # Create <Label_path>/labels, the folder convert_annotation writes into
    os.makedirs(os.path.join(Label_path, 'labels'), exist_ok=True)
    for image_set in sets:
        with open(os.path.join(ImageSets_path, '%s.txt' % image_set)) as f:
            image_ids = f.read().strip().split()
        # <Label_path>/<set>.txt lists the full image path of every sample in the split
        with open(os.path.join(Label_path, '%s.txt' % image_set), 'w') as list_file:
            for image_id in image_ids:
                list_file.write(os.path.join(Imgpath, '%s.jpg' % image_id) + '\n')
                convert_annotation(image_id)
```

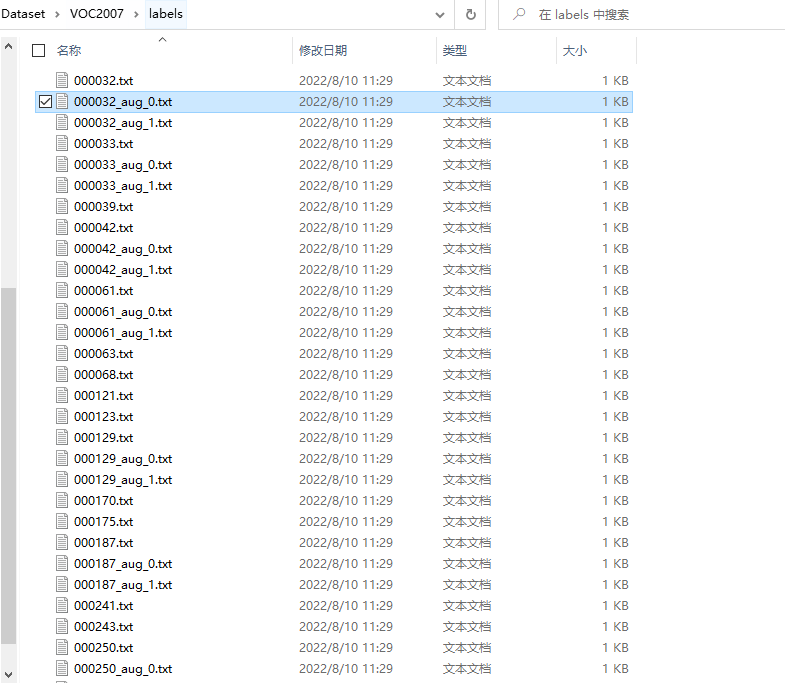

After running it, you can see that the generated augmented samples have been added to the original dataset.
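For reference, `convert` turns a VOC box (xmin, xmax, ymin, ymax) in pixels into normalized YOLO values x = (xmin + xmax) / (2W), y = (ymin + ymax) / (2H), w = (xmax - xmin) / W, h = (ymax - ymin) / H, where W and H are the width and height from `<size>`. For a 500 x 400 image and the box x = 100..300, y = 50..250, that gives 0.4, 0.375, 0.4 and 0.5. The check that follows restates `convert` and adds a hypothetical inverse, `yolo_to_voc`, which can be handy for spot-checking that generated labels still land on the right pixels:

```python
def convert(size, box):
    """VOC box (xmin, xmax, ymin, ymax) in pixels -> YOLO (x, y, w, h) normalized by size=(W, H)."""
    dw = 1.0 / size[0]
    dh = 1.0 / size[1]
    x = (box[0] + box[1]) / 2.0
    y = (box[2] + box[3]) / 2.0
    w = box[1] - box[0]
    h = box[3] - box[2]
    return (x * dw, y * dh, w * dw, h * dh)


def yolo_to_voc(size, yolo_box):
    """Hypothetical inverse of convert: back to (xmin, xmax, ymin, ymax) in pixels."""
    W, H = size
    x, y, w, h = yolo_box
    return (
        (x - w / 2) * W,
        (x + w / 2) * W,
        (y - h / 2) * H,
        (y + h / 2) * H,
    )


if __name__ == "__main__":
    size = (500, 400)  # (width, height) as read from <size>
    box = (100.0, 300.0, 50.0, 250.0)  # xmin, xmax, ymin, ymax
    yolo = convert(size, box)
    # One label line as the script writes it; class id 14 is 'person' in the VOC class list
    print("14 " + " ".join(str(a) for a in yolo))
    print(yolo_to_voc(size, yolo))
```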

边栏推荐

- ABAP file operations involved in the Chinese character set of problems and solutions for trying to read

- Nanodlp v2.2/v3.0 light curing circuit board, connection method of mechanical switch/photoelectric switch/proximity switch and system state level setting

- 娄底污水处理厂实验室建设管理

- Wirshark common operations and tcp three-way handshake process example analysis

- 表中存在多个索引问题? - 聚集索引,回表,覆盖索引

- Loudi Center for Disease Control and Prevention Laboratory Design Concept Description

- NodeJs原理 - Stream(二)

- 递归递推之计算组合数

- Drive IT Modernization with Low Code

- Short read or OOM loading DB. Unrecoverable error, aborting now

猜你喜欢

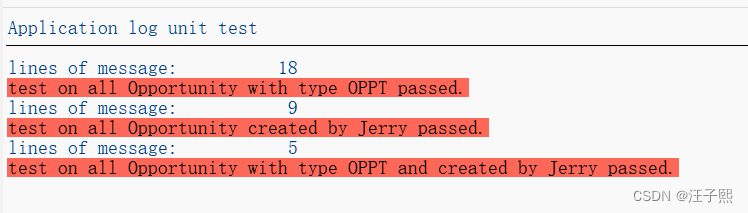

A unit test report for CRM One Order Application log

MySQL面试题整理

把相亲角搬到海外,不愧是咱爸妈

生成树协议STP(Spanning Tree Protocol)

广东10个项目入选工信部2021年物联网示范项目名单

LeetCode·每日一题·640.求解方程·模拟构造

Proprietary cloud ABC Stack, the real strength!

交换机的基础知识

![ArcMAP has a problem of -15 and cannot be accessed [Provide your license server administrator with the following information:Err-15]](/img/da/b49d7ba845c351cefc4efc174de995.png)

ArcMAP has a problem of -15 and cannot be accessed [Provide your license server administrator with the following information:Err-15]

11 + chrome advanced debugging skills, learn to direct efficiency increases by 666%

随机推荐

Keithley DMM7510精准测量超低功耗设备各种运作模式功耗

娄底农产品检验实验室建设指南盘点

Real-time data warehouse practice of Baidu user product flow and batch integration

【jstack、jps命令使用】排查死锁

Jiugongge lottery animation

Ethernet channel 以太信道

娄底疾控中心实验室设计理念说明

Network Saboteur

Short read or OOM loading DB. Unrecoverable error, aborting now

2022年五大云虚拟化趋势

Wirshark common operations and tcp three-way handshake process example analysis

Comparison version number of middle questions in LeetCode

娄底污水处理厂实验室建设管理

DNS欺骗-教程详解

mSystems | 中农汪杰组揭示影响土壤“塑料际”微生物群落的机制

九宫格抽奖动效

矩阵键盘&基于51(UcosII)计算器小项目

Network Saboteur

递归递推之递归的函数

LeetCode简单题之合并相似的物品