
[Point cloud series] Neural Opacity Point Cloud (NOPC)

2022-04-23 13:18:00 【^_^ Min Fei】


1. Summary

PAMI journal paper, 2020

Project page: https://wuminye.github.io/NOPC/

Related topics: image-based rendering (IBR), neural rendering, matting

Key feature: uses a point cloud to improve rendering quality

Work from Jingyi Yu's team

2. Motivation

Conventional image-based opacity hull (IBOH) techniques produce artifacts and ghosting when the views are sampled too sparsely. High-quality geometry alleviates the problem, but for furry objects, obtaining accurate geometry remains a major challenge. Such objects contain thousands of hair fibers. Because the fibers are extremely thin and occlude one another irregularly, they exhibit strongly view-dependent opacity. This opacity is hard to model in both geometry and appearance, and it cannot be fully captured even with the latest 3D scanners.

The proposed method combines image-based rendering (IBR) with neural rendering. It takes a coarse point cloud of the object as input, together with images captured from relatively sparse viewpoints, and renders the photorealistic appearance and accurate opacity of furry objects from free viewpoints. The authors also propose a capture system for acquiring data of real furry objects. Even with a low-quality, incomplete 3D point cloud, the method can still produce photorealistic renderings.

3. Method

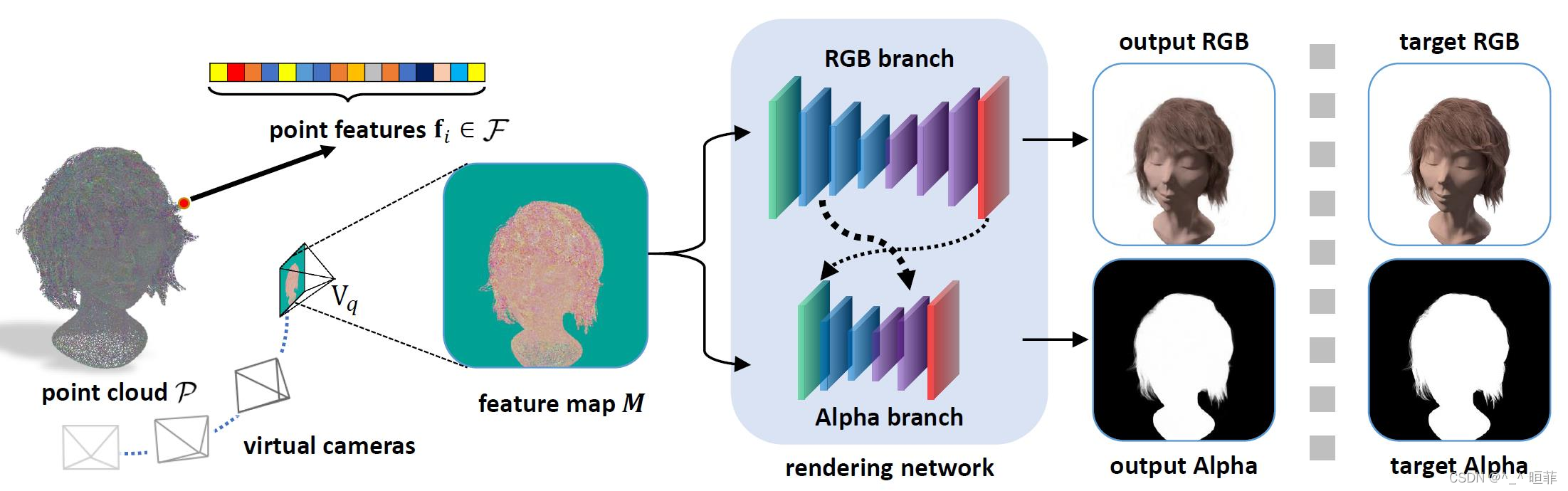

Pipeline overview:

As shown in the figure, the features $F$ are learned from the point cloud $P$. To render a new view $V$, $P$ and $F$ are projected onto $V$ to build a view-dependent feature map $M$. The proposed multi-branch network maps $M$ to an RGB image and an alpha matte at $V$. The network can be trained end to end using ground-truth RGB images and alpha mattes.

Notation:

Point cloud: $P=\{\mathbf{p}_i \in \mathbb{R}^3\}_{i=1}^{n_p}$

Features: $F=\{\mathbf{f}_i \in \mathbb{R}^m\}_{i=1}^{n_p}$, where $n_p$ is the number of points and $n$ is the number of images

$\hat{\mathbf{I}}_q$: the RGB image of the $q$-th view

$\hat{\mathbf{A}}_q$: the alpha matte of the $q$-th view

Camera parameters: viewpoint $V_q$, with intrinsics $\mathbf{K}_q$ and extrinsics $\mathbf{E}_q$

$\Psi$: point projection

$R_{\theta}$: the neural renderer, which generates the RGB image and the alpha matte at viewpoint $V_q$
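Read together, these symbols suggest the following composition. It is a sketch assembled from the definitions listed here, not a formula quoted from the paper. The argument lists of $\Psi$ and $R_{\theta}$, and the use of hatless $\mathbf{I}_q$, $\mathbf{A}_q$ for the network outputs, are assumptions.

$$
\mathbf{M}_q = \Psi(P, F, \mathbf{K}_q, \mathbf{E}_q), \qquad (\mathbf{I}_q, \mathbf{A}_q) = R_{\theta}(\mathbf{M}_q)
$$

Under this reading, training adjusts both the per-point features $F$ and the renderer weights $\theta$ so that the outputs match the captured images and mattes.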

Overall network architecture:

Specifically, NOPC consists of two modules, as shown in Figure 5:

- The first module learns a feature for each 3D point. This feature encodes the local geometry and appearance around the point. Projecting all 3D points and their features to a virtual viewpoint yields the feature map for that viewpoint;

- The second module uses a convolutional neural network to decode the RGB image and the opacity matte from the feature map. The network is based on the U-Net architecture and replaces conventional convolutions with gated convolutions, so that it can robustly handle coarse or broken 3D geometry. On top of the original U-Net hierarchy, a new alpha prediction branch is added alongside the RGB prediction branch, which effectively improves the performance of the whole model.
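To make the gated convolution mentioned above concrete, here is a minimal NumPy sketch. It assumes the common formulation in which a feature convolution is multiplied element-wise by a sigmoid gate computed from a second convolution. The ReLU activation, the function names, and the naive loop-based `conv2d` are illustrative choices, not details taken from NOPC.

```python
import numpy as np

def conv2d(x, w, b):
    """Naive zero-padded 'same' convolution (cross-correlation), odd kernel size.
    x: (C_in, H, W), w: (C_out, C_in, k, k), b: (C_out,)"""
    c_out, _, k, _ = w.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    _, height, width = x.shape
    out = np.zeros((c_out, height, width))
    for i in range(height):
        for j in range(width):
            patch = xp[:, i:i + k, j:j + k]  # (C_in, k, k) window
            out[:, i, j] = np.tensordot(w, patch, axes=3) + b
    return out

def gated_conv2d(x, w_feat, b_feat, w_gate, b_gate):
    """Feature branch modulated by a learned soft mask in (0, 1)."""
    feat = conv2d(x, w_feat, b_feat)
    gate = 1.0 / (1.0 + np.exp(-conv2d(x, w_gate, b_gate)))  # sigmoid gate
    return np.maximum(feat, 0.0) * gate  # ReLU is an assumed activation
```

The gate lets the layer suppress pixels where the projected point cloud has holes or noise, which is the intuition behind using it for coarse geometry.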

RGB encoder and decoder:

U-Net architecture with gated convolutions (in place of ordinary convolutions), which improves denoising and hole filling

Encoder: 1 convolution block + 4 downsampling blocks (each halves the spatial size and doubles the channels)

Decoder: 4 upsampling blocks (back to the same size as $\mathbf{M}_q$) + 1 convolution block (3 output channels)

Alpha encoder and decoder:

The alpha matte is very sensitive to low-level features such as image gradients and edges.

Encoder: 1 convolution block + 2 downsampling blocks (with only 2/3 of the channels of the RGB encoder)

Decoder: same depth as the alpha encoder, with 2 upsampling blocks + 1 convolution block
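The block counts above can be turned into a quick shape calculation. In this sketch the 256×256 input size and the base width of 48 channels are made-up values for illustration. It also assumes that the alpha encoder doubles its channels per downsampling block, as the RGB encoder does.

```python
def encoder_shapes(height, width, base_channels, num_down):
    """Return (H, W, C) after the first conv block and after each downsampling block."""
    shapes = [(height, width, base_channels)]
    h, w, c = height, width, base_channels
    for _ in range(num_down):
        h, w, c = h // 2, w // 2, c * 2  # halve the size, double the channels
        shapes.append((h, w, c))
    return shapes

# Hypothetical sizes: 256x256 feature map, 48 base channels for the RGB branch.
rgb = encoder_shapes(256, 256, base_channels=48, num_down=4)
# Alpha branch: 2/3 of the RGB channel width and only 2 downsampling blocks.
alpha = encoder_shapes(256, 256, base_channels=48 * 2 // 3, num_down=2)

print(rgb[-1])    # deepest RGB encoder level
print(alpha[-1])  # deepest alpha encoder level
```

The shallower alpha branch stays at a higher resolution, which fits the remark that alpha depends on low-level cues such as edges.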

Data preprocessing:

Calibration: compute the extrinsic parameters of the $i$-th camera at the $f$-th frame; each camera and frame pair corresponds to one viewpoint $V_q$.

Matting: remove the background.

Parameters:

$\varepsilon$: 0.2

$j$: pixel position

View-dependent feature map:

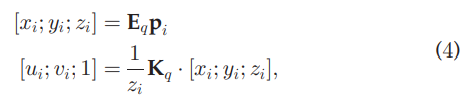

Given the point cloud $P$ with its features $F$, a viewpoint $V_q$ with center of projection $\mathbf{c}_q$, and known camera parameters $\mathbf{K}_q$ and $\mathbf{E}_q$, each point $\mathbf{p}_i$ is projected as follows:

Here $[x, y, z]$ are the ordinary 3D coordinates and $[u, v]$ are the coordinates of $\mathbf{p}_i$ after projection.
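As an illustration of this projection step, the sketch below uses the standard pinhole camera model. It assumes $\mathbf{E}_q$ is a 3×4 world-to-camera matrix $[R \mid t]$, $\mathbf{K}_q$ is a 3×3 intrinsic matrix, and $[x, y, z]$ are camera-space coordinates. These conventions and the function name `project_points` are assumptions, not taken from the article.

```python
import numpy as np

def project_points(points, K, E):
    """Project world-space points into an image with a pinhole camera.

    points: (n, 3) world coordinates
    K:      (3, 3) intrinsics
    E:      (3, 4) extrinsics [R | t], world to camera (assumed convention)
    Returns pixel coordinates (n, 2) and camera-space depth z (n,).
    """
    homo = np.hstack([points, np.ones((len(points), 1))])  # (n, 4) homogeneous
    cam = homo @ E.T                                       # (n, 3): [x, y, z]
    pix = cam @ K.T                                        # (n, 3) before the divide
    uv = pix[:, :2] / pix[:, 2:3]                          # perspective divide by z
    return uv, cam[:, 2]

K = np.array([[100.0, 0.0, 50.0],
              [0.0, 100.0, 40.0],
              [0.0, 0.0, 1.0]])
E = np.hstack([np.eye(3), np.zeros((3, 1))])  # camera at the world origin
uv, z = project_points(np.array([[0.0, 0.0, 2.0], [1.0, 0.0, 2.0]]), K, E)
```

A point on the optical axis lands on the principal point (50, 40) here, and the returned depth is what a visibility test would compare.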

The view-dependent feature map $\mathbf{M}_q$, which has $m+3$ channels, is then computed according to Equations (4) and (5),

where $\vec{d_i}=\frac{\mathbf{p}_i-\mathbf{c}_q}{\|\mathbf{p}_i-\mathbf{c}_q\|_2}$ and $S_i = \{ (u,v) \mid \mathbf{p}_i \text{ is the point visible at } (u,v) \}$.

Gradient loss : = fi + f0 Gradient of

Here $\rho(\cdot)$ keeps only the first $m$ dimensions of a vector.
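The feature-map construction can be sketched as a simple z-buffer splat. This is one plausible reading of the description, not the authors' implementation. It assumes each visible point writes its $m$-dimensional feature followed by its unit view direction $\vec{d_i}$ into a single pixel, and that the loss gradient for a feature is the sum of $\rho(\cdot)$ of the feature-map gradient over $S_i$. All function names are hypothetical.

```python
import numpy as np

def rasterize_features(uv, depth, feats, points, cam_center, height, width):
    """Build an (H, W, m+3) map: per pixel, the nearest point's feature and view direction."""
    n, m = feats.shape
    dirs = points - cam_center
    dirs = dirs / np.linalg.norm(dirs, axis=1, keepdims=True)  # unit directions d_i
    fmap = np.zeros((height, width, m + 3))
    zbuf = np.full((height, width), np.inf)
    index = np.full((height, width), -1)  # id of the visible point, -1 if empty
    cols = np.round(uv[:, 0]).astype(int)
    rows = np.round(uv[:, 1]).astype(int)
    for i in range(n):
        r, c = rows[i], cols[i]
        if depth[i] <= 0 or not (0 <= r < height and 0 <= c < width):
            continue  # behind the camera or outside the image
        if depth[i] < zbuf[r, c]:  # keep only the closest point per pixel
            zbuf[r, c] = depth[i]
            index[r, c] = i
            fmap[r, c, :m] = feats[i]
            fmap[r, c, m:] = dirs[i]
    return fmap, index

def feature_gradients(grad_map, index, n, m):
    """Sum rho(dL/dM[u, v]) over the pixels S_i where point i is visible."""
    grad_feats = np.zeros((n, m))
    rows, cols = np.nonzero(index >= 0)
    # rho: keep only the first m channels (the direction channels carry no feature gradient)
    np.add.at(grad_feats, index[rows, cols], grad_map[rows, cols, :m])
    return grad_feats
```

Occluded points receive a zero gradient in this sketch, so only features that contributed to the rendered view would be updated.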

Neural opacity rendering

Loss function:

$\Omega(\mathbf{A}_q, \mathbf{G})$: the mask applied to an image $\mathbf{I}$ with $\mathbf{G}$, where $\mathbf{G}$ is the intersection of the alpha matte $\mathbf{A}$ and the point cloud depth map.
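The article does not reproduce the loss itself, so the following only illustrates how such a mask could restrict a loss to the object region. Mean squared error stands in for the actual loss terms. The `alpha > 0` and `depth > 0` thresholds, and the convention that empty depth pixels are 0, are assumptions.

```python
import numpy as np

def valid_mask(alpha, depth):
    """G: pixels that are both non-transparent and covered by the point cloud depth map."""
    return (alpha > 0) & (depth > 0)

def masked_mse(pred, target, mask):
    """Mean squared error over the pixels selected by a boolean (H, W) mask."""
    mask = mask.astype(bool)
    if not mask.any():
        return 0.0  # nothing to compare
    diff = pred[mask] - target[mask]
    return float(np.mean(diff ** 2))

alpha = np.array([[1.0, 0.0], [0.5, 0.0]])
depth = np.array([[2.0, 3.0], [0.0, 0.0]])
G = valid_mask(alpha, depth)
loss = masked_mse(np.zeros((2, 2, 3)), np.full((2, 2, 3), 2.0), G)
```

Restricting the loss this way keeps background pixels from dominating the training signal.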

4. Experiments

NOPC has a wide range of applications. It can be used in the content capture and rendering pipelines of virtual reality (VR) and augmented reality (AR), so that objects with opacity that is hard to model (for example human hair and plush toys) can be displayed realistically in any virtual 3D scene. For example, users could take real-time AR group photos with celebrities, adjusting their scale and position as needed while keeping the result realistic against any background.

Data acquisition and processing:

See the project page for details of the datasets.

Main datasets: hair and fur.

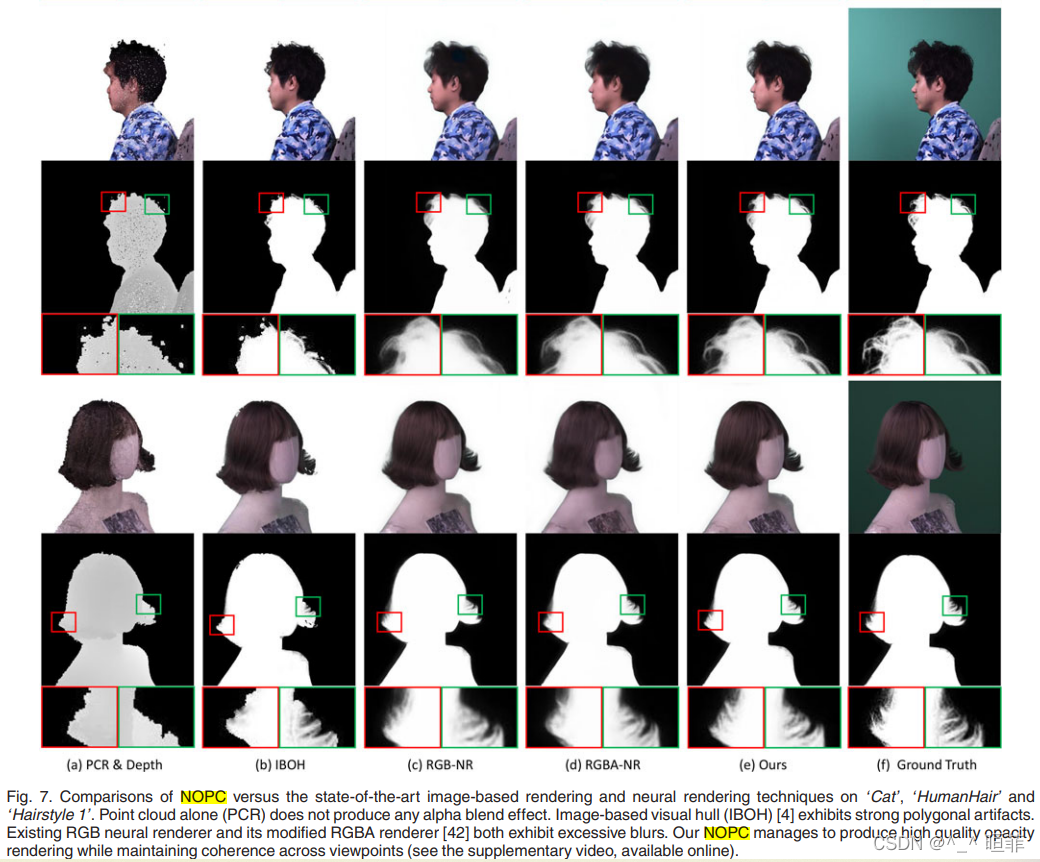

Experimental results:

5. Conclusion

Rendering: good images + poor geometry

Reconstruction: learning-based features, matching, proxy estimation, optimization

Neural representation = neural modeling + rendering

In short, this paper tells us that a poor point cloud + good images = many good rendered images.

Copyright notice

This article was written by [^_^ Min Fei]. Please include a link to the original when reposting. Thanks.

https://yzsam.com/2022/04/202204230611136837.html

边栏推荐

- AUTOSAR from introduction to mastery 100 lectures (86) - 2F of UDS service foundation

- [dynamic programming] 221 Largest Square

- Proteus 8.10 installation problem (personal test is stable and does not flash back!)

- Design of body fat detection system based on 51 single chip microcomputer (51 + OLED + hx711 + US100)

- mui picker和下拉刷新冲突问题

- FatFs FAT32 learning notes

- Mui close other pages and keep only the first page

- Wu Enda's programming assignment - logistic regression with a neural network mindset

- playwright控制本地穀歌瀏覽打開,並下載文件

- 十万大学生都已成为猿粉,你还在等什么?

猜你喜欢

![[51 single chip microcomputer traffic light simulation]](/img/70/0d78e38c49ce048b179a85312d063f.png)

[51 single chip microcomputer traffic light simulation]

SPI NAND flash summary

hbuilderx + uniapp 打包ipa提交App store踩坑记

MySQL 8.0.11 download, install and connect tutorials using visualization tools

How do ordinary college students get offers from big factories? Ao Bing teaches you one move to win!

Request和Response及其ServletContext总结

![[quick platoon] 215 The kth largest element in the array](/img/14/8cd1c88a7c664738d67dcaca94985d.png)

[quick platoon] 215 The kth largest element in the array

SHA512 / 384 principle and C language implementation (with source code)

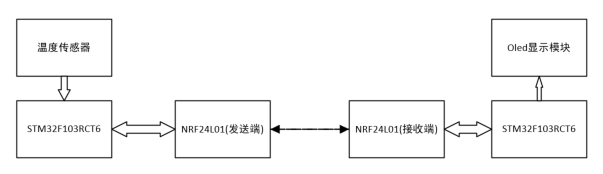

Design of STM32 multi-channel temperature measurement wireless transmission alarm system (industrial timing temperature measurement / engine room temperature timing detection, etc.)

three. JS text ambiguity problem

随机推荐

R语言中dcast 和 melt的使用 简单易懂

web三大组件之Servlet

Data warehouse - what is OLAP

5 tricky activity life cycle interview questions. After learning, go and hang the interviewer!

RTOS mainstream assessment

普通大学生如何拿到大厂offer?敖丙教你一招致胜!

Design and manufacture of 51 single chip microcomputer solar charging treasure with low voltage alarm (complete code data)

Super 40W bonus pool waiting for you to fight! The second "Changsha bank Cup" Tencent yunqi innovation competition is hot!

melt reshape decast 长数据短数据 长短转化 数据清洗 行列转化

榜样专访 | 孙光浩:高校俱乐部伴我成长并创业

"Xiangjian" Technology Salon | programmer & CSDN's advanced road

MySQL basic statement query

"Play with Lighthouse" lightweight application server self built DNS resolution server

(personal) sorting out system vulnerabilities after recent project development

鸿蒙系统是抄袭?还是未来?3分钟听完就懂的专业讲解

Pyqt5 store opencv pictures into the built-in sqllite database and query

Brief introduction of asynchronous encapsulation interface request based on uniapp

你和42W奖金池,就差一次“长沙银行杯”腾讯云启创新大赛!

decast id.var measure. Var data splitting and merging

MySQL5. 5 installation tutorial