A detailed look at ten functional features of Kettle, an ETL tool

2022-04-22 05:12:00 【Think of the source after drinking water 09】

Summary

Kettle is an open-source ETL tool from outside China, written in pure Java, that runs on Windows, Linux, and Unix.

Put plainly, to master Kettle you first need to understand the essential features and functions of ETL tools in general. Today we mainly cover those general functions.

ETL tool function 1: Connectivity

Any ETL tool should be able to connect to a wide range of data sources and formats. For the most commonly used relational database systems, it should also provide native connections (such as OCI for Oracle). An ETL tool should offer at least the following basic capabilities:

(1) Connect to ordinary relational databases and retrieve data, such as the common Oracle, MS SQL Server, IBM DB2, Ingres, MySQL, and PostgreSQL, among many others.

(2) Retrieve data from delimited and fixed-format ASCII files.

(3) Retrieve data from XML files.

(4) Retrieve data from popular office software, such as Access databases and Excel spreadsheets.

(5) Retrieve data over FTP, SFTP, or SSH (preferably without resorting to scripts).

(6) Retrieve data from web services or RSS feeds. If data from ERP systems such as Oracle E-Business Suite, SAP R/3, PeopleSoft, or JD Edwards is also needed, the ETL tool should provide connectivity to these systems as well.

(7) Kettle can also provide input steps for Salesforce.com and SAP R/3, but these are not part of the standard package and must be installed separately. Extracting data from other ERP and financial systems requires other solutions. The most common approach, of course, is to have these systems export their data as text and use the text files as the data source.
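Item (2) above, reading delimited and fixed-format text, can be sketched in a few lines of plain Python. This is only an illustration of the idea, not how Kettle implements its text file input. The function names, the `;` delimiter, and the column layout are assumptions made for the example.

```python
import csv
import io


def read_delimited(text, delimiter=";"):
    """Parse delimited text whose first line holds the field names."""
    return [dict(row) for row in csv.DictReader(io.StringIO(text), delimiter=delimiter)]


def read_fixed_width(text, layout):
    """Parse fixed-format text.

    layout is a list of (field_name, start, end) character offsets;
    this layout format is a made-up convention for the sketch.
    """
    rows = []
    for line in text.splitlines():
        if line.strip():
            rows.append({name: line[start:end].strip() for name, start, end in layout})
    return rows


delimited_text = "id;name\n1;Alice\n2;Bob\n"
fixed_text = "001Alice     \n002Bob       \n"
layout = [("id", 0, 3), ("name", 3, 13)]

print(read_delimited(delimited_text))
print(read_fixed_width(fixed_text, layout))
```

Both readers return a list of dictionaries, so later steps can treat the rows the same way regardless of the file format they came from.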

ETL tool function 2: Platform independence

An ETL tool should be able to run on any platform, or even on a combination of platforms. A 32-bit operating system may work well in the early stages of development, but as data volumes grow, a more powerful operating system is needed. In another common situation, development is done on Windows or Mac machines while the production environment is a Linux system or cluster. Your ETL solution should be able to move seamlessly between these systems.

ETL tool function 3: Data scale

ETL tools generally handle large data volumes in the following three ways.

- Concurrency: the ETL process can handle multiple data streams at the same time, taking advantage of modern multi-core hardware.

- Partitioning: the ETL tool can use a specific partitioning scheme to distribute data across concurrent streams.

- Clustering: the ETL process can be spread across multiple machines that complete the work together.

Kettle is a Java-based solution and can run on any computer with a Java virtual machine installed (including Windows, Linux, and Mac). Every step in a transformation runs concurrently, and a step can be run in multiple copies, which speeds up processing.

When running a transformation, Kettle can send data to multiple data streams in different ways, depending on the user's settings. There are two ways of sending: distribute and copy. Distributing is like dealing playing cards: each row is sent in turn to exactly one data stream. Copying sends each row to every data stream.
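The difference between the two sending modes can be shown with a small sketch. This is plain Python illustrating the concept only. It is not Kettle code, and the function names `distribute` and `copy` are chosen here just to mirror the terms above.

```python
from itertools import cycle


def distribute(rows, n_streams):
    """Deal rows out like playing cards: each row goes to exactly one stream, in turn."""
    streams = [[] for _ in range(n_streams)]
    for row, target in zip(rows, cycle(range(n_streams))):
        streams[target].append(row)
    return streams


def copy(rows, n_streams):
    """Send every row to every stream."""
    rows = list(rows)
    return [list(rows) for _ in range(n_streams)]


rows = ["r1", "r2", "r3", "r4", "r5"]
print(distribute(rows, 2))  # [['r1', 'r3', 'r5'], ['r2', 'r4']]
print(copy(rows, 2))        # both streams hold all five rows
```

With distribution the streams share the workload. With copying each stream sees the full data set, which suits cases where the same rows feed different downstream processing.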

To control data flow more precisely, Kettle also offers partitioning, which sends rows with the same characteristics to the same data stream. Partitioning here is only conceptually similar to database partitioning.

Kettle has no feature for partitioning the database itself.
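A common way to send rows with the same characteristics to the same stream is to hash a key field and take the result modulo the number of streams. The sketch below assumes that scheme for illustration. The source does not say which partitioning rules Kettle uses, and the `partition` function and field names are hypothetical.

```python
import zlib


def partition(rows, key, n_streams):
    """Route each row to a stream chosen from a stable hash of row[key].

    Rows with equal key values always land in the same stream.
    zlib.crc32 is used because it gives the same result in every run.
    """
    streams = [[] for _ in range(n_streams)]
    for row in rows:
        index = zlib.crc32(str(row[key]).encode("utf-8")) % n_streams
        streams[index].append(row)
    return streams


rows = [
    {"customer": "alice", "amount": 10},
    {"customer": "bob", "amount": 20},
    {"customer": "alice", "amount": 30},
    {"customer": "carol", "amount": 40},
]
for i, stream in enumerate(partition(rows, "customer", 3)):
    print(i, stream)
```

Unlike round-robin distribution, this guarantees that all rows for one customer meet in the same stream, which matters for per-key work such as aggregation or deduplication.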

ETL tool function 4: Design flexibility

An ETL tool should leave developers free to work as they see fit, rather than constraining their creativity and design needs with a fixed approach. ETL tools can be divided into process-based and mapping-based tools.

Mapping-based tools provide only a fixed set of steps between source and target data, which severely limits design freedom. They are generally easy to use and quick to learn, but for more complex tasks, process-based tools are the better choice.

Kettle is a process-based tool: you can create custom steps and transformations according to the actual data and requirements.

ETL tool function 5: Reusability

ETL transformations, once designed, should be reusable. This is very important. Copying and pasting existing transformation steps is the most common form of reuse, but it is not true reuse.

Kettle has a Mapping (sub-transformation) step that enables transformation reuse: it lets one transformation serve as a sub-transformation of another. In addition, a transformation can be used multiple times in multiple jobs, and a job can likewise be a sub-job of other jobs.

ETL tool function 6: Extensibility

As is well known, almost all ETL tools provide scripting, so that problems the tool itself cannot solve can be solved by programming. In addition, a few ETL tools allow components to be added through an API or other means, or allow functions written in a scripting language to be called by other transformations or scripts.

Kettle provides all of the above. The JavaScript step can be used to develop JavaScript scripts. Save such a script as a transformation and, through the Mapping (sub-transformation) step, it becomes a standard reusable function. In fact, this is not limited to scripts: every transformation can be reused through a Mapping (sub-transformation), much like creating a component. Kettle is extensible by design and provides a plug-in platform. This plug-in architecture allows third parties to develop plug-ins for the Kettle platform.

All components in Kettle, even those provided by default, are in fact plug-ins. The only difference between a third-party plug-in and a built-in Pentaho plug-in is technical support. Suppose you buy a third-party plug-in (for example, a SugarCRM connector): technical support is provided by the third party rather than by Pentaho.

ETL tool function 7: Data transformation

A large part of any ETL project is data transformation. Between input and output, the data has to be validated, joined, split, merged, transposed, sorted, cloned, deduplicated, filtered, deleted, replaced, or otherwise processed.

Data transformation requirements differ widely between organizations, projects, and solutions, so it is hard to say which transformation functions an ETL tool should provide at a minimum. Nevertheless, commonly used ETL tools (including Kettle) all provide the following basic integration functions:

- Slowly changing dimensions

- Value lookup

- Row/column pivoting

- Conditional splitting

- Sorting, merging, and joining

- Aggregation
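Three of the functions listed above (value lookup, conditional splitting, and aggregation) can be sketched as ordinary functions over rows. This is a plain Python illustration with made-up sample data and function names. It does not reproduce any Kettle step.

```python
from collections import defaultdict

# Sample data, invented for this sketch.
orders = [
    {"order_id": 1, "country_code": "DE", "amount": 120.0},
    {"order_id": 2, "country_code": "FR", "amount": 80.0},
    {"order_id": 3, "country_code": "DE", "amount": 40.0},
    {"order_id": 4, "country_code": "XX", "amount": 15.0},
]
countries = {"DE": "Germany", "FR": "France"}


def lookup_country(rows, table):
    """Value lookup: add the country name, or None when the code is unknown."""
    return [{**row, "country": table.get(row["country_code"])} for row in rows]


def split_rows(rows, predicate):
    """Conditional split: matching rows go to the first output, the rest to the second."""
    matched, rejected = [], []
    for row in rows:
        (matched if predicate(row) else rejected).append(row)
    return matched, rejected


def total_by(rows, key, value):
    """Aggregation: sum the value field per distinct key."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[key]] += row[value]
    return dict(totals)


enriched = lookup_country(orders, countries)
valid, unknown = split_rows(enriched, lambda row: row["country"] is not None)
totals = total_by(valid, "country", "amount")
print(totals)                              # {'Germany': 160.0, 'France': 80.0}
print([row["order_id"] for row in unknown])  # [4]
```

Chaining the three functions mirrors how steps are wired together in a process-based tool: each one takes rows in and hands rows on.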

ETL tool function 8: Testing and debugging

Testing is usually divided into black-box testing (also called functional testing) and white-box testing (structural testing).

In black-box testing, the ETL transformation is treated as a black box: the tester does not know what happens inside it, only the input and the expected output.

In white-box testing, the tester must know the internal workings of the transformation and design test cases that check whether specific transformations produce specific results.

Debugging is in fact part of white-box testing. It lets developers or testers run a transformation step by step and find out where the problem lies.
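A black-box test in the sense described above needs only a known input and the expected output. The sketch below uses a hypothetical transformation, `normalize_customers`, written purely so that there is something to test. The test itself never looks inside it.

```python
def normalize_customers(rows):
    """Hypothetical transformation under test.

    Drops rows without an id, trims names, and upper-cases country codes.
    """
    result = []
    for row in rows:
        if row.get("id") is None:
            continue
        result.append({
            "id": row["id"],
            "name": row["name"].strip(),
            "country": row["country"].upper(),
        })
    return result


def test_normalize_customers_black_box():
    """Black-box test: compare actual output with expected output only."""
    given = [
        {"id": 1, "name": "  Alice ", "country": "de"},
        {"id": None, "name": "Ghost", "country": "fr"},
        {"id": 2, "name": "Bob", "country": "FR"},
    ]
    expected = [
        {"id": 1, "name": "Alice", "country": "DE"},
        {"id": 2, "name": "Bob", "country": "FR"},
    ]
    assert normalize_customers(given) == expected


test_normalize_customers_black_box()
print("black-box test passed")
```

A white-box test of the same transformation would instead target its internal branches, for example one case written specifically to exercise the missing-id check.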

ETL tool function 9: Lineage analysis and impact analysis

Any ETL tool should have one important capability: reading the metadata of transformations, that is, extracting information about the data flow formed by the different transformations.

Lineage analysis and impact analysis are two related features based on this metadata.

Lineage is a backward-tracing mechanism: it shows where the data came from.

Impact analysis is another metadata-based analysis method: it shows how source data affects downstream transformations and target tables.
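If the metadata is reduced to a list of "source feeds target" edges, lineage and impact analysis become the same graph traversal run in opposite directions. The sketch below assumes that simplified metadata model. The table names and edge list are invented, and real tools store far richer metadata.

```python
from collections import deque

# Hypothetical data-flow metadata: (source, target) pairs.
EDGES = [
    ("crm.customers", "stg_customers"),
    ("erp.orders", "stg_orders"),
    ("stg_customers", "dim_customer"),
    ("stg_customers", "fact_sales"),
    ("stg_orders", "fact_sales"),
]


def _reachable(start, edges, forward):
    """Breadth-first search following edges forward or backward."""
    neighbours = {}
    for source, target in edges:
        a, b = (source, target) if forward else (target, source)
        neighbours.setdefault(a, []).append(b)
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for nxt in neighbours.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def lineage(target, edges=EDGES):
    """Everything the target was derived from (trace backward)."""
    return _reachable(target, edges, forward=False)


def impact(source, edges=EDGES):
    """Everything downstream that depends on the source (trace forward)."""
    return _reachable(source, edges, forward=True)


print(sorted(lineage("fact_sales")))
print(sorted(impact("crm.customers")))
```

Here `lineage("fact_sales")` answers "where did this table's data come from?", while `impact("crm.customers")` answers "what is affected if this source changes?".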

ETL tool function 10: Logging and auditing

The purpose of a data warehouse is to provide an accurate source of information, so the data in it must be reliable and trustworthy. To ensure this reliability and to record all data transformation operations, ETL tools should provide logging and auditing capabilities.

Logging records which steps were executed during a transformation, including the start and end timestamps of each step.

Auditing tracks all operations performed on the data, including the number of rows read, rows transformed, and rows written.
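The two ideas can be combined in one small wrapper that runs a step and records its timestamps and row counts. This is a minimal sketch in plain Python. The `run_step` helper and the record layout are assumptions for illustration, not Kettle's logging tables.

```python
import time


def run_step(name, func, rows, audit_log):
    """Run one step and append a log/audit record for it.

    The record holds start and end timestamps (logging) and the
    number of rows read and written (auditing).
    """
    rows = list(rows)
    started = time.time()
    result = list(func(rows))
    finished = time.time()
    audit_log.append({
        "step": name,
        "started": started,
        "finished": finished,
        "rows_read": len(rows),
        "rows_written": len(result),
    })
    return result


audit_log = []
rows = [{"id": 1, "amount": 10}, {"id": 2, "amount": -5}, {"id": 3, "amount": 7}]

positive = run_step(
    "filter_positive",
    lambda rs: [r for r in rs if r["amount"] > 0],
    rows,
    audit_log,
)
doubled = run_step(
    "double_amount",
    lambda rs: [{**r, "amount": r["amount"] * 2} for r in rs],
    positive,
    audit_log,
)

for entry in audit_log:
    print(entry["step"], entry["rows_read"], entry["rows_written"])
```

Comparing `rows_written` of one step with `rows_read` of the next is a simple way to spot rows that went missing between steps.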

Copyright notice

This article was written by [Think of the source after drinking water 09]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204210627384224.html

边栏推荐

- MySQL view character set and proofing rules

- 【Pytorch】torch. Bmm() method usage

- Request and response objects

- Analyzing redis distributed locks from the perspective of source code

- Pydeck enables efficient visual rendering of millions of data points

- Vs2019 official free print control

- Image segmentation using deep learning: a survey

- Nexus private server - (III) using private server warehouse in project practice

- Autojs automation script how to develop on the computer, detailed reliable tutorial!!!

- [boutique] using dynamic agent to realize unified transaction management

猜你喜欢

Multi level cache architecture for 100 million traffic

How to modify the IP address of the rancher server

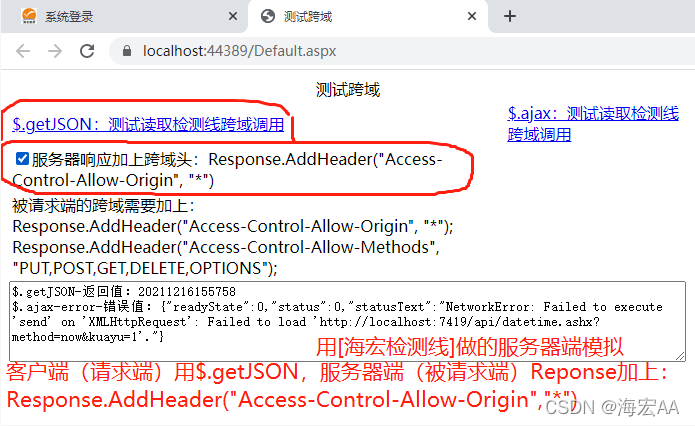

Summary of browser cross domain problems

Learn from me: how to release your own plugin or package in China

Programme de démarrage Spark: WordCount

![[I. XXX pest detection project] 2. Trying to improve the network structure: resnet50, Se, CBAM, feature fusion](/img/78/c50eaa02e26ef7c5e88ec8ad92ab13.png)

[I. XXX pest detection project] 2. Trying to improve the network structure: resnet50, Se, CBAM, feature fusion

![[I. XXX pest detection items] 3. Loss function attempt: focal loss](/img/91/5771f6d4b73663c75da68056207640.png)

[I. XXX pest detection items] 3. Loss function attempt: focal loss

![[Reading Notes - > statistics] 07-02 introduction to the concept of discrete probability distribution binomial distribution](/img/32/d57325bf91b34761f85829aa7cbb78.png)

[Reading Notes - > statistics] 07-02 introduction to the concept of discrete probability distribution binomial distribution

PyTorch搭建双向LSTM实现时间序列预测(负荷预测)

VIM is so difficult to use, why are so many people keen?

随机推荐

Summary of 2019 personal collection Framework Library

Considerations for importing idea package and calling its methods

style/TextAppearance. Compat. Notification. Info) not found.

Western dichotomy and Eastern trisection

Speech feature extraction of emo-db dataset

Transaction isolation level and mvcc

Joint type and type protection

PyTorch搭建双向LSTM实现时间序列预测(负荷预测)

数据库(二)MySQL表的增删改查(基础)

What kind of programming language is this to insert into the database

Nexus私服——(三) 在项目实战中使用私服仓库

Summary of browser cross domain problems

How does docker access redis service 6379 port in the host

JS Chapter 12

Vs2019 official free print control

[redis notes] data structure and object: Dictionary

Pytorch builds a two-way LSTM to realize time series forecasting (load forecasting)

Realization of online Preview PDF file function

All conditions that trigger epollin and epollout

在线预览PDF文件功能实现