当前位置:网站首页>Postgraduate Work Weekly (Week 13)

Postgraduate Work Weekly (Week 13)

2022-08-09 16:48:00 【wangyunpeng33】

Tip: After the article is written, the table of contents can be automatically generated. For how to generate it, please refer to the help document on the right

NICE-GAN performance evaluation

Foreword

This week's talk is mainly to look at the source code of the paper, think about some questions, and ask the brothers who have experience in pytorch code and the bloggers you know for some experience. I have a lot of insights and summarize it.

I. How to write deep learning code?

Of course, writing code has always been done as a college student, but the code of deep learning is obviously different from the code of the engineering project I did before. I got the source code of a paper, several main modules model, dataset, trainIt is still necessary to clarify the purpose and logic behind them.

Generally speaking, a better code sequence is to write the model first, then the dataset, and finally the train.

model constitutes the skeleton of the entire deep learning training and inference system, and also determines the input and output formats of the entire AI model.For vision tasks, the model architectures are mostly convolutional neural networks or the latest ViT model; for NLP tasks, the model architectures are mostly Transformer and Bert; for time series prediction, the model architectures are mostly RNN or LSTM.Different models correspond to different data input formats. For example, ResNet generally inputs a multi-channel two-dimensional matrix, while ViT needs to input image patches with position information.After determining what kind of model to use, the input format of the data is also determined.According to the determined input format, we can build the corresponding dataset.

dataset constructs the input and output format of the entire AI model.When writing the dataset component, we need to consider the storage location and storage method of the data, such as whether the data is stored in a distributed manner, whether the model needs to run in the case of multiple machines and multiple cards, and whether there is a bottleneck in the read and write speed.When the bottleneck of reading and writing comes, it is necessary to preload data into memory, etc.When writing the dataset component, we also fine-tune the model component in reverse.For example, after determining the data read and write for distributed training, you need to wrap the model with modules such as nn.DataParallel or nn.DistributedDataParallel, so that the model can run on multiple machines and multiple cards.In addition, the writing of the dataset component also affects the training strategy, which also paved the way for building the train component.For example, according to the size of the video memory, we need to determine the corresponding BatchSize, and the BatchSize directly affects the size of the learning rate.For another example, according to the distribution of data, we need to choose different sampling strategies for Feature Balance, which will also be reflected in the training strategy.

train builds the training strategy and evaluation method of the model, which is the most important and complex component.Building the model and dataset first can add restrictions and reduce the complexity of the train component.In the train component, we need to determine the model update strategy according to the training environment (single-machine multi-card, multi-machine multi-card or federated learning), as well as determine the total training time epochs, the type of optimizer, the size of the learning rate and the decay strategy,The initialization method of the parameters, the model loss function.In addition, in order to combat overfitting and improve generalization, it is also necessary to introduce appropriate regularization methods, such as Dropout, BatchNorm, L2-Regularization, Data Augmentation, etc.Some methods to improve generalization performance can be implemented directly in the train component (such as adding L2-Reg, Mixup), some need to be added to the model (such as Dropout and BatchNorm), and some need to be added to the dataset (such as Data Augmentation).

In addition, train also needs to record some important information of the training process and visualize this information, such as recording the average loss of the training set and the accuracy of the test set at each epoch, and writing this information to tensorboard, and then in theWeb-side real-time monitoring.In the construction of the train component, we need to fine-tune the parameters according to the model performance at any time, and improve the model and dataset components according to the results.

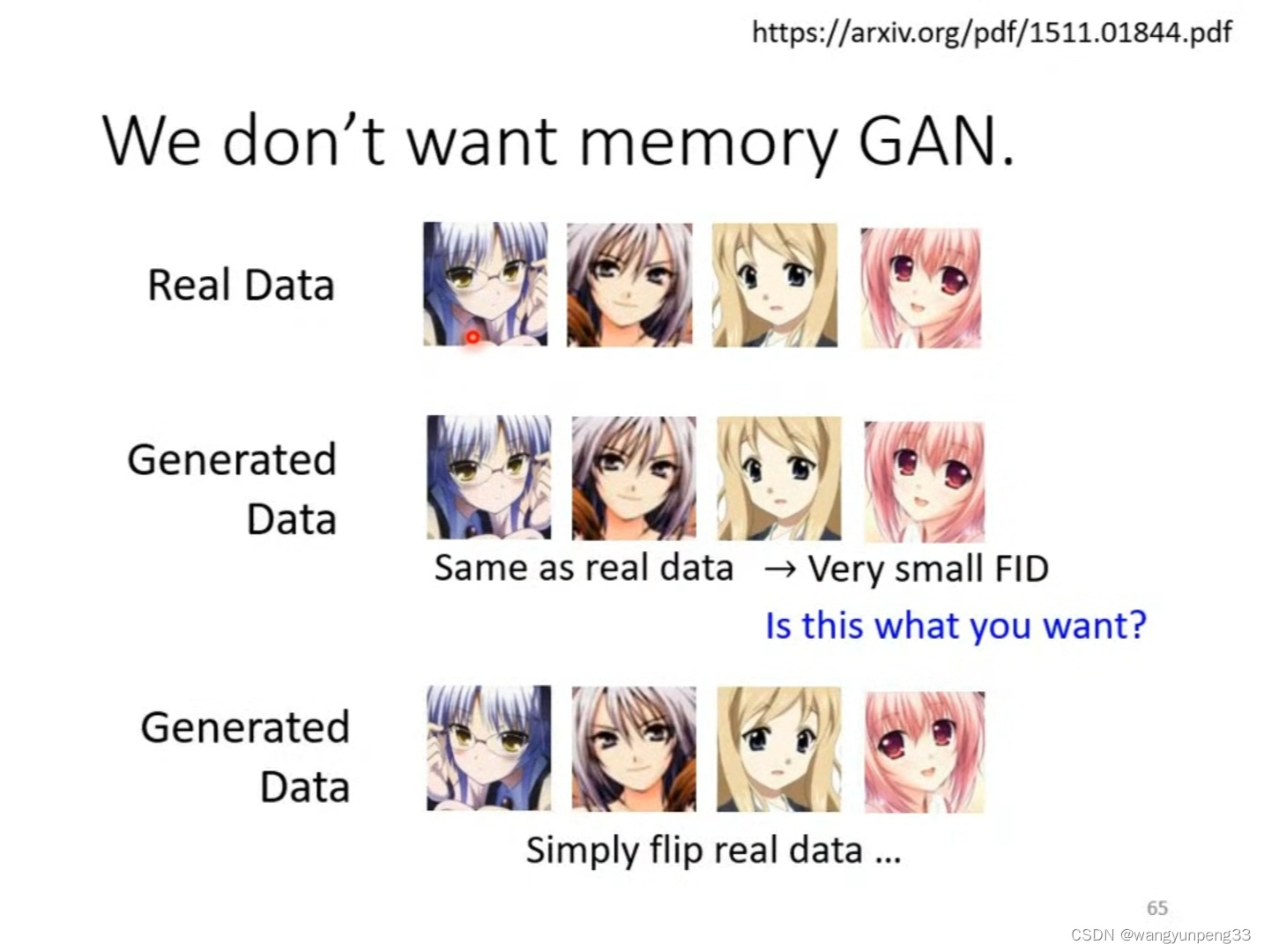

II. Generator Efficiency Evaluation

In fact, generators with large differences in efficiency can be directly identified by the naked eye. For generators with small gaps, the indicators that can quantify performance generally include: Inception Score (KL divergence), FID (calculating distance at feature level),KID (Kernel Unbiased Estimate).

In order to overcome the limitation of a single indicator, the ablation experiment in the paper uses FID+KID to measure the model performance.

Ablation experiment

Separation of key components to verify validity: NICE: No independent for encodingComponents; RA: Add residual connections in the CAM attention module; C0x represents (10 x 10 receptive field), C1x represents intermediate scale (70 x 70 receptive field), C2x represents global scale (286 x 286 receptive field); −: willThe number of shared layers decreases by 1; +: increases by 1.

It can be seen that NICE RA improves performance.

The second is model compression. Whether sharing the first layer encoder or the last layer decoder, it will damage the conversion performance and weaken the domain translation ability.

MMD (Maximum Mean Error)

By shortening the transition path between domains in the latent space, NICE-GAN built upon the shared latent space assumption can probably facilitate domain translation in the image space.

NICE-GAN based on shared latent space hypothesis may help domain transition in image space by shortening the transition path between domains in latent space

Based on the network architecture provided by the paper, NICE-GAN has more affinity than Cycle-GAN when applied to the cat and dog dataset.

(a)input (b)NICE-GAN (c)Cycle-GAN边栏推荐

- 【深度学习】attention机制

- VS2010: devenv.sln solution save dialog appears

- 将从后台获取到的数据 转换成 树形结构数据

- 【深度学习】SVM解决线性不可分情况(八)

- 研究生工作周报(第四周)

- 【论文阅读】LIME:Low-light Image Enhancement via Illumination Map Estimation(笔记最全篇)

- cheerio根据多个class匹配

- 【研究生工作周报】(第十二周)

- LNK1123: Failed during transition to COFF: invalid or corrupt file

- 理解泛型之得到泛型类型

猜你喜欢

封装仿支付宝密码输入效果

【深度学习】梯度下降与梯度爆炸(十)

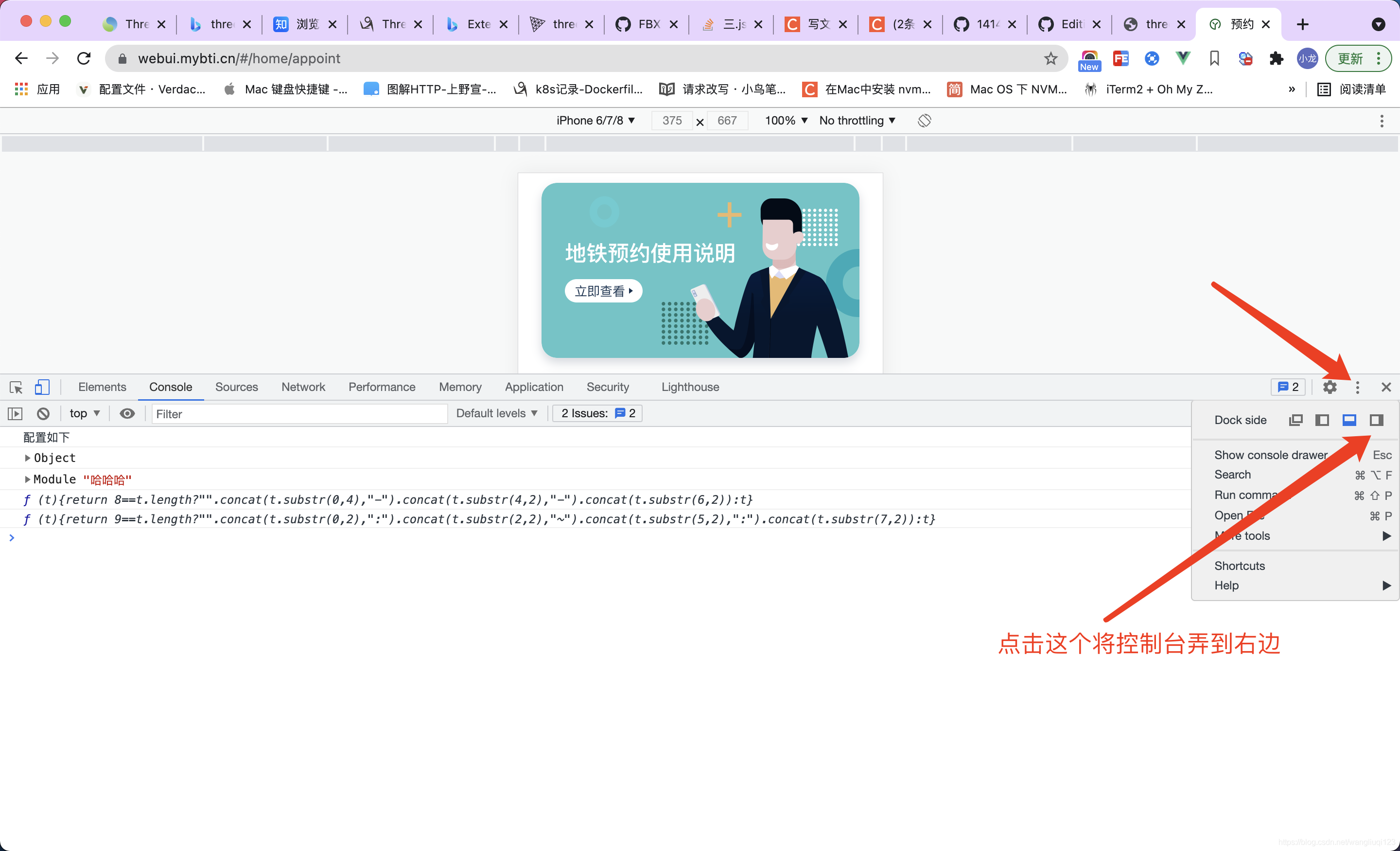

地铁预约Postman脚本使用

用广搜和动态规划写个路径规划程序

OpenCV下载、安装以及使用

Inverted order at the beginning of the C language 】 【 string (type I like Beijing. Output Beijing. Like I)

.Net Core 技巧小结

【研究生工作周报】(第八周)

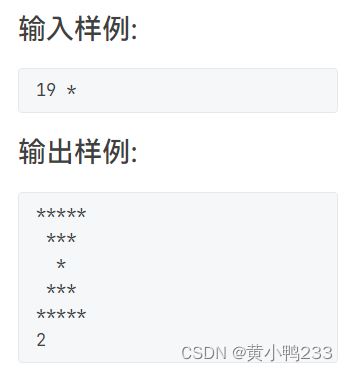

PAT1027 Printing Hourglass

【论文阅读】LIME:Low-light Image Enhancement via Illumination Map Estimation(笔记最全篇)

随机推荐

Example of file operations - downloading and merging streaming video files

Welcome to use CSDN - markdown editor

模型训练的auc和loss比较问题

Qt control - QTextEdit usage record

Noun concept summary (not regularly updated ~ ~)

Entity Framework Core知识小结

鸡生蛋,蛋生鸡问题。JS顶级对象Function,Object关系

【深度学习】梯度下降与梯度爆炸(十)

pyspark.sql之实现collect_list的排序

【研究生工作周报】(第八周)

类别特征编码分类任务选择及效果影响

XGB系列-XGB参数指南

【深度学习】SVM解决线性不可分情况(八)

At the beginning of the C language order 】 【 o least common multiple of three methods

【论文阅读】Deep Learning for Image Super-resolution: A Survey(DL超分辨率最新综述)

The recycle bin has been showed no problem to empty the icon

Region实战SVG地图点击

Several important functional operations of general two-way circular list

地铁预约Postman脚本使用

研究生工作周报