当前位置:网站首页>Practice of unified storage technology of oppo data Lake

Practice of unified storage technology of oppo data Lake

2022-04-23 14:52:00 【From big data to artificial intelligence】

Reading guide

OPPO Is an intelligent terminal manufacturing company , There are hundreds of millions of end users , A lot of text is produced every day 、 picture 、 Unstructured data such as audio and video . In ensuring data connectivity 、 On the premise of real-time and data security management requirements , How low cost 、 Efficiently and fully tap the value of data , It has become a big problem for companies with massive data . At present, the popular solution in the industry is Data Lake , This paper introduces OPPO Self developed data Lake storage CBFS To a large extent, it can solve the current pain points .

Brief description of data Lake

Data Lake definition : A centralized storage warehouse , It stores data in its original data format , Usually binary blob Or documents . A data lake is usually a single data set , Including original data and transformed data ( report form , visualization , Advanced analysis and machine learning )

The value of data storage

Compared with the traditional Hadoop framework , Data lake has the following advantages :

- Highly flexible : Data reading 、 Writing and processing are convenient , All original data can be saved

- Multiple analysis : Support includes batch 、 Flow calculation , Interactive query , Machine learning and other loads

- Low cost : Storage computing resources are expanded independently ; Using object storage , Separation of heat and cold , A lower cost

- Easy to manage : Perfect user management authentication , Compliance and audit , data “ For custody ” Full traceability

OPPO Overall solution of data lake

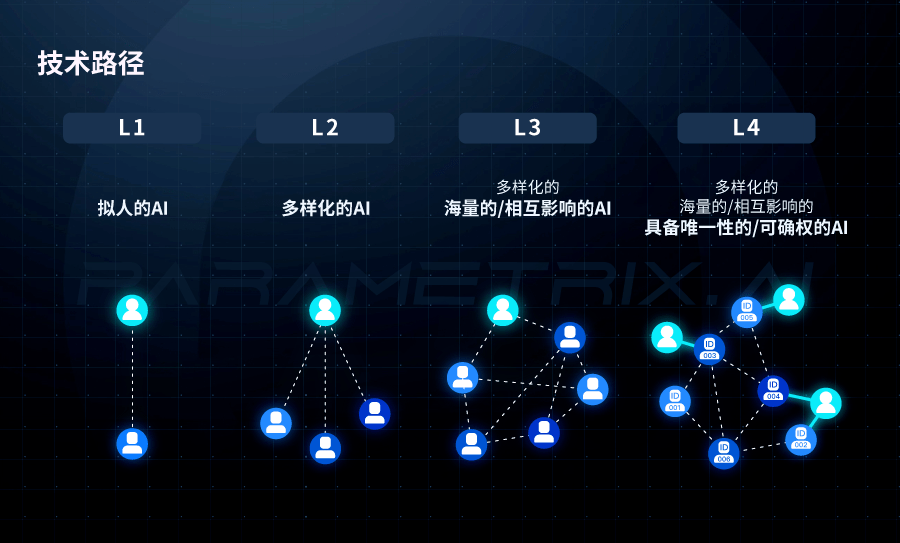

OPPO The data lake is mainly constructed from three dimensions : The bottom Lake , We're going to use CBFS, It is a way to support S3、HDFS、POSIX file 3 Low cost storage of an access protocol ; The middle layer is the real-time data storage format , We used iceberg; The top layer can support a variety of different computing engines

OPPO Characteristics of data Lake architecture

The characteristic of early big data storage is that stream computing and batch computing are stored in different systems , The upgraded architecture unified metadata management , batch 、 Flow computing integration ; At the same time, it provides unified interactive query , The interface is more friendly , Second response , High concurrency , At the same time, it supports data sources Upsert Change operation ; The bottom layer uses large-scale low-cost object storage as a unified data base , Support multi engine data sharing , Improve data reuse capability

Data Lake storage CBFS framework

Our goal is to build something that can support EB Data storage of level data , Solve the problem of data analysis in cost , Performance and experience challenges , The whole data storage lake is divided into six subsystems :

- Protocol access layer : Support for many different protocols (S3、HDFS、Posix file ), Data can be written in one of the protocols , You can also read directly with the other two protocols

- Metadata layer : Render the hierarchical namespace of the file system and the flat namespace of the object , The whole metadata is distributed , Support fragmentation , Linear scalability

- Metadata cache layer : Used to manage metadata cache , Provide metadata access acceleration

- Resource Management : In the picture Master node , Responsible for physical resources ( Data nodes , Metadata node ) And logical resources ( volume / bucket , Data fragmentation , Metadata fragmentation ) Management of

- Multiple replica layers : Support additional write and random write , It is friendly to both large and small objects . One function of the subsystem is to store multiple copies as persistent ; Another function is the data cache layer , Supports elastic replicas , Accelerate data access , Then expand .

- Erasure code storage layer : Can significantly reduce storage costs , It also supports multi availability zone deployment , Support different erasure code models , Easy support EB Level storage scale

Next , Will focus on sharing CBFS Key technologies used , Including high-performance metadata management 、 Erasure code storage 、 And lake acceleration

CBFS key technology

Metadata management

The file system provides a hierarchical namespace view , The logical directory tree of the entire file system is divided into multiple layers , As shown on the right , Each metadata node (MetaNode) Contains hundreds of metadata fragments (MetaPartition), Each slice consists of InodeTree(BTree) and DentryTree(BTree) form , Every dentry Represents a catalog entry ,dentry from parentId and name form . stay DentryTree in , With PartentId and name Make up the index , To store and retrieve ; stay InodeTree in , with inode id Index . Use multiRaft The protocol guarantees high availability and data consistency replication , And each node set will contain a large number of fragment groups , Each slice group corresponds to one raft group; Each slice group belongs to a volume; Each slice group is a volume A metadata range of ( a section inode id ); The metadata subsystem completes dynamic capacity expansion by splitting ; When a slice group resource ( performance 、 Capacity ) Next to the value , The resource manager service estimates an end point , And notify this group of node devices , Only serve data before this point , At the same time, a new group of nodes will be selected , And dynamically add it to the current business system . A single directory supports millions of levels of capacity , Full memory of metadata , Ensure excellent read and write performance , The memory metadata is partitioned through snapshot Persistent to disk for backup and recovery .

Object storage provides a flat namespace ; To visit objectkey by /bucket/a/b/c For example , Start at root , adopt ”/” Separator layer by layer parsing , Find the last Directory (/bucket/a/b) Of Dentry, Finally found /bucket/a/b/c For the Inode, This process involves multiple interactions between nodes , The deeper the level , Poor performance ; So we introduce PathCache The module is used to speed up ObjectKey analysis , The simple way is to PathCache Chinese vs ObjectKey The parent directory of (/bucket/a/b) Of Dentry Do the cache ; Analyzing online clusters, we find that , The average size of the directory is about 100, Suppose the scale of the storage cluster is at the level of 100 billion , Directory entries are just 10 One hundred million , Single machine cache is very efficient , At the same time, the read performance can be improved through different nodes ; Support at the same time ” flat ” and ” level ” Design of namespace management , Compared with other systems in the industry ,CBFS It's simpler to implement , More efficient , You can easily implement a copy of data without any conversion , Multiple protocol access and interworking , And there is no data consistency problem .

Erasure code storage

One of the key technologies to reduce storage cost is erasure code (Erasure Code, abbreviation EC), Briefly introduce the principle of erasure code : take k Raw data , A new... Is obtained by coding calculation m Copy of the data , When k+m No more than... Copies of data are lost arbitrarily m Share time , The original data can be restored by decoding ( It works a bit like a disk raid); Compared with traditional multi replica storage , EC Lower data redundancy , But data durability (durability) Higher ; In fact, there are many different ways , Most are based on XOR or Reed-Solomon(RS) code , our CBFS It also uses RS code

computational procedure :

- Coding matrix , above n The row is the unit matrix I, below m The row is the encoding matrix ;k+m A vector of data blocks , Contains the original data block and m Check blocks

- When a piece is lost : From matrix B Delete the row corresponding to the block , Get a new matrix B’, Then multiply by... On the left B‘ The inverse matrix , You can recover lost blocks , Detailed calculation process, you can read relevant materials offline

ordinary RS There are some problems with coding : Pictured above is an example , hypothesis X1~X6 ,Y1~Y6 For data blocks ,P1 and P2 Is a check block , If any of them is lost , Need to read the rest 12 Blocks to repair data , disk IO Big loss , High bandwidth required for data repair , In a multiple AZ When the deployment , The problem is particularly obvious ; Proposed by Microsoft LRC code , This problem is solved by introducing local check blocks , As shown in the figure , In the original global check block P1 and P2 On the basis of , newly added 2 A local check block PX and PY, hypothesis X1 damage , Just read its associated X1~X6 common 6 One block can fix the data . Statistics show that , In the data center , The probability of single disk failure of a strip in a certain time is 98%,2 The probability of simultaneous disk damage is 1%, therefore LRC In most scenarios, it can greatly improve the efficiency of data repair , But the disadvantage is its non maximum distance separable coding , Can't be like the whole situation RS Any loss of coding m One piece of data can throw all the data back .

EC type

- offline EC: The whole strip k When all data units are filled , The overall calculation generates m Check block

- On-line EC: After receiving the data, split it synchronously and calculate the check block in real time , Write at the same time k Data blocks and m Check blocks

CBFS Span AZ Multimode online EC

CBFS Supports cross AZ Online of multimode strips EC Storage , For different machine room conditions (1/2/3AZ)、 Objects of different sizes 、 Different service availability and data durability requirements , The system can flexibly configure different coding modes In the picture “1AZ-RS” Model as an example ,6 Data block plus 3 Check block list AZ Deploy ; 2AZ-RS Pattern , Adopted 6 Data block plus 10 Check blocks 2AZ Deploy , Data redundancy is 16/6=2.67;3AZ-LRC Pattern , use 6 Data blocks ,6 A global check block plus 3 A local check block pattern ; Different coding modes are supported in the same cluster .

On-line EC Storage architecture

Contains several modules Access: Data access layer , At the same time provide EC Encoding and decoding capabilities CM: Cluster management , The management node , disk , Volume and other resources , Also responsible for migration , Repair , equilibrium , Patrol mission , The same cluster supports different EC Coding modes coexist Allocator: Responsible for volume space allocation EC-Node: Stand alone storage engine , Responsible for the actual storage of data

Correction code write

1、 Streaming data collection 2、 Generate multiple data blocks for data slices , Calculate the check block at the same time 3、 Request storage volume 4、 Distribute data blocks or check blocks to each storage node concurrently Data is written in a simple way NRW agreement , Just ensure the minimum number of written copies , In this way, when the normalized node and network fail , The request will not block , Guarantee availability ; Data reception 、 segmentation 、 Check block coding adopts asynchronous pipeline mode , It also ensures high throughput and low delay .

Erasure code reading

Data reading also takes NRW Model , With k=m=2 Example of coding mode , Just read correctly 2 Block ( Whether it's a data block or a check block ), Fast RS Decode and calculate the original data and ; In addition, to improve availability and reduce latency ,Access Priority will be given to nearby or low load storage nodes EC-Node You can see , On-line EC combination NRW The protocol ensures the strong consistency of data , At the same time, it ensures high throughput and low delay , It is very suitable for big data business model .

Data Lake access acceleration

One of the significant benefits of data Lake architecture is cost savings , However, the memory computing separation architecture will also encounter bandwidth bottlenecks and performance challenges , Therefore, we also provide a series of Access Acceleration technologies : The first is the multi-level cache capability :

- First level cache : Local cache , It is deployed on the same machine as the computing node , Support metadata and data caching , Memory support 、PMem、NVme、HDD Different types of media , It is characterized by low access delay , But less capacity

- Second level cache : Distributed cache , The number of copies is flexible , Provide location awareness , Support user / bucket / Active preheating and passive caching at the object level , The data obsolescence policy can also be configured

The multi-level cache strategy has a good acceleration effect in our machine learning training scenario

In addition, the storage data layer also supports predicate push down , It can significantly reduce a large amount of data flow between storage and computing nodes , Reduce resource overhead and improve computing performance ;

There is still a lot of detailed work to accelerate the data Lake , We are also in the process of continuous improvement

Future outlook

at present CBFS-2.x Version is open source , Support online EC、 Lake acceleration 、 Multi protocol access and other key features 3.0 The version is expected on 2021 year 10 In open source ; follow-up CBFS Will increase the stock HDFS The cluster is mounted directly ( No data relocation ), Cold and hot data, intelligent layering and other characteristics , To support big data and AI Under the original structure, the stock data smoothly enters the lake .

Author's brief introduction : Xiaochun OPPO Storage architect

Reprinted from Xiaochun, Link to the original text :https://blog.csdn.net/weixin_59152315/article/details/119750978.

版权声明

本文为[From big data to artificial intelligence]所创,转载请带上原文链接,感谢

https://yzsam.com/2022/04/202204231450357983.html

边栏推荐

- 想要成为架构师?夯实基础最重要

- [untitled]

- do(Local scope)、初始化器、内存冲突、Swift指针、inout、unsafepointer、unsafeBitCast、successor、

- Programming philosophy - automatic loading, dependency injection and control inversion

- What is the main purpose of PCIe X1 slot?

- I/O复用的高级应用:同时处理 TCP 和 UDP 服务

- Daily question - leetcode396 - rotation function - recursion

- 抑郁症治疗的进展

- Swift: entry of program, swift calls OC@_ silgen_ Name, OC calls swift, dynamic, string, substring

- Detailed comparison between asemi three-phase rectifier bridge and single-phase rectifier bridge

猜你喜欢

![[servlet] detailed explanation of servlet (use + principle)](/img/7e/69b768f85bad14a71ce9fcef922283.png)

[servlet] detailed explanation of servlet (use + principle)

qt之.pro文件详解

![[jz46 translate numbers into strings]](/img/e0/f32249d3d2e48110ed9ed9904367c6.png)

[jz46 translate numbers into strings]

Leetcode165 compare version number double pointer string

在游戏世界组建一支AI团队,超参数的多智能体「大乱斗」开赛

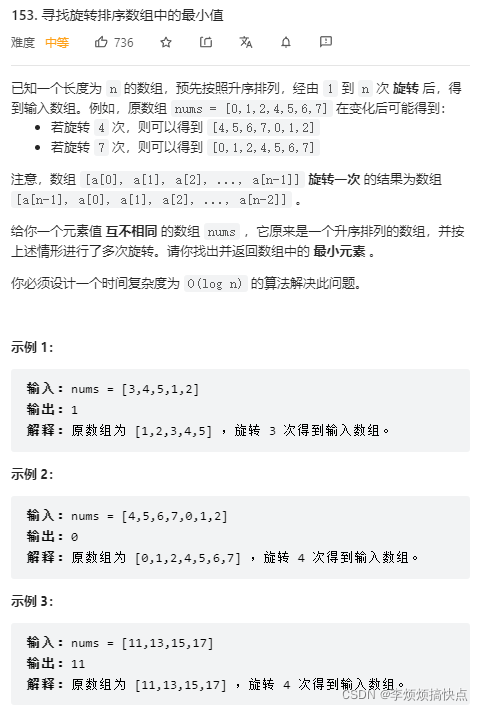

LeetCode153-寻找旋转排序数组中的最小值-数组-二分查找

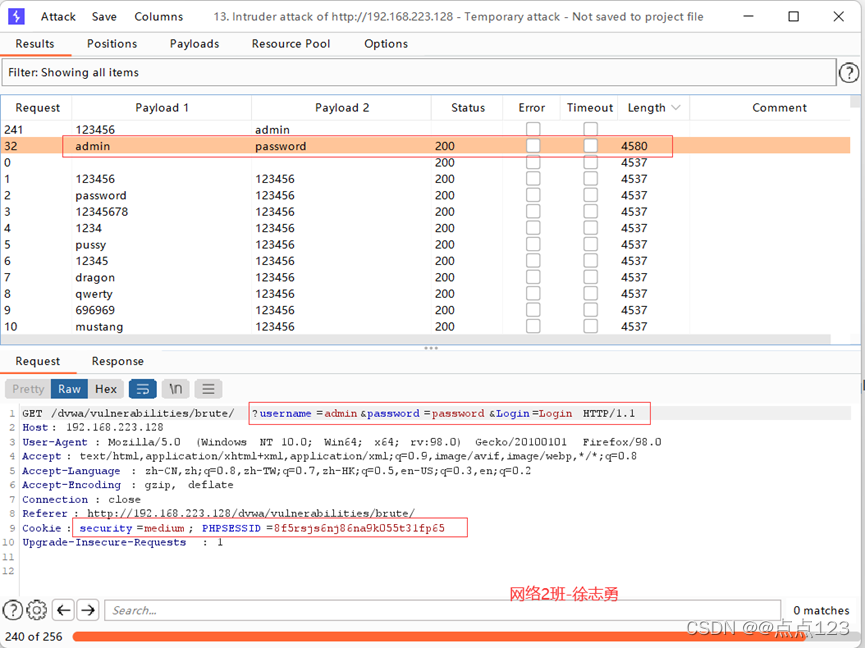

Brute force of DVWA low -- > High

1-初识Go语言

We reference My97DatePicker to realize the use of time plug-in

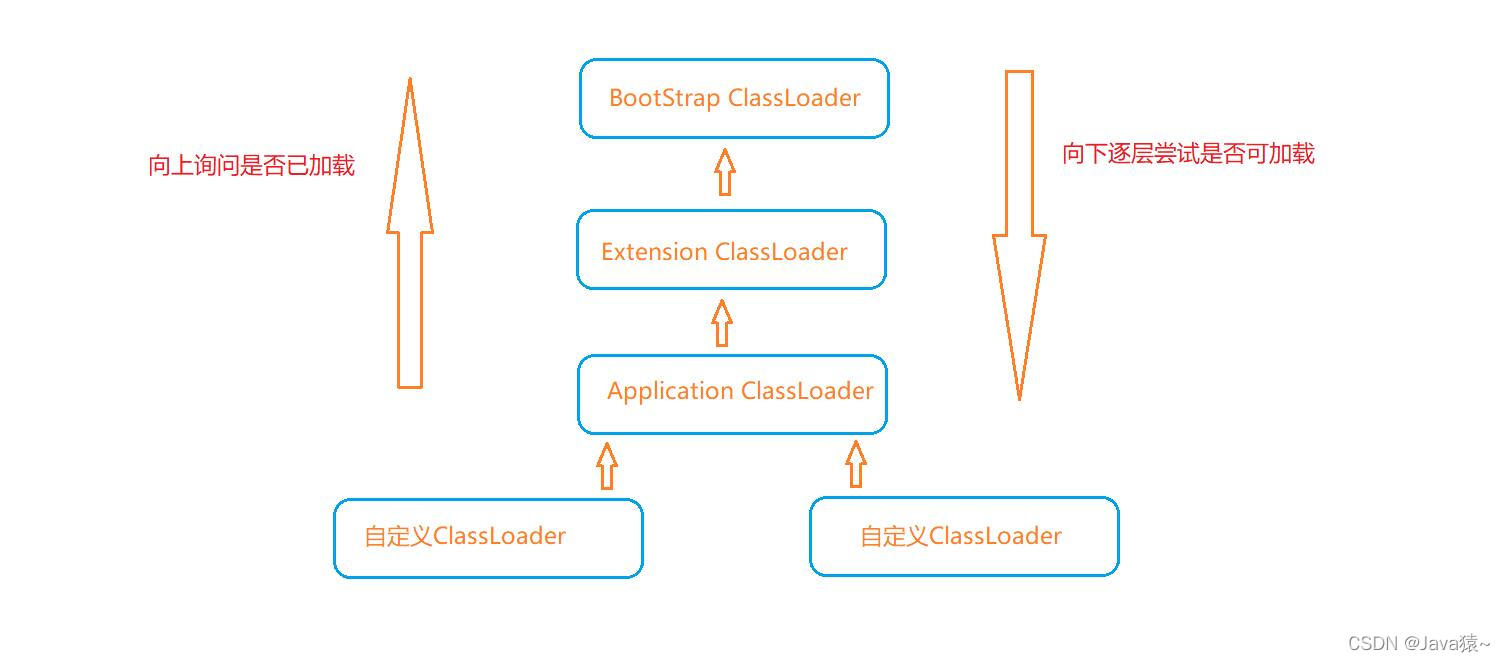

面试官:说一下类加载的过程以及类加载的机制(双亲委派机制)

随机推荐

Find daffodils - for loop practice

ASEMI超快恢复二极管与肖特基二极管可以互换吗

分享 20 个不容错过的 ES6 的技巧

Advanced application of I / O multiplexing: Processing TCP and UDP services at the same time

SVN详细使用教程

Comment eolink facilite le télétravail

Detailed comparison between asemi three-phase rectifier bridge and single-phase rectifier bridge

Set up an AI team in the game world and start the super parametric multi-agent "chaos fight"

epoll 的 ET,LT工作模式———实例程序

QT interface optimization: QT border removal and form rounding

Detailed explanation of C language knowledge points -- first knowledge of C language [1]

想要成为架构师?夯实基础最重要

每日一题-LeetCode396-旋转函数-递推

DVWA之暴力破解(Brute Force)Low-->high

Want to be an architect? Tamping the foundation is the most important

MySQL error packet out of order

一个月把字节,腾讯,阿里都面了,写点面经总结……

【JZ46 把数字翻译成字符串】

Vscode Chinese plug-in doesn't work. Problem solving

Epoll's et, lt working mode -- example program