PySpark on YARN: A Collection of Errors

2022-08-10 15:25:00 【不吃天鹅肉】

Error 1: a non-zero exit code 13. Error file: prelaunch.err. Last 4096 bytes of prelaunch.err :

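This error plagued me for two full days. Submitting in client mode worked fine, but as soon as I switched to cluster mode the job failed with the following diagnostics: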

Application application_1659522163899_1043 failed 2 times due to AM Container for appattempt_1659522163899_1043_000002 exited with exitCode: 13

Failing this attempt.Diagnostics: [2022-08-04 17:47:23.976]Exception from container-launch.

Container id: container_e207_1659522163899_1043_02_000001

Exit code: 13

[2022-08-04 17:47:24.174]Container exited with a non-zero exit code 13. Error file: prelaunch.err.

Last 4096 bytes of prelaunch.err :

Last 4096 bytes of stderr :

22/08/04 17:47:22 INFO util.SignalUtils: Registering signal handler for TERM

22/08/04 17:47:22 INFO util.SignalUtils: Registering signal handler for HUP

22/08/04 17:47:22 INFO util.SignalUtils: Registering signal handler for INT

22/08/04 17:47:22 INFO spark.SecurityManager: Changing view acls to: yarn,hdfs

22/08/04 17:47:22 INFO spark.SecurityManager: Changing modify acls to: yarn,hdfs

22/08/04 17:47:22 INFO spark.SecurityManager: Changing view acls groups to:

22/08/04 17:47:22 INFO spark.SecurityManager: Changing modify acls groups to:

22/08/04 17:47:22 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(yarn, hdfs); groups with view permissions: Set(); users with modify permissions: Set(yarn, hdfs); groups with modify permissions: Set()

22/08/04 17:47:22 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

22/08/04 17:47:23 INFO yarn.ApplicationMaster: ApplicationAttemptId: appattempt_1659522163899_1043_000002

22/08/04 17:47:23 INFO yarn.ApplicationMaster: Starting the user application in a separate Thread

22/08/04 17:47:23 INFO yarn.ApplicationMaster: Waiting for spark context initialization...

22/08/04 17:47:23 ERROR yarn.ApplicationMaster: User application exited with status 1

22/08/04 17:47:23 INFO yarn.ApplicationMaster: Final app status: FAILED, exitCode: 13, (reason: User application exited with status 1)

22/08/04 17:47:23 ERROR yarn.ApplicationMaster: Uncaught exception:

org.apache.spark.SparkException: Exception thrown in awaitResult:

at org.apache.spark.util.ThreadUtils$.awaitResult(ThreadUtils.scala:301)

at org.apache.spark.deploy.yarn.ApplicationMaster.runDriver(ApplicationMaster.scala:509)

at org.apache.spark.deploy.yarn.ApplicationMaster.run(ApplicationMaster.scala:273)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$3.run(ApplicationMaster.scala:913)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$3.run(ApplicationMaster.scala:912)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1878)

at org.apache.spark.deploy.yarn.ApplicationMaster$.main(ApplicationMaster.scala:912)

at org.apache.spark.deploy.yarn.ApplicationMaster.main(ApplicationMaster.scala)

Caused by: org.apache.spark.SparkUserAppException: User application exited with 1

at org.apache.spark.deploy.PythonRunner$.main(PythonRunner.scala:103)

at org.apache.spark.deploy.PythonRunner.main(PythonRunner.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$2.run(ApplicationMaster.scala:737)

22/08/04 17:47:23 INFO yarn.ApplicationMaster: Deleting staging directory hdfs://cluster/user/hdfs/.sparkStaging/application_1659522163899_1043

22/08/04 17:47:23 WARN shortcircuit.DomainSocketFactory: The short-circuit local reads feature cannot be used because libhadoop cannot be loaded.

22/08/04 17:47:23 INFO util.ShutdownHookManager: Shutdown hook called

[2022-08-04 17:47:24.184]Container exited with a non-zero exit code 13. Error file: prelaunch.err.

Last 4096 bytes of prelaunch.err :

Last 4096 bytes of stderr :

22/08/04 17:47:22 INFO util.SignalUtils: Registering signal handler for TERM

22/08/04 17:47:22 INFO util.SignalUtils: Registering signal handler for HUP

22/08/04 17:47:22 INFO util.SignalUtils: Registering signal handler for INT

22/08/04 17:47:22 INFO spark.SecurityManager: Changing view acls to: yarn,hdfs

22/08/04 17:47:22 INFO spark.SecurityManager: Changing modify acls to: yarn,hdfs

22/08/04 17:47:22 INFO spark.SecurityManager: Changing view acls groups to:

22/08/04 17:47:22 INFO spark.SecurityManager: Changing modify acls groups to:

22/08/04 17:47:22 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(yarn, hdfs); groups with view permissions: Set(); users with modify permissions: Set(yarn, hdfs); groups with modify permissions: Set()

22/08/04 17:47:22 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

22/08/04 17:47:23 INFO yarn.ApplicationMaster: ApplicationAttemptId: appattempt_1659522163899_1043_000002

22/08/04 17:47:23 INFO yarn.ApplicationMaster: Starting the user application in a separate Thread

22/08/04 17:47:23 INFO yarn.ApplicationMaster: Waiting for spark context initialization...

22/08/04 17:47:23 ERROR yarn.ApplicationMaster: User application exited with status 1

22/08/04 17:47:23 INFO yarn.ApplicationMaster: Final app status: FAILED, exitCode: 13, (reason: User application exited with status 1)

22/08/04 17:47:23 ERROR yarn.ApplicationMaster: Uncaught exception:

org.apache.spark.SparkException: Exception thrown in awaitResult:

at org.apache.spark.util.ThreadUtils$.awaitResult(ThreadUtils.scala:301)

at org.apache.spark.deploy.yarn.ApplicationMaster.runDriver(ApplicationMaster.scala:509)

at org.apache.spark.deploy.yarn.ApplicationMaster.run(ApplicationMaster.scala:273)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$3.run(ApplicationMaster.scala:913)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$3.run(ApplicationMaster.scala:912)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1878)

at org.apache.spark.deploy.yarn.ApplicationMaster$.main(ApplicationMaster.scala:912)

at org.apache.spark.deploy.yarn.ApplicationMaster.main(ApplicationMaster.scala)

Caused by: org.apache.spark.SparkUserAppException: User application exited with 1

at org.apache.spark.deploy.PythonRunner$.main(PythonRunner.scala:103)

at org.apache.spark.deploy.PythonRunner.main(PythonRunner.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$2.run(ApplicationMaster.scala:737)

22/08/04 17:47:23 INFO yarn.ApplicationMaster: Deleting staging directory hdfs://cluster/user/hdfs/.sparkStaging/application_1659522163899_1043

22/08/04 17:47:23 WARN shortcircuit.DomainSocketFactory: The short-circuit local reads feature cannot be used because libhadoop cannot be loaded.

22/08/04 17:47:23 INFO util.ShutdownHookManager: Shutdown hook called

For more detailed output, check the application tracking page: http://wj-hdp-3:8088/cluster/app/application_1659522163899_1043 Then click on links to logs of each attempt.

. Failing the application.

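I searched everywhere, but nobody online seemed to have exactly the same problem, and the only two similar questions I found had no answers. It was maddening. The YARN UI showed no logs, and the Spark web UI did not even list the application. As a last resort I tried yarn logs -applicationId application_1659522163899_1651

Reading through that output, I found that the real failure was a module import error, even though I had clearly installed the module: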

End of LogType:stderr

***********************************************************************

Container: container_e207_1659522163899_1651_02_000001 on wj-hdp-9_45454_1659674465064

LogAggregationType: AGGREGATED

======================================================================================

LogType:stdout

LogLastModifiedTime:Fri Aug 05 12:41:05 +0800 2022

LogLength:151

LogContents:

Traceback (most recent call last):

File "pyspark_test.py", line 3, in <module>

import findspark

ModuleNotFoundError: No module named 'findspark'

End of LogType:stdout
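Aggregated logs like the excerpt above can run to thousands of lines, so it may help to pull out only the Python tracebacks together with the container (and host) that produced them. The following is a minimal sketch, not part of the original troubleshooting: the function names `fetch_yarn_logs` and `extract_tracebacks` are hypothetical, the only external command used is the `yarn logs -applicationId` call mentioned earlier, and the parser assumes the usual traceback layout with indented stack frames.

```python
import subprocess
from typing import List, Optional, Tuple


def fetch_yarn_logs(application_id: str) -> str:
    """Return the aggregated logs of one application via the `yarn logs` CLI."""
    result = subprocess.run(
        ["yarn", "logs", "-applicationId", application_id],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout


def extract_tracebacks(log_text: str) -> List[Tuple[Optional[str], str]]:
    """Return (container description, traceback text) pairs found in log_text."""
    findings = []
    container = None  # most recent "Container: ... on <host>" header seen so far
    lines = log_text.splitlines()
    i = 0
    while i < len(lines):
        line = lines[i]
        if line.startswith("Container: "):
            container = line[len("Container: "):].strip()
        elif line.startswith("Traceback (most recent call last):"):
            block = [line]
            i += 1
            # Indented lines are the stack frames of the traceback.
            while i < len(lines) and lines[i][:1] in (" ", "\t"):
                block.append(lines[i])
                i += 1
            # The first non-indented line is the exception itself.
            if i < len(lines):
                block.append(lines[i])
            findings.append((container, "\n".join(block)))
        i += 1
    return findings
```

On a machine with the YARN client installed, `extract_tracebacks(fetch_yarn_logs("application_1659522163899_1651"))` would list each traceback next to the container line it came from, which is the pairing that matters when a module is missing on only some nodes.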

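Then I noticed the "on wj-hdp-" part of the Container line in that log output, and it clicked. I was running PySpark in cluster mode, where the driver itself runs inside the ApplicationMaster container on whichever node YARN picks, so my code could end up on any server in the cluster. Installing the module only on the node I submit from is therefore useless; it has to be installed on every node. This also explains why client mode worked: there the driver runs on the submitting node, which had the module, while the other nodes did not. After installing the required modules on all nodes, the job succeeded. The key lesson is to read the logs with yarn logs; the terse error message shown above is of no help.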
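One way to check which nodes are missing a module before switching to cluster mode is to run a tiny probe job in client mode. This is a sketch under assumptions: `probe_module` and `probe_cluster` are hypothetical helper names, `num_tasks` is an arbitrary illustrative default, and the probe only reaches hosts where YARN happened to place executors, so a node with no executor for this job stays unchecked.

```python
import importlib.util
import socket
from typing import List, Tuple


def probe_module(module_name: str) -> Tuple[str, bool]:
    """Return (hostname, importable?) for the Python process running this call."""
    try:
        found = importlib.util.find_spec(module_name) is not None
    except (ImportError, ValueError):
        # Raised for a missing parent package or a module without a spec.
        found = False
    return socket.gethostname(), found


def probe_cluster(spark, module_name: str, num_tasks: int = 200) -> List[Tuple[str, bool]]:
    """Run probe_module in many small tasks and return the distinct results.

    `spark` is an existing SparkSession; only executor hosts are probed.
    """
    rdd = spark.sparkContext.parallelize(range(num_tasks), num_tasks)
    return sorted(rdd.map(lambda _: probe_module(module_name)).distinct().collect())
```

Calling `probe_cluster(spark, "findspark")` from a client-mode session returns pairs such as a hostname with `False` for every executor host where the import would fail.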

Error 2: Hive table or view not found

This one is simple: copy Hive's hive-site.xml into Spark's conf directory. Alternatively, add the settings through config in the code:

spark = SparkSession.builder.config('','').enableHiveSupport().getOrCreate()

Fill in the key and value copied from hive-site.xml. Passing hive-site.xml at submission time with --files should also work.
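As an illustration of the config approach, the sketch below reads the properties out of a hive-site.xml document and forwards selected ones to the session builder. The helper names `read_hive_site` and `build_hive_session` are hypothetical, and forwarding only `hive.metastore.uris` is an assumption; which properties a given cluster actually needs depends on its Hive setup.

```python
import xml.etree.ElementTree as ET
from typing import Dict, Iterable


def read_hive_site(xml_text) -> Dict[str, str]:
    """Parse hive-site.xml content (str or bytes) into a {name: value} dict."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name and value is not None:
            props[name.strip()] = value.strip()
    return props


def build_hive_session(props: Dict[str, str],
                       keys: Iterable[str] = ("hive.metastore.uris",)):
    """Create a Hive-enabled SparkSession, forwarding the selected properties."""
    # Imported here so the parser above can be used without Spark installed.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder
    for key in keys:
        if key in props:
            builder = builder.config(key, props[key])
    return builder.enableHiveSupport().getOrCreate()
```

A typical call would read the file in binary mode, pass its content to `read_hive_site`, and hand the resulting dict to `build_hive_session`.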

One issue remains: my --files option does not seem to take effect. I will look into it later.

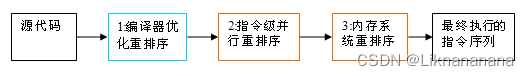

边栏推荐

猜你喜欢

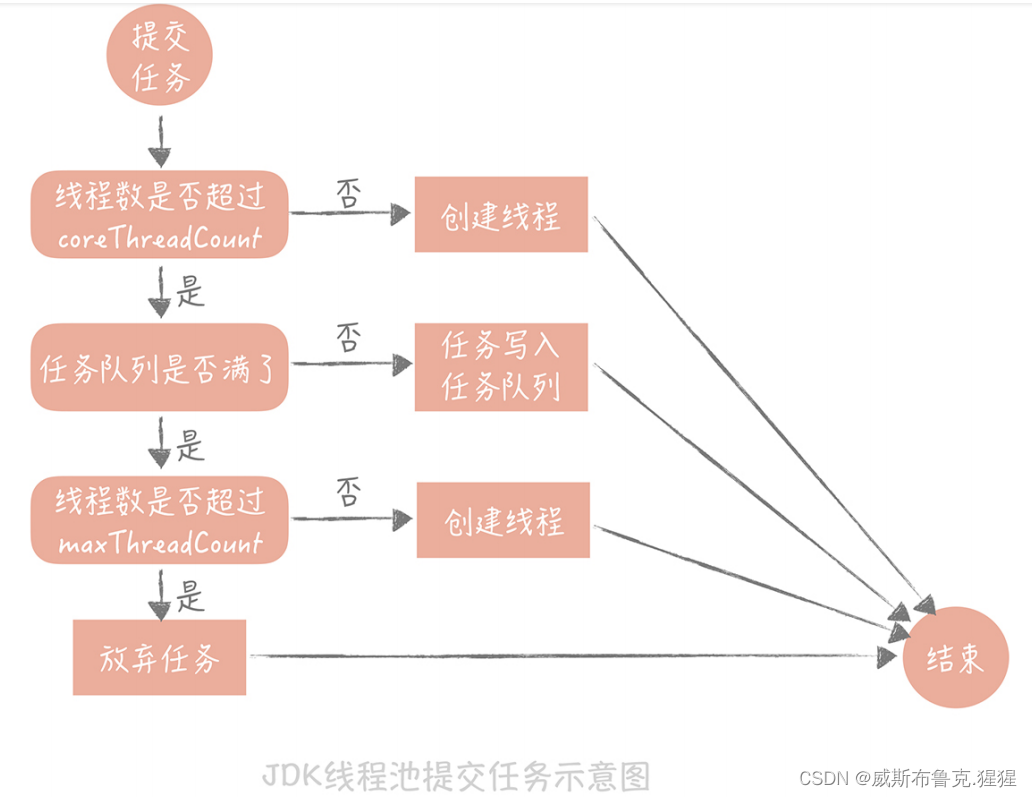

多线程面试指南

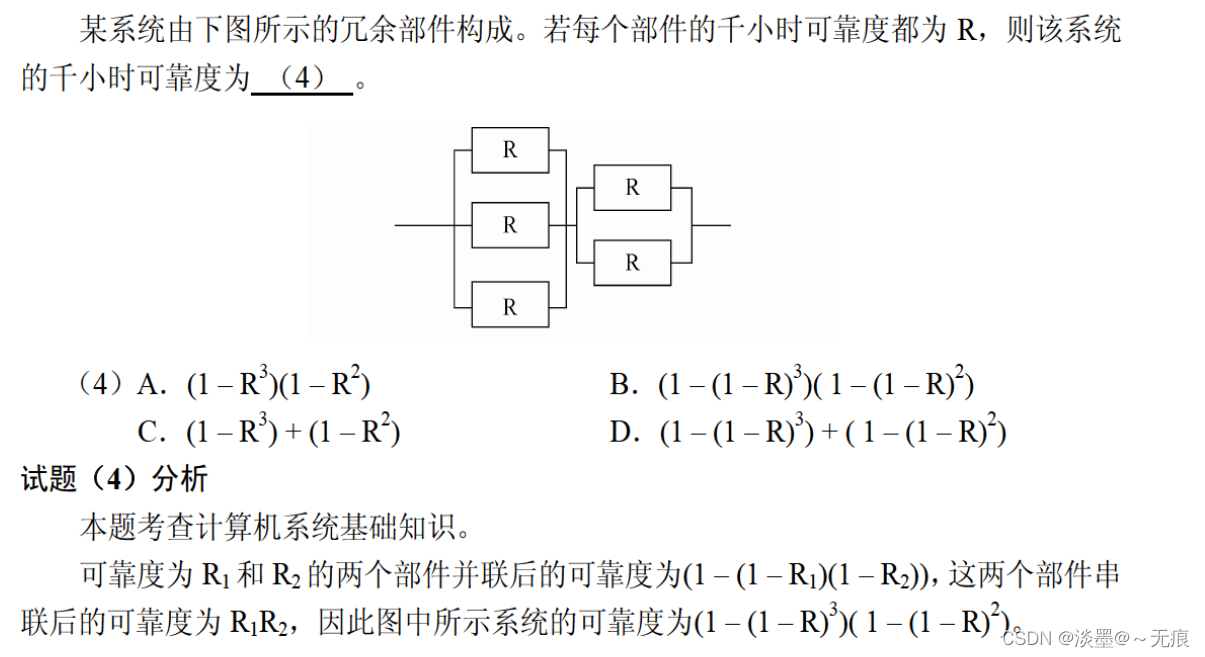

2022年软考复习笔记一

E-commerce spike project harvest (2)

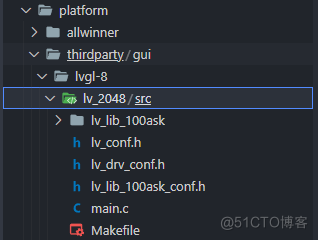

Allwinner V853 development board transplants LVGL-based 2048 games

“低代码”编程或将是软件开发的未来

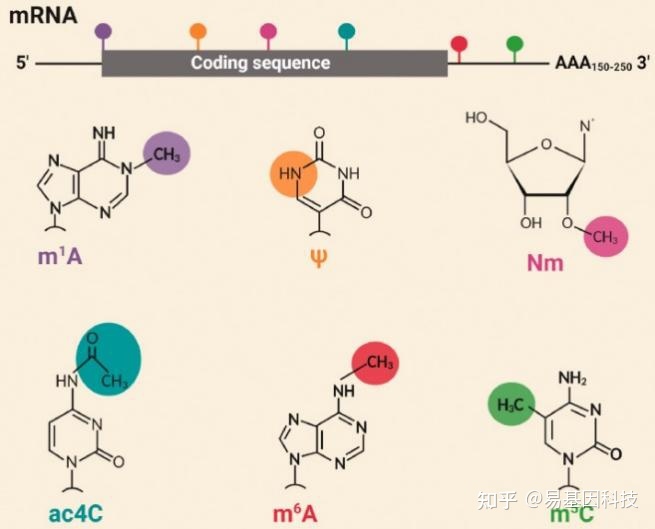

易基因|深度综述:m6A RNA甲基化在大脑发育和疾病中的表观转录调控作用

Problem solving-->Online OJ (19)

NFT digital collection development issue - digital collection platform

持续集成实战 —— Jenkins自动化测试环境搭建

为什么中国的数字是四位一进,而西方的是三位一进?

随机推荐

web安全入门-Kill Chain测试流程

Mobileye携手极氪通过OTA升级开启高级驾驶辅助新篇章

Zhaoqi Technology Innovation High-level Talent Entrepreneurship Competition Platform

为什么中国的数字是四位一进,而西方的是三位一进?

Yi Gene|In-depth review: epigenetic regulation of m6A RNA methylation in brain development and disease

全部内置函数详细认识(中篇)

华为云DevCloud获信通院首批云原生技术架构成熟度评估的最高级认证

QOS功能介绍

JS entry to proficient full version

NFT digital collection development issue - digital collection platform

metaForce佛萨奇2.0系统开发功能逻辑介绍

关于Web渗透测试需要知道的一切:完整指南

请查收 2022华为开发者大赛备赛攻略

使用 ABAP 正则表达式解析 uuid 的值

Oracle数据库备份dmp文件太大,有什么办法可以在备份的时候拆分成多个dmp吗?

$'\r': command not found

安克创新每一个“五星好评”背后,有怎样的流程管理?

Please check the preparation guide for the 2022 Huawei Developer Competition

消息称原美图高管加盟蔚来手机 顶配产品或超7000元

持续集成实战 —— Jenkins自动化测试环境搭建