[Letter from Andrew Ng] The development of reinforcement learning!

2022-08-10 15:12:00 【Zhengyi】

Dear friends,

While working on Course 3 of the Machine Learning Specialization, which covers reinforcement learning, I was reflecting on how reinforcement learning algorithms are still quite finicky. They’re very sensitive to hyperparameter choices, and someone experienced at hyperparameter tuning might get 10x or 100x better performance. Supervised deep learning was equally finicky a decade ago, but it has gradually become more robust with research progress on systematic ways to build supervised models.

Will reinforcement learning (RL) algorithms also become more robust in the next decade? I hope so. However, RL faces a unique obstacle in the difficulty of establishing real-world (non-simulation) benchmarks.

When supervised deep learning was at an earlier stage of development, experienced hyperparameter tuners could get much better results than less-experienced ones. We had to pick the neural network architecture, regularization method, learning rate, schedule for decreasing the learning rate, mini-batch size, momentum, random weight initialization method, and so on. Picking well made a huge difference in the algorithm’s convergence speed and final performance.
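The learning-rate schedule is one of the choices listed above, and even a simple schedule carries its own knobs. Below is a minimal step-decay sketch in Python; the function name `step_decay_lr` and its default values (initial rate 0.1, halved every 10 epochs) are illustrative assumptions, not settings from the letter.

```python
def step_decay_lr(
    epoch: int,
    initial_lr: float = 0.1,
    drop_factor: float = 0.5,
    epochs_per_drop: int = 10,
) -> float:
    """Step-decay schedule: multiply the learning rate by drop_factor every epochs_per_drop epochs."""
    if epoch < 0:
        raise ValueError("epoch must be non-negative")
    return initial_lr * drop_factor ** (epoch // epochs_per_drop)


# Print the learning rate at a few epochs to see where the drops happen.
for epoch in (0, 9, 10, 25):
    print(epoch, step_decay_lr(epoch))
```

Each of the three keyword arguments changes when and how sharply training slows down, which is one reason schedules like this used to require hand-tuning.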

Thanks to research progress over the past decade, we now have more robust optimization algorithms like Adam, better neural network architectures, and more systematic guidance for default choices of many other hyperparameters, making it easier to get good results. I suspect that scaling up neural networks has also made them more robust: these days, I don’t hesitate to train a 20 million-plus parameter network (like ResNet-50) even if I have only 100 training examples. In contrast, if you’re training a 1,000-parameter network on 100 examples, every parameter matters much more, so tuning needs to be done much more carefully.
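For reference, here is a minimal sketch of the Adam update rule in plain Python. The default hyperparameters (`lr=1e-3`, `beta1=0.9`, `beta2=0.999`, `eps=1e-8`) are the values proposed with Adam and commonly used as library defaults; the function names `adam_minimize` and `quadratic_grad` and the toy objective are assumptions for illustration. Dividing each step by the square root of a running average of squared gradients makes the step size roughly independent of the gradient's scale, which is one commonly cited reason the same defaults work across many problems.

```python
import math
from typing import Callable, List


def adam_minimize(
    grad: Callable[[List[float]], List[float]],
    x0: List[float],
    lr: float = 1e-3,
    beta1: float = 0.9,
    beta2: float = 0.999,
    eps: float = 1e-8,
    steps: int = 1000,
) -> List[float]:
    """Run `steps` Adam updates starting from x0 and return the final parameters."""
    x = list(x0)
    m = [0.0] * len(x)  # running mean of the gradient (first moment)
    v = [0.0] * len(x)  # running mean of the squared gradient (second moment)
    for t in range(1, steps + 1):
        g = grad(x)
        for i in range(len(x)):
            m[i] = beta1 * m[i] + (1 - beta1) * g[i]
            v[i] = beta2 * v[i] + (1 - beta2) * g[i] ** 2
            # Bias correction: m and v start at zero, so early estimates are scaled up.
            m_hat = m[i] / (1 - beta1 ** t)
            v_hat = v[i] / (1 - beta2 ** t)
            # Per-parameter step, normalized by the gradient's recent magnitude.
            x[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


def quadratic_grad(p: List[float]) -> List[float]:
    """Gradient of the toy objective f(x, y) = (x - 3)^2 + 10 * (y + 1)^2."""
    x, y = p
    return [2 * (x - 3), 20 * (y + 1)]


# lr is raised above the default only so this toy run converges in a few thousand steps.
solution = adam_minimize(quadratic_grad, [0.0, 0.0], lr=0.01, steps=5000)
print(solution)
```

The two coordinates have gradients that differ by a factor of 10 in scale, yet both are driven toward the minimum at (3, -1) by the same `lr`, without per-coordinate tuning.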

My collaborators and I have applied RL to cars, helicopters, quadrupeds, robot snakes, and many other applications. Yet today’s RL algorithms still feel finicky. Whereas poorly tuned hyperparameters in supervised deep learning might mean that your algorithm trains 3x or 10x more slowly (which is bad), in reinforcement learning, it feels like they might result in training 100x more slowly — if it converges at all! Similar to supervised learning a decade ago, numerous techniques have been developed to help RL algorithms converge (like double Q learning, soft updates, experience replay, and epsilon-greedy exploration with slowly decreasing epsilon). They’re all clever, and I commend the researchers who developed them, but many of these techniques create additional hyperparameters that seem to me very hard to tune.
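To make the point about extra hyperparameters concrete, the sketch below implements four of the techniques named above in plain Python: epsilon-greedy action selection with a linearly decaying epsilon, an experience replay buffer, a soft (Polyak-averaging) update of target weights, and the Double DQN form of the double Q-learning target, in which the online network picks the next action and the target network scores it. Everything here is an illustrative assumption: the helper names, the flat lists standing in for network weights and Q-values, and every default value (`eps_end=0.05`, `decay_steps=10_000`, `capacity=100_000`, `tau=0.005`, `gamma=0.99`). None of these values come from the letter.

```python
import random
from collections import deque
from typing import Deque, List, Tuple

# Hypothetical transition layout: (state, action, reward, next_state, done)
Transition = Tuple[int, int, float, int, bool]


def linear_epsilon(step: int, eps_start: float = 1.0, eps_end: float = 0.05,
                   decay_steps: int = 10_000) -> float:
    """Decay epsilon linearly from eps_start to eps_end over decay_steps, then hold it."""
    frac = min(step / decay_steps, 1.0)
    return eps_start + frac * (eps_end - eps_start)


def epsilon_greedy(q_values: List[float], epsilon: float, rng: random.Random) -> int:
    """With probability epsilon pick a random action, otherwise the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


class ReplayBuffer:
    """Fixed-capacity FIFO store of past transitions, sampled uniformly at random."""

    def __init__(self, capacity: int = 100_000) -> None:
        self.buffer: Deque[Transition] = deque(maxlen=capacity)  # oldest entries drop out

    def push(self, transition: Transition) -> None:
        self.buffer.append(transition)

    def sample(self, batch_size: int, rng: random.Random) -> List[Transition]:
        return rng.sample(list(self.buffer), batch_size)


def soft_update(target: List[float], online: List[float], tau: float = 0.005) -> None:
    """Move target weights a fraction tau toward the online weights, in place."""
    for i in range(len(target)):
        target[i] = tau * online[i] + (1 - tau) * target[i]


def double_q_target(reward: float, done: bool, q_online_next: List[float],
                    q_target_next: List[float], gamma: float = 0.99) -> float:
    """Double DQN target: online net selects the next action, target net evaluates it."""
    if done:
        return reward
    best = max(range(len(q_online_next)), key=lambda a: q_online_next[a])
    return reward + gamma * q_target_next[best]
```

Even this toy version exposes seven tunable values (`eps_start`, `eps_end`, `decay_steps`, `capacity`, `batch_size`, `tau`, `gamma`) on top of the learning rate and network architecture. The discount factor `gamma` is needed by any Q-learning variant, but the other six are introduced by the convergence techniques themselves.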

Further research in RL may follow the path of supervised deep learning and give us more robust algorithms and systematic guidance for how to make these choices. One thing worries me, though. In supervised learning, benchmark datasets enable the global community of researchers to tune algorithms against the same dataset and build on each other’s work. In RL, the more-commonly used benchmarks are simulated environments like OpenAI Gym. But getting an RL algorithm to work on a simulated robot is much easier than getting it to work on a physical robot.

Many algorithms that work brilliantly in simulation struggle with physical robots. Even two copies of the same robot design will be different. Further, it’s infeasible to give every aspiring RL researcher their own copy of every robot. While researchers are making rapid progress on RL for simulated robots (and for playing video games), the bridge to application in non-simulated environments is often missing. Many excellent research labs are working on physical robots. But because each robot is unique, one lab’s results can be difficult for other labs to replicate, and this impedes the rate of progress.

I don’t have a solution to these knotty issues. But I hope that all of us in AI collectively will manage to make these algorithms more robust and more widely useful.

Keep learning!

Andrew

Andrew Ng, posted 2022-08-05 10:10

边栏推荐

- 紫金示例

- E. Cross Swapping(并查集变形/好题)

- The a-modal in the antd component is set to a fixed height, and the content is scrolled and displayed

- pm2 static file service

- 基于 Azuki 系列:NFT估值分析框架“DRIC”

- 强意识 压责任 安全培训筑牢生产屏障

- 产品使用说明书小程序开发制作说明

- 使用Uiautomator2进行APP自动化测试

- Azure IoT Partner Technology Empowerment Workshop: IoT Dev Hack

- 领域驱动模型设计与微服务架构落地-从项目去剖析领域驱动

猜你喜欢

强意识 压责任 安全培训筑牢生产屏障

It is reported that the original Meitu executive joined Weilai mobile phone, the top product may exceed 7,000 yuan

线上线下课程教学培训小程序开发制作功能介绍

蓝帽杯半决赛火炬木wp

学习MySQL 临时表

PyTorch multi-machine multi-card training: DDP combat and skills

PyTorch 多机多卡训练:DDP 实战与技巧

JS 从零手写实现一个bind方法

基于 Azuki 系列:NFT估值分析框架“DRIC”

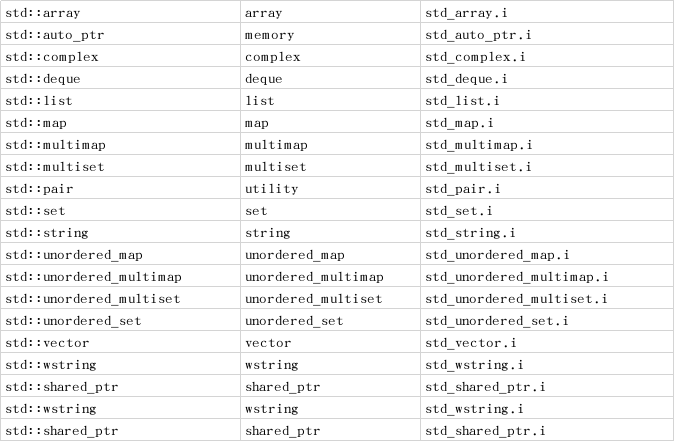

SWIG教程《一》

随机推荐

Digital Collection Platform System Development Practice

epoll学习:思考一种高性能的服务器处理框架

Flask框架——基于Celery的后台任务

2022年网络安全培训火了,缺口达95%,揭开网络安全岗位神秘面纱

Zijin Example

学习MySQL 临时表

易基因|深度综述:m6A RNA甲基化在大脑发育和疾病中的表观转录调控作用

1004 (tree array + offline operation + discretization)

"Thesis Reading" PLATO: Pre-trained Dialogue Generation Model with Discrete Latent Variable

How to code like a pro in 2022 and avoid If-Else

PAT甲级 1014 排队等候(队列大模拟+格式化时间)

网络安全(加密技术、数字签名、证书)

小程序-语音播报功能

静态变量存储在哪个区

WSL 提示音关闭

2022-08-10 Daily: Swin Transformer author Cao Yue joins Zhiyuan to carry out research on basic vision models

富爸爸穷爸爸之读书笔记

C#实现访问OPC UA服务器

字节终面:CPU 是如何读写内存的?

It is reported that the original Meitu executive joined Weilai mobile phone, the top product may exceed 7,000 yuan