Spark / Scala - Reading RcFile & OrcFile

2022-04-21 15:13:00 【BIT_ six hundred and sixty-six】

One. Introduction

The earlier post MapReduce - Read OrcFile, RcFile used Java and MapReduce to read RcFile and OrcFile files. Reading RcFile and OrcFile at the same time in MapReduce later caused a dependency conflict, which was also resolved. Day-to-day development, however, is mostly done in Spark, so this post uses Spark to read OrcFile and RcFile and to reproduce the Map-Reduce logic.

Two. Reading RcFile

The earlier MR job already showed what an RcFile record looks like. The form of the key is not critical: a LongWritable key is the line number, and a NullWritable key simply ignores the line number. What matters is the type of the value, BytesRefArrayWritable. Spark's hadoopFile API can therefore be used to read RcFile. Here are the parameters of hadoopFile:

def hadoopFile[K, V](
    path: String,
    inputFormatClass: Class[_ <: InputFormat[K, V]],
    keyClass: Class[K],
    valueClass: Class[V],
    minPartitions: Int = defaultMinPartitions): RDD[(K, V)]

keyClass and valueClass are already known, so only the inputFormatClass remains to be settled. From the MultipleInputs.addInputPath call in the MR job we know that the inputFormatClass is RCFileInputFormat. Now we can start reading:

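import org.apache.hadoop.hive.ql.io.RCFileInputFormat
import org.apache.hadoop.hive.serde2.columnar.BytesRefArrayWritable
import org.apache.hadoop.io.LongWritable
import org.apache.spark.SparkConf
import org.apache.spark.sql.SparkSession

val conf = (new SparkConf).setAppName("TestReadRcFile").setMaster("local[1]")
val spark = SparkSession
  .builder
  .config(conf)
  .getOrCreate()
val sc = spark.sparkContext

val rcFileInput = ""   // RcFile input path (left empty in the original)
val minPartitions = 100

// repeatString and LazyBinaryRCFileUtils are helpers that this post does not define.
println(repeatString("=", 30) + " Start reading RcFile " + repeatString("=", 30))

val rcFileRdd = sc.hadoopFile(
    rcFileInput,
    classOf[RCFileInputFormat[LongWritable, BytesRefArrayWritable]],
    classOf[LongWritable],
    classOf[BytesRefArrayWritable],
    minPartitions)
  .map(line => {
    // Column 0 holds the key and column 1 the value; decode both to Strings right away.
    val key = LazyBinaryRCFileUtils.readString(line._2.get(0))
    val value = LazyBinaryRCFileUtils.readString(line._2.get(1))
    (key, value)
  })

println(repeatString("=", 30) + " Finished reading RcFile " + repeatString("=", 30))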
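The RcFile snippet calls repeatString and LazyBinaryRCFileUtils.readString without showing them. Below is a minimal sketch of what such helpers might look like; it is not the author's code. The object name RcHelpers and the function decodeUtf8 are hypothetical, and the sketch assumes the column bytes are UTF-8 text. With Hive on the classpath, a BytesRefWritable named ref could then be decoded as decodeUtf8(ref.getData, ref.getStart, ref.getLength).

```scala
import java.nio.charset.StandardCharsets

object RcHelpers {
  // Hypothetical stand-in for the article's repeatString helper:
  // returns s concatenated n times.
  def repeatString(s: String, n: Int): String = s * n

  // Decodes the slice [start, start + length) of a byte buffer as UTF-8 text.
  // Assumption: the RcFile column stores plain UTF-8 bytes.
  def decodeUtf8(bytes: Array[Byte], start: Int, length: Int): String =
    new String(bytes, start, length, StandardCharsets.UTF_8)
}
```

Hadoop input formats reuse the same Writable objects across records, so converting each column to a String inside map, as the article's code does, avoids keeping references to buffers that will be overwritten.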

Three. Reading OrcFile

Unlike RcFile, which has to go through hadoopFile, OrcFile can be read directly through an API that SparkSession provides, which makes reading OrcFile in Spark quite smooth. Note that reading ORC returns a Dataset, which must be converted to a regular Spark RDD with .rdd.

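import org.apache.spark.SparkConf
import org.apache.spark.sql.SparkSession

val conf = (new SparkConf).setAppName("TestReadRcFile").setMaster("local[1]")
val spark = SparkSession
  .builder
  .config(conf)
  .getOrCreate()

println(repeatString("=", 30) + " Start reading OrcFile " + repeatString("=", 30))

// Brings in the encoders that Dataset.map needs.
import spark.implicits._

val orcInput = ""   // OrcFile input path (left empty in the original)

val orcFileRdd = spark.read.orc(orcInput).map(row => {
  val key = row.getString(0)
  val value = row.getString(1)
  (key, value)
}).rdd   // Dataset[(String, String)] -> RDD[(String, String)]

println(repeatString("=", 30) + " Finished reading OrcFile " + repeatString("=", 30))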
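To try the ORC read path without an existing file, a small round trip can help. The sketch below writes two string columns to a temporary directory and reads them back as (key, value) pairs. The object name OrcRoundTrip, the column names, and the scratch path are all assumptions made for illustration, and the sketch assumes a Spark version with built-in ORC support on the classpath.

```scala
import java.nio.file.Files
import org.apache.spark.rdd.RDD
import org.apache.spark.sql.SparkSession

object OrcRoundTrip {
  // Reads the first two string columns of an ORC dataset as (key, value) pairs.
  def readPairs(spark: SparkSession, path: String): RDD[(String, String)] = {
    import spark.implicits._
    spark.read.orc(path)
      .map(row => (row.getString(0), row.getString(1)))
      .rdd
  }

  // Writes a tiny sample dataset to a temp directory and reads it back.
  def run(): Set[(String, String)] = {
    val spark = SparkSession.builder
      .appName("OrcRoundTrip")
      .master("local[1]")
      .getOrCreate()
    import spark.implicits._
    try {
      // Hypothetical scratch path; Spark creates the "data" directory itself.
      val path = Files.createTempDirectory("orc-demo").resolve("data").toString
      Seq(("k1", "a"), ("k2", "b")).toDF("key", "value").write.orc(path)
      readPairs(spark, path).collect().toSet
    } finally {
      spark.stop()
    }
  }
}
```

Note that row.getString returns null for a null column value, so real data may need a null check before the pair is built.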

Four. Implementing Map-Reduce with Spark

The two pair RDDs above, rcFileRdd and orcFileRdd, can be regarded as the outputs of two Mappers. The reduce step follows: union merges the pair RDDs, groupByKey then aggregates the values of each key, and the reduce logic is finally applied to each key's values.
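As a self-contained illustration of this pattern, the sketch below runs union and groupByKey on two small in-memory RDDs that stand in for rcFileRdd and orcFileRdd. The object name UnionGroupDemo, the sample data, and the reduce function (sort and join the values) are assumptions chosen only to make the flow visible.

```scala
import org.apache.spark.rdd.RDD
import org.apache.spark.sql.SparkSession

object UnionGroupDemo {
  // Example reduce function (an assumption): sort and join each key's values.
  def reduceValues(key: String, values: Iterable[String]): (String, String) =
    (key, values.toSeq.sorted.mkString(","))

  // Merges two pair RDDs, groups by key, and applies the reduce function per key.
  def mergeAndReduce(a: RDD[(String, String)],
                     b: RDD[(String, String)]): RDD[(String, String)] =
    a.union(b).groupByKey().map { case (k, vs) => reduceValues(k, vs) }

  def run(): Map[String, String] = {
    val spark = SparkSession.builder
      .appName("UnionGroupDemo")
      .master("local[1]")
      .getOrCreate()
    val sc = spark.sparkContext
    try {
      // Stand-ins for the RDDs produced by the RcFile and OrcFile readers.
      val fromRc = sc.parallelize(Seq(("k1", "a"), ("k2", "b")))
      val fromOrc = sc.parallelize(Seq(("k1", "c")))
      mergeAndReduce(fromRc, fromOrc).collect().toMap
    } finally {
      spark.stop()
    }
  }
}
```

groupByKey mirrors an MR reducer most closely because it hands over every value of a key as an Iterable. When the reduce logic is associative, reduceByKey or aggregateByKey is usually a better fit, since it combines values before the shuffle instead of materializing them all per key.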

// Merge both RDDs, group by key, then reduce each key's values.
rcFileRdd.union(orcFileRdd).groupByKey().map(info => {
  val key: String = info._1
  val values: Iterable[String] = info._2
  ... reduce func ...
})

Five. Summary

Compared with reading RcFile and OrcFile in MR, Spark's API is considerably simpler. If you need this, give it a try; it works very nicely ~

Copyright notice

This article was written by [BIT_ six hundred and sixty-six]. Please include a link to the original when reposting. Thanks.

https://yzsam.com/2022/04/202204211507231856.html

边栏推荐

- The conversion between RDD and dataframe in pyspark is realized by RDD processing dataframe: data segmentation and other functions

- 亚马逊测评自养号,卖家想要获得review应该怎么做?

- [binary search - simple] 69 Square root of X

- ThreadLocal应用及原理解析

- Mysql8. Method for resetting initial password above 0

- 架构实战毕业总结

- [binary search - simple] 374 Guess the size of the number

- SQL性能优化以及性能测试

- ENSP三层交换机连接二层交换机及路由器的做法

- 应用要在AppStore上线,需要满足什么条件?

猜你喜欢

让阿里P8都为之着迷的分布式核心原理解析到底讲了啥?看完我惊了

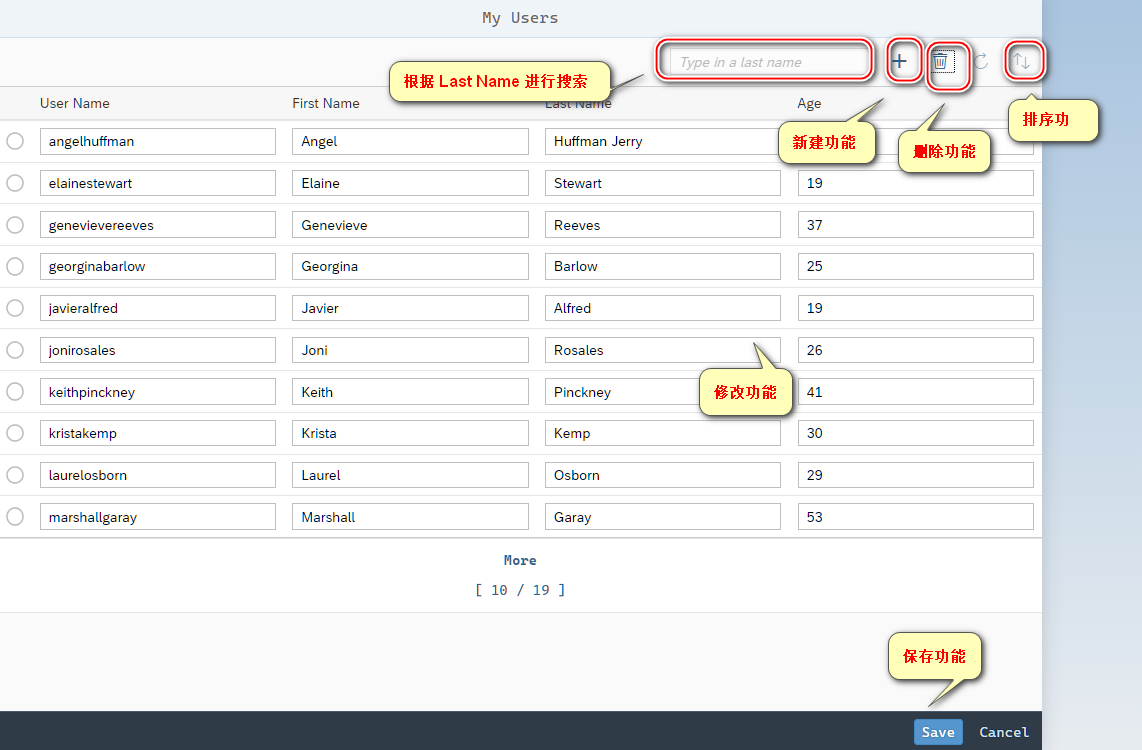

SAP UI5 应用开发教程之七十 - 如何使用按钮控件触发页面路由跳转试读版

Mysql 执行流程

突然掉电,为啥MySQL也不会丢失数据?(收藏)

Storage system and memory

SAP UI5 应用开发教程之七十 - 如何使用按钮控件触发页面路由跳转

![[JS] urlsearchparams object (upload parameters to URL in the form of object)](/img/e1/5ff277e990f9aa61072c07142febca.png)

[JS] urlsearchparams object (upload parameters to URL in the form of object)

ENSP三层交换机连接二层交换机及路由器的做法

【C语言进阶】自定义类型:结构体,位段,枚举,联合

Mysql8.0以上重置初始密码的方法

随机推荐

Software testing (III) p51-p104 software test case methods and defects

MySQL 8.0.11安装教程(Windows版)

如何选择合适的 Neo4j 版本(2022)

Technology sharing | black box testing methodology - boundary value

Excel小技巧-自动填充相邻单元格内容

干货 | 应用打包还是测试团队老大难问题?

融云首席科学家任杰:互联网兵无常势,但总有人正年轻

技术分享 | 黑盒测试方法论—边界值

In the digital age, how can SaaS software become a light cavalry replaced by localization?

Libmysql.com in vs2019 Lib garbled code

lightGBM专题2:基于pyspark在spark平台下lightgbm训练详解

[binary search - simple] sword finger offer II 068 Find insertion location

Architecture practical graduation summary

Manually adjust slf4j the log level

你真的会用`timescale吗?

lightGBM专题5:pyspark表数据处理之数据合并

登录重构小记

【二分搜索-简单】LCP 28. 采购方案

[JS] urlsearchparams object (upload parameters to URL in the form of object)

数字化时代,企业运维面临现状及挑战分析解读