当前位置:网站首页>Jupyter notebook crawling web pages

Jupyter notebook crawling web pages

2022-04-23 05:08:00 【FOWng_ lp】

urllib Send a request

Take Baidu for example

from urllib import request

url = "https://www.baidu.com" # Get a response

res = request.urlopen(url)

print(res.info())# Response head

print(res.getcode())# Status code 2xx( normal ) 3xx( forward )4xx(404) 5xx( Server internal error )

print(res.geturl())# Return response address

utf-8

html = res.read()

html = html.decode("utf-8")

print(html)

The crawler ( Take popular reviews for example )

add to header Information

url = "https://www.dianping.com" # Get a response

header={

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.163 Safari/537.36"

}

req = request.Request(url,headers=header)

res = request.urlopen(req)

print(res.info())# Response head

print(res.getcode())# Status code 2xx( normal ) 3xx( forward ) 4xx(404) 5xx( Server internal error )

print(res.geturl())# Return response address

Used request

request Send a request

import requests

url = "https://www.baidu.com"

res = requests.get(url)

print(res.encoding)

print(res.headers) # If there is no 'Content-Type' ,encoding = utf-8 Yes Content-Type Words , If set charset, So charset Subject to , There is no setting ISO-8859-1

print(res.url)

Running results

ISO-8859-1

{'Cache-Control': 'private, no-cache, no-store, proxy-revalidate, no-transform', 'Connection': 'keep-alive', 'Content-Encoding': 'gzip', 'Content-Type': 'text/html', 'Date': 'Sun, 05 Apr 2020 05:50:23 GMT', 'Last-Modified': 'Mon, 23 Jan 2017 13:24:33 GMT', 'Pragma': 'no-cache', 'Server': 'bfe/1.0.8.18', 'Set-Cookie': 'BDORZ=27315; max-age=86400; domain=.baidu.com; path=/', 'Transfer-Encoding': 'chunked'}

https://www.baidu.com/

res.encoding = "utf-8"

print(res.text)

Running results

The crawler ( Take popular reviews for example )

add to header Information

import requests

url = "https://www.dianping.com"

header = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.163 Safari/537.36"

}

res = requests.get(url,headers=header)

print(res.encoding)

print(res.headers) # If there is no 'Content-Type' ,encoding = utf-8 Yes Content-Type Words , If set charset, So charset Subject to , There is no setting ISO-8859-1

print(res.url)

print(res.status_code)

print(res.text)

BuautifulSoup4 Parsing content

Sichuan health commission as an example

from bs4 import BeautifulSoup

import requests

url = 'http://wsjkw.sc.gov.cn/scwsjkw/gzbd/fyzt.shtml'

res = requests.get(url)

res.encoding = 'utf-8'

html = res.text

soup = BeautifulSoup(html)# Wrapper class

soup.find('h2').text

a = soup.find('a')

print(a)

print(a.attrs)

print(a.attrs['href'])

Running results

<a href="/scwsjkw/gzbd01/2020/4/6/2d06e73d4ee14597bb375ece4b6f02ac.shtml" target="_blank"><img alt=" The latest situation of New Coronavirus pneumonia in Hunan Province (4 month ..." src="/scwsjkw/gzbd01/2020/4/6/2d06e73d4ee14597bb375ece4b6f02ac/images/b1bc5f23725045d7940a854fbe2d70a9.jpg

"/></a>

{'target': '_blank', 'href': '/scwsjkw/gzbd01/2020/4/6/2d06e73d4ee14597bb375ece4b6f02ac.shtml'}

/scwsjkw/gzbd01/2020/4/6/2d06e73d4ee14597bb375ece4b6f02ac.shtml

url_new = "http://wsjkw.sc.gov.cn" + a.attrs['href'] # Use the public part of the website to add href Assemble a new one url Address

# In this way, you can get all the tags inside

#url_new# Here is the latest address

res = requests.get(url_new)

res.encoding = 'utf-8'

soup = BeautifulSoup(res.text)

contest = soup.find('p')

print(contest)

Running results

re Parsing content (Regular Expression)

python Built in regular expression module

re.search(regex,str)

1. stay str Find a string that meets the criteria in , No match returns None

2. The returned results can be grouped , You can add parentheses to the string to separate data

groups()

group(index) Return grouped content

import re

text = contest.text

#print(text)

patten = " newly added (\d+) Of the confirmed cases "

res = re.search(patten,text)

print(res)

Running results

Supplement to regular expressions

Crawling Tencent data

How to handle the interface of Tencent historical data list

Please use the latest interface address

版权声明

本文为[FOWng_ lp]所创,转载请带上原文链接,感谢

https://yzsam.com/2022/04/202204220549345999.html

边栏推荐

- 【数据库】MySQL多表查询(一)

- 2022/4/22

- 2022/4/22

- 《2021多多阅读报告》发布,95后、00后图书消费潜力攀升

- Details related to fingerprint payment

- 负载均衡简介

- In aggregated query without group by, expression 1 of select list contains nonaggregated column

- PHP 统计指定文件夹下文件的数量

- Progress of innovation training (IV)

- Transaction isolation level of MySQL transactions

猜你喜欢

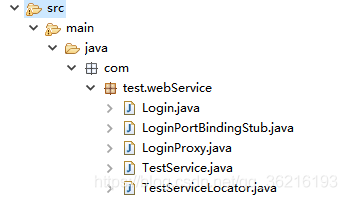

The WebService interface writes and publishes calls to the WebService interface (2)

Download PDF from HowNet (I don't want to use CAJViewer anymore!!!)

《2021多多阅读报告》发布,95后、00后图书消费潜力攀升

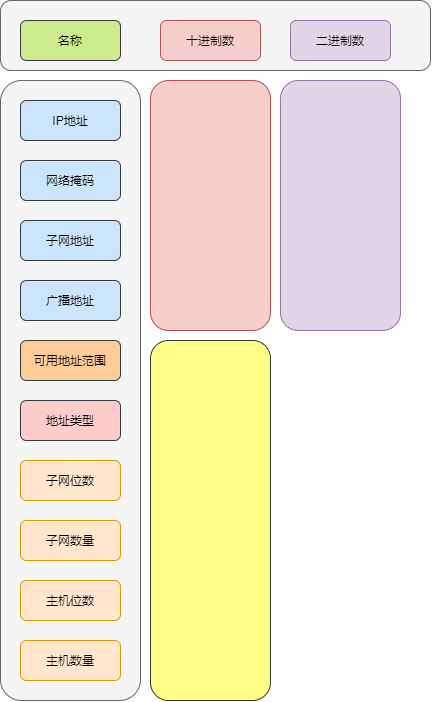

DIY is an excel version of subnet calculator

Field injection is not recommended using @ Autowired

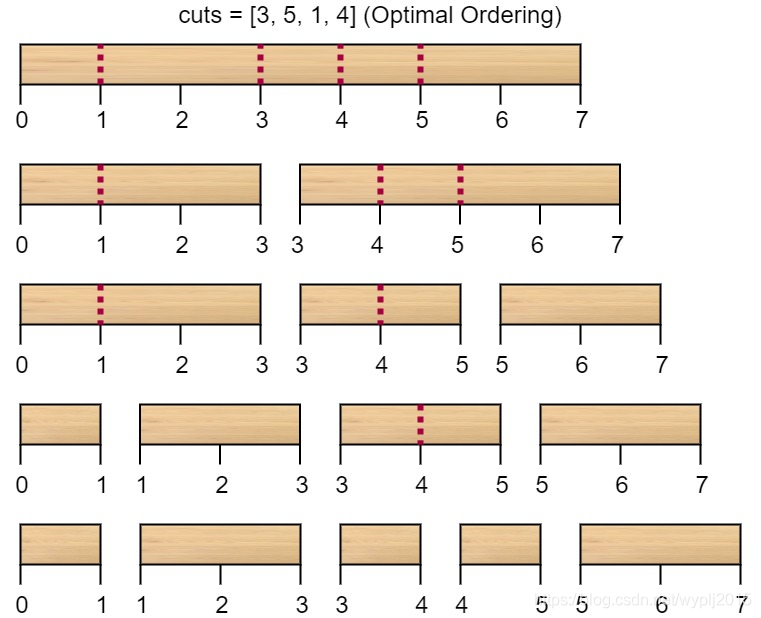

Leetcode 1547: minimum cost of cutting sticks

Basic theory of Flink

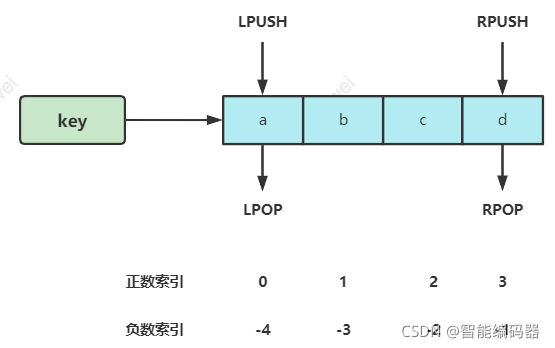

Redis data type usage scenario

![[WinUI3]编写一个仿Explorer文件管理器](/img/3e/62794f1939da7f36f7a4e9dbf0aa7a.png)

[WinUI3]编写一个仿Explorer文件管理器

What are the redis data types

随机推荐

Field injection is not recommended using @ Autowired

The 2021 more reading report was released, and the book consumption potential of post-95 and Post-00 rose

Acid of MySQL transaction

C list field sorting contains numbers and characters

[database] MySQL basic operation (basic operation ~)

MySQL realizes row to column SQL

In aggregated query without group by, expression 1 of select list contains nonaggregated column

独立站运营 | FaceBook营销神器——聊天机器人ManyChat

Cross border e-commerce | Facebook and instagram: which social media is more suitable for you?

How does PostgreSQL parse URLs

Details related to fingerprint payment

Learning Android from scratch -- Introduction

Unity C e-learning (IV)

Detailed explanation of the differences between TCP and UDP

Wine (COM) - basic concept

What are the redis data types

Golang select priority execution

Mac enters MySQL terminal command

Progress of innovation training (III)

Use model load_ state_ Attributeerror appears when dict(): 'STR' object has no attribute 'copy‘