当前位置:网站首页>深度学习之-01

深度学习之-01

2022-08-10 04:22:00 【kyccom】

深度学习之-01

深度学习近来火爆, 正好C站也有相关活动,参与了大神K同学的【21天学习挑战赛】K同学啊 邀你参加深度学习研讨班,趁下班时间练练手,把fashion服装数据集跟着博主抄了一下作业。

下载数据

import tensorflow as tf

from tensorflow.keras import datasets, layers, models

import matplotlib.pyplot as plt

(train_images, train_labels), (test_images, test_labels) = datasets.fashion_mnist.load_data()

数据下载好后, 来看一下数据集的形状:

train_images.shape, train_labels.shape, test_images.shape, test_labels.shape

结果如下:

((60000, 28, 28), (60000,), (10000, 28, 28), (10000,))

目前的像素点值在0~255之间,下面将数据进行简单归一化

train_images, test_images = train_images/255.0, test_images/255.0

归一化后的像素点的数据值将在0~1之间, 适合做学度运算

下面给数据增加维度,以适合涨量运算

train_images = train_images.reshape((60000, 28, 28, 1))

test_images = test_images.reshape((10000, 28, 28, 1))

train_images.shape, train_labels.shape, test_images.shape, test_labels.shape

增加维芳后的数据形状如下:

((60000, 28, 28), (60000,), (10000, 28, 28), (10000,))

数据集中对应的服装名称如下表

class_names = [‘T_shirt/top’, ‘Trouser’, ‘Pullover’, ‘Dress’, ‘Coat’, ‘Sandal’, ‘Shirt’, ‘Sneaker’, ‘Bag’, ‘Ankle book’]

下面利用此表,结合matplotlit,打印一些图片出来

plt.figure(figsize=(20, 10))

for i in range(20):

plt.subplot(5, 10, i+1)

plt.xticks([])

plt.yticks([])

plt.grid(True)

plt.imshow(train_images[i], cmap=plt.cm.binary)

plt.xlabel(calass_names[train_labels[i]])

plt.show()

建议模型

model = models.Sequential([

layers.Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)),

layers.MaxPool2D((2, 2)),

layers.Conv2D(64, (3, 3), activation='relu'),

layers.MaxPool2D((2, 2)),

layers.Conv2D(64, (3, 3), activation='relu'),

layers.Flatten(),

layers.Dense(64, activation='relu'),

layers.Dense(10)

])

model.summary()

模型信息如下:

Model: “sequential”

Layer (type) Output Shape Param #

conv2d (Conv2D) (None, 26, 26, 32) 320

max_pooling2d (MaxPooling2D (None, 13, 13, 32) 0

)

conv2d_1 (Conv2D) (None, 11, 11, 64) 18496

max_pooling2d_1 (MaxPooling (None, 5, 5, 64) 0

2D)

conv2d_2 (Conv2D) (None, 3, 3, 64) 36928

flatten (Flatten) (None, 576) 0

dense (Dense) (None, 64) 36928

dense_1 (Dense) (None, 10) 650

=================================================================

Total params: 93,322

Trainable params: 93,322

Non-trainable params: 0

编译模型,设定超参数

model.compile(optimizer='adam',

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

metrics=['accuracy'])

到些为止,模型与数据已准备好了,下面用前面准备好的训练数据,对模型进行训练:

history = model.fit(train_images, train_labels, epochs=10,

validation_data=(test_images, test_labels))

经过10轮训练后,模型的精度达到90.6%

Epoch 1/10

1875/1875 [] - 79s 42ms/step - loss: 0.5033 - accuracy: 0.8181 - val_loss: 0.3700 - val_accuracy: 0.8642

Epoch 2/10

1875/1875 [] - 84s 45ms/step - loss: 0.3234 - accuracy: 0.8823 - val_loss: 0.3330 - val_accuracy: 0.8831

Epoch 3/10

1875/1875 [] - 89s 48ms/step - loss: 0.2747 - accuracy: 0.9002 - val_loss: 0.2929 - val_accuracy: 0.8951

Epoch 4/10

1875/1875 [] - 96s 51ms/step - loss: 0.2432 - accuracy: 0.9101 - val_loss: 0.2674 - val_accuracy: 0.9014

Epoch 5/10

1875/1875 [] - 93s 50ms/step - loss: 0.2184 - accuracy: 0.9189 - val_loss: 0.2945 - val_accuracy: 0.8900

Epoch 6/10

1875/1875 [] - 93s 50ms/step - loss: 0.1997 - accuracy: 0.9258 - val_loss: 0.2577 - val_accuracy: 0.9090

Epoch 7/10

1875/1875 [] - 95s 51ms/step - loss: 0.1791 - accuracy: 0.9330 - val_loss: 0.2621 - val_accuracy: 0.9064

Epoch 8/10

1875/1875 [] - 89s 47ms/step - loss: 0.1666 - accuracy: 0.9371 - val_loss: 0.2994 - val_accuracy: 0.9002

Epoch 9/10

1875/1875 [] - 78s 41ms/step - loss: 0.1518 - accuracy: 0.9434 - val_loss: 0.2773 - val_accuracy: 0.9046

Epoch 10/10

1875/1875 [] - 78s 42ms/step - loss: 0.1411 - accuracy: 0.9476 - val_loss: 0.2913 - val_accuracy: 0.9065

可视化

将训练数据与测试数据的准确率放在同一图中进行对比

plt.plot(history.history['accuracy'], label='accuracy')

plt.plot(history.history['val_accuracy'], label='val_accuracy')

plt.xlabel('Epoch')

plt.ylabel('Accuracy')

plt.ylim([0.5, 1])

plt.legend(loc='lower right')

plt.show()

从图上可以看出, 蓝色的训练数据的精度随着训练次数的增加,精度在不断提升。而黄色线代表的测数数据的精度比较平稳,说明此模型比较好。

边栏推荐

- webrtc学习--websocket服务器(二) (web端播放h264)

- 基于 EasyCV 复现 DETR 和 DAB-DETR,Object Query 的正确打开方式

- 7、Chrome浏览器在Citrix虚拟应用会话中没有声音

- 域名DNS解析工具ping/nslookup/dig/host

- 若依系统前后台漏洞大全

- 2022年P气瓶充装操作证考试题库及模拟考试

- 【Mindspore】【310推理】导入mindir文件出错

- RK3568处理器体验小记

- 【OpenCV图像处理5】图像的变换

- What is the relationship between legal representative and shareholders?

猜你喜欢

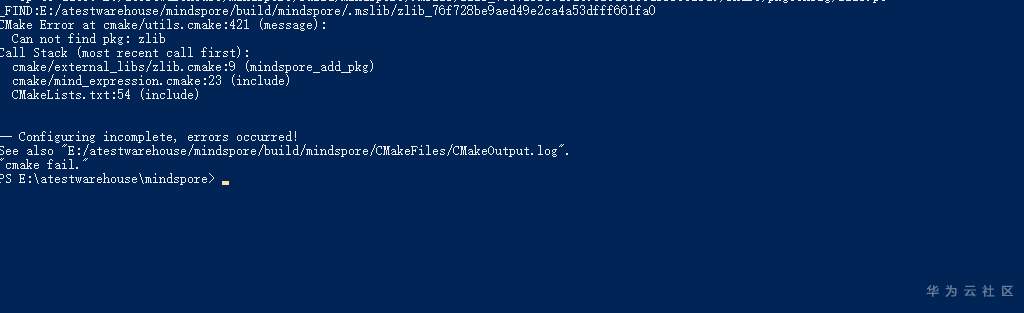

mindspore安装过程中报错cannot find zlib

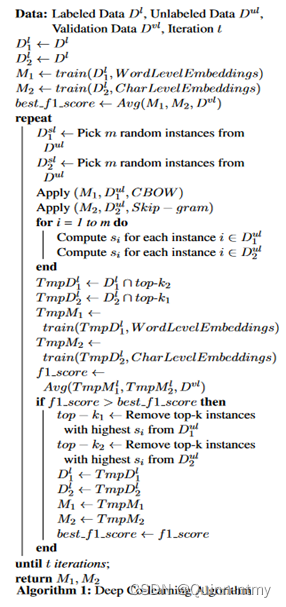

虚假新闻检测论文阅读(八):Assessing Arabic Weblog Credibility via Deep Co-learning

微信公众号开发

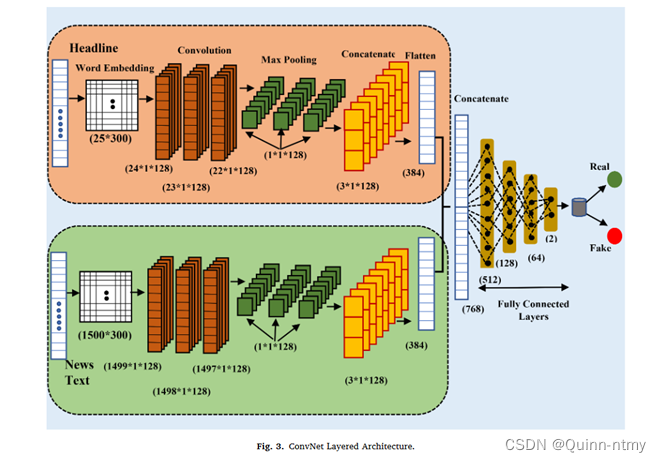

虚假新闻检测论文阅读(七):A temporal ensembling based semi-supervised ConvNet for the detection of fake news

网络层与数据链路层

2022G3 Boiler Water Treatment Exam Mock 100 Questions and Mock Exam

数据切片问题

openvino 安装(01)

2022年电工(初级)国家题库及模拟考试

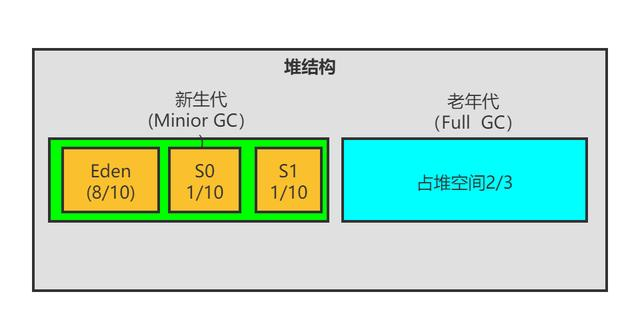

JVM内存模型

随机推荐

ZZULIOJ:1026: 字符类型判断

requests库

什么是SRM?有什么作用?在企业管理中能实现哪些功能?

2022年危险化学品经营单位主要负责人题库及模拟考试

3、ROS工作空间的创建

Acwing 59. 把数字翻译成字符串 计数类DP

【u-boot】u-boot驱动模型分析(02)

What is the relationship between legal representative and shareholders?

多元函数的3D可视化,终于被我总结出来了,数学真是太美了

ZZULIOJ:1029: 三角形判定

基于 EasyCV 复现 DETR 和 DAB-DETR,Object Query 的正确打开方式

MySQL事务的保证机制

留言板

ZZULIOJ:1018: 奇数偶数

【OpenCV图像处理4】算术与位运算

[crit] 23856#0: *101796511 stat()

第九章、类的生命周期

874. 筛法求欧拉函数

【Mindspore】【310推理】导入mindir文件出错

torch.nn.CrossEntropyLoss()对应的MindSpore算子是哪个?